Data Observability: A New Frontier for Data Reliability in 2024

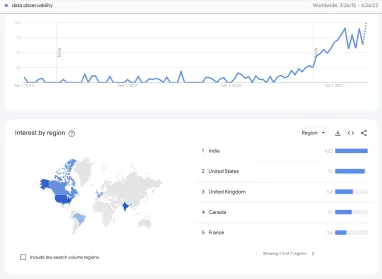

Figure 1. Interest in data observability.1

In today’s data-driven world, data observability has emerged as a critical strategy for ensuring data reliability. As organizations rely more heavily on data for decision-making and analysis, data quality and accuracy matter more than ever. Data observability is the practice of measuring and monitoring data systems to ensure their dependability, integrity, and accuracy.

According to Google Trends, interest in data observability has grown since mid-2021 (Figure 1). Because the topic is relatively new, many business leaders are still unfamiliar with it. Data observability can help business leaders make better-informed decisions based on accurate, reliable, and high-quality data, which can lead to improved business outcomes and a competitive advantage. To help business leaders gain that advantage, this article explains data observability, including its:

- Importance

- Use cases

- Benefits

- Best practices

What is data observability?

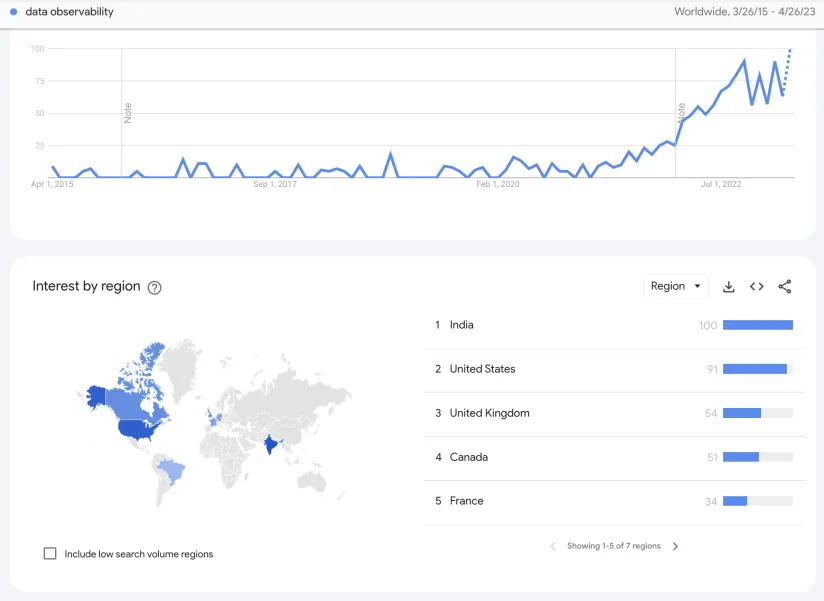

Figure 2. Data observability components.2

Data observability is the practice of continuously measuring, monitoring, and analyzing data systems to ensure their:

- Reliability

- Integrity

- Accuracy

Data observability encompasses the ability to:

- Trace data flow

- Understand data lineage

- Track data quality metrics throughout the data pipeline.

Data observability is an essential aspect of modern data management because it can:

- Provide a comprehensive view of the data ecosystem

- Enable organizations to proactively address data quality issues.

5 Pillars of Data Observability

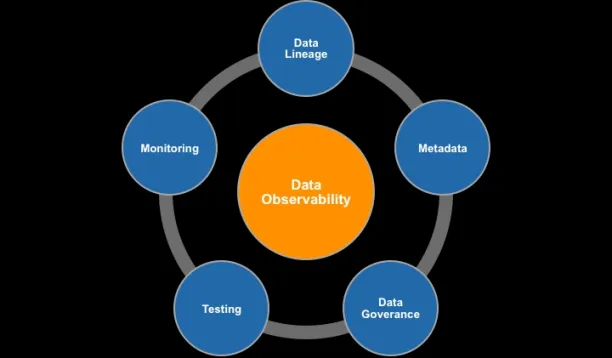

Figure 3. 5 pillars of data observability.3

Data observability can be crucial for maintaining reliable, high-quality data systems. Five key pillars contribute to this goal:

- Distribution: Analyzing data patterns and value distributions to identify anomalies and maintain data quality.

- Freshness: Ensuring that data is regularly updated and accessible so that insights stay timely and relevant.

- Schema: Monitoring schema consistency and structure to prevent data integrity issues and maintain a robust data model.

- Lineage: Tracking where data comes from and how it moves between systems to improve traceability and simplify debugging of data-related issues.

- Volume: Tracking data volumes and managing scale and storage to optimize performance and resource usage when handling large amounts of information.

Note that the order of the pillars above is only a rough sequence, running from data collection (distribution) to system performance (volume). The relative importance of each pillar varies with the requirements of a particular project or data system.
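To make the freshness and schema pillars more concrete, here is a minimal sketch of two checks an observability job might run against a table's metadata. It assumes you can already read a table's last-update timestamp and its column types; the `orders` table, `EXPECTED_SCHEMA`, and the function names are hypothetical illustrations, not any specific tool's API.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical expected schema for an "orders" table: column name -> type name.
EXPECTED_SCHEMA = {"order_id": "int", "amount": "float", "created_at": "timestamp"}


def is_fresh(last_updated: datetime, max_age: timedelta, now: datetime) -> bool:
    """Freshness: True if the table was updated within the allowed window."""
    return now - last_updated <= max_age


def schema_drift(actual: dict[str, str], expected: dict[str, str]) -> list[str]:
    """Schema: list missing, unexpected, or retyped columns (empty list = no drift)."""
    issues = [f"missing column: {c}" for c in expected.keys() - actual.keys()]
    issues += [f"unexpected column: {c}" for c in actual.keys() - expected.keys()]
    issues += [
        f"type change on {c}: {expected[c]} -> {actual[c]}"
        for c in expected.keys() & actual.keys()
        if expected[c] != actual[c]
    ]
    return sorted(issues)  # sorted so the report order is stable


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
print(is_fresh(datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc), timedelta(hours=12), now))  # True
observed = {"order_id": "int", "amount": "str", "created_at": "timestamp", "coupon": "str"}
print(schema_drift(observed, EXPECTED_SCHEMA))
# ['type change on amount: float -> str', 'unexpected column: coupon']
```

In practice the allowed age and expected schema would come from each dataset's owners; the checks themselves stay this simple.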

Top 5 data observability use cases

Figure 4. DataOps diagram.4

The right data observability tool or data platform depends on your business requirements. To help you match tools to your needs, here are five common data observability use cases.

1. Anomaly detection

Using data observability, organizations can detect:

- Anomalies

- Inconsistencies

- Errors in their data systems.

By continuously monitoring data quality metrics and implementing automated processes, organizations can identify potential issues before they escalate. This can lower the risk of inaccurate analyses and decision-making.
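One common way to automate this kind of monitoring is a simple statistical test on a metric's history. The sketch below uses a z-score (how many standard deviations the latest value sits from the historical mean) on daily row counts; the threshold of 3 and the sample numbers are assumptions for illustration, and production tools often use more robust methods that account for seasonality.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than z_threshold standard deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly flat history is unusual
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily row counts for a table over the past week.
daily_rows = [10_120, 9_980, 10_050, 10_200, 9_900, 10_075, 10_010]
print(is_anomalous(daily_rows, 10_100))  # False: within normal variation
print(is_anomalous(daily_rows, 4_300))   # True: possibly a partial or failed load
```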

2. Data pipeline optimization

Data operations (DataOps) methodologies, in conjunction with data observability, can assist organizations in identifying:

- Bottlenecks

- Inefficiencies

- Areas for improvement in data pipelines.

As a result, organizations can optimize data processing, increase operational efficiency, and manage their data warehouses in a more agile way.
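Finding bottlenecks usually starts with measuring how long each pipeline stage takes. The minimal sketch below times each stage of a toy pipeline and then flags any stage that consumes more than half of the total runtime; the stage names, the 50% cutoff, and the sample durations are hypothetical.

```python
import time
from typing import Callable


def run_with_timings(
    stages: list[tuple[str, Callable[[list], list]]], data: list
) -> tuple[list, dict[str, float]]:
    """Run pipeline stages in order and record wall-clock seconds per stage."""
    timings: dict[str, float] = {}
    for name, stage in stages:
        start = time.perf_counter()
        data = stage(data)
        timings[name] = time.perf_counter() - start
    return data, timings


def bottlenecks(timings: dict[str, float], share: float = 0.5) -> list[str]:
    """Return stages that account for more than `share` of total runtime."""
    total = sum(timings.values())
    if total == 0:
        return []
    return [name for name, seconds in timings.items() if seconds / total > share]


# Toy stages; in practice these would be your extract/transform/load steps.
stages = [
    ("extract", lambda rows: rows),
    ("transform", lambda rows: [r * 2 for r in rows]),
    ("load", lambda rows: rows),
]
result, measured = run_with_timings(stages, list(range(5)))
print(result)          # [0, 2, 4, 6, 8]
print(list(measured))  # ['extract', 'transform', 'load']

# Durations averaged over many runs (hypothetical numbers, in seconds):
print(bottlenecks({"extract": 12.0, "transform": 95.0, "load": 18.0}))  # ['transform']
```

Single-run timings are noisy, so a real setup would collect them across many runs before deciding where to optimize.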

3. Data governance

Data observability, when combined with DataOps practices, can help data governance initiatives by providing transparency into data lineage and metadata. This can:

- Enable better control over data assets

- Ensure that data policies and standards are consistently followed through automation and collaboration

4. Regulatory compliance

Together, data observability and DataOps can help organizations meet regulatory requirements by ensuring data:

- Accuracy

- Consistency

- Traceability.

By automating compliance checks and validation processes, a data observability platform can lower the risk of noncompliance penalties.
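An automated compliance check can be as simple as running a set of policy rules over every record and reporting the ones that fail. In the sketch below, the required fields, the 365-day retention period, and the email pattern are all hypothetical stand-ins; actual rules would come from the regulations and internal policies that apply to your data.

```python
import re
from datetime import date

# Hypothetical policy values; real ones come from your own compliance requirements.
REQUIRED_FIELDS = ("customer_id", "email", "collected_on")
RETENTION_DAYS = 365
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def compliance_violations(record: dict, today: date) -> list[str]:
    """Return the policy rules a single record breaks (empty list = compliant)."""
    violations = [f"missing {field}" for field in REQUIRED_FIELDS if not record.get(field)]
    email = record.get("email")
    if email and not EMAIL_PATTERN.fullmatch(email):
        violations.append("malformed email")
    collected = record.get("collected_on")
    if collected and (today - collected).days > RETENTION_DAYS:
        violations.append("exceeds retention period")
    return violations


def audit(records: list[dict], today: date) -> dict[int, list[str]]:
    """Map record index -> violations, keeping only non-compliant records."""
    report = {}
    for i, rec in enumerate(records):
        problems = compliance_violations(rec, today)
        if problems:
            report[i] = problems
    return report


records = [
    {"customer_id": "C1", "email": "a@example.com", "collected_on": date(2024, 3, 1)},
    {"customer_id": "C2", "email": "not-an-email", "collected_on": date(2024, 3, 1)},
    {"customer_id": "", "email": "b@example.com", "collected_on": date(2023, 1, 1)},
]
print(audit(records, today=date(2024, 6, 1)))
# {1: ['malformed email'], 2: ['missing customer_id', 'exceeds retention period']}
```

Storing each audit report with a timestamp also gives you an evidence trail for auditors.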

5. Root cause analysis

Data observability, when combined with DataOps, can enable organizations to conduct root cause analyses and data pipeline monitoring by tracing data issues back to their source. Root cause analysis allows for a better understanding of the underlying factors contributing to data quality issues. This can enable organizations to take corrective actions to prevent future occurrences through continuous improvement and iterative development.
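Lineage metadata is what makes this tracing possible. The sketch below walks upstream from a broken dataset and reports the failing datasets whose own direct inputs are all healthy, which are the most likely places where the problem started. The dataset names, the `UPSTREAM` mapping, and the `failing` set are hypothetical; in practice they would come from your lineage tool and your data quality checks.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets it reads from.
UPSTREAM = {
    "revenue_dashboard": ["daily_revenue"],
    "daily_revenue": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
    "orders_raw": [],
    "fx_rates": [],
}


def root_causes(dataset: str, upstream: dict[str, list[str]], failing: set[str]) -> list[str]:
    """Return failing ancestors of `dataset` (inclusive) whose direct inputs are all healthy."""
    seen: set[str] = set()
    queue = deque([dataset])
    candidates = []
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # guards against revisiting shared parents or cycles
        seen.add(node)
        parents = upstream.get(node, [])
        if node in failing and not any(p in failing for p in parents):
            candidates.append(node)
        queue.extend(parents)
    return sorted(candidates)


failing = {"revenue_dashboard", "daily_revenue", "orders_clean"}
print(root_causes("revenue_dashboard", UPSTREAM, failing))  # ['orders_clean']
```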

6 data observability best practices

If you are considering data observability for your data engineering tools, the following six best practices can help you implement it successfully:

1. Define data quality metrics

Create clear metrics to assess data quality, such as:

- Data completeness

- Data accuracy

- Data consistency

- Data timeliness

These data health metrics should be in line with business goals and data needs.
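As an illustration, three of these metrics can be expressed as simple ratios over a batch of rows. The sketch assumes each row is a dictionary with `currency` and `updated_at` fields, and it treats "consistency" as conformance to a hypothetical agreed set of currency codes. Accuracy is left out because measuring it requires a trusted reference to compare against.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical domain rule for consistency: currency codes must use this agreed set.
VALID_CURRENCIES = {"USD", "EUR", "GBP"}


def quality_metrics(
    rows: list[dict], required: list[str], now: datetime, max_age: timedelta
) -> dict[str, float]:
    """Compute completeness, consistency, and timeliness as ratios in [0, 1]."""
    if not rows:
        return {"completeness": 0.0, "consistency": 0.0, "timeliness": 0.0}
    n = len(rows)
    # Completeness: share of rows with every required field populated.
    complete = sum(all(row.get(col) is not None for col in required) for row in rows)
    # Consistency: share of non-null currency values that follow the agreed domain.
    currencies = [row["currency"] for row in rows if row.get("currency") is not None]
    consistent = sum(c in VALID_CURRENCIES for c in currencies)
    # Timeliness: share of rows updated within max_age.
    timely = sum(
        row.get("updated_at") is not None and now - row["updated_at"] <= max_age
        for row in rows
    )
    return {
        "completeness": complete / n,
        "consistency": consistent / len(currencies) if currencies else 0.0,
        "timeliness": timely / n,
    }


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
rows = [
    {"id": 1, "currency": "USD", "updated_at": now - timedelta(hours=1)},
    {"id": 2, "currency": "usd", "updated_at": now - timedelta(hours=2)},
    {"id": 3, "currency": None, "updated_at": now - timedelta(hours=48)},
    {"id": 4, "currency": "EUR", "updated_at": now - timedelta(hours=30)},
]
print(quality_metrics(rows, ["id", "currency", "updated_at"], now, timedelta(hours=24)))
```

Here completeness is 0.75, consistency is about 0.67 (the lowercase "usd" fails the rule), and timeliness is 0.5.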

2. Implement data lineage tracking

Map the data flow from source to destination. This can allow organizations to trace data issues back to their source and understand the impact of changes on downstream systems.
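Once the flow is mapped as a graph, impact analysis becomes a graph traversal. This sketch stores hypothetical lineage edges as a dictionary from each dataset to the datasets built from it, then uses breadth-first search to list everything downstream of a change.

```python
from collections import deque

# Hypothetical lineage edges: dataset -> datasets built from it.
DOWNSTREAM = {
    "orders_raw": ["orders_clean"],
    "orders_clean": ["daily_revenue", "customer_ltv"],
    "fx_rates": ["daily_revenue"],
    "daily_revenue": ["revenue_dashboard"],
    "customer_ltv": [],
    "revenue_dashboard": [],
}


def downstream_impact(dataset: str, edges: dict[str, list[str]]) -> list[str]:
    """Return every dataset that directly or indirectly depends on `dataset`."""
    seen: set[str] = set()
    queue = deque(edges.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(edges.get(node, []))
    return sorted(seen)


print(downstream_impact("fx_rates", DOWNSTREAM))
# ['daily_revenue', 'revenue_dashboard']
```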

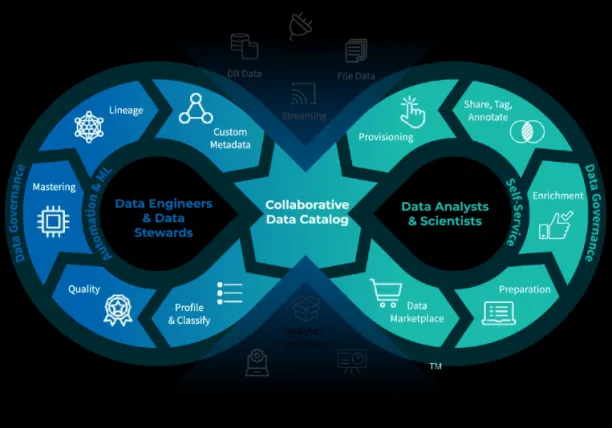

3. Develop a data catalog

Create a centralized repository that documents all data assets and the information related to them.

This can help data engineers collaborate more effectively and build a shared understanding of data across the organization.

4. Monitor data in real-time

Set up real-time data monitoring systems to continuously track data quality metrics and detect anomalies. This allows organizations to identify and address issues as they arise, reducing the likelihood of data corruption or loss.

5. Establish data quality thresholds

Set thresholds for data quality metrics that trigger alerts when data quality falls below acceptable levels. This can help businesses take immediate action to resolve data downtime issues and maintain data reliability.
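A threshold check can be a small comparison between computed metrics and agreed minimums. The sketch below returns alert messages rather than sending them, so they can be handed to whatever alerting channel you already use; the metric names and threshold values in `THRESHOLDS` are hypothetical.

```python
# Hypothetical minimum acceptable values for each metric (ratios in [0, 1]).
THRESHOLDS = {"completeness": 0.98, "timeliness": 0.95, "consistency": 0.99}


def check_thresholds(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return one alert message per metric that is missing or below its threshold."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            alerts.append(f"{name}: metric missing")  # a silent metric is also a problem
        elif value < minimum:
            alerts.append(f"{name}: {value:.2%} below threshold {minimum:.2%}")
    return alerts


print(check_thresholds({"completeness": 0.995, "timeliness": 0.90, "consistency": 0.999}, THRESHOLDS))
# ['timeliness: 90.00% below threshold 95.00%']
```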

6. Foster a data quality culture

Encourage a culture of data quality awareness and responsibility by providing employees with:

- Training

- Tools

- Other relevant resources, such as data quality guidelines.

This can empower individuals to take ownership of data quality and contribute to the organization’s data observability efforts.


External Links

1. Google Trends.

2. “Data Observability – A Crucial Property in a DataOps World”. Eckerson Group. July 2, 2018. Retrieved March 15, 2023.

3. “Data Observability”. Cyral. March 15, 2023.

4. Teeples, Haley. “Data Observability: Data Management Best-Practice Explained”. Zaloni. September 13, 2021. Retrieved March 15, 2023.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses every month (according to SimilarWeb), including 60% of the Fortune 500.

Cem's work has been cited by leading global publications including Business Insider, Forbes, and the Washington Post, global firms like Deloitte and HPE, NGOs like the World Economic Forum, and supranational organizations like the European Commission.

Throughout his career, Cem served as a tech consultant, tech buyer, and tech entrepreneur. He advised businesses on their enterprise software, automation, cloud, AI/ML, and other technology-related decisions at McKinsey & Company and Altman Solon for more than a decade. He also published a McKinsey report on digitalization.

He led technology strategy and procurement at a telco while reporting to the CEO. He also led the commercial growth of deep tech company Hypatos, which went from zero to 7-digit annual recurring revenue and a 9-digit valuation within two years. Cem's work at Hypatos was covered by leading technology publications like TechCrunch and Business Insider.

Cem regularly speaks at international technology conferences. He graduated from Bogazici University as a computer engineer and holds an MBA from Columbia Business School.
