Web scraping is the process of collecting data from websites to understand what information their pages contain. The extracted data is used in many applications, such as competitor research, public relations, and trading.

Web scraping is not inherently illegal. However, a web scraper (human or software) may use the extracted data in ways that conflict with the content owner’s interests. That is why there is an ongoing legal and technical struggle between data collectors (e.g., web scrapers) and data owners, such as the legal case between hiQ Labs and LinkedIn.

Data owners create barriers to ensure that only humans can access their data, and in response, data collectors combine technology and human effort to overcome these barriers.

Using RPA bots, users can automate web scraping of unprotected websites via drag-and-drop features to eliminate manual data entry and reduce human errors. However, to scrape websites that heavily protect their data and content, users need dedicated web scraping applications in combination with proxy server solutions.

Here, we explore how RPA is used for web scraping:

What is RPA?

Robotic process automation (RPA) is software that automates repetitive tasks by mimicking user interactions with GUI elements. The interest in RPA is rising as the technology matures and vendors provide low/no-code interfaces to build RPA bots.

The global RPA market is expected to reach $11B by 2027. RPA is a strong candidate for automating repetitive tasks: a typical rules-based process can be 70%-80% automated.

When done manually, web scraping can be a tedious task involving many clicks, scrolls, and copy-and-paste repetitions to extract the target data. That is why it is compelling to use RPA to automate it.

How to automate web scraping with RPA?

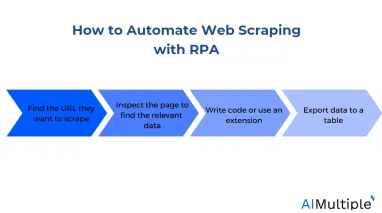

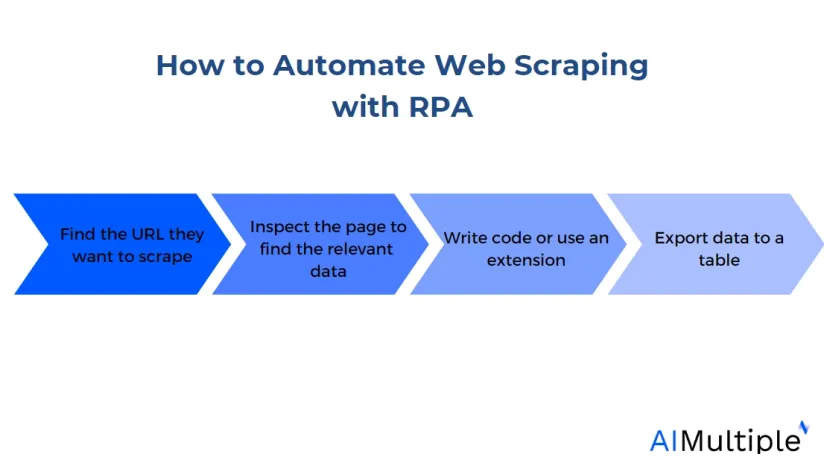

RPA bots perform repetitive tasks by replicating the GUI steps a human user would take. To scrape a website manually, a user would:

- Find the URL they want to scrape

- Inspect the page to find data relevant to their search

- Write code (e.g., in Python) or use a browser extension to extract the data

- Export data to a table
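For comparison, the four manual steps above can be scripted in a few dozen lines of Python. The sketch below uses only the standard library and assumes a hypothetical page where each item of interest sits in an element with a known CSS class; the `product-name` class, the example URL, and the User-Agent string are placeholders, not taken from any real site. It also assumes reasonably well-formed HTML served directly by the server; pages rendered by JavaScript need a browser-driven tool instead.

```python
import csv
import urllib.request
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Step 1: download the page you want to scrape (the User-Agent value is a placeholder)."""
    req = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


class ClassTextExtractor(HTMLParser):
    """Steps 2-3: collect the text of every element whose class list contains target_class."""

    def __init__(self, target_class: str):
        super().__init__()
        self.target_class = target_class
        self.depth = 0       # > 0 while inside a matching element
        self.current = []    # text fragments of the element being read
        self.items = []      # one cleaned string per matching element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            # Join fragments with spaces, then collapse runs of whitespace.
            self.items.append(" ".join(" ".join(self.current).split()))

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def extract_by_class(html: str, target_class: str) -> list:
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.items


def export_to_csv(values, path: str, header: str = "value") -> None:
    """Step 4: write the extracted values to a one-column CSV table."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow([header])
        writer.writerows([v] for v in values)
```

A typical call chains the steps: `export_to_csv(extract_by_class(fetch_html("https://example.com/products"), "product-name"), "products.csv", header="name")`.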

RPA bots can be programmed to open the designated URL (logging in if needed), scroll through multiple pages, extract specific data, and transform it into the required format. The bot can also enter the extracted data directly into another application or system for further use (e.g., sending it by email or updating spreadsheet fields).
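The transform-and-hand-off part of such a bot can be sketched in Python as well. The example assumes hypothetical scraped records with `name` and `price` text fields, converts them into a typed table, and packages the result as a CSV email attachment using the standard `email` package. The addresses are placeholders, and actually sending the message (for instance with `smtplib.SMTP(host).send_message(msg)`) is deliberately left out.

```python
import csv
import io
from email.message import EmailMessage
from typing import Dict, List


def normalize_price(raw: str) -> float:
    """Turn a scraped price string such as '$1,299.00' into a number."""
    return float(raw.replace("$", "").replace(",", "").strip())


def transform(records: List[Dict[str, str]]) -> List[dict]:
    """Reshape raw scraped records into the format the receiving system expects."""
    return [{"name": r["name"].strip(), "price_usd": normalize_price(r["price"])} for r in records]


def to_csv_bytes(rows: List[dict]) -> bytes:
    """Serialize the rows as CSV (header row first) and encode them as UTF-8."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["name", "price_usd"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue().encode("utf-8")


def build_report_email(rows: List[dict], sender: str, recipient: str) -> EmailMessage:
    """Package the transformed rows as a CSV attachment on a plain-text email."""
    msg = EmailMessage()
    msg["Subject"] = f"Scraped data: {len(rows)} record(s)"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("The latest scraped data is attached as a CSV file.")
    msg.add_attachment(to_csv_bytes(rows), maintype="text", subtype="csv", filename="scraped.csv")
    return msg
```

Swapping `build_report_email` for a function that writes to a spreadsheet or calls another system's API changes only the last step; the extraction and transformation stay the same.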

What are the benefits of RPA in web scraping?

Automating web scraping with RPA provides the following benefits:

- Eliminate manual data entry errors

- Extract images and videos

- Reduce the time spent on data extraction and entry

- Monitor websites and portals automatically on a regular schedule for data changes
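Monitoring a site for changes usually comes down to re-running the same extraction on a schedule and comparing the new result with the previous one. Below is a minimal Python sketch, assuming each scraped record is a dictionary with a unique `url` key (a hypothetical field name): a SHA-256 fingerprint tells the bot cheaply whether anything changed, and a keyed diff tells it what changed.

```python
import hashlib
import json
from typing import Dict, List, Optional, Tuple

Record = Dict[str, str]


def fingerprint(records: List[Record]) -> str:
    """SHA-256 of a canonical JSON dump; key order inside each record is ignored."""
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def has_changed(previous_fp: Optional[str], records: List[Record]) -> Tuple[bool, str]:
    """Return (changed?, new fingerprint); store the fingerprint for the next run."""
    current = fingerprint(records)
    return current != previous_fp, current


def diff_by_key(old: List[Record], new: List[Record], key: str = "url") -> Dict[str, List[str]]:
    """List which records were added, removed, or modified between two runs."""
    old_map = {r[key]: r for r in old}
    new_map = {r[key]: r for r in new}
    return {
        "added": sorted(new_map.keys() - old_map.keys()),
        "removed": sorted(old_map.keys() - new_map.keys()),
        "changed": sorted(k for k in new_map.keys() & old_map.keys() if new_map[k] != old_map[k]),
    }
```

The fingerprint is sensitive to the order of the records, so sort them before hashing if the site reorders its listings. The schedule itself can come from cron, Windows Task Scheduler, or the scheduling feature of the RPA platform.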

Web scraping tools or RPA software allow users to build scraping bots without writing code or scripts for data extraction.

RPA can be the right tool for web scraping, especially when the scraped data needs further processing. Other technologies can be easily integrated into the RPA bots used for scraping. For example, a machine learning API integrated into the scraping bot could identify the company behind a website from its extracted logo.
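Integrating another technology typically means inserting an extra processing step between extraction and output. The Python sketch below shows one way to structure that: a pipeline that passes each scraped record through a list of step functions. `identify_company_from_logo` is a hypothetical stand-in for a call to a machine learning logo-recognition service; it fakes the model's answer with a lookup table, and the field names `logo_file` and `company` are illustrative only.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, str]
Step = Callable[[Record], Record]


def run_pipeline(records: Iterable[Record], steps: List[Step]) -> List[Record]:
    """Pass every scraped record through each processing step in order."""
    results = []
    for record in records:
        for step in steps:
            record = step(record)
        results.append(record)
    return results


def strip_whitespace(record: Record) -> Record:
    """Simple cleanup step: trim stray spaces left over from the page markup."""
    return {k: v.strip() for k, v in record.items()}


def identify_company_from_logo(record: Record) -> Record:
    """Hypothetical enrichment step standing in for an ML logo-recognition API call.

    A real implementation would send the logo image to the service and read back
    a label; here a lookup table fakes that response.
    """
    fake_model_output = {"logo-acme.png": "Acme Corp", "logo-globex.png": "Globex"}
    enriched = dict(record)  # copy so the incoming record stays unchanged
    enriched["company"] = fake_model_output.get(record.get("logo_file", ""), "unknown")
    return enriched
```

Keeping each step as a plain function makes it straightforward to replace the stub with a real API client without touching the extraction code.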

What are the challenges facing RPA in web scraping?

Since RPA bots rely on GUI elements to locate the target data, it is difficult to automate web scraping when pages do not display content consistently. The top challenges facing RPA in web scraping are:

UI elements

Some UI elements make scraping harder, but these are challenges that RPA bots can handle.

“Load more” button

Typically, the bot scrolls down a page, extracts the data, and exports it to the output file. Some web pages, especially product pages, load data in parts and let users explore more products via a “load more” button. In that case, the bot stops extracting data at the end of the initially loaded content instead of loading more products.

The solution is to add a loop to the bot that clicks the “load more” GUI element as long as it exists, stopping once no more buttons appear on the web page.
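The loop logic is the same whatever tool drives the browser. The Python sketch below assumes a hypothetical `Page` interface with `has_element`, `click`, and `wait_until_idle` methods; in practice these would map onto the corresponding actions of your RPA tool or browser-automation library, and the `button.load-more` selector is a placeholder. The `max_clicks` cap is an added safeguard so a button that never disappears cannot keep the bot clicking forever.

```python
from typing import Protocol


class Page(Protocol):
    """Hypothetical browser interface; map these methods onto your RPA tool's actions."""

    def has_element(self, selector: str) -> bool: ...

    def click(self, selector: str) -> None: ...

    def wait_until_idle(self) -> None: ...


def expand_all(page: Page, button_selector: str = "button.load-more", max_clicks: int = 50) -> int:
    """Click the 'load more' button until it disappears; return how many times it was clicked."""
    clicks = 0
    while clicks < max_clicks and page.has_element(button_selector):
        page.click(button_selector)
        page.wait_until_idle()  # give newly loaded items time to render before checking again
        clicks += 1
    return clicks
```

Once `expand_all` returns, every product is on the page and the bot can extract them in a single pass.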

Go to the next page

As with the “load more” button, some content may be split across subsequent pages. The solution is again a loop that clicks the “next page” GUI element to open the following page until that element no longer appears.
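When the “next page” control is an ordinary link, a script can follow its target directly instead of clicking it through the GUI. The Python sketch below takes that route rather than the click-based approach: it looks for an `<a>` or `<link>` element marked `rel="next"` (an HTML convention that many, but not all, sites use) and keeps following it until no such link is left. The `fetch` argument is an assumption: any function that returns a page's HTML for a URL. A `seen` set and a `max_pages` cap stop the loop on circular or endless pagination.

```python
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Records the href of the first <a> or <link> element tagged rel="next"."""

    def __init__(self):
        super().__init__()
        self.next_href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        rels = (a.get("rel") or "").lower().split()
        if self.next_href is None and tag in ("a", "link") and "next" in rels and a.get("href"):
            self.next_href = a["href"]


def crawl_pages(start_url: str, fetch: Callable[[str], str], max_pages: int = 20) -> List[str]:
    """Visit start_url and each following 'next' page; return the HTML of every page visited."""
    pages: List[str] = []
    seen = set()
    url: Optional[str] = start_url
    while url and url not in seen and len(pages) < max_pages:
        seen.add(url)
        html = fetch(url)
        pages.append(html)
        finder = NextLinkFinder()
        finder.feed(html)
        finder.close()
        # Resolve relative links such as "/p2" or "p3" against the current page URL.
        url = urljoin(url, finder.next_href) if finder.next_href else None
    return pages
```

Each returned page can then go through the same extraction step as the first one.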

Pop-up ads

Pop-up elements or ads can hide GUI elements from the bot and prevent it from extracting the underlying data. One solution is to install an ad-blocker extension in the browser used for web scraping.

Scraping protection systems

Websites like LinkedIn use sophisticated technology to protect their content from being scraped. In such cases, users have a few options:

- Work with a company that provides the website’s data on a data-as-a-service basis. In this model, the supplier handles all the programming and manual verification and delivers clean data via an API or CSV download.

- Build your own data pipeline. You can rely on web scraping software or RPA in combination with proxy servers to build bots whose behavior is hard to distinguish from that of human visitors.
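As a rough Python sketch of the proxy side of such a pipeline, the class below routes each request through the next proxy in a list (round-robin) using the standard library's `urllib.request.ProxyHandler`, and waits a random interval before each request, so traffic is spread across addresses and over time. The proxy addresses are hypothetical placeholders; some proxy providers supply a single rotating endpoint instead, which would replace this rotation logic.

```python
import itertools
import random
import time
import urllib.request

# Hypothetical proxy endpoints: substitute the addresses from your proxy provider.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


class RotatingProxyFetcher:
    """Sends each request through the next proxy in a round-robin cycle."""

    def __init__(self, proxies, min_delay: float = 1.0, max_delay: float = 3.0):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)
        self.min_delay = min_delay
        self.max_delay = max_delay

    def next_opener(self):
        """Return (proxy_url, opener) for the next proxy in the rotation."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)

    def fetch(self, url: str, timeout: float = 10.0) -> str:
        """Pause a random interval, then download url through the next proxy."""
        time.sleep(random.uniform(self.min_delay, self.max_delay))
        _, opener = self.next_opener()
        with opener.open(url, timeout=timeout) as resp:
            return resp.read().decode("utf-8", errors="replace")
```

Round-robin is the simplest rotation policy; a production pipeline would typically also drop proxies that keep failing and retry the request through another one.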

To learn how to overcome web scraping challenges, read “Top 7 Web Scraping Best Practices”.

Further reading

To learn more about web scraping, feel free to read our in-depth articles about:

- The Ultimate Guide to Proxy Server Types

- Top 5 Web Scraping Case Studies & Success Stories

- Top 10 Proxy Service Providers for Web Scraping

And if you are interested in using a web scraper for your business, don’t forget to check out our data-driven list of web crawlers.
