Serverless GPUs provide easy-to-scale computing for AI workloads. However, their costs can be substantial for large-scale projects. Navigate to the sections that match your needs:

- Find the most cost-effective providers by tokens per dollar

- Compare hourly rates across all major providers

- Performance data for inference and fine-tuning throughput

Serverless GPU price per throughput

Serverless GPU providers offer different performance levels and pricing for AI workloads. Compare the most cost-effective GPU configurations for your fine-tuning and inference needs across leading serverless platforms:
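As a rough illustration of the price-per-throughput idea, tokens per dollar can be derived from a measured throughput and an hourly price. The sketch below uses a hypothetical `tokens_per_dollar` helper; the GPU names, throughputs, and prices are made-up placeholders, not benchmark results.

```python
def tokens_per_dollar(tokens_per_second: float, price_per_hour: float) -> float:
    """Tokens processed for each dollar spent at a given hourly price."""
    if price_per_hour <= 0:
        raise ValueError("price_per_hour must be positive")
    return tokens_per_second * 3600 / price_per_hour


# Placeholder numbers for illustration only (not measured values).
offers = {
    "gpu-a": {"tokens_per_second": 2000.0, "price_per_hour": 4.0},
    "gpu-b": {"tokens_per_second": 900.0, "price_per_hour": 1.5},
}

# Rank the hypothetical offers from most to least cost-effective.
ranked = sorted(
    offers,
    key=lambda name: tokens_per_dollar(**offers[name]),
    reverse=True,
)

for name in ranked:
    print(name, round(tokens_per_dollar(**offers[name])))
```

In this toy comparison the slower GPU ranks first because its lower hourly price more than offsets its lower throughput, which is why raw speed and hourly rate are worth reading together.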

Serverless GPU price calculator

Serverless GPU benchmark results

You can read more about our benchmark methodology for serverless GPU.

Shortlisted 10 serverless GPU providers

Because this is an emerging field with limited data available, companies are sorted alphabetically, except for sponsors, which are placed at the top of the list with a link to their website.

| Vendors* | Founded | # of GPU types | Price leader in | Rating |
|---|---|---|---|---|
| RunPod | 2020 | 7 | H100, A6000, A5000, A4000 | 4.4 based on 34 reviews |
| Baseten | 2019 | 5 | | 5 based on 10 reviews |
| Beam Cloud | 2022 | 5 | A10G | 0 |
| Fal AI | 2021 | 2 | | 0 |
| Koyeb | 2020 | 3 | V100 | 4.9 based on 16 reviews |
| Modal Labs | 2021 | 6 | | 3.7 based on 16 reviews |
| Mystic AI | 2019 | 4 | T4 | 0 |
| Novita AI | 2023 | 6 | H100, RTX A6000, RTX 4090 | 0 |
| Replicate | 2019 | 3 | | 0 |
| Seeweb | 1998 | 4 | L40S, L4, RTX A6000 | 0 |

RunPod

RunPod delivers fully managed and scalable AI endpoints for diverse workloads. RunPod users can choose between GPU instances and serverless endpoints and employ a Bring Your Own Container (BYOC) approach. Some of the RunPod features include:

- A simple loading process: users drop in a container link to pull a pod.

- A credit-based payment and billing system.

Baseten Labs

Baseten is a machine learning infrastructure platform that helps users deploy various sizes and types of models from the model library at scale. It leverages GPU instances like A100, A10, and T4 to enhance computational performance.

Baseten also introduces an open-source tool called Truss. This tool can help developers deploy AI/ML models in real-world scenarios. With Truss, developers can:

- Package and test model code, weights, and dependencies using a model server.

- Develop their model with quick feedback from a live reload server, avoiding complex Docker and Kubernetes configurations.

- Accommodate models created with any Python framework, be it transformers, diffusers, PyTorch, TensorFlow, XGBoost, sklearn, or even entirely custom models.

Beam Cloud

Beam, formerly known as Slai, provides easy REST API deployment with built-in features like authentication, autoscaling, logging, and metrics. Beam users can:

- Execute GPU-based long-running training tasks, choosing between one-time or scheduled automated retraining

- Deploy functions to a task queue with automated retries, callbacks, and task status query.

- Customize autoscaling rules, optimizing user waiting times.

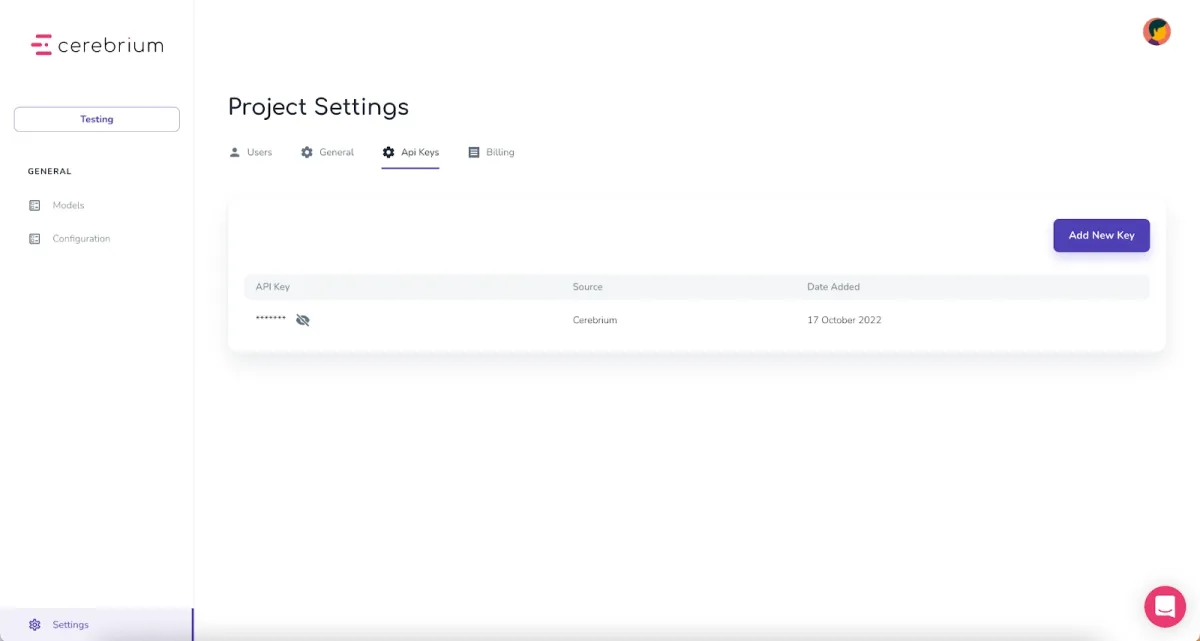

Cerebrium AI

Cerebrium AI offers a diverse selection of GPUs, including H100s, A100s, and A5000s, with over 8 GPU types available in total. Cerebrium allows users to define their environment with infrastructure-as-code and to access code directly, without the need for S3 bucket management.

Fal AI

Fal AI delivers ready-to-use models with API endpoints that customers can customize and integrate into their apps. The platform supports serverless GPUs such as A100 and T4.

Koyeb

Koyeb is a serverless platform designed for developers to easily deploy applications globally, without the need to manage servers, infrastructure, or operations. Koyeb offers serverless GPUs with Docker support and horizontal scaling for AI tasks like generative AI, video processing, and LLMs. Its offering includes H100 and A100 GPUs with up to 80 GB of VRAM.

Its pricing ranges from $0.50/hr to $3.30/hr, billed by the second.
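To make per-second billing concrete, the sketch below converts an hourly list price into the cost of a short job. The `per_second_cost` helper is hypothetical; the two hourly rates come from the range quoted above, while the 90-second job duration is an assumed example.

```python
def per_second_cost(price_per_hour: float, seconds_used: float) -> float:
    """Cost of a job billed by the second at an hourly list price."""
    if price_per_hour < 0 or seconds_used < 0:
        raise ValueError("inputs must be non-negative")
    return price_per_hour / 3600 * seconds_used


# Hourly rates are the ends of the quoted range; the 90-second
# job duration is a hypothetical example.
for rate in (0.50, 3.30):
    print(f"${rate:.2f}/hr for 90 s -> ${per_second_cost(rate, 90):.4f}")
```

With this billing model a short burst of work costs a small fraction of the hourly rate, rather than a full billed hour.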

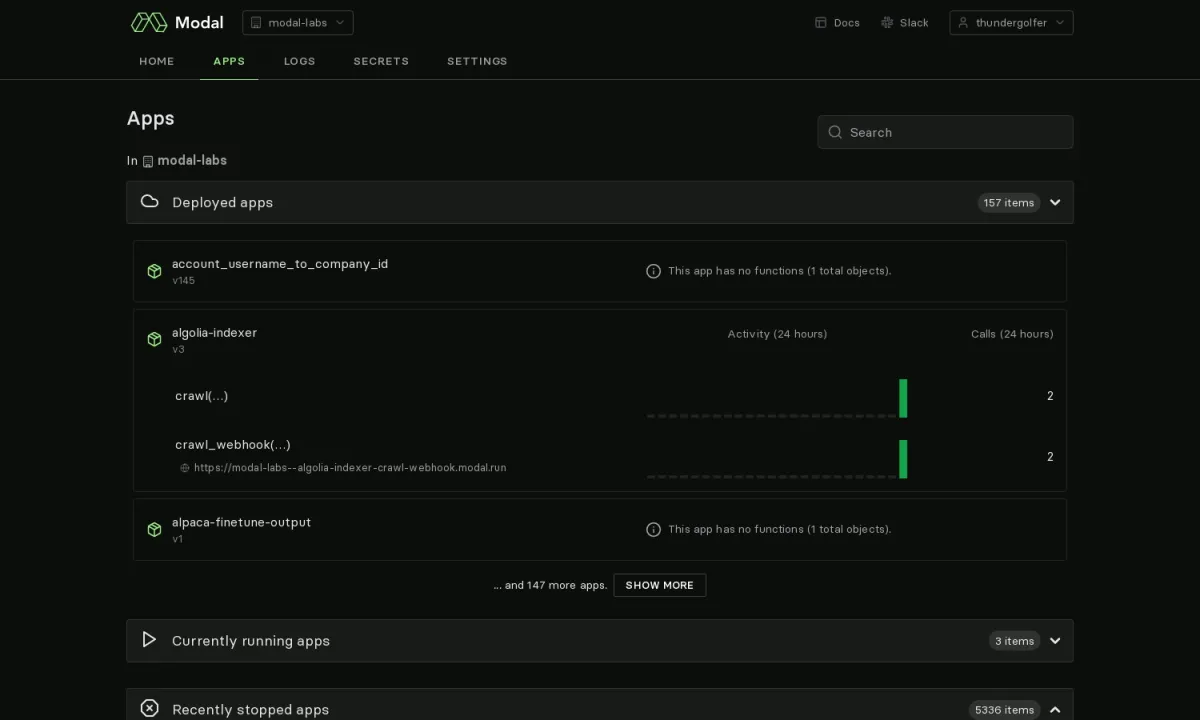

Modal

Modal is a serverless cloud platform that allows developers to execute code remotely, define container environments programmatically, and scale to thousands of containers. It supports GPU integration, web endpoint serving, scheduled job deployment, and distributed data structures like dictionaries and queues. The platform operates with a pay-per-second model and requires no infrastructure configuration, focusing on code-based setup without YAML.

To use Modal, developers sign up at modal.com, install the Modal Python package via pip install modal, and authenticate with modal setup. Code runs in containers within Modal’s cloud, abstracting away infrastructure management like Kubernetes or AWS. Currently limited to Python, it may expand to other languages.

Mystic AI

Mystic AI’s serverless platform is Pipeline Core, which hosts ML models behind an inference API. Pipeline Core lets users create custom models from over 15 options, such as GPT, Stable Diffusion, and Whisper. Pipeline Core features include:

- Simultaneous model versioning and monitoring

- Environment management, including libraries and frameworks

- Auto-scaling across various cloud providers

- Support for online, batch, and streaming inference

- Integrations with other ML and infrastructure tools.

Mystic AI also provides an active Discord community for support.

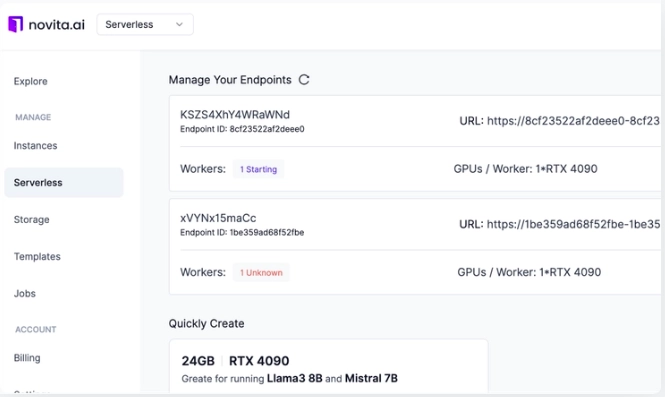

Novita AI

Novita AI is a platform designed to support developers in creating advanced AI products without needing deep expertise in machine learning. It offers a comprehensive suite of APIs and tools for building applications across various domains, including image, video, audio, and large language model (LLM) tasks.

Novita AI’s serverless system offers auto-scaling, deployment with DockerHub support and real-time monitoring.

Replicate

Replicate’s platform supports custom and pre-trained machine learning models. The platform offers a waitlist for open-source models and a choice between NVIDIA T4 and A100 GPUs. It also includes an open-source library, Cog, to facilitate model deployment.

Seeweb

Seeweb is a cloud computing provider that offers serverless GPU solutions to optimize AI workloads. These solutions serve as an entry point for developers looking to run popular models, forked models, and pre-trained models efficiently using Python. Developers can leverage Kubernetes to speed up deployments.

Key features:

- Autoscaling to adjust resources dynamically, reducing cold starts associated with serverless functions.

- GDPR compliance through operation in a European cloud, with a global network for expanded reach.

- 24x7x365 support, so users receive reliable assistance in managing their ML models.

Provided GPUs include A100, H100, L40S, L4 and RTX A6000.

What are other cloud providers?

Top cloud providers such as Google, AWS, and Azure provide serverless functions, which do not support GPUs at the moment. Other providers, like Scaleway or CoreWeave, deliver GPU inference but do not offer serverless GPUs.

Find out more about cloud GPU providers and the GPU market.

What are the benefits of serverless GPU?

LLMs like ChatGPT have been a hot topic in the business world since last year, and the number of these models has increased drastically. Serverless GPUs help address several challenges of running LLMs through benefits such as:

- Cost efficiency: Users only pay for the GPU resources they actually use, making it a cost-effective solution. In traditional server setups, users pay for constantly provisioned resources whether or not they are in use.

- Scalability: Serverless architectures automatically scale to handle varying workloads. When the demand for resources increases or decreases, the infrastructure dynamically adjusts without manual intervention.

- Simplified management: Developers can focus more on writing code for specific functions or tasks, as the cloud provider handles server provisioning, scaling, and other infrastructure management tasks.

- On-demand resource allocation: Serverless GPU architecture allows applications to access GPU resources on demand. This removes the need to manage and maintain physical or virtual servers dedicated to GPU processing. Resources are allocated dynamically based on application requirements.

- Flexibility: Developers have the flexibility to scale resources up or down based on the specific needs of their applications. This adaptability is particularly useful for workloads with varying computational requirements.

- Enhanced parallel processing: GPU computing excels at parallel processing tasks. Serverless GPU architectures can therefore be used in applications that require significant parallel computation, such as machine learning inference, data processing, and scientific simulations.
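The cost-efficiency point above can be checked with simple arithmetic. The sketch below compares a GPU that is provisioned all month with one billed only for busy hours, and computes the break-even point. All function names and prices are hypothetical, and the 730-hour month is an approximation.

```python
def monthly_cost_always_on(price_per_hour: float,
                           hours_in_month: float = 730.0) -> float:
    """Cost of a dedicated GPU that is provisioned for the whole month."""
    return price_per_hour * hours_in_month


def monthly_cost_serverless(price_per_hour: float, busy_hours: float) -> float:
    """Cost of a serverless GPU that is billed only while it is working."""
    return price_per_hour * busy_hours


def break_even_busy_hours(dedicated_price: float, serverless_price: float,
                          hours_in_month: float = 730.0) -> float:
    """Busy hours per month above which the dedicated GPU becomes cheaper."""
    return dedicated_price * hours_in_month / serverless_price


# Hypothetical prices, assuming the serverless hourly rate is the higher one.
dedicated, serverless = 2.0, 3.0
print(break_even_busy_hours(dedicated, serverless))
```

Under these assumed prices, a workload that keeps the GPU busy for fewer hours than the break-even value is cheaper on serverless; a workload that runs nearly around the clock is not.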

Serverless GPU benchmark methodology

Prices: Serverless GPU prices are crawled monthly from all providers.

Performance:

- The performance of all serverless GPU models was measured on the Modal cloud platform.

- Text fine-tuning was measured by fine-tuning Llama-3.2-1B-Instruct on the FineTome-100k dataset, with 1M tokens processed over 5 epochs. The number of tokens multiplied by the number of epochs was divided by the fine-tuning time to obtain the number of tokens fine-tuned per second.

- Text inference was measured during inference of 1 million tokens, including both input and output tokens. We divided the number of tokens by the total duration to calculate the average number of tokens per second during inference.
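The two throughput formulas described above can be written out as a short sketch. The function names are hypothetical, and the durations in the example are placeholders rather than measured results; only the token and epoch counts follow the methodology.

```python
def finetuning_tokens_per_second(tokens: int, epochs: int, seconds: float) -> float:
    """Tokens multiplied by epochs, divided by wall-clock fine-tuning time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return tokens * epochs / seconds


def inference_tokens_per_second(total_tokens: int, seconds: float) -> float:
    """Input plus output tokens divided by total inference duration."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return total_tokens / seconds


# Token and epoch counts follow the methodology; durations are hypothetical.
print(finetuning_tokens_per_second(1_000_000, 5, 2_500.0))
print(inference_tokens_per_second(1_000_000, 400.0))
```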

H200 vs H100 Performance Notes:

- The H200 showing lower fine-tuning performance than the H100 may seem counterintuitive, given that it is the newer GPU with larger memory (141GB vs 80GB). Several factors could contribute to this result, including differences in memory bandwidth utilization, software optimization maturity, or thermal management under sustained workloads.

- This benchmark used a relatively small 1B parameter model, which may not fully leverage H200’s additional memory capacity. The performance gap might differ significantly with larger models that better utilize the H200’s expanded memory.

- Performance can also vary based on specific workload characteristics, batch sizes, and the particular software stack used during testing.

Next Steps:

- We plan to expand our benchmarks to include larger models (7B, 13B, and 70B parameters) to better understand how performance scales with model size and memory requirements.

- Future testing will include multi-GPU setups and longer context length scenarios where H200’s architectural advantages may be more apparent.

How to use Serverless GPUs for ML models

In traditional machine learning workflows, developers and data scientists often provision and manage dedicated servers or clusters with GPUs to handle the computational demands of training complex models. Serverless GPU for machine learning removes this infrastructure management burden.

The list below outlines how serverless GPUs can be used in ML workflows:

- Training models: Serverless GPU facilitates machine learning model training by offering dynamic resource allocation for efficient training on extensive datasets. Developers benefit from on-demand resources without the hassle of managing dedicated servers.

- Inference: Serverless GPU is crucial for model inference, making quick predictions on new data. Ideal for applications like image recognition and natural language processing, it ensures fast and efficient execution, especially during variable demand periods.

- Real-time processing: Applications requiring real-time processing, such as video analysis, leverage Serverless GPU. Dynamic resource scaling enables swift processing of incoming data streams, making it suitable for real-time applications across domains.

- Batch processing: Serverless GPU handles large-scale data processing tasks in ML workflows involving batch processing. This is essential for data preprocessing, feature extraction, and other batch-oriented machine learning operations.

- Event-driven ML workflows: Serverless architectures are event-driven, responding to triggers or events, such as updating a model when new data becomes available or retraining a model in response to certain events.

- Hybrid architectures: Some ML workflows combine serverless and traditional computing resources. For instance, GPU-intensive model training can run on dedicated resources, with the trained model then moved to a serverless environment for AI inference, optimizing resource utilization.

FAQs

What is GPU inference?

GPU inference refers to the process of utilizing Graphics Processing Units (GPUs) to make predictions or inferences based on a pre-trained machine learning model. The GPU accelerates the computational tasks involved in processing input data through the trained model, resulting in faster and more efficient predictions. The parallel processing capabilities of GPUs enhance the speed and efficiency of these inference tasks compared to traditional CPU-based approaches.

GPU inference is particularly valuable in applications such as image recognition, natural language processing, and other machine learning tasks that involve making predictions or classifications in real-time or near real-time scenarios.

What is serverless GPU?

Serverless GPU describes a computing model where developers run applications without managing underlying server infrastructure. GPU resources are dynamically provisioned as needed. In this environment, developers concentrate on coding specific functions while the cloud provider handles infrastructure, including server scaling.

Despite the term “serverless” suggesting an absence of servers, they still exist but are abstracted from developers. In GPU computing, this architecture allows on-demand GPU access without the need for physical or virtual server management.

Serverless GPU computing is commonly employed for tasks demanding significant parallel processing, like machine learning, data processing, and scientific simulations. Cloud providers offering serverless GPU capabilities automate GPU resource allocation and scaling based on application demand.

This architecture provides benefits such as cost efficiency and scalability, as the infrastructure dynamically adjusts to varying workloads. It enables developers to focus more on code and less on managing the underlying infrastructure.
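A toy example can make the idea of demand-based scaling more tangible. The sketch below is not any provider's actual autoscaler; it is a minimal, assumed policy in which the number of GPU workers follows the request backlog and drops to zero when there is no work. The function name and parameters are hypothetical.

```python
import math


def desired_replicas(queued_requests: int, requests_per_replica: int,
                     max_replicas: int) -> int:
    """GPU workers to run for the current backlog; zero when idle."""
    if requests_per_replica <= 0 or max_replicas < 0:
        raise ValueError("invalid capacity settings")
    if queued_requests <= 0:
        return 0  # scale to zero: no GPU workers run while idle
    needed = math.ceil(queued_requests / requests_per_replica)
    return min(needed, max_replicas)  # never exceed the configured ceiling


for backlog in (0, 9, 100):
    print(backlog, desired_replicas(backlog, requests_per_replica=4, max_replicas=10))
```

Real platforms typically add details this sketch leaves out, such as cold-start delays and cooldown periods before scaling down.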

Why is serverless GPU pricing important?

Megatron-Turing from NVIDIA and Microsoft is estimated to have cost approximately $100 million for the entire project [4]. Such system costs prevent enterprises from adopting large language models (LLMs) despite their benefits.

NVIDIA L40 vs L40S

The NVIDIA L40S is a more powerful, AI-optimized version of the L40 GPU. While both use the Ada Lovelace architecture, the L40S delivers significantly higher performance for AI training and inference, due to enhanced tensor core capabilities and support for FP8 precision.

The L40 is better suited for graphics, rendering, and general-purpose workloads, whereas the L40S is ideal for compute-intensive AI tasks in data centers.

