Web Scraping Tools: Data-driven Benchmarking in 2024

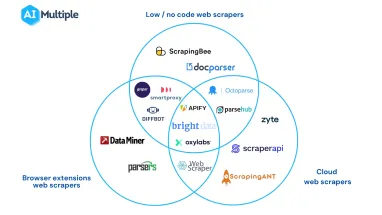

Web scraping tools help businesses automatically collect large amounts of data from multiple web sources. Companies can either build their own scrapers using web scraping libraries or use one of these 3 types of off-the-shelf scrapers:

- Off-the-shelf web scrapers (including low/no code web scrapers)

- Cloud web scrapers

- Browser extensions web scrapers.

However, there are numerous web scraping services available for each type of web scraper. Buyers face confusion regarding the capabilities of web scraping software while choosing a web scraper for their businesses. It is important to understand the web scraping vendor landscape and key capabilities of web scrapers to make an informed decision.

This article evaluates the top web scraping tools and their capabilities to assist businesses in selecting the best fit for their data scraping tasks.

The best web scraping tools of 2024: Quick summary

| Vendors | Pricing/mo | Trial | PAYG | JavaScript rendering* | Built-in Proxy** | Type*** |

|---|---|---|---|---|---|---|

| Bright Data | $500 | 7-day | ✅ | ✅ | ✅ | No-code |

| Apify | $49 | 7-day | ❌ | ✅ | ✅ | API |

| Smartproxy | $50 | 3K free requests | ❌ | ✅ | ✅ | No-code |

| Oxylabs | $49 | 7-day | ❌ | ✅ | ✅ | API |

| Nimble | $600 | 7-day | ❌ | ✅ | ✅ | API |

| NetNut | $750 | 7-day | ❌ | ✅ | ✅ | API |

| SOAX | $59 | 7-day | ❌ | N/A | ✅ | API |

| Zyte | $100 | $5 free for a month | ❌ | ✅ | ✅ | API |

| Diffbot | $299 | 14-day | ❌ | ✅ | ✅ | API |

| Octoparse | $89 | 14-day | ❌ | ✅ | ✅ | No-code |

| Nanonets | $499 | N/A | ✅ | N/A | ❌ | OCR API |

| Scraper API | $149 | 7-day | ❌ | ✅ | ✅ | API |

Supported features

JavaScript Rendering*

Web pages that include JavaScript elements use the document object model (DOM) to change the document structure and content. Websites change their content based on visitors’ activities and behaviors to create a personalized experience.

However, these types of websites represent challenges for web scraping tools that are programmed to scrape and render static HTML elements. When a client makes a connection request to a web server, web scrapers with javascript rendering automatically process and render the target page content on the client’s browser.

Built-in proxy servers**

When collecting data on a large scale from well-protected websites, you must leverage proxy solution. Some web scraping services support proxies with their scrapers to prevent users’ identity from being revealed.

Type***

Web scraping services come in a variety of forms, including no-code scraping tool, scraping APIs or web scraping libraries. The choice between the tools will depend on your data collection needs and technical skills.

Comparing top data scraping tools: Features-based comparison

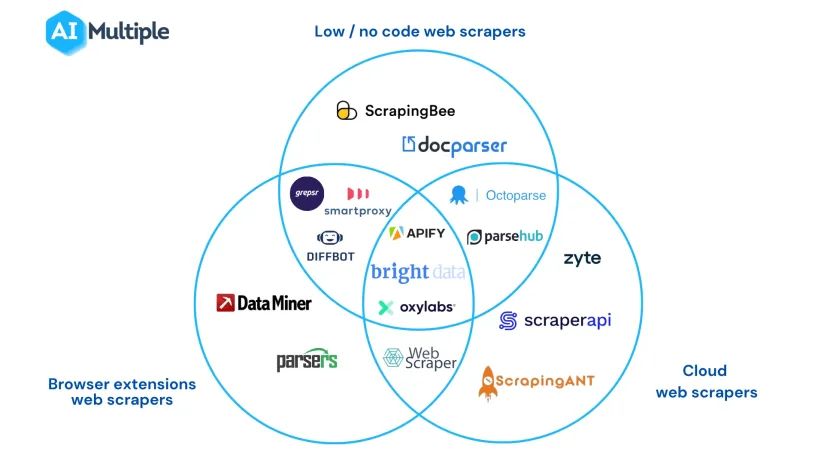

1. Bright Data

Bright Data is a web data platform that offers scraping solutions including Scraping Browser, Web Scraper IDE, and SERP API and proxy services for businesses and individuals. Web Scraper IDE allows users to extract data from any-geolocation while avoiding web scraping measures.

Features:

- Offers Offers built-in fingerprinting, CAPTCHA solving and proxy solutions to avoid anti-bot measures and IP blocks.

- Provides ready-made JavaScript functions and web scraper templates. Users can develop their data scraping tool using pre-built JavaScript functions and code templates.

- Allows you to handle errors and exceptions with built-in debug tools.

2. Apify

Apify is a cloud-based service equipped with an extensive array of tools aimed at facilitating large-scale web scraping, automation, and data extraction projects. The platform offers compatibility with a diverse range of cloud services and web applications, including Google Sheets, Slack, and GitHub.

Features:

- It features a collection of “actors,” which are specialized cloud-based programs capable of executing various automation functions, including ready-to-use scrapers for well-known sites such as Amazon, eBay, and Instagram.

- Users have the option to create custom actors utilizing the Apify Software Development Kit (SDK).

3. Smartproxy

Smartproxy offers no-code web scraper to gather data from JavaScript, AJAX, or other dynamic websites. It scrapes different data points including image URLs, and exports the extracted data in JSON or CSV format.

Features:

- Offers pre-made data extraction templates.

- Changes IPs at set intervals or after each request with the automatic proxy rotation feature.

- Allows you to store the scraped data on the cloud.

4. Oxylabs

Oxylabs Web Scraping API automate the process of data extraction from static and dynamic web pages. The scraping API enables users to gather data from multiple pages at once with up to 1000 URLs per batch.

Features:

- Provides built-in proxies and proxy management, and automatic proxy rotation capabilities.

- Handles failed scraping requests with auto-retry system. It allows you to try the failed attempts again after the failure.

- Executes and renders JavaScript web pages with headless browsers.

5. Nimble

Nimble is a platform specializing in web data collection, featuring various scraper APIs. The Web Scraping API notably includes features such as page interactions and parsing templates, making it useful for dealing with websites in areas like E-commerce and Search Engine Results Pages (SERP).

Features:

- Enables users to process a substantial quantity of URLs in one request, with the capability to handle up to 1,000 URLs simultaneously.

- All requests made through Nimble’s APIs are routed through a proxy network provided by Nimble IP.

- Allows users to perform a range of actions on a webpage during data collection, including clicking, typing, and scrolling. These page interactions function synchronously, executing each operation in a sequential manner, one after the other, with a total time limit of 60 seconds for all actions.

6. NetNut

NetNut provides a SERP Scraper API and various datasets, including those for professional profiles and company information. Pricing for company datasets begins at $800 for 50,000 requests, while professional profile datasets start at $750 for 100,000 requests.

Features:

- NetNut’s scraping solutions allow for customized data extraction plans. The data provided by NetNut is organized in tables and can be accessed in multiple formats, such as CSV and JSON.

- The SERP API offered by NetNut enables the retrieval of Google search engine results pages (SERPs), with options to tailor requests by incorporating specific criteria like geographic location, pagination, and language or country preferences for localization.

7. SOAX

SOAX e-Commerce API help businesses access and gather publicly available data on Amazon pages. You can extract various Amazon data points from search result, product or category pages. It can be used with any programming language.

Features:

- Offers smart pagination features. It navigates through multiple pages of Amazon automatically, and extract data from these pages. It is suitable for large scale of web scraping activities.

- Delivers the data you have scraped raw HTML or structured data in JSON format.

- Includes a testing feature, enabling users to test their code.

8. Zyte

Zyte API is a web scraping API that enables browser automation and automatic extraction of data from websites at scale. Only successful Zyte API responses are charged.

Features:

- Takes the screenshot of the target web page.

- Interact with the target web page. Supports 3 types of browser actions: generic actions, special actions, and browser scripts.

- Allows 1 automatic extraction property per API request.

9. Diffbot

Diffbot web scraping solution automatically classify and collect public data from web sources. They offer two APIs for data collection: Extract and Bulk. Extract leverages computer vision and NLP (natural language processing) to categorize and extract data into structured JSON. Bulk allows you to scrape large lists of exact URLs.

Features:

- Executes and renders page-level Javascript.

- Offers different types of APIs, like product APIs, image extraction APIs, and job APIs.

10. Octoparse

Octoparse web scraping service is UI-based solution that’s easy to understand and use for non-technical users. It scrapes AJAX, JAVA scripts, and other dynamic websites. However, it cannot collect data from XML Sitemap and PDF files. The web scraping solution is not capable of handling CAPTCHA automatically. They also offer free plan with limited scraping features.

Features:

- Allows you to create pagination with auto-detect feature.

- Offers Cloud servers to speed up data scraping process.

- Enables users to build their web scraper without the need for coding.

11. Scraper API

ScraperAPI enables individuals to scrape data from static and dynamic pages with JavaScript rendering and IP geo-targetting. However, they don’t support city level targeting with proxy solutions.

Features:

- Offers unblocking technologies for anti-scraping measures, including proxy rotation, CAPTCHA and anti-bot detection.

- Automatically converts JSON data into the native objects of the language you use with JSON auto parsing capability.

- Allows users to customize the User-Agent header, avoiding detection.

12. Nanonets

Nanonets provides OCR API to extract data from web pages. It can detect HTML tables, images and text, but cannot scrape images and videos. The scraping API can obtain data from Java, headless or static web pages. They also offer PDF scraper for businesses.

Features:

- Offers no-code user interface

- Automatically identify and extract tables from a web page.

What is a web scraping tool?

A web scraping tool is a software that supports extracting web data automatically.

These are the common types of tools that support web scraping:

- Web scrapers offer end-to-end web scraping capabilities

- Proxy servers enable web scrapers to defeat anti-scraping measures

Should you build an in-house web scraper using web scraping libraries?

Developers use web scraping libraries to build in-house web crawlers. In-house web crawlers can be heavily customized but take development and maintenance effort.

Web scraping libraries make it easy for developers to build web scrapers. However, they require their users to have

- basic programming knowledge

- familiarity with HTML structures, elements, and the basics of a necessary library such as Python.

Open source web scrapers are freely available for developers and enable users to modify and customize the source code based on their needs and purposes.

Advantages

- You can customize self-built web scrapers based on your particular web scraping needs and business requirements.

- Building your web scraper can be cheaper in the long run than using a pre-built web scraping tool.

- In-house web scraping gives businesses control over their data pipeline.

Disadvantages

- In-house web scraping tools require development and maintenance effort.

Should you use an off-the-shelf web scraper?

Pre-built web scrapers provide APIs or other User Interfaces (UIs) enabling technical or non-technical users to scrape data. These types of web scrapers can be downloaded and run on users’ machines or the cloud.

Advantages

- No code tools do not require technical knowledge.

- Customer support is provided

- These tools are maintained by their teams leaving your team to focus on business priorities

- Most websites change their HTML structure and website content according to users’ data to display customized content. Websites may also implement new anti-scraping measures. These pose a challenge to web scraping tools. Some of these tools offer web scraping or web data as a service. In such a scenario, the team behind the web scraper constantly updates the code to ensure that data is continuously collected.

Disadvantages

- Compared to in-house web scrapers, outsourcing the development of web scraping infrastructure can be more expensive.

What are the different types of off-the-shelf web scrapers?

1. Low code & no code web scrapers

No code web scrapers enable users to collect web data from web sources automatically without writing a single line of code. If you have limited knowledge of programming language and do not have a technical team to build your own web scraper, a no-code web scraper platform is a good option to automate your data collection projects. No code web scraping tools can be integrated with your browser.

Advantages

- Can be used by non-developers.

Disadvantages

- These solutions can be less flexible than code-based solutions.

2. Cloud web scrapers

Cloud-based web scraping is a type of web scraping that effectively handles large-scale web scraping and stores collected web data on the cloud.

Advantages

Unlike browser-extension-based web scrapers:

- Cloud-based scrapers can run many instances of crawlers and minimize the time spent on data scraping.

- When you build your web scraper, you need to save, and process scraped data in your machine, which can be inefficient for large web scraping projects. Cloud scraping enables businesses to store and process large amounts of web data on the cloud.

Disadvantages

- Can be harder to set up than browser-extension-based web scrapers.

3. Browser extensions web scrapers

Browser-extension-based web scrapers enable users to scrape websites and export data in various formats, such as CSV, XLSX, and JSON, directly from their browsers.

Figure 1: Web scraping decision tree

Limitations and next step

We relied on vendor claims to identify capabilities of tools. As we have a chance to try out these tools, we will update the above table with actual capabilities of these tools observed in our benchmarking

Web data scraping is an evolving market. If we missed any provider or our tables are outdated due to new vendors or new capabilities of existing tools, please leave a comment.

Transparency statement

AIMultiple serves numerous tech companies including Bright Data, Oxylabs and Smartproxy.

Further reading

- Web Crawling vs. Web Scraping: The Main Differences

- The Ultimate Guide to Proxy Server Types

- Top 5 Web Scraping Case Studies & Success Stories

For guidance to choose the right tool, check out data-driven list of web scrapers, and reach out to us:

Comments

Your email address will not be published. All fields are required.