LLMOPs vs MLOPs in 2024: Discover the Best Choice for You

In the rapidly evolving landscape of artificial intelligence and machine learning, new terminologies and concepts frequently emerge, often causing confusion among business leaders, IT analysts, and decision-makers. Among these, two terms have gained prominence: LLMOps vs MLOps.

While sounding similar, LLMOps and MLOps represent distinct approaches that can significantly impact how organizations harness the power of AI technologies.

This article compares LLMOps and MLOPs to equip you with the necessary insights, facilitating informed decisions tailored to your business objectives and technological needs.

What is LLMOps?

LLMOPS stands for Large Language Model Operations, denoting a strategy or system to automate and refine the AI development pipeline through the utilization of expansive language models. LLMOPs tools facilitate the continuous integration of these substantial language models as the underlying backend or driving force for AI applications.

Key Components of LLMOps:

1.) Selection of a Foundation Model: A starting point dictates subsequent refinements and fine-tuning to make foundation models cater to specific application domains.

2.) Data Management: Managing extensive volumes of data becomes pivotal for accurate language model operation.

3.) Deployment and Monitoring Model: Ensuring the efficient deployment of language models and their continuous monitoring ensures consistent performance.

4.) Evaluation and Benchmarking: Rigorous evaluation of refined models against standardized benchmarks helps gauge the effectiveness of language models.

What is MLOps?

MLOps, short for Machine Learning Operations, constitutes a structured approach aimed at automating and enhancing the AI development process’s operational aspects by leveraging machine learning’s power. Practical implementations of MLOps allow the seamless integration of machine learning as the foundational powerhouse for AI applications.

Key Components of MLOps:

- Establishing Operational Capabilities: Creating the infrastructure to deploy ml models in real-world scenarios is a cornerstone of MLOps.

- Workflow Automation: Automating the machine learning workflow, encompassing data preprocessing, model training, deployment, and continuous monitoring, is a fundamental objective of MLOps.

How Is LLMOps Different Than MLOps?

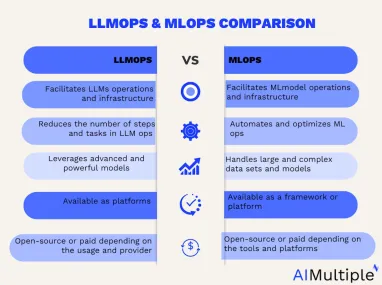

LLMOps is specialized and centred around utilising large language models. At the same time, MLOps has a broader scope encompassing various machine learning models and techniques. In this sense, LLMOps are known as MLOps for LLMs. Therefore, these two diverge in their specific focus on foundational models and methodologies:

Computational resources

Training and deploying large language models and refining extensive ones involve significantly more computations on substantial datasets. Specialized hardware like GPUs is deployed for accelerated data-parallel operations. Access to such dedicated computational resources becomes pivotal for model training and deployment. Moreover, addressing the inference cost underscores the significance of model compression and distillation techniques.

Transfer learning

Unlike conventional ML models built from the ground up, LLMs frequently commence with a base model, fine-tuned with fresh data to optimize performance for specific domains. This fine-tuning facilitates state-of-the-art outcomes for particular applications while utilizing less data and computational resources.

Human feedback

Advancements in training large language models are attributed to reinforcement learning from human feedback (RLHF). Given the open-ended nature of LLM tasks, human input from end users holds considerable value for evaluating model performance. Integrating this feedback loop within LLMOps pipelines simplifies assessment and gathers data for future model refinement.

Hyperparameter tuning

While conventional ML involves hyperparameter tuning primarily to enhance accuracy, LLMs introduce an added dimension of reducing training and inference costs. Adjusting parameters like batch sizes and learning rates can substantially influence training speed and cost. Consequently, meticulous tuning process tracking and optimisation remain pertinent for both classical ML models and LLMs, albeit with varying focuses.

Performance metrics

Traditional ML models rely on well-defined metrics such as accuracy, AUC, and F1 score, which are relatively straightforward to compute. In contrast, evaluating LLMs entails an array of distinct standard metrics and scoring systems—like bilingual evaluation understudy (BLEU) and Recall-Oriented Understudy for Gisting Evaluation (ROUGE)—that necessitate specialized attention during implementation.

Prompt engineering

Models that follow instructions can handle intricate prompts or instruction sets. Crafting these prompt templates is critical for securing accurate and dependable responses from LLMs. Effective, prompt engineering mitigates the risks of model hallucination, prompt manipulation, data leakage, and security vulnerabilities.

Constructing LLM pipelines

LLM pipelines string together multiple LLM invocations and may interface with external systems such as vector databases or web searches. These pipelines empower LLMs to tackle intricate tasks like knowledge base Q&A or responding to user queries based on a document set. In LLM application development, the emphasis often shifts towards constructing and optimizing these pipelines instead of creating novel LLMs.

LLMOPS vs MLOPS: Pros and Cons

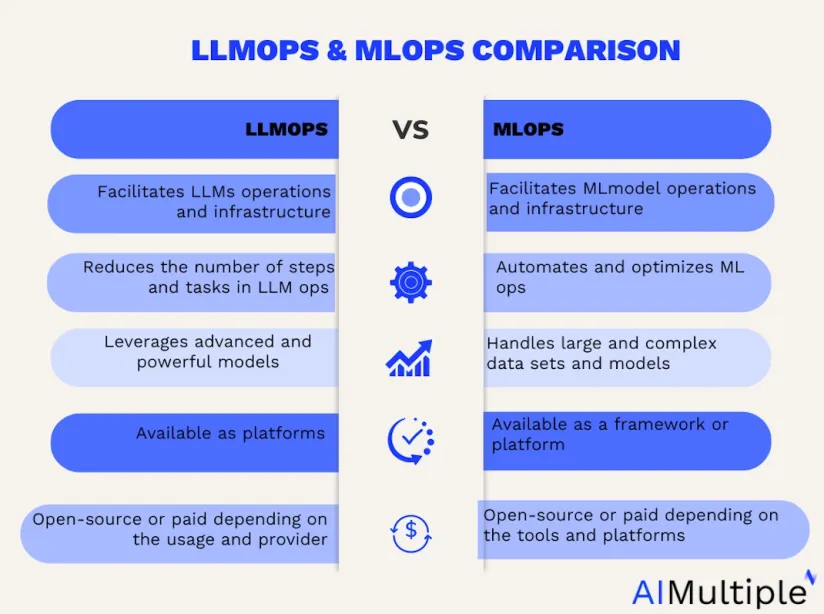

While deciding which one is the best practice for your business, it is important to consider benefits and drawbacks of each technology. Let’s dive deeper into the pros and cons of both LLMOps and MLOps to compare them better:

LLMOPS Pros

- Simple development: LLMOPS simplifies AI development significantly compared to MLOPS. Tedious tasks like data collection, preprocessing, and labeling become obsolete, streamlining the process.

- Easy to model and deploy: The complexities of model construction, testing, and fine-tuning are circumvented in LLMOPS, enabling quicker development cycles. Also, deploying, monitoring, and enhancing models are made hassle-free. You can leverage expansive language models directly as the engine for your AI applications.

- Flexible and creative: LLMOPS offers greater creative latitude due to the diverse applications of large language models. These models excel in text generation, summarization, translation, sentiment analysis, question answering, and beyond.

- Advanced language models: By utilizing advanced models like GPT-3, Turing-NLG, and BERT, LLMOPS enables you to harness the power of billions or trillions of parameters, delivering natural and coherent text generation across various language tasks.

LLMOPS Cons

- Limitations and quotas: LLMOPS comes with constraints such as token limits, request quotas, response times, and output length, affecting its operational scope.

- Risky and complex integration: As LLMOPS relies on models in beta stages, potential bugs and errors could surface, introducing an element of risk and unpredictability. Also, Integrating large language models as APIs requires technical skills and understanding. Scripting and tool utilization become integral components, adding to the complexity.

MLOPS Pros

- Simple development process: MLOPS streamlines the entire AI development process, from data collection and preprocessing to deployment and monitoring.

- Accurate and reliable: MLOPS ensures the accuracy and reliability of AI applications through standardized data validation, security measures, and governance practices.

- Scalable and robust: MLOPS empowers AI applications to handle large, complex data sets and models seamlessly, scaling according to traffic and load demands.

- Access to diverse tools: MLOPS provides access to many tools and platforms like cloud computing, distributed computing, and edge computing, enhancing development capabilities.

MLOPS Cons

- Complex to deploy: MLOPS introduces complexity, demanding time and effort across various tasks like data collection, preprocessing, deployment, and monitoring.

- Less flexible and creative: While versatile, MLOPS confines the application of machine learning to specific purposes, often employing less sophisticated models than expansive language models.

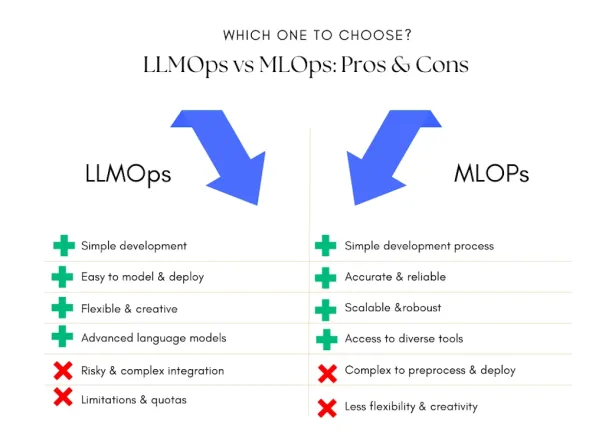

Which one to choose?

Choosing between MLOps and LLMOps depends on your specific goals, background, and the nature of the projects you’re working on. Here are some instructions to help you make an informed decision:

1. Understand your goals: Define your primary objectives by asking whether you focus on deploying machine learning models efficiently (MLOps) or working with large language models like GPT-3 (LLMOps).

2. Project requirements: Consider the nature of your projects by checking if you primarily deal with text and language-related tasks or with a wider range of machine learning models. If your project heavily relies on natural language processing and understanding, LLMOps is more relevant.

3. Resources and infrastructure: Think about the resources and infrastructure you have access to. MLOps may involve setting up infrastructure for model deployment and monitoring. LLMOps may require significant computing resources due to the computational demands of large language models.

4. Evaluate expertise and team composition: Assess your in-house skill set by asking if you are more experienced in machine learning, software development, or both? Do you have members with machine learning, DevOps, or both expertise? MLOps often involves collaboration between data scientists, software engineers, and DevOps professionals and requires expertise in deploying, monitoring, and managing machine learning models. LLMOps involve working with large language models, understanding their capabilities, and integrating them into applications.

5. Industry and use cases: Explore the industry you’re in and the specific use cases you’re addressing. Some industries may heavily favour one approach over the other. LLMOps might be more relevant in industries like content generation, chatbots, and virtual assistants.

6. Hybrid approach: Remember that there’s no strict division between MLOps and LLMOps. Some projects may require a combination of both systems.

Why do we need LLMOps?

The necessity for LLMOps arises from the potential of large language models in revolutionizing AI development. While these models possess tremendous capabilities, effectively integrating them requires sophisticated strategies to handle complexity, promote innovation, and ensure ethical usage.

Real-World Use Cases of LLMOps

In practical applications, LLMOps is shaping various industries:

Content Generation: Leveraging language models to automate content creation, including summarization, sentiment analysis, and more.

Customer Support: Enhancing chatbots and virtual assistants with the prowess of language models.

Data Analysis: Extracting insights from textual data, enriching decision-making processes.

Further reading

Explore more on LLMOps, MLOps and AIOPs by reading:

- 15 Best AiOps Platforms: Streamline IT Ops with AI

- ChatGPT AIOps in IT Automation: 8 Powerful Examples

If you believe your business can benefit from these technologies, then compare vendors through our comprehensive and constantly updated vendor lists for MLOPs platforms and LLMs.

If you have more questions, let us know:

Comments

Your email address will not be published. All fields are required.