MLOps: In-depth Guide to Benefits, Examples & Tools for 2024

Building machine learning models and applying them to business processes requires collaboration between data scientists, data engineers, designers, business professionals, and IT professionals. Efficient collaboration and orchestration are especially critical for businesses that want to adopt AI and ML at scale. Adopting AI at scale leads to a three-fold increase in ROI over companies in the AI proof-of-concept stage.

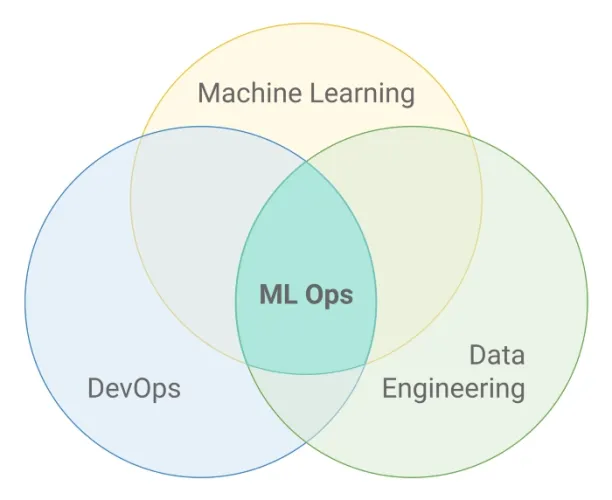

Inspired by DevOps practices for software development, MLOps brings diverse teams in an organization together to speed up the development and deployment of machine learning models. In this article, we’ll provide an in-depth guide to MLOps, explain how it helps streamline end-to-end ML processes, and share some case studies from companies that have adopted it.

What is MLOps?

MLOps (Machine Learning Operations) is a set of practices that standardize and streamline the building and deployment of machine learning systems. It covers the entire lifecycle of a machine learning application, from data collection to model management.

MLOps vs. ModelOps

The term ModelOps is commonly used in two ways:

- Meaning 1: ModelOps is the operationalization of all types of artificial intelligence models. This includes the automation and management of, for instance, rule-based AI models as well as machine learning models. In this sense, ModelOps is a superset of MLOps, which deals only with ML models.

- Meaning 2: MLOps and ModelOps are used interchangeably, since rule-based AI models are becoming less common and most data scientists focus only on ML-based models.

MLOps vs AIOps

MLOps and AIOps can resemble each other, but they differ in terms of:

1. Main Goal:

- MLOps: Improve the management and deployment of machine learning models in production environments.

- AIOps: Enhance the efficiency and effectiveness of IT operations by leveraging AI-driven insights and automation.

2. Application Area:

- MLOps: Applied to machine learning model development and deployment, ensuring efficient collaboration, automation, and scalability.

- AIOps: Applied to IT infrastructure management and monitoring, optimizing operational efficiency, incident resolution, and resource allocation.

3. Automation:

- MLOps: Emphasizes automation of machine learning workflows, including model training, testing, and deployment.

- AIOps: Leverages AI-driven automation to perform tasks such as incident detection, root cause analysis, and remediation.

Discover how AIOps uses generative AI and explore the 15+ best AIOps platforms.

How is MLOps different from DevOps?

Similar to DevOps, MLOps aims to bridge the gap between design, development, and operations in an organization. However, there is a fundamental difference between the two: Machine learning works with data and data is always changing. So a machine learning system should constantly learn and adapt to new inputs. This brings new challenges and differentiates MLOps from DevOps:

- Continuous integration/continuous delivery (CI/CD) in DevOps automates testing and validating code changes from multiple contributors, integrating them into a single software project, and releasing it to production. In MLOps, CI/CD involves continuously testing, validating, and integrating data and models in addition to code. Check our article on CI/CD pipelines in machine learning for more.

- Continuous training (CT) is a property of MLOps that’s not a part of DevOps systems. With continuous training, the model is retrained continuously to adapt to changes in the training data.

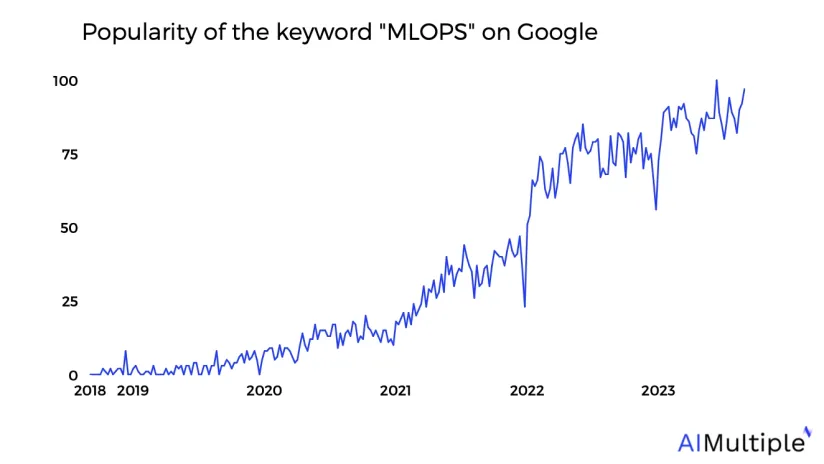

What is the level of interest in MLOps?

According to a report by Deloitte, the market for MLOps solutions is expected to grow from $350 million in 2019 to $4 billion by 2025. As machine learning applications become a key component in organizations, businesses realize that they need a systematic and automated way to implement ML models. The graph below reflects this interest.

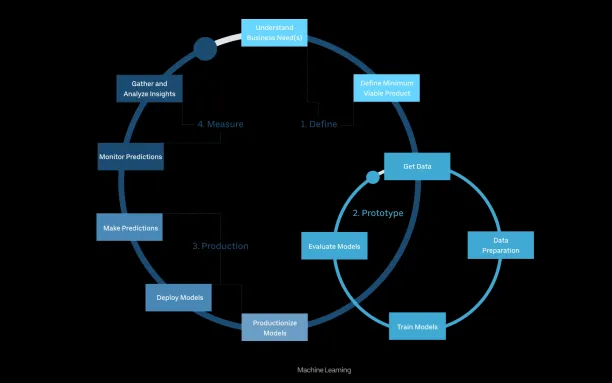

What are the components of the machine learning lifecycle?

A typical machine learning model development process consists of:

- Determining the business objective

- Data collection and exploration

- Data processing and feature engineering

- Model training

- Model testing and validation

- Model deployment

- Model monitoring

For more, feel free to read a detailed explanation of the machine learning life cycle steps.

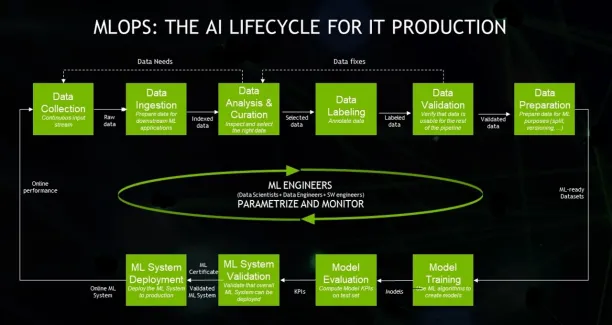

How does MLOps contribute to the ML lifecycle?

MLOps involves creating an end-to-end machine learning pipeline to automate the retraining of existing models and deployment of new models.

If you have only one algorithm that does not require frequent adjustments to a changing business environment, having a small data science team deploy your machine learning application manually can be sufficient. However, if you want to scale your machine learning applications, you need an automated process. An automated machine learning pipeline improves these steps of the ML lifecycle:

Data processing and feature engineering

- Automated data labeling: MLOps tools can automate part of your data labeling process, which takes a significant amount of time and is prone to errors when done manually.

- Automated feature engineering and feature stores: Features are generated automatically from raw data. Created features can be standardized and stored in feature stores, or feature factories, for easier reuse. Feature engineering requires domain knowledge about the business problem, so some manual work is still needed, but automation and feature stores free up considerable time.
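The reuse idea behind a feature store can be sketched in a few lines. This is a minimal in-memory illustration, not the API of any particular product: `FeatureStore`, `register`, `compute`, and the two example features are hypothetical names chosen for this sketch.

```python
from typing import Callable, Dict, List


class FeatureStore:
    """Hypothetical in-memory store mapping a feature name to the function
    that derives it from a raw record."""

    def __init__(self) -> None:
        self._features: Dict[str, Callable[[dict], float]] = {}

    def register(self, name: str, fn: Callable[[dict], float]) -> None:
        # Each feature is defined once so that every model reuses the same logic.
        if name in self._features:
            raise ValueError(f"feature {name!r} is already registered")
        self._features[name] = fn

    def compute(self, record: dict, names: List[str]) -> Dict[str, float]:
        # Build the requested features for one raw record.
        return {name: self._features[name](record) for name in names}


store = FeatureStore()
store.register("order_value", lambda r: r["price"] * r["quantity"])
store.register("is_weekend", lambda r: 1.0 if r["weekday"] >= 5 else 0.0)

raw = {"price": 12.5, "quantity": 4, "weekday": 6}
features = store.compute(raw, ["order_value", "is_weekend"])
```

Because the definitions live in one place, a second model that needs `order_value` requests it by name instead of reimplementing it.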

Model training

- Automated hyperparameter optimization: Automatically search and select hyperparameters that give optimal performance for machine learning models.

- Automated data validation: New data is checked to confirm that it meets certain properties before the model trains on it. If it does not, the pipeline can be stopped for investigation by data scientists.

- Continuous training (CT): The model is automatically retrained with new data once that data has passed validation. For a model that is already deployed, retraining is started by pipeline triggers.
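The data validation and hyperparameter optimization steps above can be sketched as follows. This is a toy illustration under stated assumptions: the row schema (`x`, `y`), the allowed range for `x`, the nearest-neighbour model, and all function names are hypothetical, and grid search is only one of several possible search strategies.

```python
from statistics import mean


def validate_rows(rows, required=("x", "y"), x_range=(0.0, 100.0)):
    """Return a list of problems; an empty list means the data may be used."""
    problems = []
    for i, row in enumerate(rows):
        for key in required:
            if row.get(key) is None:
                problems.append(f"row {i}: missing {key}")
        x = row.get("x")
        if x is not None and not (x_range[0] <= x <= x_range[1]):
            problems.append(f"row {i}: x={x} outside {x_range}")
    return problems


def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda r: abs(r["x"] - x))[:k]
    return mean(r["y"] for r in nearest)


def search_k(train, valid, candidates):
    """Grid search: pick the k with the lowest mean absolute validation error."""
    def error(k):
        return mean(abs(knn_predict(train, r["x"], k) - r["y"]) for r in valid)
    return min(candidates, key=error)


train = [{"x": float(x), "y": 2.0 * x} for x in range(0, 20, 2)]
valid = [{"x": float(x), "y": 2.0 * x} for x in range(1, 20, 4)]

# Validation gate: stop the pipeline before training if the data looks wrong.
problems = validate_rows(train + valid)
if problems:
    raise SystemExit(f"pipeline stopped for investigation: {problems}")

best_k = search_k(train, valid, candidates=[1, 2, 3, 5])
```

The search only runs after the validation gate passes, which mirrors the order of the steps in an automated training pipeline.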

MLOps also involves tools to improve reproducibility of ML and AI development and training processes with:

- Experiment tracking: Keeping track of important information about different experiments during model training, such as the ML models, hyperparameters, training datasets, and code used.

- Model registry: It archives and stores all models and their metadata information in a central repository to access and retrieve models later.

- ML metadata store: A central repository for storing the metadata of ML models, such as who created each model version, when it was created, its training data and parameters, where it is stored, and its performance metrics.

- Model versioning: Tracks and manages the changes in ML models over time.
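The reproducibility tools listed above share one idea: every trained model is recorded together with the information needed to find and recreate it. The sketch below is a minimal in-memory illustration; `ModelVersion`, `ModelRegistry`, the field names, and the example values are all hypothetical, and a real registry would persist its records to a database or object store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List


@dataclass
class ModelVersion:
    name: str
    version: int
    params: Dict[str, Any]        # hyperparameters used for this run
    metrics: Dict[str, float]     # performance metrics of this version
    training_data: str            # identifier of the dataset snapshot
    created_by: str
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ModelRegistry:
    """Hypothetical in-memory registry that versions models per name."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name, params, metrics, training_data, created_by) -> ModelVersion:
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(history) + 1, params, metrics, training_data, created_by)
        history.append(entry)
        return entry

    def latest(self, name: str) -> ModelVersion:
        return self._versions[name][-1]

    def best(self, name: str, metric: str) -> ModelVersion:
        return max(self._versions[name], key=lambda v: v.metrics[metric])


registry = ModelRegistry()
registry.register("churn", {"max_depth": 3}, {"auc": 0.81}, "customers_2024_01", "alice")
registry.register("churn", {"max_depth": 5}, {"auc": 0.84}, "customers_2024_01", "bob")
registry.register("churn", {"max_depth": 9}, {"auc": 0.79}, "customers_2024_02", "alice")

best = registry.best("churn", "auc")
```

Here `registry.latest("churn")` returns the most recent version, while `best` holds the version with the highest recorded metric, along with the parameters and dataset identifier needed to reproduce it.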

Model testing and validation

Automated model validation: After training, the model’s performance is evaluated before it goes to production by computing performance metrics and comparing the new model with earlier versions of the model.
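A validation gate of this kind can be sketched as a function that compares a candidate model with the current production model on the same holdout set. The thresholds, the accuracy metric, and the names `should_promote`, `candidate_model`, and `production_model` are assumptions made for this illustration.

```python
def accuracy(predict, examples):
    """Share of (input, label) pairs that the model predicts correctly."""
    correct = sum(1 for x, label in examples if predict(x) == label)
    return correct / len(examples)


def should_promote(candidate, production, holdout, min_accuracy=0.8, min_gain=0.0):
    """Promote only if the candidate clears an absolute bar and is at least
    as good as the model currently in production."""
    cand = accuracy(candidate, holdout)
    prod = accuracy(production, holdout)
    decision = cand >= min_accuracy and (cand - prod) >= min_gain
    return decision, cand, prod


# Toy holdout set of (feature, label) pairs.
holdout = [(1, 0), (3, 0), (4, 0), (6, 1), (8, 1), (9, 1)]


def production_model(x):
    return int(x > 3)   # misclassifies x = 4


def candidate_model(x):
    return int(x > 5)


promote, cand_acc, prod_acc = should_promote(candidate_model, production_model, holdout)
```

In an automated pipeline, a `False` decision would stop the deployment step and keep the existing model in production.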

Model deployment

Continuous delivery and continuous deployment (CD) of the model: The trained and validated model is delivered as a prediction service and deployed automatically to the production environment, where it serves predictions. Two deployment methods can be used:

- Online inference in which the model provides outputs in real-time often through an API endpoint.

- Batch inference in which the model runs periodically and provides results with some latency.
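The difference between the two methods can be sketched with a single stand-in model served in two ways. The `predict` rule, the record fields, and the function names are hypothetical and only serve to illustrate the calling pattern.

```python
def predict(features):
    """Hypothetical stand-in model: flags orders above a fixed value."""
    return {"high_value": features["order_value"] > 100}


# Online inference: one request in, one prediction out, answered immediately.
def handle_request(payload):
    return predict(payload)


# Batch inference: score an accumulated set of records in one periodic run.
def run_batch(records):
    return [{"id": r["id"], **predict(r)} for r in records]


online_result = handle_request({"order_value": 250})
batch_results = run_batch([
    {"id": 1, "order_value": 40},
    {"id": 2, "order_value": 180},
])
```

In practice, something like `handle_request` would typically sit behind an API endpoint, while something like `run_batch` would be started by a scheduler and write its results to storage.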

Check our article on model deployment for a detailed account of both methods.

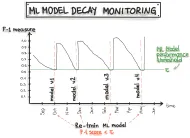

Model monitoring

Monitoring automation: The pipeline is monitored continuously in real time to ensure that model performance stays above a threshold and to detect model and data drift. When a check fails, a trigger executes a new iteration of the pipeline.
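A monitoring check of this kind can be sketched with two simple tests: one for data drift and one for model performance. The drift rule used here (the shift of the live mean, measured in training standard deviations) is a deliberately simple heuristic chosen for illustration, and the thresholds and function names are assumptions.

```python
from statistics import mean, stdev


def data_drift(train_values, live_values, max_shift=2.0):
    """Flag drift when the live mean moves more than max_shift training
    standard deviations away from the training mean."""
    shift = abs(mean(live_values) - mean(train_values)) / stdev(train_values)
    return shift > max_shift


def performance_ok(recent_correct, min_accuracy=0.9):
    """recent_correct holds one boolean per recently labeled prediction."""
    return sum(recent_correct) / len(recent_correct) >= min_accuracy


def needs_retraining(train_values, live_values, recent_correct):
    """Request a new pipeline iteration if either check fails."""
    return data_drift(train_values, live_values) or not performance_ok(recent_correct)


train_ages = [30, 32, 35, 31, 29, 33, 34, 30]
live_ages = [52, 55, 49, 58, 51]            # customers are now much older
recent_correct = [True] * 19 + [False]      # 95% of recent predictions correct

retrain = needs_retraining(train_ages, live_ages, recent_correct)
```

In this toy data the accuracy check still passes, but the input distribution has shifted, so the function asks for retraining before the performance drop becomes visible.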

Pipeline automation

In order to ensure that these steps are completed in a sequence, MLOps involves:

- Automated transition between steps: Transition between the steps of the ML lifecycle is automated. This enables experimenting with new models rapidly.

- Continuous integration (CI) of the pipeline: Code and pipeline components from various developers are tested, validated, and integrated automatically.

- Continuous delivery (CD) of the pipeline: Integrated code and components are deployed to the target environment.

- Pipeline triggers: Trigger the pipeline to retrain the deployed model. It can be triggered:

  - on a schedule, if new data is constantly available

  - with the availability of new data

  - if the model performance degrades to a certain level

  - on demand
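The four trigger types above can be sketched as a single function that inspects the pipeline state and returns the reasons for a retraining run. `PipelineState`, `retrain_reasons`, and the default thresholds are hypothetical names and values chosen for this illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class PipelineState:
    last_run: datetime
    new_rows: int                 # rows that arrived since the last run
    current_accuracy: float       # latest monitored model accuracy
    manual_request: bool = False  # set when someone asks for a run


def retrain_reasons(
    state: PipelineState,
    now: datetime,
    interval: timedelta = timedelta(days=7),
    min_new_rows: int = 1000,
    min_accuracy: float = 0.9,
) -> List[str]:
    """Return every trigger that currently fires; an empty list means no run."""
    reasons = []
    if now - state.last_run >= interval:
        reasons.append("schedule")
    if state.new_rows >= min_new_rows:
        reasons.append("new_data")
    if state.current_accuracy < min_accuracy:
        reasons.append("performance_degradation")
    if state.manual_request:
        reasons.append("on_demand")
    return reasons


now = datetime(2024, 3, 15)
state = PipelineState(last_run=datetime(2024, 3, 12), new_rows=2500, current_accuracy=0.93)
reasons = retrain_reasons(state, now)
```

Returning the list of reasons rather than a single boolean makes it possible to log why each retraining run was started.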

Why is MLOps important now?

MLOps is important for every organization that deploys machine learning solutions because:

- It standardizes the ML development process. It provides a unified framework to follow and facilitates communication between data scientists, subject matter experts, software engineers, ML engineers, and operations professionals.

- It mitigates risks by enabling continuous model monitoring and adjustment. As business environments change, the data that the model makes predictions on also changes. This means the model quality can deteriorate if new data is fundamentally different from the training dataset. The change in the model accuracy can harm your business, depending on the use case.

- It improves reproducibility in AI and ML. Reproducibility is important because it increases reliability and predictability in ML. MLOps provides best practices and tools to improve reproducibility. Check our article on reproducible AI for more.

- It helps to increase the scalability of ML projects. Building and maintaining multiple models, and controlling the complex relationships between them, requires MLOps.

What are example case studies?

- Uber scaled machine learning for a diverse set of applications such as estimating meal arrival times, forecasting demand for drivers in different locations, and customer support. They explain that successfully implementing machine learning at scale takes more than getting the technology right. It also involves efficient coordination between different teams. To standardize the workflow across teams, they created their machine learning platform, Uber Michelangelo.

- Booking.com has around 150 different machine learning models in production. They explain that an iterative, hypothesis-driven process that is integrated with other disciplines was a fundamental part of building and deploying 150 machine learning products.

- Cevo built an automated ML pipeline for its financial sector customer who wanted to deploy and maintain multiple ML models to detect and prevent fraud. They claim that by applying MLOps concepts to the project, their customer has been able to reduce the time to train and deploy ML models from months to days. For instance, a model capable of detecting new types of fraud each month was generated in just 3 hours.

Check our comprehensive article on MLOps case studies for more real-world examples.

What are the categories of tools that support MLOps?

MLOps platforms facilitate end-to-end ML lifecycle management from deploying and managing to monitoring all machine learning models in a single platform. MLOps tools can be broken down into these categories:

- Feature engineering tools. Most AutoML software provides this capability.

- Experiment tracking tools

- AI platforms for building models

- Model risk/performance measurement software

For specific tools and platforms, you can check our article on MLOps tools that explores both closed and open-source MLOps tools. You can also check our data-driven list of MLOps platforms.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month.
