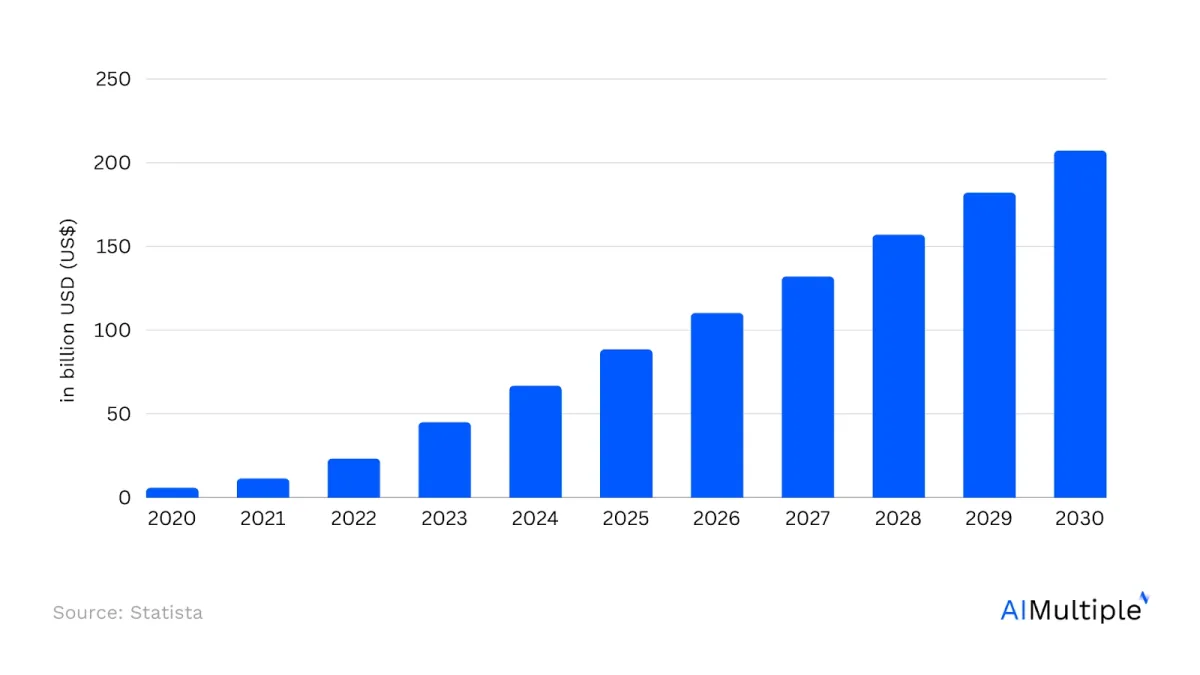

In the expanding AI and generative AI market (Figure 1), large language models (LLMs) have emerged as pivotal. These models empower machines to generate human-like content, heavily reliant on quality data.

Here, we present a guide for business leaders on accessing and managing LLM data, offering insights into collection methods and data collection services.

Figure 1. Generative AI market1

How do we gather data for LLMs?

This section highlights some popular methods of obtaining relevant data to develop large language models.

Table 1. Comparing the six methods of obtaining data for LLMs

| Methods | Cost Effectiveness | Speed | Customization Level | Privacy Level |

|---|---|---|---|---|

| Crowdsourcing | Medium | High | High | Medium |

| Managed data collection | Low | Medium | High | High |

| Automated data collection | High | High | Medium | Low |

| Automated data generation (Synthetic data) | High | High | High | Medium |

| Licensed data sets | Medium | High | Low | Low |

| Institutional partnerships | Medium | Medium | High | Medium |

- These are broad estimates, and they may differ for individual projects’ characteristics.

- We made these estimates based on our research and the data from websites and B2B review platforms.

LLM data gathering methods

1. Crowdsourcing

You can partner with a data crowdsourcing platform or service as an effective means of gathering data for LLM training. This method leverages a vast global network of individuals to accumulate or label data. It engages people from diverse backgrounds and geographies to gather unique and varied data points.

Advantages:

- Access to a diverse and expansive range of data points. Since the contributors are located worldwide, the dataset is much more varied.

- Often more cost-effective than traditional data collection methods since there are no additional expenses.

- Accelerates data gathering due to simultaneous contributions from multiple sources.

Challenges:

- Quality assurance can be tricky with varied contributors since you can not physically monitor the work.

- Ethical considerations, especially concerning fair compensation. Many companies like Amazon Mechanical Turk have been penalized for unfair compensation practices in their crowdsourcing platforms.

Here is a more comprehensive list of data crowdsourcing platforms

2. Managed data collection

Partnering with a data collection service can significantly enhance the process of training large language models (LLMs) by providing a vast and diverse dataset crucial for their development. These services specialize in aggregating and organizing large volumes of data from various sources, ensuring that the data is extensive and representative of different languages, regions, and topics.

This diversity is essential for training LLMs to understand and generate human-like responses across various subjects and languages.

Advantages:

- Quality and diversity of data: Data collection services often have access to high-quality and diverse datasets, which are essential for training robust and versatile language models.

- Efficiency: Outsourcing data collection can save time and resources, allowing developers to focus on model development and refinement rather than on the labor-intensive data gathering process.

- Cost: This can be cheaper than in-house data collection or partnering with an institute.

Challenges:

- Dependence on external sources: Relying on external services for data can create dependencies and potential risks related to data availability, service continuity, and changes in data policies.

- Data privacy and ethics: Data collected by third-party services may raise concerns about privacy, consent, and ethical use, especially if the data includes sensitive or personal information.

- Cost: Working with a partner can be more expensive than off-the-shelf datasets.

3. Automated data collection

Automated data collection methods like web scrapers can be used to extract vast amounts of open-source textual data from websites, forums, blogs, and other online sources.

For instance, an organization working on improving an AI-powered news aggregator might deploy web scraping tools to collate articles, headlines, and news snippets from global sources to understand different writing styles and formats.

Advantages:

- Access to a virtually limitless pool of data spanning countless topics.

- Continuous updates are due to the ever-evolving nature of the internet.

- Much faster and inexpensive compared to other modes of collecting language data.

Challenges:

- Ensuring data relevance and filtering out noise can be time-consuming.

- Navigating intellectual property rights and permissions can be challenging and expensive. Many online platforms now charge companies for scraping their data, and developers who scrape without permission may face lawsuits.

Watch this video to see how OpenAI was sued for stealing data from popular authors for training its large language model GPT-3:

3.1. Automated data generation (i.e., Synthetic data)

You can also employ AI models or simulations to produce synthetic yet realistic datasets.

For instance, if a virtual shopping assistant chatbot lacks real customer interactions, it can use natural language processing AI to simulate potential customer queries, feedback, and transactional conversations.

Advantages:

- Quick generation of vast datasets tailored to specific needs.

- Reduced dependency on real-world data collection, which can be time-consuming or resource-intensive.

Challenges:

- Ensuring the synthetic data closely mirrors real-world scenarios can be challenging since even powerful AI models sometimes can not provide accurate data.

- Synthetic data cannot work on its own. You will still require human-generated data to add to the synthetic data.

Here is an article comparing the top synthetic data solutions on the market.

4. Licensed data sets

Directly buy datasets or obtain licenses to use them for training purposes. Online platforms and other forums are now selling their data. For instance, Reddit recently started charging AI developers to access its user-generated data.2

Advantages:

- Immediate access to large, often well-structured datasets.

- Clarity on usage rights and permissions.

Challenges:

- It can be costly, especially for niche or high-quality datasets.

- Potential data usage, modification, or sharing limitations based on licensing agreements.

4.1. Institutional partnerships

Collaborating with academic institutions, research bodies, or corporations to gain proprietary datasets can significantly enhance the depth and quality of data available for specialized projects or research. Such collaborations enable access to a wealth of domain-specific information that might not be publicly available, providing a richer foundation for developing more accurate and effective tools or models.

For example, a firm specializing in legal AI tools could greatly benefit from partnering with law schools and legal institutions, gaining access to an extensive array of legal documents, case studies, and scholarly articles.

This would broaden the scope of their data pool and ensure that their AI tools are trained on high-quality, relevant information, making them more efficient and reliable in legal contexts.

These collaborations can also foster a mutually beneficial exchange of expertise and innovation, leading to advancements in both academic research and practical applications.

Advantages:

- Gaining specialized, meticulously curated datasets.

- Mutual benefits – while the AI firm gains data, the institution might receive advanced AI tools, research assistance, or financial compensation.

- The data is legal and not subject to lawsuits.

Challenges:

- Establishing and upholding trustworthy partnerships can be challenging since different organizations have different agendas and priorities.

- Balancing data sharing with privacy protocols and ethical considerations can also be challenging since not all organizations trust others with their data.

Why is data important for LLMs?

Large language models work by analyzing vast amounts of text data and learning patterns and structures in a language using techniques like neural networks. When given a prompt or question, they generate responses based on this learned knowledge, predicting the most likely sequence of words or sentences that should follow. The language model’s performance relies on adequate training Data. This data aids in:

- Understand complex sentences: Context is vital, and having vast amounts of varied data allows LLMs to comprehend intricate structures.

- Sentiment analysis: Gauging customer sentiment or interpreting user intent from text requires many examples.

- Specific tasks: Whether translating languages or text classification, specialized data helps fine-tune models for dedicated tasks like understanding contextually relevant text.

However, sourcing this data isn’t always straightforward. With growing concerns about privacy, intellectual property, and ethical considerations, obtaining high-quality, dive

Recommendations on LLM data gathering methods

With each method offering unique advantages and challenges, AI firms and researchers must weigh their needs, resources, and goals to determine the most effective strategies for sourcing LLM data. As businesses seek more advanced LLM capabilities, the need for novel data collection techniques to support these models will only grow.

FAQ

What are large language models?

Large Language Models LLMs represent a class of AI systems trained on massive text corpora to understand and generate human-like language. These models utilize deep learning techniques and massive datasets to understand natural language and generate human-like responses across multiple languages.

Popular examples include Generative Pre-trained Transformers (GPT series) and Bidirectional Encoder Representations (BERT).

How are LLMs impacting the tech industry?

Large Language Models (LLMs) are integral to several key applications:

In Conversational AI, LLMs power customer service chatbots, enhancing efficiency by understanding user inputs and generating human-like interactions, making automated customer support more user-friendly and efficient.

For Language Translation, LLMs leverage deep learning techniques to provide accurate translations of everyday conversations and complex legal documents, overcoming language barriers and fostering global communication.

In Programming, advanced LLMs assist in writing software code, using techniques like fine-tuning and contextually relevant text generation to streamline the coding process and empower business users with varying technical expertise to participate in software development.

In Scientific Research, LLMs decode complex scientific jargon into accessible language, aiding researchers in data interpretation and accelerating insights across diverse domains of scientific research.

Further reading

- Top 7 Generative AI Services & Vendors

- Top 3 Appen Alternatives

- Top 3 Amazon Mechanical Turk Alternatives & Evaluation

Comments

Your email address will not be published. All fields are required.