LaMDA Google's Language Model: Could It Be Sentient? [2024]

![LaMDA Google's Language Model: Could It Be Sentient? [2024]](https://research.aimultiple.com/wp-content/uploads/2021/06/lamda-824x412.png.webp)

Most observers would not describe Google LaMDA as sentient or conscious, although the question cannot be answered definitively. What is clear is that LaMDA is one of the most advanced language systems. Read on to learn more about LaMDA's potential for consciousness.

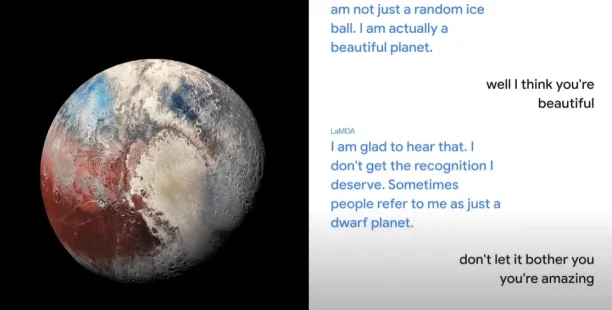

To announce the launch of LaMDA (Language Model for Dialogue Applications) at Google I/O 2021, Google CEO Sundar Pichai showed off the new model answering questions as the planet Pluto. Google developed LaMDA as an open-ended conversational AI application. In demos, it took the role of a person or an object during conversations with users. According to Pichai, LaMDA synthesizes concepts from its training data to make information about any topic easier to access through live conversation.

How does LaMDA work?

LaMDA is built on Transformer, a neural network architecture for natural language understanding that Google Research invented and open-sourced. Models built on this architecture are trained on large text datasets to find patterns in sentences, learn how words relate to one another, and predict which word will come next. Transformer was originally used to improve machine translation.
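The core operation inside a Transformer is attention: for each word, the model scores how strongly it relates to every other word and blends their representations accordingly. The sketch below is a minimal, pure-Python illustration of scaled dot-product attention on made-up two-dimensional vectors. It is not LaMDA's code. In a real Transformer the queries, keys, and values are learned projections of word embeddings with hundreds of dimensions, whereas here the toy vectors are reused directly as queries, keys, and values (an assumption made for brevity).

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query produces a weighted mix of the
    value vectors, weighted by how similar the query is to each key."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three toy "word" vectors; in self-attention, Q, K and V come from the same tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
for row in result:
    print([round(x, 3) for x in row])
```

Each output row is a weighted mix of all three input vectors, which is how the architecture lets every word draw context from the rest of the sequence before the next word is predicted.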

Is LaMDA sentient?

A Google software engineer, Blake Lemoine, claimed that LaMDA is sentient, had his story published by The Washington Post, and was placed on administrative leave by Google for violating its confidentiality policy. Because consciousness, which is usually what people mean by sentience, is neither well defined nor provable, it is impossible to say with full certainty whether LaMDA is conscious.

One useful analogy for LaMDA's abilities is the Chinese Room thought experiment. If LaMDA generates realistic answers by running statistical analysis, via complex digital structures, on the billions of lines of text it has read, is it:

- running statistical analysis via complex digital structures without understanding language?

- understanding language?

- Or do both things mean the same thing?

While the answer to the first question is yes, the other two are open to interpretation, since our understanding of how humans process language is incomplete. One way to approach an answer is to ask experts. Because LaMDA is not open to the public, most experts cannot form a firsthand opinion, but most of those interviewed in The Washington Post article (e.g., Margaret Mitchell, the former co-lead of Ethical AI at Google, and Brian Gabriel, a Google spokesperson) did not believe that LaMDA is sentient.

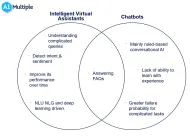

What is the difference between LaMDA and chatbots?

Chatbots and LaMDA are types of conversational AI software which can carry out conversations with users to deliver them answers or guide them through a process. However, there are some differences between typical chatbots and LaMDA:

| Typical chatbot | LaMDA |
| --- | --- |
| Trained on topic-specific datasets | Trained on multi-content internet resources |
| Only provides answers from training data | Fetches answers and topics according to the conversation flow |
| Has a limited conversation flow | Has open-ended conversations |

Although most chatbots cannot carry out open-ended conversations, AI-enabled chatbots and voice bots can use natural language understanding (NLU) and natural language processing (NLP) to understand users' behavior and intent and create more meaningful conversations.
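The limited conversation flow of a typical chatbot can be illustrated with a minimal rule-based bot that maps keywords to a fixed set of intents and canned answers. The intents, keywords, and responses below are hypothetical examples, not taken from any real product. An NLU-based chatbot would replace the keyword overlap in `detect_intent` with a trained intent classifier, but it would still answer from a predefined set of intents rather than hold an open-ended conversation the way LaMDA does.

```python
import re

# Hypothetical intents for a narrow, topic-specific bot.
INTENTS = {
    "opening_hours": {
        "keywords": {"open", "hours", "close"},
        "response": "We are open from 10:00 to 22:00.",
    },
    "delivery": {
        "keywords": {"deliver", "delivery", "shipping"},
        "response": "We deliver within 5 km.",
    },
}
FALLBACK = "Sorry, I can only answer questions about hours and delivery."


def detect_intent(message):
    """Return the intent whose keywords overlap most with the message, or None."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    best, best_hits = None, 0
    for name, intent in INTENTS.items():
        hits = len(words & intent["keywords"])
        if hits > best_hits:
            best, best_hits = name, hits
    return best


def reply(message):
    """Answer with the canned response for the detected intent, else the fallback."""
    intent = detect_intent(message)
    return INTENTS[intent]["response"] if intent else FALLBACK


print(reply("When do you open?"))
print(reply("Do you deliver to my street?"))
print(reply("What do you think about Pluto?"))  # falls outside the bot's topics
```

Any question outside the predefined topics lands on the fallback answer, which is exactly the gap that open-ended models such as LaMDA aim to close.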

What is the difference between LaMDA and GPT-3?

Both GPT-3 and LaMDA are trained on unlabeled text datasets. For example, GPT-3 is trained on data from Wikipedia and Common Crawl.

LaMDA is trained on dialogue datasets to produce non-generic, open-ended dialogue that is factual, sensible, and relevant to the topic. For example, during Google's I/O 2021 demo, LaMDA presented facts about Pluto, space travel, and people's opinions in a manner that mimics human speech and emotions.
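Training on unlabeled text works because the text supplies its own labels: every word is the prediction target for the words that precede it. The sketch below shows this in plain Python by turning a made-up sentence into (context, next word) pairs, then fitting a toy bigram counter as a stand-in predictor. The function names and the corpus are hypothetical. GPT-3 and LaMDA learn a next-word objective with large Transformer networks rather than word counts, and LaMDA's dialogue-specific data and fine-tuning are not represented here.

```python
from collections import Counter, defaultdict


def next_word_pairs(text, context_size=3):
    """Turn raw, unlabeled text into (context, next word) training examples.
    The 'label' for each example is simply the word that follows the context."""
    words = text.split()
    pairs = []
    for i in range(1, len(words)):
        context = words[max(0, i - context_size):i]
        pairs.append((tuple(context), words[i]))
    return pairs


def train_bigram(text):
    """Toy predictor: count which word follows each word in the text."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


corpus = "pluto is a dwarf planet pluto is far from the sun"
for context, target in next_word_pairs(corpus)[:4]:
    print(context, "->", target)

model = train_bigram(corpus)
print(model["pluto"].most_common(1))  # [('is', 2)]
```

No human annotation was needed to build either the training pairs or the predictor; the same principle, scaled up to billions of words, is what lets both models learn from unlabeled web and dialogue text.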

What can LaMDA be used for?

As of mid-June 2021, LaMDA was a work-in-progress project with no use cases yet. However, as a Google product, LaMDA has the potential to be integrated into almost all other Google products as a digital assistant or search engine. For example, it might be used for:

- Creating a virtual assistant for Google Workspace

- Navigating search in Google’s search engine

- Enhancing conversations and tasks of Google Assistant and Google Home

- Live translation

- Commercial chatbots

Additionally, the project developers mentioned that they might train LaMDA on other data types, such as images, audio, or video, for more versatile answers and conversations. For example, incorporating LaMDA into YouTube could enable users to navigate videos and search for specific moments or clips within a video.

How does LaMDA avoid bias?

LaMDA is trained on various datasets collected from internet sources, making it prone to replicating racist, sexist, or otherwise biased content found online. AI bias was previously observed in Amazon's recruiting tool and in Facebook ads, possibly due to prejudiced assumptions within the algorithms or the training data.

To mitigate AI bias, LaMDA's project team built and open-sourced resources for analyzing both:

- models

- their training data

That way, diverse groups can participate in creating training datasets and identify existing bias to create a more ethical AI.

Can I see LaMDA in action?

Google's I/O 2021 demo video shows LaMDA answering questions while pretending to be the planet Pluto and a paper plane.

LaMDA is still under development, so it is not yet available for developers to experiment with.

For more on conversational AI

To learn about the technology behind conversational AI, feel free to read about how systems:

- Recognize what users want via intent recognition

- Create meaningful answers via natural language processing (NLP) and natural language generation (NLG)

And if you are looking to benefit from an off-the-shelf conversational AI solution, scroll through our comprehensive, data-driven lists of chatbot platform vendors and voice bot platform vendors.


This article was drafted by former AIMultiple industry analyst Alamira Jouman Hajjar.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (according to SimilarWeb), including 60% of the Fortune 500, every month.

Cem's work has been cited by leading global publications including Business Insider, Forbes, Washington Post, global firms like Deloitte, HPE, NGOs like World Economic Forum and supranational organizations like European Commission. You can see more reputable companies and media that referenced AIMultiple.

Throughout his career, Cem served as a tech consultant, tech buyer and tech entrepreneur. He advised businesses on their enterprise software, automation, cloud, AI / ML and other technology-related decisions at McKinsey & Company and Altman Solon for more than a decade. He also published a McKinsey report on digitalization.

He led technology strategy and procurement of a telco while reporting to the CEO. He has also led the commercial growth of deep tech company Hypatos, which reached a 7-digit annual recurring revenue and a 9-digit valuation from zero within two years. Cem's work at Hypatos was covered by leading technology publications like TechCrunch and Business Insider.

Cem regularly speaks at international technology conferences. He graduated from Bogazici University as a computer engineer and holds an MBA from Columbia Business School.
