The responsible AI platform market includes two types of software. Follow the links to learn more:

Enterprise-focused responsible AI platforms such as:

- Credo AI and IBM Watsonx.governance for AI governance

- Amazon SageMaker and Dataiku for MLOps with responsible AI capabilities

- Databricks and IBM Watsonx.data intelligence for a data platform with responsible AI features

Open-source responsible AI libraries that deliver specific functionality (e.g. federated learning):

- TensorFlow Federated (TFF) for privacy

- AI Fairness 360 for fairness

- DALEX for explainability (i.e., XAI)

- TextAttack for adversarial ML

These tools are identified as the market leaders based on metrics such as the number of reviews, features, GitHub scores, and Fortune 500 references. See the tools’ use cases and focus (such as MLOps, AI governance, and data governance) by following the links on them.

Enterprise responsible AI platforms

| Platform | Type | # of employees | Rating |
|---|---|---|---|
| Holistic AI | AI governance | 85 | Not enough reviews |
| Credo AI | AI governance | 49 | Not enough reviews |
| Fairly AI | AI governance | 13 | Not enough reviews |
| Aporia | MLOps | 359 | 4.8 based on 68 reviews |
| Dataiku | MLOps | 1,378 | 4.5 based on 198 reviews |
| Amazon SageMaker | MLOps | 130,371 | 4.5 based on 49 reviews |
| Databricks | Data governance | 9,280 | 4.6 based on 655 reviews |
| IBM Watsonx | Data governance | | 4.07 based on 30 reviews |
| Snowflake | Data governance | 8,441 | 4.6 based on 770 reviews |
| Expert AI | Responsible NLP | 278 | Not enough reviews |

* These tools are sorted alphabetically within their category except the sponsors which are placed at the top.

AI governance platforms

AI governance tools assist business units in deploying AI systems that adhere to industry standards.

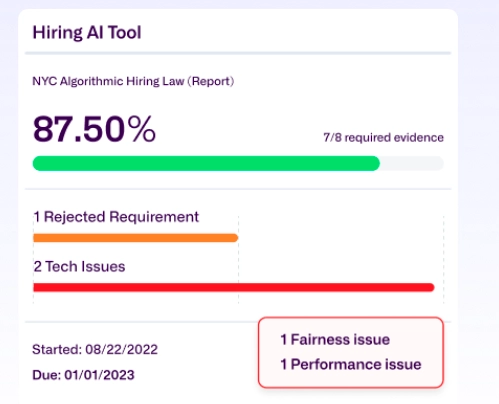

Credo AI

Credo AI, a Responsible AI governance platform, can help businesses:

- Collaborate through tools for evidence gathering, accountability tracking, and simplified third-party procurement.

- Evaluate AI systems for operational, regulatory, and reputational risks throughout their lifecycle.

- Build governance artifacts by translating technical evidence into user-friendly documents, creating model cards, audit reports, risk and compliance reports, and disclosures.

- Ensure compliance with global regulations like the EU AI Act and Canada's Artificial Intelligence and Data Act (AIDA), as well as internal policies and industry standards.

Fairly AI

Fairly AI, now acquired by Asenion, is an AI governance tool that simplifies model validation, auditing process, and risk assessments, ensuring regulatory compliance and risk management. Fairly AI’s responsible AI framework identifies gaps in policies and regulations, helping to select appropriate policies and ensuring comprehensive coverage for effective AI governance and risk management.

Holistic AI

Holistic AI provides AI risk management, compliance, and governance frameworks to help companies implement AI responsibly. Its capabilities include:

- Bias assessment: Identifies and mitigates biases in AI systems, offering actionable strategies, continuous support, and comprehensive audit reports that can be shared with stakeholders.

- Conformity assessment: Catalogs and validates high-risk AI systems against EU AI Act requirements, conducts risk assessments with mitigation strategies, and ensures technical documentation aligns with legal standards.

- Proactive risk management: Provides regular reports and supports self-audits for adverse impacts, while using data-driven insights to optimize AI use and inform strategic decisions.

IBM Watsonx.governance

IBM Watsonx.governance can enhance AI trust and transparency by providing enterprise-grade visibility, tracking of AI assets, and compliance of data and AI workflows across various deployment environments, including IBM Cloud and AWS.

Watsonx.governance users can integrate with other IBM watsonx tools, such as watsonx.ai and watsonx.data, to train, validate, tune, and deploy AI.

MLOps

Amazon SageMaker and Amazon Bedrock

Amazon provides tools designed to support compliance teams in delivering Responsible AI systems, such as:

- On Amazon Bedrock: A fully managed service that simplifies the development of generative AI applications by providing access to high-performing foundation models without requiring data preparation, model building, or infrastructure management.

  - Guardrails: Implements safeguards in generative AI by specifying topics to avoid and automatically detecting and preventing restricted queries and responses.

  - Model Evaluation: Evaluates and compares foundation models based on custom metrics like accuracy and safety to help select the best model for specific use cases.

- On Amazon SageMaker: A machine learning platform that covers model creation, training, and deployment, making it well suited to customized ML tasks like predictive analytics, recommendation systems, and anomaly detection.

  - Clarify: Detects potential bias and provides explanations of model predictions, offering transparency and insights to ensure fair and informed AI decisions.

  - Model Monitor: Monitors deployed models by automatically detecting and alerting on inaccurate predictions to maintain model quality.

  - ML Governance: Enhances governance by offering tools for controlling and monitoring ML models, including capturing and sharing model information to ensure responsible AI deployment.

  - Amazon Augmented AI: Facilitates human review of ML predictions, enabling oversight where human judgment is required.
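To make the guardrail idea concrete, here is a minimal sketch of a denied-topic filter that screens both the incoming query and the model's response. It is a toy keyword matcher written for illustration only: the `TopicGuardrail` class, the `guarded_reply` helper, and the topic keywords are all hypothetical and are not part of the Amazon Bedrock API, which relies on managed policies rather than a hand-written word list.

```python
import re
from dataclasses import dataclass, field

BLOCKED_MESSAGE = "Sorry, I can't help with that topic."


@dataclass
class TopicGuardrail:
    """Toy denied-topic filter (hypothetical; not the Amazon Bedrock API)."""

    # Maps a topic name to the keywords that signal it (illustrative values).
    denied_topics: dict = field(default_factory=dict)

    def violations(self, text: str) -> list:
        """Return the denied topics whose keywords appear in the text."""
        words = set(re.findall(r"[a-z']+", text.lower()))
        return sorted(
            topic
            for topic, keywords in self.denied_topics.items()
            if words & set(keywords)
        )


def guarded_reply(guardrail, prompt, model):
    """Screen the prompt before calling the model, then screen the response."""
    if guardrail.violations(prompt):
        return BLOCKED_MESSAGE
    reply = model(prompt)
    if guardrail.violations(reply):
        return BLOCKED_MESSAGE
    return reply


guardrail = TopicGuardrail({"investment_advice": {"stocks", "invest"}})


def echo_model(prompt):
    """Stand-in for a real foundation model call."""
    return f"You said: {prompt}"


print(guarded_reply(guardrail, "Which stocks should I buy?", echo_model))
print(guarded_reply(guardrail, "What is responsible AI?", echo_model))
```

Checking the response as well as the prompt matters because a harmless-looking query can still lead a model to produce restricted content.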

Aporia

The Aporia Responsible AI platform offers comprehensive governance for AI projects by:

- Ensuring data diversity, evaluating fairness metrics, and monitoring systems for biases to maintain ethical AI standards

- Complying with regulations like GDPR and other industry standards

- Promoting accountability and transparency through Explainable AI, ensuring that AI decision-making processes are understandable.

Dataiku

Dataiku is an ML and data science platform for building, deploying, and managing data, analytics, and AI projects. It can support Responsible AI in those projects through several key capabilities:

- Advanced Statistical Analysis: Facilitates thorough data analysis to identify and address potential biases.

- Model Fairness Reports: Provides metrics like Demographic Parity and Equalized Odds to measure and mitigate bias.

- Explainable AI: Offers row-level explanations and what-if analysis to ensure transparency and accountability.

- Data Privacy Compliance: Ensures adherence to regulations such as GDPR and CCPA.

- Model Documentation: Automates the creation of detailed model documentation for regulatory and internal purposes.

- Governance Tools: Implements standard project plans and workflow blueprints to align with Responsible AI practices and regulatory requirements.
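The two fairness metrics named in the list above, Demographic Parity and Equalized Odds, can be sketched in a few lines of plain Python. This is not Dataiku's implementation; the function names and the toy data are assumptions made for illustration. Demographic parity compares the rate of positive predictions across groups, while equalized odds compares true positive and false positive rates across groups.

```python
def positive_rate(y_pred, groups, group):
    """Share of positive predictions within one group."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)


def demographic_parity_difference(y_pred, groups):
    """Largest gap in positive prediction rate between any two groups."""
    rates = [positive_rate(y_pred, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


def conditional_rate(y_true, y_pred, groups, group, label):
    """P(prediction = 1 | true label = label, group).

    Assumes every group contains at least one example of each label.
    """
    preds = [
        p for t, p, g in zip(y_true, y_pred, groups)
        if g == group and t == label
    ]
    return sum(preds) / len(preds)


def equalized_odds_difference(y_true, y_pred, groups):
    """Larger of the true positive rate gap and false positive rate gap."""
    names = sorted(set(groups))
    tpr = [conditional_rate(y_true, y_pred, groups, g, 1) for g in names]
    fpr = [conditional_rate(y_true, y_pred, groups, g, 0) for g in names]
    return max(max(tpr) - min(tpr), max(fpr) - min(fpr))


# Toy data: binary labels and predictions for two groups, "a" and "b".
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

dp_gap = demographic_parity_difference(y_pred, groups)
eo_gap = equalized_odds_difference(y_true, y_pred, groups)
print(f"demographic parity difference: {dp_gap:.2f}")
print(f"equalized odds difference: {eo_gap:.2f}")
```

A value of 0 on either metric means the groups are treated identically by that measure; larger values indicate a wider gap.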

Data governance

Data governance refers to the overarching framework that aligns data practices with business goals and accountability structures. One broad application of data governance is in ML, where it is known as machine learning data governance.

Databricks

Databricks is a unified data and AI platform that ensures data ownership and control for AI models through comprehensive monitoring, privacy controls, and governance. Databricks delivers responsible AI through its Responsible AI Testing Framework, which includes:

- AI red teaming to identify vulnerabilities

- Automated and manual probing for bias and ethical issues

- Jailbreak testing to understand model behavior under attacks

- Model supply chain security to safeguard AI systems throughout their lifecycle.

IBM Watsonx.data intelligence

Watsonx.data intelligence is a data governance and intelligence platform that ensures high-quality, compliant, and business-ready data for AI models. It delivers responsible AI through its AI-driven data intelligence capabilities, which include:

- Natural language access for users of all skill levels to search and leverage data efficiently

- Automated data discovery and cataloging across structured and unstructured sources

- Data governance and quality controls including lineage, classification, and impact analysis

- AI-powered data enrichment and standardization for consistent, usable datasets

Snowflake

Snowflake is a cloud-based data platform for data storage, processing, and analytics, helping businesses manage and use their data efficiently. Its responsible AI approach emphasizes data security, diversity, and organizational maturity, ensuring AI applications are built on a secure, diverse, and well-governed data foundation. Additionally, Snowflake promotes data literacy and cross-functional collaboration to drive responsible AI use across organizations.

Open-source responsible AI tools & libraries

* These tools are sorted based on the GitHub score in their category. Please note that libraries that are not up to date are excluded from this list.

AI privacy

These libraries focus on the use of AI for legitimate purposes while avoiding unethical applications. Organizations adhering to ethical AI standards implement strict guidelines, thorough review processes, and clear objectives to ensure compliance.

- TensorFlow Privacy: A Python library offering implementations of TensorFlow optimizers for training machine learning models with differential privacy.

- TensorFlow Federated (TFF): Designed to support open research and experimentation in Federated Learning (FL), where a global model is trained across multiple clients without sharing their local data.

- Deon: A command-line tool enabling the addition of an ethics checklist to data science projects, promoting ethical considerations and providing actionable reminders for developers.
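The federated learning idea described above, training a global model across clients without sharing their local data, can be illustrated with a minimal federated averaging sketch. This is plain Python rather than the TensorFlow Federated API, and the one-parameter linear model, the learning rate, and the client datasets are assumptions chosen to keep the example small.

```python
def local_update(weight, data, lr=0.05, epochs=5):
    """One client's local training: gradient descent on the mean squared
    error of the model y = weight * x, using only that client's data."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(global_weight, client_datasets, rounds=20):
    """Each round, clients train locally and the server averages their
    weights, weighted by local dataset size. Raw data never leaves a client."""
    for _ in range(rounds):
        updates = [
            (local_update(global_weight, data), len(data))
            for data in client_datasets
        ]
        total = sum(n for _, n in updates)
        global_weight = sum(w * n for w, n in updates) / total
    return global_weight


# Three hypothetical clients whose private data all follow y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]

learned_weight = federated_average(0.0, clients)
print(f"learned weight: {learned_weight:.4f}")
```

Only model weights travel between the clients and the server here; a production system would typically add safeguards such as secure aggregation or differential privacy on top of this basic loop.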

AI transparency & bias

AI transparency libraries allow stakeholders to understand and explain AI models, ensuring transparency in the motives, data, and intent behind AI models.

- Model Card Toolkit (MCT): Automates the creation of Model Cards, which are documents that offer context and transparency regarding a model’s development and performance.
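As a rough illustration of what a model card records, the sketch below defines a small data structure and serializes it to JSON. The `ModelCard` class and its field names are hypothetical and do not follow the Model Card Toolkit schema; the model name, metric values, and limitation text are invented sample data.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Illustrative model card; field names are assumptions, not the MCT schema."""

    name: str
    version: str
    intended_use: str
    training_data: str
    metrics: dict = field(default_factory=dict)
    limitations: list = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the card so it can be stored or shared with reviewers."""
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    name="loan-approval-classifier",  # hypothetical model
    version="1.0.0",
    intended_use="Pre-screening of loan applications with human review.",
    training_data="Internal applications dataset (sample description).",
    metrics={"accuracy": 0.91, "demographic_parity_difference": 0.04},
    limitations=["Not validated for applicants under 21."],
)

print(card.to_json())
```

Keeping the card as structured data rather than free text makes it easier to generate it automatically during training and to check that required sections are filled in.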

Fairness

Fairness in AI involves protecting individuals and groups from discrimination, bias, and mistreatment. Models should be evaluated for fairness to prevent biases against specific groups, factors, or variables.

- AI Fairness 360: An open-source toolkit from IBM offering techniques to detect and mitigate bias in machine learning models across the AI lifecycle.

- Fairlearn: A Python package that helps developers assess the fairness of their AI systems and mitigate any identified biases, offering both mitigation algorithms and metrics for model evaluation.

- Responsible AI Toolbox: A suite of tools from Microsoft that provides interfaces for exploring and assessing AI models and data, facilitating the development and deployment of AI systems in a safe and ethical manner.
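One well-known pre-processing technique for mitigating bias is reweighing, which assigns each training example a weight so that group membership and the label become statistically independent in the weighted data. The sketch below is a plain-Python illustration under that assumption; it does not use the AI Fairness 360 or Fairlearn APIs, and the function names and toy data are invented for the example.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)


# Toy data: group "a" receives positive labels far more often than group "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(f"weighted positive rate, group a: {rate_a:.2f}")
print(f"weighted positive rate, group b: {rate_b:.2f}")
```

Before reweighing, the positive rates are 0.75 for group "a" and 0.25 for group "b"; the weights up-weight the under-represented combinations so that a model trained on the weighted data sees the same positive rate in both groups.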

AI explainability

AI explainability libraries help improve human understanding of, and trust in, the results of machine learning algorithms.

- DALEX: A model-agnostic package that helps explore and explain model behavior, assisting in the understanding of complex models.

- TensorFlow Data Validation (TFDV): A library for exploring and validating machine learning data, optimized for scalability and integration with TensorFlow and TensorFlow Extended (TFX).
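A common model-agnostic way to explain model behavior is permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below is a minimal plain-Python version and does not use the DALEX API; the toy model, the data, and the function names are assumptions made for illustration.

```python
import random


def mean_squared_error(model, X, y):
    """Average squared difference between predictions and targets."""
    return sum((model(row) - t) ** 2 for row, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average increase in error after shuffling each feature column.

    The model is treated as a black box: only its predictions are used.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [
                row[:j] + [value] + row[j + 1:]
                for row, value in zip(X, column)
            ]
            increases.append(mean_squared_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model that depends only on the first feature."""
    return 3.0 * row[0] + 0.0 * row[1]


X = [[float(i), float((i * 7) % 5)] for i in range(20)]
y = [toy_model(row) for row in X]

importances = permutation_importance(toy_model, X, y)
for index, score in enumerate(importances):
    print(f"feature {index}: importance {score:.2f}")
```

Because the toy model ignores the second feature, shuffling that column leaves the error unchanged and its importance is zero, while the first feature shows a clearly positive score.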

Adversarial ML

Adversarial machine learning is a technique that attempts to exploit artificial intelligence models by using accessible information to create malicious attacks.

- TextAttack: A Python framework for adversarial attacks, training, and data augmentation in NLP, streamlining the process of testing and enhancing the robustness of NLP models.
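To show what an adversarial attack on an NLP model looks like in practice, here is a minimal sketch that flips the prediction of a toy keyword-based sentiment classifier by introducing a small typo. It does not use the TextAttack API; the classifier, the word lists, and the character-swap perturbation are all assumptions chosen to keep the example self-contained.

```python
POSITIVE_WORDS = {"great", "excellent", "wonderful"}
NEGATIVE_WORDS = {"awful", "terrible", "boring"}


def classify(text):
    """Toy sentiment classifier that counts known keywords."""
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words)
    score -= sum(w in NEGATIVE_WORDS for w in words)
    return "positive" if score > 0 else "negative"


def swap_inner_characters(word):
    """Perturbation: swap the second and third characters of a word."""
    if len(word) < 4:
        return word
    return word[0] + word[2] + word[1] + word[3:]


def single_word_attack(text):
    """Try perturbing one word at a time; return the first variant that
    changes the prediction, or None if no single-word change succeeds."""
    original_label = classify(text)
    words = text.split()
    for i, word in enumerate(words):
        candidate_words = words[:i] + [swap_inner_characters(word)] + words[i + 1:]
        candidate = " ".join(candidate_words)
        if classify(candidate) != original_label:
            return candidate
    return None


review = "the movie was great"
adversarial = single_word_attack(review)
print(classify(review), "->", review)
print(classify(adversarial), "->", adversarial)
```

A human reader still understands the perturbed review as positive, yet the classifier no longer recognizes the keyword. Collecting such examples and adding them to the training data is one way to make a model more robust.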

What is responsible AI?

Responsible artificial intelligence (AI) refers to building trust in AI solutions by applying four guiding principles:

- Fairness

- Privacy

- Safety and security

- Transparency

These principles help guide the design, development, deployment and use of AI.

Why is responsible AI important?

As AI stats and IT automation trends indicate:

- 90% of commercial enterprise applications will feature AI capabilities by next year.

- 9 in 10 leading companies are investing in AI technologies.

- Following ChatGPT’s 2022 launch, businesses reported a 97% rise in generative AI development interest.

- A 72% increase was observed in machine learning pipeline adoption to support generative AI strategies.

This increase in the adoption of AI and generative AI tools has led to concerns and precautions, such as:

- 71% of IT leaders are concerned about LLM security vulnerabilities and generative AI risks.

- 77% of companies prioritize AI compliance.

- 69% of businesses have implemented responsible AI practices to assess compliance and identify risks.

- Data privacy laws, including GDPR (EU) and CCPA (California), aim to prevent privacy breaches.

- The EU AI Act requires organizations to keep their AI inventories current and accurate.

- AI bias incidents, such as racism, sexism, ableism, and ageism, are increasing.

FAQs

Data governance encompasses the frameworks and tools organizations use to protect and properly utilize their data. Some of the methods, processes and technologies in data governance include:

1- Data collection

2- Data storage

3- Data processing

4- Data cleaning

5- Data stewardship

6- Data sharing in a controlled way to:

6.a- Protect data privacy

6.b- Maintain data quality

6.c- Support compliance with relevant regulations.

7- Insider threat management (ITM).

Reliable AI refers to AI systems that consistently perform as expected: accurately, robustly, and safely under different conditions.

Reliable AI is a relevant term for responsible AI since trust, fairness, and compliance depend on systems that behave predictably. Responsible AI tools ensure reliability through model monitoring, bias testing, explainability, and regulatory alignment.
