While some companies rely on AI data collection services, others gather their data using scraping tools or other methods.

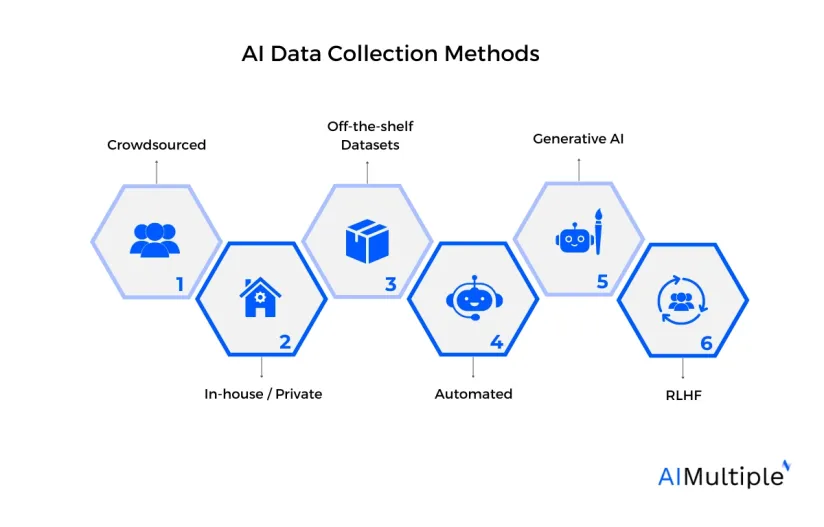

See the top 6 AI data collection methods and techniques to fuel your AI projects with accurate data:

Overview of AI data collection methods

| Method | Cost | Scalability | Customization | Data Quality Control |
|---|---|---|---|---|
| Crowdsourcing | Low to Medium | High | Medium | Medium to Low |
| In-house | High | Low | High | High |
| Off-the-shelf Datasets | Low upfront, higher long-term | High | Low | Low to Medium |
| Automated Collection | Medium to High | High | Low | Medium |
| Generative AI | Low to Medium | High | High | Medium |
| RLHF | High | Low to Medium | High | Medium to High |

1. Crowdsourcing

Crowdsourcing platforms are online talent platforms that give you access to a large pool of remote contributors. Data crowdsourcing involves assigning data collection tasks to the public, providing instructions, and creating a platform where contributors can share the data they collect. Businesses can also work with crowdsourced data collection agencies.

Advantages

- Through crowdsourcing, developers can quickly recruit a wide range of contributors, significantly accelerating the data collection process for projects with tight deadlines.

- For an AI model to be unbiased, it must be trained using a wide range of data. Crowdsourcing enables data diversity since the data is gathered from all over the world. You can also gather multilingual data much more efficiently.

- Crowdsourcing helps eliminate the costs related to data collection procedures, such as hiring, training, and onboarding a team for in-house data collection. You also don’t have to purchase any equipment since the workers from the crowdsourcing platform use their own equipment.

- Experienced crowdsourcing firms have domain specialists who can provide high-quality data collection specific to your project needs, ensuring that the data is not only diverse but also relevant and reliable.

- This method can be used for both primary and secondary data collection, offering versatility in obtaining various types of information, from user-generated content to academic research data.

Disadvantages

- It can be difficult to track if the contributors have sufficient domain and language skills, especially when the project involves highly specialized or technical content.

- It can also be difficult to track whether the data collection assignments are being performed properly since the workers are remote and in large numbers. They may also have varying interpretations of the tasks.

- Data quality can also be challenging to track when crowdsourced data collection is involved due to the variability in the contributors’ expertise and dedication.

- Narrowing down the right contributors for the project can also be challenging, requiring careful consideration of their qualifications and past performance.
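
One common way to address the quality-tracking concerns above is to collect several independent answers per item and keep only the answers contributors agree on. The following is a minimal sketch of that idea, not a description of how any particular platform works. The function name `aggregate_labels`, the `min_agreement` threshold, and the sample item IDs are all assumptions made for illustration.

```python
from collections import Counter


def aggregate_labels(votes_by_item, min_agreement=0.6):
    """Majority-vote each item's labels and flag items with weak agreement."""
    accepted, needs_review = {}, []
    for item_id, votes in votes_by_item.items():
        if not votes:
            # No contributor answered this item, so it cannot be accepted.
            needs_review.append(item_id)
            continue
        label, count = Counter(votes).most_common(1)[0]
        if count / len(votes) >= min_agreement:
            accepted[item_id] = label
        else:
            needs_review.append(item_id)
    return accepted, needs_review


# Hypothetical labels from three contributors per image.
votes = {
    "img_001": ["cat", "cat", "cat"],
    "img_002": ["dog", "cat", "dog"],
    "img_003": ["cat", "dog", "bird"],
}
accepted, needs_review = aggregate_labels(votes)
print(accepted)
print(needs_review)
```

Items that land in `needs_review` can be routed to a domain specialist or re-issued with clearer instructions, which also helps with the varying task interpretations mentioned above.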

You can refer to this guide to find the most suitable crowdsourcing platforms on the market.

Case studies

1. M-Pesa, a mobile money service in Kenya, uses blockchain to enhance transparency in crowdsourced agent networks. Agents in rural areas handle customer inquiries via a decentralized ledger, reducing fraud risks. In 2023, this system expanded to eight more countries, leveraging blockchain to track real-time transactions and agent performance. 1

Advantages:

- Blockchain for immutable transaction records.

- Mobile-based crowdsourcing for financial inclusivity.

2. OpenStreetMap (OSM) uses volunteers worldwide to create open-source maps. Contributors update geographic data, which is used for disaster response (e.g., earthquake relief in Nepal) and urban planning. 2

Advantages:

- Cost-effective alternative to proprietary mapping services like Google Maps.

- Enables multilingual and localized data collection.

2. In-house data collection

AI/ML developers can also collect data privately within their own organization. This method is effective when the required dataset is small and the data is private or sensitive. It also suits problem statements that are so specific that the data collection needs to be precise and tailored.

Advantages

- In-house data collection is the most private and safest way of gathering primary data.

- A higher level of customization can be achieved through this method.

- While conducting in-house data collection, monitoring the workforce is easier since they are physically present.

Disadvantages

- It is expensive and time-consuming to hire or recruit a data collection team.

- It is difficult to match the domain-specific expertise and data collection efficiency that crowdsourcing agencies, for instance, can offer.

- Multilingual data is also complex to gather through in-house data collection.

- The data collectors also need to perform data processing and labeling.

Case Study: Tesla Autonomous Vehicles

Tesla collects real-time driving data from its fleet of vehicles using onboard sensors and cameras. This proprietary dataset trains its AI models to navigate complex traffic scenarios. For example, Tesla’s Autopilot system relies on petabytes of video and sensor data to refine lane-keeping and collision-avoidance algorithms. 3

Advantages:

- Ensures privacy and customization for specific use cases.

- Direct oversight of data quality and relevance.

Challenges:

- High costs for sensor infrastructure and data storage.

- Limited scalability for multilingual or global datasets.

3. Off-the-shelf datasets

This data collection method uses precleaned, preexisting datasets available in the market. If the project does not have complicated goals and does not require a wide range of data, this can be a good option. Prepackaged datasets are relatively cheaper than collecting the data yourself and are easy to implement.

For example, a simple image classification system can be fed with prepackaged data.

Here is an extensive list of off-the-shelf or prepackaged datasets for machine learning models.

Advantages

- This data collection technique has fewer up-front costs since you do not need to recruit anyone or gather the data yourself.

- It is quicker and easier to implement than other data collection methods since the datasets were prepared in the past and are ready to use.

Disadvantages

- These datasets can have missing or inaccurate data that requires processing. They can also cost more in the long run since more software and manpower might be needed to fill a gap of 20-30% in the data.

- These datasets lack personalization/customizability since they aren’t created for a specific project. Therefore, they are not suitable for models that require highly personalized data.
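
Before committing to a prepackaged dataset, it can help to measure how much of it is actually missing. The sketch below assumes the dataset has been loaded as a list of dictionaries. The function name `missing_rate_by_field`, the set of values treated as missing, and the sample rows are illustrative assumptions.

```python
def missing_rate_by_field(rows, fields, missing_values=(None, "", "NA")):
    """Return the fraction of rows in which each field is missing."""
    total = len(rows)
    rates = {}
    for field in fields:
        missing = sum(1 for row in rows if row.get(field) in missing_values)
        rates[field] = missing / total if total else 0.0
    return rates


# Hypothetical rows from an off-the-shelf image dataset's metadata file.
rows = [
    {"image": "a.png", "label": "cat", "source": "web"},
    {"image": "b.png", "label": "", "source": "web"},
    {"image": "c.png", "label": "dog", "source": None},
    {"image": "d.png", "label": "dog"},
]
rates = missing_rate_by_field(rows, ["image", "label", "source"])
print(rates)
```

A field with a high missing rate signals the kind of follow-up processing cost described above.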

Case Study: Google DeepMind’s AlphaFold

AlphaFold used preexisting protein structure databases (Protein Data Bank) to train its AI model, enabling breakthroughs in predicting 3D protein configurations. This accelerated drug discovery by bypassing years of lab-based data collection. 4

Advantages:

- Cost-effective and time-saving.

- Ideal for generalized tasks like image classification.

Challenges:

- Gaps in dataset relevance.

- Additional processing is required to fill in the missing data.

4. Automated data collection

Another trending data collection method is automation. This is done by using data collection tools and software to obtain data from online data sources automatically. Some commonly used automated data collection methods include:

- Web scraping: One of the most commonly used automated methods, web scraping gathers data from online sources, such as websites and social platforms, using web scraping tools.

- Using APIs: In this data collection method, the data is gathered through the application programming interfaces (APIs) that online sources provide for accessing the data they store.
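
To illustrate the extraction step of web scraping, here is a minimal sketch that pulls link targets and link text out of an HTML string using Python's standard-library `html.parser`. It deliberately skips the download step: a real scraper would fetch pages with an HTTP client and would need to respect each site's terms of use. The class name `LinkCollector` and the sample HTML are assumptions made for illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current_href = None
        self._text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._text_parts = []

    def handle_data(self, data):
        # Only keep text that appears inside a link with a target.
        if self._current_href is not None:
            self._text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            text = "".join(self._text_parts).strip()
            self.links.append((self._current_href, text))
            self._current_href = None


# Hypothetical page fragment standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li><a href="/datasets/traffic">Traffic counts</a></li>
  <li><a href="/datasets/weather">Weather <b>logs</b></a></li>
  <li><a>No link target</a></li>
</ul>
"""

collector = LinkCollector()
collector.feed(SAMPLE_HTML)
collector.close()
print(collector.links)
```

Because the parser is tied to specific tags, a change in the page's markup can silently break the extraction, which is one reason scraper maintenance can be costly.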

Advantages

- Automated data collection is one of the most efficient secondary data collection methods.

- Automating data collection also reduces human errors that can occur when the data collection tasks become repetitive.

Disadvantages

- Maintenance costs of automating through web scrapers can be high. Since websites often change their design and structures, repeatedly reprogramming the web scraper can be costly.

- Some websites use anti-scraper tools, which can limit the use of web scraping.

- Raw data gathered from automated means can also be inaccurate. To obtain accurate data, your team needs to analyze it after it is gathered.
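
Part of that post-collection analysis can itself be automated. The sketch below shows one possible set of checks (required fields, numeric ranges, and duplicates) for records produced by a scraper or API client. The function name `validate_records`, the field names, and the price range are hypothetical and would need to be adapted to the data at hand.

```python
def validate_records(records, required_fields, numeric_ranges):
    """Split raw records into clean rows and rejected rows with reasons."""
    clean, rejected, seen = [], [], set()
    for record in records:
        problems = []
        for field in required_fields:
            value = record.get(field)
            if value is None or str(value).strip() == "":
                problems.append(f"missing {field}")
        for field, (low, high) in numeric_ranges.items():
            value = record.get(field)
            if isinstance(value, (int, float)) and not low <= value <= high:
                problems.append(f"{field} out of range")
        # Rows whose required fields match after normalization are duplicates.
        key = tuple(str(record.get(f)).strip().lower() for f in required_fields)
        if not problems and key in seen:
            problems.append("duplicate")
        if problems:
            rejected.append((record, problems))
        else:
            seen.add(key)
            clean.append(record)
    return clean, rejected


# Hypothetical scraped product records.
raw = [
    {"name": "Sensor A", "price": 19.99},
    {"name": "sensor a ", "price": 19.99},  # duplicate after normalization
    {"name": "", "price": 5.0},             # missing name
    {"name": "Sensor B", "price": -3.0},    # negative price
]
clean, rejected = validate_records(raw, ["name", "price"], {"price": (0, 10_000)})
print(len(clean), [reasons for _, reasons in rejected])
```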

Case Study: Alibaba’s City Brain

Alibaba uses automated sensors, GPS, and traffic cameras to collect real-time urban data. This system optimizes traffic light timing and reduces city congestion. 5

Advantages:

- High efficiency and reduced human error.

- Scalable for large-scale secondary data.

Challenges:

- Maintenance costs for adapting to changing data sources.

- Limited to existing data, not primary collection.

5. Generative AI

Generative AI is taking over the tech industry and can also generate AI training data. As its name suggests, it is designed to generate content, such as text, images, audio, or any other kind of data.

When we use it to generate training data for machine learning models, we create data from scratch or augment existing data to improve the model’s performance.


Advantages

Here are some advantages and ways the technology can create data for machine learning models.

Data augmentation

This is about making slight modifications to existing data. For example, in image recognition, we can slightly rotate, zoom, or change the color of images so that the machine learning model becomes robust and recognizes images even under varying conditions.
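
As a rough illustration of these modifications, the sketch below applies a rotation, a horizontal flip, and a brightness change to a tiny grayscale image stored as nested lists. It uses plain Python so that it runs without extra dependencies. Real pipelines typically rely on an image library, and the function names here are assumptions made for illustration.

```python
def rotate_90_clockwise(image):
    """Rotate a 2D grid of pixels by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def adjust_brightness(image, factor):
    """Scale pixel values and clamp them to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image):
    """Return the original image plus three modified variants."""
    return [
        image,
        rotate_90_clockwise(image),
        flip_horizontal(image),
        adjust_brightness(image, 1.2),
    ]


# A hypothetical 2x3 grayscale image.
image = [
    [0, 50, 100],
    [150, 200, 250],
]
variants = augment(image)
for variant in variants:
    print(variant)
```

Each variant keeps the original label, so one labeled image yields several training examples.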

Synthesizing data

When gathering real-world data is difficult, expensive, or time-consuming, generative AI can create synthetic datasets that closely resemble real-world data.
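
The core idea can be shown with a deliberately simple stand-in for a generative model: fit summary statistics to a small real sample, then draw new values from the fitted distribution. This sketch uses a Gaussian fit rather than a generative AI model, and the function name `synthesize_gaussian` and the sample measurements are assumptions made for illustration.

```python
import random
import statistics


def synthesize_gaussian(real_values, n_samples, seed=0):
    """Draw synthetic values from a Gaussian fitted to the real sample."""
    mu = statistics.mean(real_values)
    sigma = statistics.stdev(real_values)
    rng = random.Random(seed)  # fixed seed keeps the example reproducible
    return [rng.gauss(mu, sigma) for _ in range(n_samples)]


# Hypothetical real measurements, e.g. resting heart rates.
real = [62, 70, 75, 80, 68, 73]
synthetic = synthesize_gaussian(real, n_samples=1000)
print(round(statistics.mean(real), 1), round(statistics.mean(synthetic), 1))
```

A single Gaussian ignores correlations between fields and any structure beyond mean and spread, which is where trained generative models are expected to do better.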

Privacy

Sometimes, data can’t be shared because of privacy issues. Generative AI can create data similar to the original but doesn’t contain sensitive information, making it safer to share.

Cost-effective

Generating data using AI can be more cost-effective than traditional data collection methods, especially in cases where collecting real-world data is expensive or risky.

Diverse scenarios

Generative AI can create a variety of scenarios or cases, ensuring that a machine learning model is well-trained across different conditions.

Disadvantages

While generative AI offers many advantages, there are also potential drawbacks when using it to create training data:

Data quality and authenticity concerns

One of the main risks of using generated data is that it might not always perfectly represent real-world scenarios. If the generative model has biases or inaccuracies, these can be transferred to the training data it creates. This means the machine learning model being trained might work well with the synthetic data but may perform poorly when faced with real-world data.

Overfitting to synthetic data

Overfitting happens when a machine learning model becomes too tailored to its training dataset and loses the ability to perform well with new, unseen data. Suppose a significant portion of the training data comes from a generative model and doesn’t closely match real-world situations. In that case, the final machine learning model might become too optimized for the synthetic data and perform poorly in real-world applications.

Read more about AI failures and how to avoid them.

Recommendations

Since generative AI is a new technology, here are some recommendations to consider while leveraging generative AI to prepare AI training datasets:

- Ensure Data Diversity: When using generative AI to create training datasets, prioritize diversity in the data. This includes variation in demographics, scenarios, and contexts to prevent biases and ensure the AI model can generalize well across different situations.

- Regularly Validate and Update Data: Continuously validate the generated data against real-world examples and update the training set regularly. This helps maintain the AI model’s relevance and accuracy, especially in rapidly evolving fields.

- Monitor for Ethical and Legal Compliance: Monitor ethical and legal standards closely, especially regarding data privacy and intellectual property rights. Ensure that the generative AI is not replicating or perpetuating harmful biases or using protected or sensitive information without consent.
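
The validation recommendation above can be partly automated. One simple, assumed approach is to compare a summary statistic of the synthetic data with the same statistic of a real reference sample and flag large gaps. The function names and the `threshold` value below are illustrative choices, not an established standard.

```python
import statistics


def distribution_gap(real, synthetic):
    """Difference in means, measured in units of the real data's spread."""
    real_sd = statistics.stdev(real)
    if real_sd == 0:
        raise ValueError("real sample has no spread to compare against")
    return abs(statistics.mean(real) - statistics.mean(synthetic)) / real_sd


def needs_refresh(real, synthetic, threshold=0.5):
    """Flag synthetic data whose mean has drifted away from the real data."""
    return distribution_gap(real, synthetic) > threshold


# Hypothetical feature values from real and generated records.
real = [10, 12, 11, 13, 9]
synthetic_close = [11, 10, 12, 11, 12]
synthetic_drifted = [15, 16, 14, 17, 15]
print(needs_refresh(real, synthetic_close))
print(needs_refresh(real, synthetic_drifted))
```

A check like this only catches shifts in the mean of one feature. It complements, rather than replaces, evaluating the trained model on held-out real data.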

Case Study: Deep 6 AI for Clinical Trials

Deep 6 AI generates synthetic patient data to identify candidates for clinical trials. Augmenting real-world medical records with simulated scenarios accelerates recruitment while preserving privacy. However, biases in training data can skew synthetic outputs, risking misrepresentation of underrepresented groups. 6

Advantages:

- Fills data gaps (e.g., rare diseases).

- Cost-effective for high-risk scenarios.

Challenges:

- Overfitting to synthetic data (e.g., poor real-world performance).

- Ethical risks if biases propagate into models.

6. Reinforcement learning from human feedback (RLHF)

Reinforcement Learning from Human Feedback, or RLHF, is a method where a machine learning model, especially in the context of reinforcement learning, is trained using feedback from humans rather than relying solely on traditional reward signals from an environment.

How it works:

Initial demonstrations

Human experts showcase the desired behavior through demonstrations, which can range from playing a game to executing a specialized task. These demonstrations serve as a foundational dataset for the model, illustrating what successful performance looks like in various scenarios.

Model training

The model undergoes training using the demonstration data provided by human experts, learning to imitate the humans’ behaviors and decisions. This training process involves analyzing patterns in the data and developing strategies to replicate the expert’s performance as closely as possible.

Fine-tuning with feedback

After the initial training, the model’s performance is further refined through human feedback, where humans rank or score the different behaviors generated by the model. Based on this feedback, the model adjusts its strategies and decision-making processes to align with human expectations and improve its overall performance.
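
To make the ranking step concrete, the sketch below turns pairwise human preferences into a numeric score per response using a Bradley-Terry style model, where the probability that one response is preferred over another depends on the difference of their scores. This is a toy version under stated assumptions: practical RLHF systems typically train a neural reward model and then optimize the main model against it, whereas here each response simply gets one scalar. The function name `fit_rewards` and the sample preferences are hypothetical.

```python
import math


def fit_rewards(items, preferences, learning_rate=0.1, epochs=500):
    """Fit one scalar reward per item from (winner, loser) preference pairs."""
    rewards = {item: 0.0 for item in items}
    for _ in range(epochs):
        for winner, loser in preferences:
            # Probability that the winner is preferred under current rewards.
            p_win = 1.0 / (1.0 + math.exp(rewards[loser] - rewards[winner]))
            # Gradient ascent on the log-likelihood of the observed preference.
            step = learning_rate * (1.0 - p_win)
            rewards[winner] += step
            rewards[loser] -= step
    return rewards


# Hypothetical comparisons: each pair is (preferred response, other response).
preferences = [
    ("A", "B"),
    ("A", "C"),
    ("B", "C"),
    ("A", "B"),
    ("C", "B"),
]
rewards = fit_rewards(["A", "B", "C"], preferences)
best = max(rewards, key=rewards.get)
print(best)
```

The fitted scores play the role of the reward signal: behaviors that humans consistently prefer end up with higher rewards.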

Advantages

Overcoming infrequent rewards

In many environments, defining a reward function is challenging, or the rewards are infrequent. RLHF can bridge this gap by leveraging human expertise to guide the model towards the right behavior.

Safety and ethical concerns

Instead of letting the model explore all possible actions, some of which might be harmful or unethical, humans can guide the model to behave in desired and safe ways.

Disadvantages

Scalability issues

Continuously relying on human feedback can be resource-intensive. As tasks become more complex, the need for human involvement can become a bottleneck, making it hard to scale the approach for large applications.

Introducing human biases

Human feedback can also introduce biases. If human evaluators have certain preferences, misconceptions, or biases, these can be inadvertently transferred to the AI model, leading to undesired behaviors or decisions.
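
One way to surface inconsistent evaluators is to measure how often two of them agree beyond what chance would produce, for example with Cohen's kappa. The sketch below is a minimal implementation. The function name and the sample labels are assumptions for illustration, and note that high agreement does not rule out a bias that both evaluators share.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators' labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if both annotators labeled at random with their own rates.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical safety labels from two evaluators on the same six outputs.
evaluator_1 = ["safe", "safe", "unsafe", "safe", "unsafe", "unsafe"]
evaluator_2 = ["safe", "unsafe", "unsafe", "safe", "unsafe", "safe"]
kappa = cohens_kappa(evaluator_1, evaluator_2)
print(round(kappa, 3))
```

A kappa near 1 indicates strong agreement, while values near 0 suggest the evaluators agree no more often than chance.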

Case Study: OpenAI ChatGPT

To reduce toxicity in ChatGPT, OpenAI partnered with Sama, a Kenyan outsourcing firm, to label explicit content. Workers earned about $1.30 to $2 per hour to review graphic text, including violence and abuse. This RLHF process trained ChatGPT’s safety filters but exposed workers to psychological harm, leading Sama to terminate the contract early. 7

Impact on AI Output:

- Workers’ linguistic patterns influenced ChatGPT’s “AI-ese,” with overuse of terms like “delve” common in African business English.

Advantages:

- Guides AI toward ethical behavior using human judgment.

Challenges:

- Exploitative labor practices and mental health risks.

- Scalability issues due to reliance on low-wage workers.

FAQs for AI data collection methods

- Why is it important to choose the right AI data collection methods?

Selecting the proper data collection methods is crucial for the success of AI projects. These methods influence the data’s accuracy, quality, and relevance, affecting the effectiveness and efficiency of the AI solutions developed.

Accuracy and Relevance: Choosing the appropriate data collection method ensures the accuracy of the data collected, whether it’s quantitative data from online surveys and statistical analysis or qualitative data from interviews and focus groups. Accurate data collection is fundamental for building reliable AI models.

Efficiency: Utilizing the right data collection tools and techniques, such as online forms for quantitative research or focus groups for qualitative insights, can streamline the data collection process, making it less time-consuming and more cost-effective.

Comprehensive Analysis: A mix of primary and secondary data collection methods, along with a balance of qualitative and quantitative data, allows for a more comprehensive analysis of the research question, contributing to more nuanced and robust AI solutions.

Targeted Insights: Tailoring the data collection technique to the specific needs of the project, like using customer data for business analytics or health surveys for medical research, ensures that the collected data is highly relevant and can provide targeted insights for the AI model.

- Which method is most suitable for my AI project?

Data Type and Quality: Determine whether your project requires image, audio, video, text, or speech data. The choice influences the richness and accuracy of the data collected.

Dataset Volume and Scope: Assess the size and domains of the datasets needed. Larger datasets might require a mix of primary and secondary data collection methods, while specific domains may need targeted qualitative research methods.

Language and Geographic Considerations: Ensure the data encompasses the required languages and is representative of the target audience, potentially necessitating diverse collection methods and tools.

Timeliness and Frequency: Evaluate how quickly and how often you need the data. AI models requiring continuous updates need a reliable process for frequent and accurate data collection.

External resources

- 1. ResearchGate - Temporarily Unavailable.

- 2. ResearchGate - Temporarily Unavailable.

- 3. Tesla: The Data Collection Revolution in Autonomous Driving | by Shreyas Sharma | CISS AL Big Data | Medium.

- 4. How to predict structures with AlphaFold - Proteopedia, life in 3D.

- 5. Alibaba’s ‘city brain’ is improving traffic in Hangzhou | CNN Business.

- 6. Clinical Trial Recruitment for Life Sciences Using AI.

- 7. OpenAI Used Kenyan Workers on Less Than $2 Per Hour: Exclusive | TIME.
