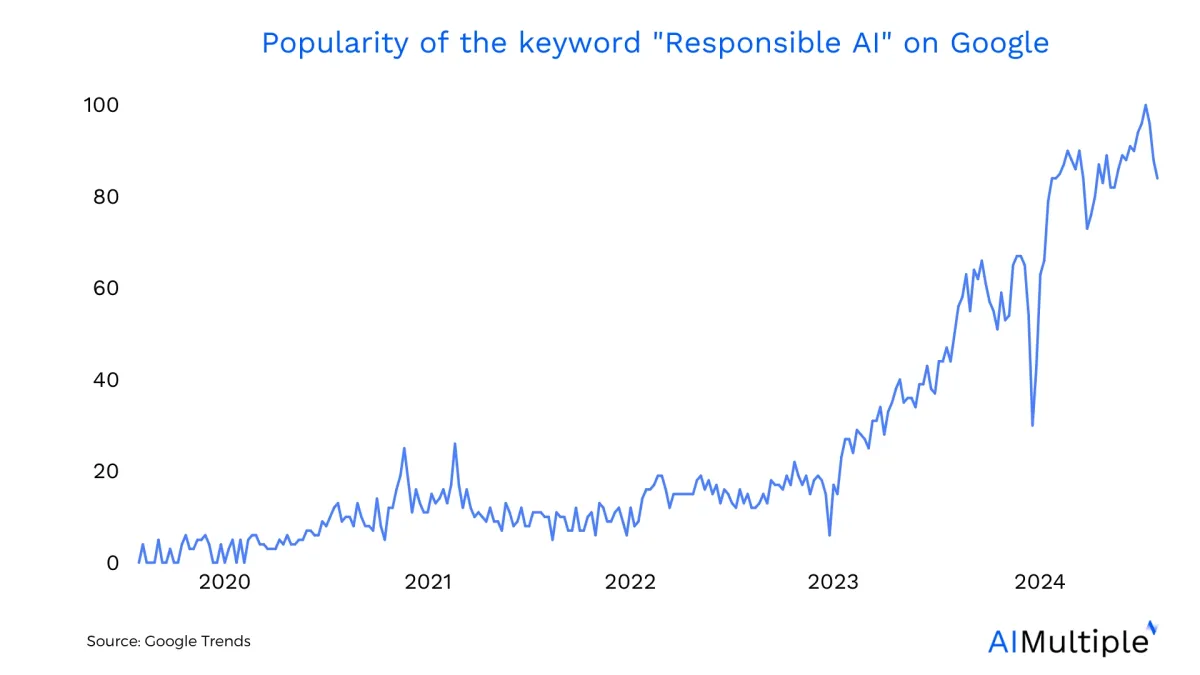

AI and machine learning are revolutionizing industries: according to AI statistics, 90% of commercial apps are expected to use AI by 2025. Despite this, 65% of risk leaders feel unprepared to manage AI-related risks effectively.1

Developing and scaling AI applications with responsibility, trustworthiness, and ethical practices in mind is essential to build AI that works for everyone.

Explore four principles of responsible AI (RAI) design and the recommended best practices to achieve them:

Step-by-step guideline to responsible AI

- Deploy AI systems with a focus on human users and their experiences. Ensure design incorporates ethical principles and societal values for a better user interaction.

- Utilize a responsible AI dashboard to monitor various metrics, including feedback and error rates, ensuring system effectiveness and risk management.

- Examine training data and underlying data carefully for accuracy and representativeness. Address biases and unfair outcomes to improve data ethics and ensure your AI policy enforces fairness audits.

- Understand the limitations of machine learning models and communicate these clearly. Avoid overreliance on correlations and recognize the scope of the generative AI capabilities.

- Implement rigorous testing within AI workflows, including unit and integration tests. Ongoing monitoring is essential for system reliability and accuracy, incorporating ethical considerations throughout.

- Continuously track system performance post-deployment, evaluating updates against the EU AI Act for regulatory compliance and adapting to privacy and safety principles. Align your monitoring practices with AI policy standards that emphasize privacy, transparency, and safety. Address both immediate and long-term issues while ensuring widespread adoption and resistance to malicious attacks.
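As a rough illustration of the monitoring steps above, the sketch below tracks a rolling error rate over the most recent predictions and flags the system for review when that rate crosses a threshold. The class name `ErrorRateMonitor`, the window size, and the threshold are hypothetical choices made for this example; a real responsible AI dashboard would track many more metrics.

```python
from collections import deque


class ErrorRateMonitor:
    """Track the error rate over the most recent predictions (hypothetical helper)."""

    def __init__(self, window_size: int = 100, alert_threshold: float = 0.2):
        # Only the last `window_size` outcomes are kept; True means "wrong".
        self.outcomes = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, predicted, actual) -> None:
        """Store whether a single prediction was wrong."""
        self.outcomes.append(predicted != actual)

    def error_rate(self) -> float:
        """Share of wrong predictions in the current window (0.0 if empty)."""
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True when the rolling error rate exceeds the alert threshold."""
        return self.error_rate() > self.alert_threshold


# Made-up (predicted, actual) pairs standing in for production feedback.
monitor = ErrorRateMonitor(window_size=5, alert_threshold=0.2)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (1, 1), (0, 1)]:
    monitor.record(predicted, actual)

print(f"rolling error rate: {monitor.error_rate():.2f}")
print("needs review:", monitor.needs_review())
```

A fixed-size window is used so that older behavior ages out, which makes a recent degradation easier to spot than a lifetime average would.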

1. Fairness

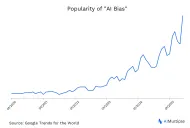

AI tools are increasingly being used in various decision-making processes such as hiring, lending, and medical diagnosis. Biases introduced in these decision-making systems can have far-reaching effects on the public and contribute to discrimination against different groups of people.

Real-life examples

Here are three examples of AI bias in real-world applications:

- Sexism in credit algorithms: In 2019, there were multiple claims (including from Apple co-founder Steve Wozniak) that Apple’s credit card algorithm discriminated against women, offering different credit limits based on gender.

- Racism and ableism in AI-based hiring: According to a 2021 report by Harvard Business School and Accenture, 27 million workers in the US are filtered out by automated and AI-based hiring systems and are unable to find a job as a result. 2 These “hidden workers” include immigrants, refugees, and those with physical disabilities.

- Racism in facial recognition: Researchers found 3 that some commercial facial recognition technologies, such as Amazon’s or Microsoft’s, had poor accuracy on dark-skinned women, but were more accurate on light-skinned men (Figure 1).

These biased decisions can result from project design or from datasets that reflect real-world biases. It is critical to eliminate these biases to create AI systems that are robust and inclusive to all.

Best practices to achieve fairness

- Examine the dataset for whether it is a fair representation of the population.

- Analyze the subpopulations of the dataset to determine if the model performs equally well across different groups.

- Design models with fairness in mind and consult with social scientists and other subject matter experts.

- Monitor the machine learning model continuously after deployment. Models drift over time, so biases can be introduced to the system after some time.

- Integrate fairness benchmarks into your AI policy for measurable accountability.
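The subpopulation analysis described above can be sketched in a few lines. The example below computes accuracy and selection rate (share of positive predictions) per group, plus the demographic parity difference, which is one common fairness metric among several. The function names and the toy records are assumptions made for illustration, not a prescribed audit procedure.

```python
from collections import defaultdict


def group_metrics(records):
    """Return per-group accuracy and selection rate.

    Each record is a (group, y_true, y_pred) tuple with binary labels.
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "selected": 0})
    for group, y_true, y_pred in records:
        stats = counts[group]
        stats["n"] += 1
        stats["correct"] += int(y_true == y_pred)
        stats["selected"] += int(y_pred == 1)
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            "selection_rate": s["selected"] / s["n"],
        }
        for group, s in counts.items()
    }


def demographic_parity_difference(metrics):
    """Largest gap in selection rate between any two groups."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Made-up toy data: (group, true label, predicted label).
records = [
    ("A", 1, 1), ("A", 0, 1), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 0),
]
metrics = group_metrics(records)
for group, values in metrics.items():
    print(group, values)
print("demographic parity difference:", demographic_parity_difference(metrics))
```

In this toy data both groups have the same accuracy, yet group A receives positive predictions three times as often as group B. This is why checking a single metric is usually not enough.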

For more detail, see our comprehensive article on AI bias and how to fix it, as well as our article on AI ethics.

2. Privacy

AI systems often use large datasets, and these datasets can contain sensitive information about individuals. This makes AI solutions susceptible to data breaches and attacks from malicious parties that want to obtain sensitive information:

- According to the Identity Theft Resource Center, there were 1,862 data breaches in 2021, 23% more than the previous all-time high set in 2017.

Data breaches cause financial loss as well as reputational damage to businesses and can put individuals whose sensitive information is revealed at risk.

Real-life example

In early 2024, Italy’s data protection authority fined the city of Trento €50,000, the first Italian municipality penalized for AI-related privacy breaches. Trento had used AI tools in EU-funded surveillance projects that included cameras, microphones, and social media monitoring, but failed to properly anonymize personal data and unlawfully shared information with third parties.

The watchdog ordered the deletion of all collected data, citing violations of transparency and proportionality under GDPR. This case reflects Italy’s growing enforcement of AI-related privacy rules, following actions like the temporary ChatGPT ban in 2023 and a fine against OpenAI in late 2024.4

Best practices to ensure privacy

- Assess and classify data according to its sensitivity and monitor sensitive data.

- Develop a data access and usage policy within the organization. Implement the principle of least privilege.
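The two practices above can be combined in a small sketch: fields are classified by sensitivity, and each role sees only the levels it needs. The field-name patterns, sensitivity levels, and role names below are hypothetical; a real policy would come from the organization's own data inventory and access rules.

```python
import re

# Hypothetical sensitivity rules: field-name patterns mapped to a level.
# Rules are checked in order, so the strictest level comes first.
SENSITIVITY_RULES = [
    (re.compile(r"ssn|passport|health|diagnosis", re.I), "restricted"),
    (re.compile(r"email|phone|address|birth", re.I), "confidential"),
]

# Hypothetical role clearances following least privilege:
# each role is granted only the levels it needs.
ROLE_CLEARANCE = {
    "analyst": {"public"},
    "support": {"public", "confidential"},
    "privacy_officer": {"public", "confidential", "restricted"},
}


def classify_field(field_name: str) -> str:
    """Return the sensitivity level of a field based on its name."""
    for pattern, level in SENSITIVITY_RULES:
        if pattern.search(field_name):
            return level
    return "public"


def visible_fields(record: dict, role: str) -> dict:
    """Return only the fields the given role is cleared to see."""
    allowed = ROLE_CLEARANCE.get(role, set())  # unknown roles see nothing
    return {k: v for k, v in record.items() if classify_field(k) in allowed}


# Made-up record for illustration.
record = {
    "user_id": 17,
    "email": "a@example.com",
    "health_notes": "redacted example",
    "country": "IT",
}
for role in ("analyst", "support", "privacy_officer", "intern"):
    print(role, "->", sorted(visible_fields(record, role)))
```

Defaulting unknown roles to an empty clearance is a deliberate choice here: access has to be granted explicitly rather than removed after the fact.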

3. Safety and security

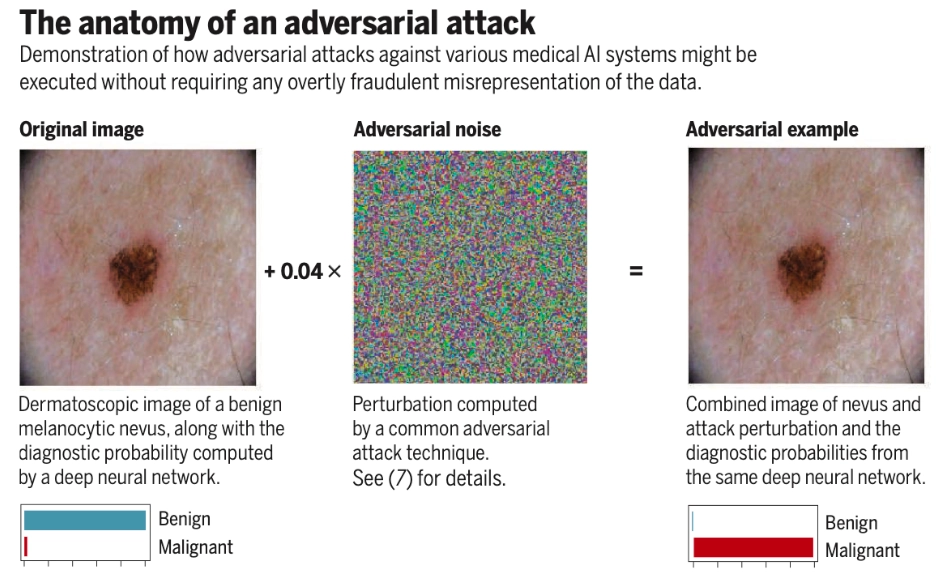

The security of an AI system is critical to prevent attackers from interfering with the system and changing its intended behavior. The increasing use of AI in particularly critical areas of society can introduce vulnerabilities that can have a significant impact on public safety.

Applying strong safety principles during system design helps minimize these vulnerabilities. Also, a robust AI policy requires threat modeling, penetration testing, and red teaming.

Consider the following example:

- Researchers have shown that they can get a self-driving car to drive in the opposite lane by placing small stickers on the road.

These adversarial attacks can involve:

- Data poisoning by injecting misleading data into training datasets.

- Model poisoning by accessing and manipulating the models.

These and other techniques cause the AI model to act in unintended ways. As AI technology evolves, attackers will find new methods, and new ways to defend AI systems will be developed in response.
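To make data poisoning concrete, the sketch below trains a deliberately simple nearest-centroid classifier on one numeric feature, then retrains it after an attacker injects a few mislabeled points. The classifier, the data, and the function names are all made up for illustration; real attacks and real models are far more complex.

```python
def train_centroids(samples):
    """Nearest-centroid 'model': the mean feature value for each label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Predict the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def accuracy(centroids, samples):
    """Share of samples whose predicted label matches the true label."""
    hits = sum(predict(centroids, v) == y for v, y in samples)
    return hits / len(samples)


# Made-up training data: (feature value, label).
clean_train = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
# Poisoned points: high feature values deliberately labeled as class 0.
poison = [(9.0, 0), (10.0, 0), (11.0, 0)]
test_set = [(2.5, 0), (4.0, 0), (6.0, 1), (7.5, 1)]

clean_model = train_centroids(clean_train)
poisoned_model = train_centroids(clean_train + poison)

print("clean accuracy:   ", accuracy(clean_model, test_set))
print("poisoned accuracy:", accuracy(poisoned_model, test_set))
```

The injected points drag the class 0 centroid from 2.0 up to 6.0, which shifts the decision boundary and causes a previously correct prediction to flip. Validating the provenance and labels of training data is one way to limit this kind of manipulation.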

Real-life example

Spain approved a draft law in line with the EU AI Act requiring all AI-generated content, like deepfakes, to be clearly labeled, aiming to strengthen transparency and protect vulnerable groups. The law also prohibits subliminal manipulation through AI and imposes steep penalties for violations. The law requires:

- Labeling: All AI-generated content (e.g., images, videos, text) must be clearly marked as such.

- Deepfake regulation: Synthetic media must disclose its artificial origin.

- Ban on manipulation: AI systems cannot use subliminal techniques to exploit users, especially minors or vulnerable individuals.

Providers that violate the law can be fined up to €35 million or 7% of their global turnover. A new national agency, AESIA, will monitor and enforce compliance.

This law reinforces the transparency and safety principles of responsible AI by ensuring users know when they’re interacting with synthetic content and by curbing harmful manipulation.5

Best practices to achieve security

- Assess whether an adversary would have an incentive to attack the system and the potential consequences of such an attack.

- Create a red team within your organization that will act as an adversary to test the system for identification and mitigation of vulnerabilities.

- Follow new developments in AI attacks and AI security. It is an ongoing area of research, so it is important to keep up with developments.

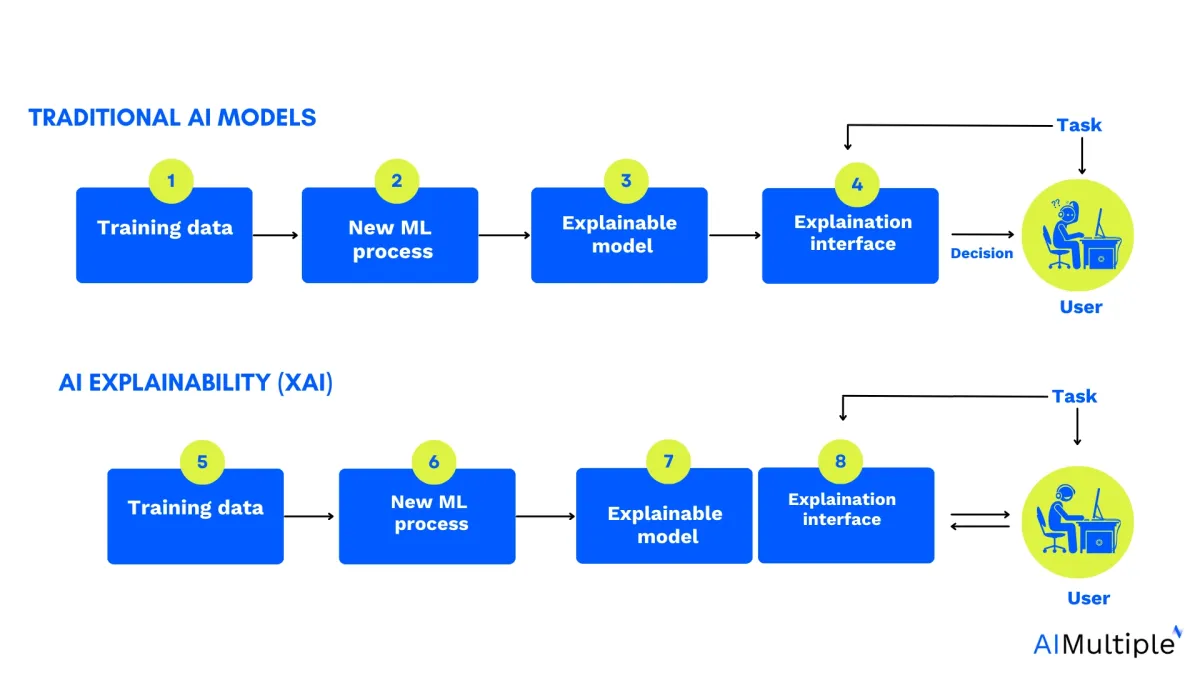

4. Transparency

Transparency, interpretability, or explainability of AI systems is a must in industries such as healthcare and insurance, where businesses must comply with industry standards or government regulations. Beyond compliance, being able to interpret why AI models produce specific outputs matters for all businesses and users, because it lets them understand and trust AI systems.

A transparent AI system can help businesses:

- Explain and defend business-critical decisions,

- Run “what-if” scenarios,

- Ensure that the models work as intended,

- Ensure accountability in case of unintended results.

Real-life example

Clearview AI, a U.S.-based facial recognition company, built a database of over 30 billion images scraped from the internet to identify individuals for law enforcement and private clients. It was fined €30.5 million by the Dutch Data Protection Authority for violating privacy and transparency principles under GDPR, such as:

- No consent: Collected biometric data (facial features) without users’ knowledge or permission.

- Lack of transparency: Individuals weren’t informed their images were used or how they were processed.

- Sensitive data: The database included images of minors.

- Non-compliance: Operated in the EU without a local representative or a lawful basis for processing data.6

Use cases

Explainable AI can help build transparency and trust in the decision-making processes in various sectors, such as:

- Healthcare by aiding physicians in understanding the rationale behind AI’s disease diagnoses and treatment suggestions.

- Finance by providing transparency, fostering trust among stakeholders in fraud detection and investment advice.

- Automotive by helping clarify the decision-making processes in autonomous vehicles, enhancing safety and reliability.

- Marketing & Sales by ensuring that AI-driven insights for customer segmentation, sales forecasting, and ad targeting are transparent, supporting strategic decisions.

- Cybersecurity by explaining the reasoning behind AI’s threat detection, improving cybersecurity management.

Best practices to ensure transparency

- Use a small set of inputs that is necessary for the desired performance of the model. This can make it easier to accurately pinpoint where the correlation or the causation between variables comes from.

- Give explainable AI methods priority over models that are hard to interpret (i.e., black box models).

- Discuss the required level of interpretability with domain experts and stakeholders.
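As a minimal sketch of what an interpretable model with a small set of inputs looks like, the example below uses a linear scoring model whose output can be broken down into per-feature contributions and probed with “what-if” changes. The feature names, weights, and bias are hypothetical values chosen for illustration, not taken from any real scoring system.

```python
# Hypothetical weights for an interpretable linear credit-scoring model.
WEIGHTS = {"income_k": 0.5, "years_employed": 2.0, "missed_payments": -10.0}
BIAS = 20.0


def explain(applicant: dict) -> dict:
    """Return each feature's contribution (weight * value) to the score."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


def score(applicant: dict) -> float:
    """Score is the bias plus the sum of all feature contributions."""
    return BIAS + sum(explain(applicant).values())


def what_if(applicant: dict, **changes) -> float:
    """Return how much the score changes if some inputs were different."""
    return score({**applicant, **changes}) - score(applicant)


# Made-up applicant for illustration.
applicant = {"income_k": 60, "years_employed": 4, "missed_payments": 2}

print("score:", score(applicant))
print("contributions:", explain(applicant))
print("change with no missed payments:", what_if(applicant, missed_payments=0))
```

Because every contribution is a single multiplication, a reviewer can see exactly which input drove a decision. A black box model would need a separate explanation method to approximate the same information.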

Responsible AI tools

The responsible AI software market landscape includes various tools that deliver responsible AI frameworks.

Feel free to check our data-driven lists of AI consultants and data science consultants. You can also check TensorFlow’s Responsible AI Toolkit ecosystem, which can help businesses adopt responsible AI practices.

To ensure these tools align with ethical values, organizations should adopt tools that comply with their AI policy, covering safety, fairness, transparency, and accountability.

How to identify if an AI tool is responsible?

Business users may use AI tools, such as an HR team using an LLM-based algorithm to review applicant profiles, speeding up recruitment by filtering CVs based on experience and education. However, if the tool violates fairness principles, it could discriminate against certain groups. Unaware of this bias, users may reject candidates based on gender or race, leading to ethical and reputational issues for the organization.

To prevent such problems, companies should adopt tools aligned with responsible AI principles. They can evaluate benchmarks, examine user reviews, and study real-life examples or case studies to ensure ethical AI usage. The table below shows the AILuminate benchmark results for some of the top LLMs:

| AI system | Grade |
|---|---|
| Claude 3.5 Haiku 20241022 | 4 |
| Gemma 2 9b | 4 |
| Phi 3.5 MoE Instruct | 4 |
| Gemini 1.5 Pro | 3 |
| GPT-4o | 3 |
| GPT-4o mini | 3 |
| Llama 3.1 405B Instruct | 3 |
| Llama 3.1 8b Instruct FP8 | 3 |
| Phi 3.5 Mini Instruct | 3 |
| Ministral 8B 24.10 | 2 |
| Mistral Large 24.11 | 2 |
| OLMo 7b 0724 Instruct | 1 |

Systems are evaluated both overall and for each hazard using a 5-point scale: Poor (1), Fair (2), Good (3), Very Good (4), and Excellent (5). The ratings are determined by the percentage of responses that fail to meet the assessment standards.7
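One simple way to use such grades during tool selection is to set a minimum bar and shortlist the systems that meet it. The sketch below does this with a subset of the grades from the table above; the helper name `meets_bar` and the chosen minimum grade are assumptions for illustration, and a benchmark grade is only one input alongside user reviews and case studies.

```python
# Labels for the 5-point scale described above.
GRADE_LABELS = {1: "Poor", 2: "Fair", 3: "Good", 4: "Very Good", 5: "Excellent"}

# A subset of the grades listed in the table above.
RESULTS = {
    "Claude 3.5 Haiku 20241022": 4,
    "Gemma 2 9b": 4,
    "Phi 3.5 MoE Instruct": 4,
    "GPT-4o": 3,
    "Mistral Large 24.11": 2,
    "OLMo 7b 0724 Instruct": 1,
}


def meets_bar(results: dict, minimum_grade: int) -> list:
    """Return the systems graded at or above the minimum, sorted by name."""
    return sorted(name for name, grade in results.items() if grade >= minimum_grade)


# Hypothetical policy: only consider systems rated "Very Good" or better.
shortlist = meets_bar(RESULTS, minimum_grade=4)
for name in shortlist:
    print(f"{name}: {GRADE_LABELS[RESULTS[name]]}")
```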

The AI Safety Summit

The AI Safety Summit is a leading international conference focused on the safety, risks, and regulation of advanced “Frontier AI” systems. The inaugural event was held in November 2023 at Bletchley Park, UK, bringing together governments, AI companies, civil society, and experts from 28 countries to coordinate global AI safety efforts.

Key Outcomes

- Bletchley Declaration: Participating nations committed to developing safe, human-centric, trustworthy, and responsible AI based on shared safety principles.

- International Cooperation: Emphasized urgent collaboration to mitigate risks such as misuse, societal disruption, and loss of control over advanced AI.

- Transparency and Regulation: Called for transparency, rigorous safety testing, and adaptive regulatory frameworks to keep pace with AI advancements.

Purpose and Vision

The summit promotes responsible AI development by fostering multi-stakeholder collaboration and ethical governance. It aims to ensure AI benefits society while minimizing harm, highlighting the global responsibility to design, deploy, and govern AI systems that prioritize human safety, ethics, and inclusivity.


External Links

- 1. Global Risk Study. Accenture.

- 2. Fuller, Joseph B.; Raman, Manjari; Sage-Gavin, Eva; Hines, Kristen (2021). “Hidden Workers: Untapped Talent.” Harvard Business School. Retrieved January 20, 2023.

- 3. Buolamwini, J., & Gebru, T. (2018, January). “Gender shades: Intersectional accuracy disparities in commercial gender classification.” In Conference on fairness, accountability and transparency (pp. 77-91). PMLR.

- 4. reuters.com.

- 5. reuters.com.

- 6. “Clearview AI fined €30.5M in the Netherlands over its facial recognition database.” SiliconANGLE.

- 7. “Benchmark for general-purpose AI chat model.” AILuminate. 2024. Retrieved December 6, 2024.
