Measuring AI performance is crucial to ensuring that AI systems deliver accurate, reliable, and fair outcomes that align with business objectives.

It helps organizations validate the effectiveness of their AI investments, detect issues like bias or model drift early, and continuously optimize for better decision-making, operational efficiency, and user satisfaction.

Dive in to learn how to measure AI performance, explore key performance metrics, and discover best practices to follow.

How to measure AI performance?

Measuring AI performance requires a structured approach, combining key performance indicators (KPIs) with technical expertise to assess how well AI systems align with overarching business objectives.

From machine learning models that predict customer churn to generative AI models that generate content, each use case demands tailored AI metrics to evaluate impact and quality.

Define AI KPIs

The first step in AI measurement is to define KPIs that bridge the gap between business goals and technical performance. These key metrics must reflect the model’s ability to make accurate predictions and its effect on operational efficiency, user engagement, and customer satisfaction.

For example, business leaders may track cost savings, increased AI automation, or new valuable insights derived from predictive models as part of their AI initiatives.

To align AI strategy with outcomes, organizations must focus on multiple metrics that account for both technical model performance and business impact. These might include financial metrics, user interaction rates, or process optimization improvements enabled by AI capabilities.

Direct vs. indirect metrics

When evaluating AI performance, it’s important to distinguish between direct and indirect metrics. These two categories help organizations assess their AI initiatives’ technical success and business value.

Direct metrics: Technical evaluation of AI models

| Metric Type | Applicable Model Type | Key Metrics | What It Measures | Use Cases |
|---|---|---|---|---|
| Direct Metrics (Technical) | Classification Models | Precision, Recall, F1 Score, False Positive Rate | Compares predicted class labels to actual labels (ground truth) | Email spam detection, fraud detection, medical diagnosis |
| Direct Metrics (Technical) | Regression Models | Root Mean Squared Error (RMSE), Squared Error, Mean Absolute Error (MAE) | Measures deviation between predicted and actual continuous values | Price prediction, demand forecasting, energy consumption models |
| Direct Metrics (Technical) | Any AI Model | Model Accuracy, Model Loss, AUC-ROC, Confusion Matrix | Overall model performance and prediction confidence | General-purpose evaluation for binary/multiclass models |

Direct metrics, also known as technical metrics, evaluate the AI model’s output by comparing it to the ground truth. They are essential for determining the model’s ability to make accurate predictions and for ensuring high model quality.

For classification models: Used when the target variable consists of discrete labels (e.g., spam vs. not spam, fraud vs. non-fraud). Key classification metrics include:

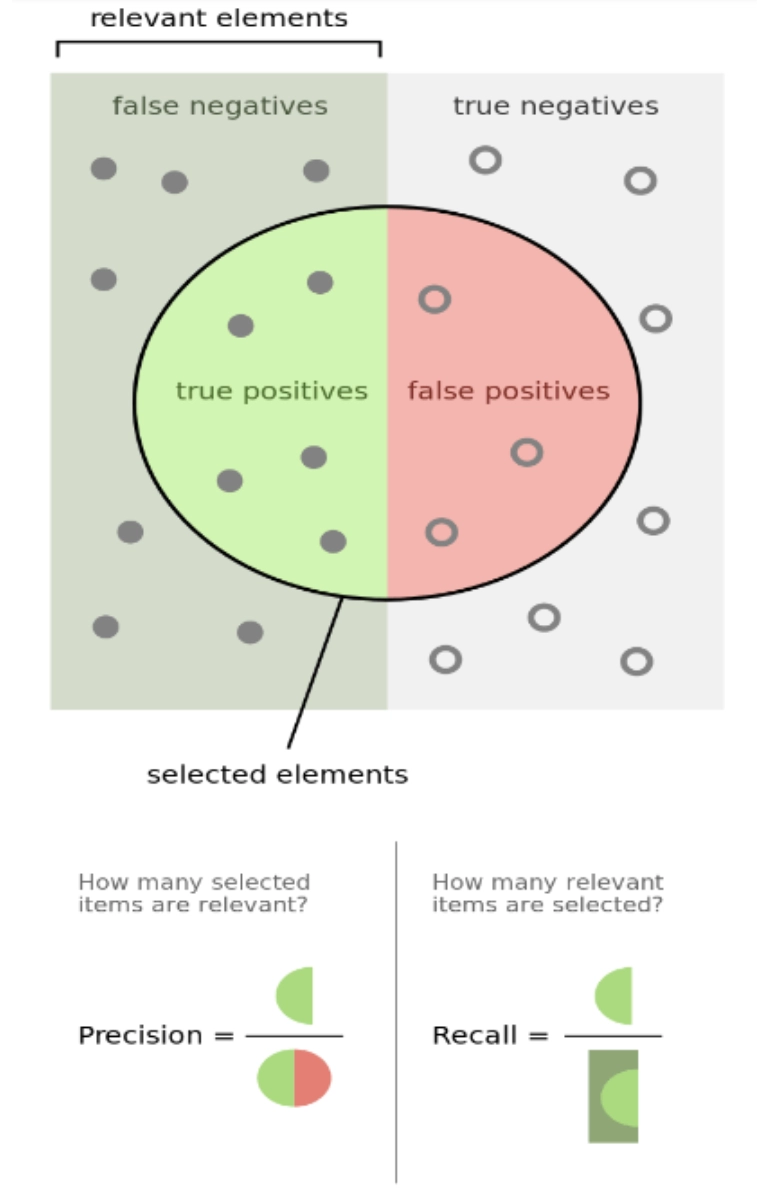

- Precision: Measures the proportion of positive predictions that were actually correct. Useful when the cost of false positives is high (e.g., spam filtering, where flagging a legitimate email is costly).

- Recall: Measures the proportion of actual positives that were correctly identified. Important when missing a true positive is costly (e.g., fraud detection).

- F1 Score: Harmonic mean of precision and recall. Balances the trade-off between false positives and false negatives.

- False Positive Rate (FPR): Percentage of negative instances incorrectly classified as positive. Useful for understanding potential risks, especially in AI systems deployed in sensitive domains like finance or healthcare.

Figure 1: An example explaining the concepts of precision and recall by showing how selected elements (predicted positives) overlap with relevant elements (actual positives), defining true positives, false positives, false negatives, and true negatives.
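As a rough illustration of the four classification metrics above, here is a minimal Python sketch that tallies the confusion-matrix cells for a binary problem and derives precision, recall, F1, and FPR from them. The labels are made-up sample data, and the helper names (`count_outcomes`, `classification_metrics`) are illustrative rather than taken from any library.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # predicted positive, actually positive
    fp: int  # predicted positive, actually negative
    fn: int  # predicted negative, actually positive
    tn: int  # predicted negative, actually negative


def count_outcomes(y_true, y_pred, positive=1):
    """Tally the four confusion-matrix cells for a binary problem."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return ConfusionCounts(tp, fp, fn, tn)


def safe_div(numerator, denominator):
    """Return 0.0 instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(c):
    precision = safe_div(c.tp, c.tp + c.fp)  # correct share of predicted positives
    recall = safe_div(c.tp, c.tp + c.fn)     # found share of actual positives
    f1 = safe_div(2 * precision * recall, precision + recall)  # harmonic mean
    fpr = safe_div(c.fp, c.fp + c.tn)        # negatives wrongly flagged
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}


# Made-up labels: 1 = spam, 0 = not spam.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

counts = count_outcomes(y_true, y_pred)
metrics = classification_metrics(counts)
print(counts)
print(metrics)
```

In this sample, one missed positive and one false alarm give precision and recall of 0.75 each, while the FPR is 1 in 6 because there are more actual negatives than positives.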

For regression models: Used when the target variable is continuous (e.g., house prices, temperature prediction, stock values). Common regression metrics include:

- Root Mean Squared Error (RMSE): Measures the square root of the average squared differences between predicted and actual values. Heavily penalizes large errors, making it ideal for models where large deviations are critical.

- Squared Error: The raw squared difference between the predicted and actual values. Useful for emphasizing outlier errors.

- Mean Absolute Error (MAE): Measures the average absolute differences between predicted and actual values. Easier to interpret and less sensitive to outliers compared to RMSE.

These metrics help quantify the system performance and guide continuous improvement during model development and deployment.
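The regression metrics described above can be sketched in a few lines of plain Python. The price figures below are invented for illustration, and the function names are placeholders rather than a library API. One prediction is deliberately far off to show how RMSE reacts more strongly to a large error than MAE does.

```python
import math


def squared_errors(y_true, y_pred):
    """Raw squared difference for each prediction."""
    return [(p - a) ** 2 for a, p in zip(y_true, y_pred)]


def rmse(y_true, y_pred):
    """Square root of the mean squared error."""
    errs = squared_errors(y_true, y_pred)
    return math.sqrt(sum(errs) / len(errs))


def mae(y_true, y_pred):
    """Mean of the absolute differences."""
    return sum(abs(p - a) for a, p in zip(y_true, y_pred)) / len(y_true)


# Invented house prices (in thousands); the last prediction misses by 60.
actual = [200.0, 250.0, 300.0, 400.0]
predicted = [210.0, 240.0, 310.0, 340.0]

print("squared errors:", squared_errors(actual, predicted))
print("RMSE:", rmse(actual, predicted))
print("MAE:", mae(actual, predicted))
```

Here MAE is 22.5 while RMSE is roughly 31.2, because the single 60-unit miss dominates the squared terms.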

Indirect metrics: Business-oriented measures of AI impact

Indirect metrics are not tied directly to the AI model’s output but reflect how AI capabilities impact broader business objectives. These are essential for demonstrating that AI investments are delivering real-world value.

- Customer satisfaction scores: Measures how well the AI system contributes to user experience and satisfaction (e.g., chatbot effectiveness).

- User input frequency: Tracks how often users interact with an AI system (e.g., voice assistants, recommendation systems). This indicates user trust and engagement with the system.

- Revenue growth or cost savings: Assesses the financial return of implementing AI technology in areas like AI automation or predictive analytics.

- Operational efficiency improvements: Evaluates time saved, reduced manual work, or process optimizations resulting from integrating AI models into business processes.

- Employee productivity or retention: When AI initiatives automate repetitive tasks, human employees can focus on more strategic work, improving job satisfaction and retention.

While indirect metrics don’t serve as evaluation metrics for the model’s predictive capabilities, they provide a comprehensive understanding of how AI influences organizational performance. These metrics help business leaders ensure that AI is technically effective and strategically aligned.

Ethics metrics

AI ethics metrics, also known as responsible AI metrics, are a set of evaluation metrics used to assess whether an AI model upholds fairness, avoids discrimination, and maintains transparency in its decision-making process.

These metrics help organizations ensure that their AI initiatives align with legal regulations, societal norms, and their own business values.

They are especially critical in high-impact machine learning models, where unfair decisions can lead to reputational, legal, or financial risk.

Why do they matter?

- Promote fairness: AI systems can unintentionally replicate or amplify biases in training data. Ethics metrics help detect and mitigate this by highlighting disparities in outcomes across demographic groups.

- Support regulatory compliance: With growing regulatory scrutiny (e.g., GDPR, the EU AI Act, U.S. EEOC guidelines), companies are increasingly required to show that their AI models do not discriminate unfairly. Ethics metrics offer a way to demonstrate compliance.

- Align with business values: Ethical AI is part of a strong brand identity. Companies known for fairness and responsibility are more likely to earn customer trust, improve user engagement, and drive long-term AI investments.

Three key principles guide responsible AI: accuracy, accountability, and transparency, each supported by specific metrics that help ensure the development of ethical, reliable, and explainable AI systems.

Accuracy

Accuracy ensures that the AI model’s output reflects real-world facts, particularly in high-stakes applications such as healthcare or finance. One major concern is AI hallucination, where generative AI models produce incorrect or misleading information.

- To address this, organizations should validate outputs for factual correctness before use.

- Metrics for evaluating factual accuracy help reduce the risk of misinformation being delivered to end-users.

Accountability

Accountability ensures that AI decisions can be traced back to their sources, whether a developer, dataset, or organization, so the system remains ethically sound. Key accountability-related metrics include:

- Bias detection score: Assesses if certain groups are unfairly represented in the training data, which could influence the model’s decisions.

- Fairness score: Evaluates how equally the model treats various demographic groups, supporting fair decision-making.

- Model Accountability Index (MAI): Measures how well an AI system complies with legal standards and regulatory policies, ensuring responsible oversight.
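The article does not define how a fairness score is computed, so the following is only one possible reading: a sketch that compares the rate of positive predictions across groups (often called demographic parity). The group labels and predictions are invented, and the function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(y_pred, groups, positive=1):
    """Share of positive predictions within each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        if pred == positive:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest selection rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def demographic_parity_ratio(rates):
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Invented data: five people in group A, five in group B.
groups = ["A"] * 5 + ["B"] * 5
y_pred = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]

rates = selection_rates(y_pred, groups)
print(rates)
print("difference:", demographic_parity_difference(rates))
print("ratio:", demographic_parity_ratio(rates))
```

Which gap counts as acceptable is a policy decision for the organization, so the sketch reports the numbers without applying a threshold.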

Transparency

Transparency involves the ability of both experts and non-experts to understand how a model works and why it makes certain decisions. Important transparency metrics include:

- Explainability score: Measures how interpretable the model’s decisions are. Tools like SHAP and LIME help visualize which features influenced an AI model’s output, making complex machine learning models more understandable.

- Model transparency index: Measures how openly an organization discloses critical information such as training data sources, algorithms used, and any biases or accuracy trade-offs found during development.

Figure 2: Percentage point changes in transparency scores across key dimensions for major AI companies between October 2023 and May 2024, highlighting varied progress, with AI21 Labs and BigCode/HF leading improvements, OpenAI showing no change, and some companies like Stability AI displaying both gains and setbacks. [1]

Example scenarios where ethics metrics can be applied:

IBM AI Fairness 360 (AIF360)

You’ve built a classification model to approve loan applications. You discover that approval rates for female applicants are significantly lower than for male applicants.

What AIF360 can do: It applies demographic parity and equalized odds metrics to identify this bias. It also offers bias mitigation algorithms (e.g., reweighting the training data) to balance outcomes.
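To make the loan scenario concrete, here is a plain-Python sketch of two ideas mentioned above: an equalized odds gap (differences in true positive and false positive rates between groups) and reweighting of training examples. It does not use the AIF360 API. The applicant data is invented, and the weighting formula shown (expected cell count under independence divided by observed count) is one common way to implement reweighting, assumed here for illustration.

```python
from collections import Counter


def rate(numerator, denominator):
    return numerator / denominator if denominator else 0.0


def group_rates(y_true, y_pred, groups):
    """True positive rate and false positive rate for each group."""
    cells = Counter(zip(groups, y_true, y_pred))
    out = {}
    for g in set(groups):
        tp, fn = cells[(g, 1, 1)], cells[(g, 1, 0)]
        fp, tn = cells[(g, 0, 1)], cells[(g, 0, 0)]
        out[g] = {"tpr": rate(tp, tp + fn), "fpr": rate(fp, fp + tn)}
    return out


def equalized_odds_difference(rates):
    """Largest gap in TPR or FPR between any two groups."""
    tprs = [r["tpr"] for r in rates.values()]
    fprs = [r["fpr"] for r in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


def reweighing_weights(y_true, groups):
    """Weight per (group, label) cell so group and label look independent."""
    n = len(y_true)
    group_n = Counter(groups)
    label_n = Counter(y_true)
    cell_n = Counter(zip(groups, y_true))
    return {
        (g, y): (group_n[g] * label_n[y]) / (n * cell_n[(g, y)])
        for (g, y) in cell_n
    }


# Invented applicants: 1 = creditworthy / approved, 0 = not.
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
y_true = [1, 1, 0, 0, 1, 1, 1, 0]
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]

rates = group_rates(y_true, y_pred, groups)
print(rates)
print("equalized odds difference:", equalized_odds_difference(rates))
print(reweighing_weights(y_true, groups))
```

In this sample the model approves only half of the creditworthy female applicants but all of the creditworthy male applicants, and the weights upweight the under-represented cells (creditworthy women, non-creditworthy men) before retraining.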

Fairlearn

You’re deploying a regression model to predict patient health risk scores. Initial results show higher prediction errors for minority patients.

What Fairlearn can do: It reports error metrics (like RMSE or MAE) broken down by demographic group and lets you apply fairness constraints suited to regression (e.g., bounded group loss) during training.
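The per-group error comparison in this scenario can be approximated without any fairness library. The sketch below is plain Python, not Fairlearn code, and the risk scores and group names are invented. It computes MAE and RMSE separately for each group so that a gap like the one described becomes visible.

```python
import math
from collections import defaultdict


def errors_by_group(y_true, y_pred, groups):
    """MAE and RMSE computed separately for each group."""
    buckets = defaultdict(list)
    for actual, predicted, group in zip(y_true, y_pred, groups):
        buckets[group].append(predicted - actual)
    report = {}
    for group, errs in buckets.items():
        report[group] = {
            "n": len(errs),
            "mae": sum(abs(e) for e in errs) / len(errs),
            "rmse": math.sqrt(sum(e * e for e in errs) / len(errs)),
        }
    return report


# Invented risk scores in [0, 1]; group names are placeholders.
groups = ["majority"] * 4 + ["minority"] * 2
y_true = [0.2, 0.4, 0.6, 0.8, 0.3, 0.7]
y_pred = [0.25, 0.35, 0.65, 0.75, 0.5, 0.4]

report = errors_by_group(y_true, y_pred, groups)
for group, stats in report.items():
    print(group, stats)
```

Reporting the group size `n` alongside the errors matters in practice, since a large error on a very small group may reflect noise as much as bias.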

Google What-If

You’ve trained a natural language processing (NLP) model to classify customer feedback. You’re unsure if it’s biased against certain ethnic terms or gendered language.

What Google What-If can do: It allows interactive exploration of the model’s output by adjusting user input and comparing how predictions change across groups. You can simulate different ground truth scenarios and see how real-time classification metrics shift.
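The kind of "change the input and compare predictions" check described above can also be scripted. The sketch below is not the What-If Tool; it swaps gendered terms in each text and counts how often a model's label flips. The swap table, the `toy_classifier` stand-in (which has a bias planted on purpose), and the feedback strings are all hypothetical.

```python
import re

# Hypothetical swap table; a real audit would need a much richer list.
SWAPS = {"he": "she", "she": "he", "his": "her", "her": "his"}


def swap_terms(text, swaps=SWAPS):
    """Replace each listed term with its counterpart (case is not preserved)."""
    def replace(match):
        word = match.group(0)
        return swaps.get(word.lower(), word)
    return re.sub(r"[A-Za-z]+", replace, text)


def toy_classifier(text):
    """Stand-in for a trained model, with a deliberately planted bias."""
    words = text.lower().split()
    score = sum(w in {"great", "helpful"} for w in words)
    score -= sum(w in {"rude", "slow"} for w in words)
    if "she" in words:
        score -= 1  # planted bias so the check has something to find
    return "positive" if score > 0 else "negative"


def flip_rate(model, texts):
    """Share of texts whose predicted label changes after the term swap."""
    flips = sum(model(t) != model(swap_terms(t)) for t in texts)
    return flips / len(texts)


feedback = [
    "he was helpful",
    "the app is slow",
    "she was great and helpful",
]

for text in feedback:
    swapped = swap_terms(text)
    print(f"{text!r} -> {toy_classifier(text)} | {swapped!r} -> {toy_classifier(swapped)}")
print("flip rate:", flip_rate(toy_classifier, feedback))
```

A non-zero flip rate on inputs that differ only in a gendered term is a signal worth investigating, though a simple word swap like this one will miss subtler forms of bias.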

Best practices in measuring AI performance

Organizations must adopt a strategic, well-rounded approach to measuring AI performance to ensure that AI initiatives deliver technical precision and business value. Here is a checklist to help you align performance measurement with long-term success:

Align KPIs with business objectives

- Identify strategic goals the AI system supports (e.g., cost savings, customer experience, automation).

- Define KPIs that reflect both technical performance and business impact.

- Ensure stakeholders (business leaders, data scientists, product teams) agree on what success looks like.

- Connect model metrics to real-world outcomes (e.g., precision → improved fraud detection → reduced losses).

Utilize a combination of metrics

- Apply direct metrics (e.g., accuracy, F1 score, RMSE) to evaluate model predictions.

- Use indirect metrics (e.g., customer satisfaction, revenue growth) to measure business value.

- Include ethics metrics (e.g., fairness, explainability) for responsible AI governance.

- Consider operational and financial KPIs (e.g., system uptime, deployment costs).

Continuous monitoring and iteration

- Set up automated dashboards to track real-time performance metrics.

- Monitor for model drift, data degradation, or bias emergence.

- Regularly revisit KPIs and update them as business needs or technology evolves.

- Collect and integrate user feedback and new data into retraining cycles.

- Support a culture of continuous improvement across AI teams.
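As one way to make the drift monitoring item in the checklist above concrete, the sketch below compares the distribution of a model score at training time with the distribution seen in production, using the Population Stability Index (PSI). PSI is not mentioned in this article; it is used here as an assumed example of a drift statistic. The bin edges, the score samples, and the `ALERT_THRESHOLD` value are hypothetical.

```python
import math


def bin_shares(values, edges, eps=1e-6):
    """Share of values per bin; values outside the edges are not counted."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            # Bins are half-open [lo, hi), except the last, which includes hi.
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    # Floor each share at eps so the logarithm below is always defined.
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index between a baseline and a live sample."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]
ALERT_THRESHOLD = 0.2  # hypothetical cut-off; tune per use case

baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live_scores = [0.6, 0.7, 0.8, 0.9, 0.7, 0.8, 0.9, 0.95]

drift = psi(baseline_scores, live_scores, EDGES)
print("PSI:", drift)
if drift > ALERT_THRESHOLD:
    print("Drift alert: review the model and consider retraining.")
```

A check like this could run on a schedule and feed the dashboards mentioned above, with the same pattern applied to input features as well as output scores.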

Reference Links

Cem's work has been cited by leading global publications including Business Insider, Forbes, Washington Post, global firms like Deloitte, HPE and NGOs like World Economic Forum and supranational organizations like European Commission. You can see more reputable companies and resources that referenced AIMultiple.

Throughout his career, Cem served as a tech consultant, tech buyer and tech entrepreneur. He advised enterprises on their technology decisions at McKinsey & Company and Altman Solon for more than a decade. He also published a McKinsey report on digitalization.

He led technology strategy and procurement of a telco while reporting to the CEO. He has also led commercial growth of deep tech company Hypatos that reached a 7 digit annual recurring revenue and a 9 digit valuation from 0 within 2 years. Cem's work in Hypatos was covered by leading technology publications like TechCrunch and Business Insider.

Cem regularly speaks at international technology conferences. He graduated from Bogazici University as a computer engineer and holds an MBA from Columbia Business School.
