Large vision models (LVMs) can automate and improve visual tasks such as defect detection, medical diagnosis, and environmental monitoring.

We benchmarked three object detection models: YOLOv8n, DETR, and GPT-4o Vision, across 1,000 images each, measuring metrics such as mAP@0.5, inference speed, FLOPs, and parameter count. To ensure a fair comparison, all images were resized to 800×800 pixels and evaluated using identical preprocessing, confidence thresholds, and IoU-based matching criteria.

Object detection benchmark: GPT-4o (Vision), YOLOv8n, DETR

mAP@0.5: Mean Average Precision at an Intersection over Union (IoU) threshold of 0.5. A predicted box counts as correct when it overlaps a ground-truth box with an IoU of at least 0.5; average precision is computed per class and then averaged across all classes.

Latency (ms): The average processing time per image, measured in milliseconds. Lower values indicate a faster model.
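To make the IoU threshold concrete, here is a minimal sketch of the overlap calculation for two boxes in [x1, y1, x2, y2] format. The function name `iou` and the sample boxes are illustrative assumptions, not code from our benchmark.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as [x1, y1, x2, y2]."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# A prediction shifted 10 px to the right still matches at the 0.5 threshold.
print(iou([0, 0, 100, 100], [10, 0, 110, 100]))  # about 0.818

# A prediction shifted 50 px overlaps only a third of the union, so it is not a match.
print(iou([0, 0, 100, 100], [50, 0, 150, 100]))  # about 0.333
```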

Benchmark Results

GPT-4o’s object detection capabilities remain limited compared to specialized models like YOLOv8n and DETR.

Accuracy:

- DETR: mAP@0.5 = 0.55

- YOLOv8n: 0.20

- GPT-4o: 0.02

These results indicate that GPT-4o is not yet suitable for practical object detection tasks.

Latency:

- YOLOv8n: 365 ms

- DETR: 3145 ms

- GPT-4o: 5150 ms

YOLOv8n offers the fastest inference but lower accuracy, while DETR achieves better accuracy at the cost of slower processing.

All models were evaluated using 800×800 input images for consistency. Parameter counts and FLOPs were available for YOLOv8n and DETR but not for GPT-4o, preventing a complete comparison of computational efficiency.

Model Complexity:

- DETR: 41.52M parameters, 59.56 GFLOPs

- YOLOv8n: 3.15M parameters, 6.83 GFLOPs

This shows YOLOv8n’s efficiency for real-time applications, while DETR trades speed for higher accuracy and greater computational demand. The lack of architectural details for GPT-4o limits deeper efficiency analysis.
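The trade-off can be read off the reported numbers directly. The short sketch below only rearranges the figures listed above into ratios and throughput; the dictionary layout and helper names are our own illustrative choices.

```python
# Figures copied from the results above (GPT-4o is omitted because
# its parameter count and FLOPs are not available).
results = {
    "YOLOv8n": {"map50": 0.20, "latency_ms": 365, "params_m": 3.15, "gflops": 6.83},
    "DETR": {"map50": 0.55, "latency_ms": 3145, "params_m": 41.52, "gflops": 59.56},
}


def ratio(metric):
    """How many times larger DETR's value is than YOLOv8n's for a metric."""
    return results["DETR"][metric] / results["YOLOv8n"][metric]


def throughput(latency_ms):
    """Images per second implied by a per-image latency in milliseconds."""
    return 1000.0 / latency_ms


for metric in ("map50", "latency_ms", "params_m", "gflops"):
    print(f"DETR / YOLOv8n {metric}: {ratio(metric):.2f}x")

for name, row in results.items():
    print(f"{name}: {throughput(row['latency_ms']):.2f} images/s")
```

In these numbers, DETR's latency is roughly 8.6 times that of YOLOv8n, which is close to its roughly 8.7 times higher FLOP count, while its mAP@0.5 is 2.75 times higher.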

See our benchmark methodology.

Possible reasons behind the differences in performance

The three models showed different levels of accuracy and speed because they are built for different purposes and process visual information in distinct ways. GPT-4o is a multimodal large language model that accepts both text and images, whereas YOLOv8n and DETR are object detection systems that operate only on images.

GPT-4o interprets visual inputs through a language-driven reasoning pipeline. It can describe scenes and identify objects, but it is not designed to draw bounding boxes or perform high-precision spatial localization.

Its outputs depend on multimodal reasoning rather than detection-specific mechanisms. This makes it slower and less accurate for detection tasks.

YOLOv8n and DETR use architectures explicitly created for object detection. They generate bounding boxes directly rather than reasoning about them.

YOLOv8n is optimized for speed with a lightweight structure, while DETR is a transformer-based detector that processes images differently from YOLO and aims for more accurate predictions.

Both models focus solely on visual inputs and follow training objectives that map image patterns to object locations.

Key differences include:

- Input type

  - GPT-4o: image and text

  - YOLOv8n and DETR: image only

- Primary function

  - GPT-4o: multimodal understanding and reasoning

  - YOLOv8n and DETR: object detection

- Output mechanism

  - GPT-4o: does not inherently draw bounding boxes

  - YOLOv8n and DETR: directly predict bounding boxes

Because YOLOv8n and DETR were developed solely for object detection, they naturally perform better in benchmarks focused on accuracy and latency.

GPT-4o’s broad, non-detection-centered multimodal design results in lower mAP and higher inference times when evaluated in the same setting.

Detailed evaluation of large vision models

OpenAI GPT-4o (Vision)

GPT-4o (Vision) is a multimodal extension of OpenAI’s GPT-4, designed to process and generate responses from both text and images.

This capability allows GPT-4o to interpret visual content alongside textual information, enabling a range of applications that require understanding and analyzing images.

- Image interpretation: GPT-4o can analyze and describe the content of images, including identifying objects, interpreting scenes, and extracting textual information from visuals. This makes it useful for tasks like image captioning and content summarization.

- Visual data analysis: The model can interpret charts, graphs, and diagrams, providing insights and explanations based on visual data. This feature is beneficial for data analysis and education applications.

- Multimodal content understanding: GPT-4o can provide more comprehensive responses by combining text and image inputs. It can also enhance applications in social media analysis and content moderation. For example, it can assess sentiment or detect misinformation in posts that include both text and images.

Despite its advanced capabilities, GPT-4o can sometimes produce inaccurate or unreliable outputs. It can misinterpret visual elements, overlook details, or generate incorrect information, requiring human verification for critical tasks.

The model may also reflect biases present in its training data, leading to skewed interpretations or reinforcing stereotypes. This is a concern in sensitive applications where impartiality is crucial, including demographic inference or content moderation.

OpenAI Sora

Sora is a text-to-video model created by OpenAI. It generates short video clips from user prompts and can also extend existing videos. Its underlying technology is an adaptation of the DALL-E 3 model.

Sora is a diffusion transformer, a denoising latent diffusion model that uses a Transformer. Videos are initially generated in a latent space by denoising 3D “patches,” then converted to standard space using a video decompressor.

Re-captioning is used to enhance the training process, in which a video-to-text model generates detailed captions for videos.

With the latest developments, users can now generate videos up to 1080p resolution, with a maximum duration of 20 seconds, and in widescreen, vertical, or square aspect ratios. They can also incorporate their assets to extend, remix, and blend existing content or create new videos from text prompts.

Figure 1: Sora’s video generation example using the prompt: “A wide, serene shot of a family of woolly mammoths in an open desert”.1

Landing AI LandingLens

LandingLens simplifies the development and deployment of computer vision models. It caters to various industries without requiring deep AI or complex programming expertise.

The platform standardizes deep learning solutions, reducing development time and enabling easy global scaling of projects. Without impacting production speed, users can build their own deep learning models and optimize inspection accuracy.

It offers a step-by-step user interface that simplifies the development process.

Figure 2: The diagram illustrates the LandingLens AI workflow, where input images are processed into data, used to train models, deployed via cloud, edge, or Docker, and continuously improved through feedback.2

Stable Diffusion

Stable Diffusion is a deep learning model designed to create high-quality images from textual descriptions:

- Stable Diffusion is based on diffusion. The process begins by adding random noise to an image, and the model then learns to reconstruct the original by reversing this noise.

- This process enables the model to generate entirely new images by refining the noisy input over multiple steps until a clear, coherent image emerges.
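The noising step described above can be sketched in a few lines. This is a simplified illustration that assumes a DDPM-style linear noise schedule (1,000 steps, betas from 1e-4 to 0.02); it is not Stable Diffusion's exact schedule, and the function names are hypothetical.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal variance kept at each step (assumed linear schedule)."""
    alpha_bars, running = [], 1.0
    for step in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * step / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, step, alpha_bars, noise):
    """Forward process: blend clean values with Gaussian noise for a given step."""
    keep = math.sqrt(alpha_bars[step])
    spread = math.sqrt(1.0 - alpha_bars[step])
    return [keep * x + spread * e for x, e in zip(x0, noise)]


alpha_bars = make_alpha_bars()
pixels = [0.2, -0.5, 0.9]  # toy "image" with three values
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in pixels]

slightly_noisy = add_noise(pixels, 10, alpha_bars, noise)
almost_pure_noise = add_noise(pixels, 999, alpha_bars, noise)

# During training, the model sees the noisy values and the step index,
# and is optimized to predict `noise`; generation runs the process in reverse.
```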

Stable Diffusion utilizes a latent diffusion model to improve efficiency. Instead of working directly with high-resolution images, it first compresses them into a lower-dimensional latent space using a variational autoencoder (VAE).

This approach significantly reduces computational demands, allowing the model to run on consumer hardware with GPUs.

Applications:

In addition to generating images from text, Stable Diffusion can be used for various creative tasks, such as:

- Inpainting: Restoring or filling in missing parts of an image.

- Outpainting: Expanding an image’s edges to add more content.

- Image-to-Image Translation: Converting an existing image into a different style or modifying its appearance based on text input.

Midjourney

Midjourney is an art generator that converts text descriptions into high-quality images.

Capabilities

Midjourney Version 7 features a completely rebuilt architecture with significant improvements in quality and functionality.

Image generation: V7 produces upscaled images at 2048 x 2048 pixels with exceptional prompt precision and near-photographic quality. Key improvements include richer textures, accurate rendering of complex elements like hands and faces, and sophisticated understanding of lighting and composition.

Video generation: Creates 5-21 second video clips with high frame-to-frame consistency. The system generates approximately 60 seconds of video from six images in roughly three hours, targeting professional applications in marketing, filmmaking, and content creation.

3D capabilities: Text-to-3D generation using NeRF-like modeling creates volumetric objects and immersive scenes. These features support game development, product visualization, and architectural applications.

Figure 3: Midjourney’s image editing feature.3

DeepMind Flamingo

DeepMind’s Flamingo is a vision-language model designed to understand and reason about images and videos using minimal training examples (few-shot learning). Here are some of the key features:

- Multimodal few-shot learning: Flamingo can perform new tasks efficiently with just a few examples, unlike traditional AI models that require extensive labeled datasets.

- Perceiver Resampler Mechanism: Flamingo uses a “Perceiver Resampler” to process visual inputs efficiently. It compresses image and video data into a format that can be integrated into a large pre-trained language model.

- Vision-Language alignment with Gated Cross-Attention Layers: Special Gated Cross-Attention layers help Flamingo align and integrate visual data with textual reasoning. This alignment is important for understanding image-based conversations.

Flamingo uses frame-wise processing, breaking down videos into key frames and extracting information to efficiently analyze visual elements.

Its context-aware responses help generate captions, descriptions, and answers based on the progression of events within a video to ensure a coherent understanding of the content.

Additionally, Flamingo exhibits temporal reasoning to comprehend sequences, cause-and-effect relationships, and complex interactions over time. This makes it highly effective for video analysis and multimodal reasoning tasks.

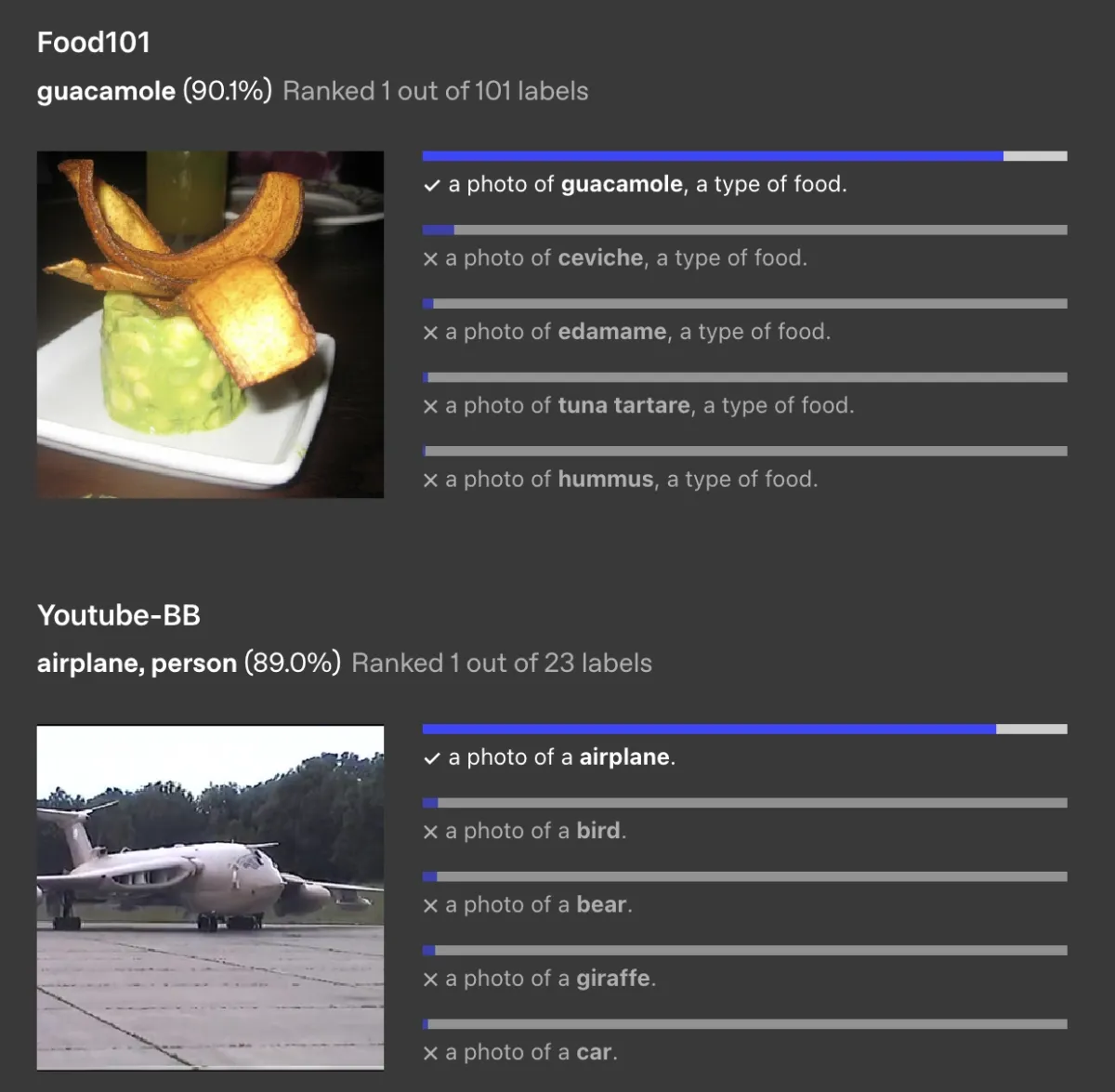

OpenAI’s CLIP (Contrastive Language–Image Pretraining)

CLIP is a neural network trained on a variety of images and text captions.

This model can perform various vision tasks, including zero-shot classification, by understanding images in the context of natural language.

CLIP is trained on 400 million (image, text) pairs to bridge the gap between computer vision and natural language processing. This lets CLIP predict which caption best matches an image, and therefore classify images, without being explicitly trained for those specific tasks.
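Zero-shot classification with a CLIP-style model comes down to comparing one image embedding against the embeddings of candidate captions. The sketch below uses hand-made three-dimensional vectors in place of real encoder outputs; the vectors, the labels, and the temperature value are all assumptions for illustration.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector]


def zero_shot_classify(image_embedding, caption_embeddings, temperature=0.01):
    """Return a probability per caption from the softmax of scaled cosine similarities."""
    image = normalize(image_embedding)
    logits = {
        caption: sum(a * b for a, b in zip(image, normalize(embedding))) / temperature
        for caption, embedding in caption_embeddings.items()
    }
    highest = max(logits.values())  # subtract the max for numerical stability
    exps = {caption: math.exp(value - highest) for caption, value in logits.items()}
    total = sum(exps.values())
    return {caption: value / total for caption, value in exps.items()}


# Toy embeddings standing in for the outputs of the image and text encoders.
captions = {
    "a photo of a dog": [1.0, 0.0, 0.0],
    "a photo of a cat": [0.0, 1.0, 0.0],
    "a photo of a car": [0.0, 0.0, 1.0],
}
probabilities = zero_shot_classify([0.9, 0.1, 0.0], captions)
best_caption = max(probabilities, key=probabilities.get)
print(best_caption)
```

New classes can be added by writing new captions, with no retraining of the model.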

Figure 4: Image identification by CLIP from various datasets.4

Google’s Vision Transformer (ViT)

Vision Transformer applies the transformer architecture originally used in natural language processing to image recognition tasks.

It processes images in a manner similar to how transformers process sequences of words, and it has shown effectiveness in learning relevant features from image data for classification and analysis tasks.

In Vision Transformer, images are treated as a sequence of patches. Each patch is flattened into a single vector, similar to how word embeddings are used in transformers for text.

This approach allows ViT to learn the image’s structure and independently predict class labels.
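The patch step can be sketched without any deep learning library. The example below splits a small image, stored as nested lists, into flattened patches; the function names are hypothetical, and the 224×224 input with 16×16 patches is a commonly used ViT configuration that we assume here for the arithmetic.

```python
def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")

    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    flat.extend(image[row][col])  # append all channel values
            patches.append(flat)
    return patches


def sequence_shape(height, width, channels, patch_size):
    """Number of patches and length of each flattened patch vector."""
    count = (height // patch_size) * (width // patch_size)
    return count, patch_size * patch_size * channels


# A 4x4 single-channel image whose pixel value encodes its position.
tiny = [[[row * 4 + col] for col in range(4)] for row in range(4)]
print(patchify(tiny, 2))  # [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]

# Assumed configuration: 224x224 RGB input, 16x16 patches.
print(sequence_shape(224, 224, 3, 16))  # (196, 768)
```

In the full model, each flattened patch is then projected to an embedding and combined with a position embedding before entering the transformer.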

Video-native foundation models

Video-native foundation models represent a fundamental shift from traditional computer vision approaches. Unlike earlier systems that processed videos as sequences of independent frames, these models treat time as an integral dimension of visual data.

Key architectural innovations

OpenAI’s Sora exemplifies this evolution through its diffusion transformer architecture:

- 3D spatiotemporal patches: Processes video data holistically rather than frame-by-frame

- Temporal consistency: Maintains visual quality and narrative coherence across sequences

- Long-range dependencies: Captures cause-and-effect relationships and motion patterns

- Physics-aware generation: Understands realistic motion and object interactions

Current applications

Content creation:

- Automated video editing and scene synthesis

- Style transfer with temporal consistency

- Narrative video generation from text prompts

Medical imaging:

- Cardiac motion analysis in echocardiograms

- Blood flow visualization in angiography

- Dynamic tissue behavior assessment

Security and surveillance:

- Activity recognition and tracking

- Anomaly detection across time

- Context-aware behavior analysis

Remaining challenges

Despite progress, several limitations persist:

- Computational cost: Long-form video generation remains resource-intensive

- Physical plausibility: Complex scenarios can produce unrealistic physics

- Character consistency: Maintaining identity across extended sequences is difficult

- Training requirements: Large-scale video datasets with annotations are scarce and expensive

On-device inference and edge optimization

Edge deployment enables vision models to run locally on smartphones, IoT devices, and embedded systems, eliminating dependence on cloud infrastructure.

Why edge deployment matters

Privacy benefits:

- Sensitive visual data never leaves the device

- Critical for healthcare, surveillance, and personal applications

- Compliance with data protection regulations

Performance advantages:

- Near-zero latency without network round trips

- Real-time processing for AR and autonomous systems

- Reliable operation in offline environments

Cost efficiency:

- Reduced bandwidth consumption

- Lower cloud computing expenses

- Minimal ongoing operational costs

Model compression techniques

Making large vision models viable on edge devices requires sophisticated optimization:

- Quantization: Reduces precision from 32-bit floating point to 8-bit or 4-bit integers

  - Smaller model size

  - Minimal accuracy loss

- Pruning: Removes redundant parameters and connections

  - Creates sparse networks

  - Maintains performance with fewer computations

- Knowledge distillation: Transfers knowledge from large to small models
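Two of these techniques are simple enough to sketch on a plain list of weights. The example assumes symmetric 8-bit quantization with a single scale per tensor and magnitude-based pruning; production toolchains offer more elaborate variants, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] with one shared scale."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127 if largest > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    drop_count = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(by_magnitude[:drop_count])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, -1.2, 0.01, 0.6]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, worst_error <= scale / 2 + 1e-12)

print(magnitude_prune(weights, 0.5))  # [0.8, 0.0, 0.0, -1.2, 0.0, 0.6]
```

The rounding error per weight is bounded by half the scale, which is why accuracy loss is often small when weight ranges are narrow.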

What is a large vision model (LVM)?

Large vision models (LVMs) are designed to process, analyze, and interpret visual data, such as images or videos. They are characterized by their extensive number of parameters, often in the millions or billions. This enables them to learn intricate patterns, features, and relationships in visual content.

Like large language models (LLMs) for text, LVMs are trained on vast datasets, which equip them with object recognition, image generation, scene understanding, and multimodal reasoning (integrating visual and textual information) capabilities.

These models can support applications in areas such as autonomous driving, medical imaging, content creation, and augmented reality.

Structure and design

Large vision models are built using advanced neural network architectures. Originally, Convolutional Neural Networks (CNNs) were predominant in image processing due to their ability to efficiently handle pixel data and detect hierarchical patterns.

Recently, transformer models, which were initially designed for natural language processing, have also been adapted for many different vision tasks, offering improved performance in some scenarios.

Training

Training large vision models involves feeding them visual data, such as internet images or videos, along with relevant labels or annotations. Human annotators typically label image libraries before they are fed to the models.

For example, in image classification tasks, each image is labeled with the class it belongs to. The model learns by adjusting its parameters to minimize the difference between its predictions and the actual labels.

This process requires significant computational power and a large, diverse dataset to ensure the model can generalize well to new and unseen data.

Figure 5: Large vision models training diagram on OpenAI.5

Check out image data collection services to learn more about training data for image classification.

Key features of large vision models

Model Type refers to a vision model’s architecture and design principles. It defines how the model processes and interprets visual data, whether it integrates multiple modalities (e.g., text and images), and what underlying mechanisms (e.g., transformers, contrastive learning, diffusion) it employs to extract meaningful representations:

- Transformer-based Multimodal LLM: A model architecture that combines transformers with multimodal capabilities. It can process visual and textual inputs together and is trained on large-scale datasets to perform complex reasoning across multiple data types.

- Contrastive Learning: A technique used in training models to differentiate between similar and dissimilar data points. This process involves maximizing the similarity of related inputs while minimizing the similarity of unrelated ones. This is often used in self-supervised learning to improve feature representation.

- AI Vision Platform: This system provides infrastructure, tools, and pre-trained models for various computer vision tasks, such as image recognition, object detection, and segmentation. It typically includes model training, deployment, and inference capabilities.

- Transformer: A deep learning architecture that utilizes self-attention mechanisms to process input data. It allows models to capture long-range dependencies, making it effective for natural language and vision-related tasks.

- Diffusion Model: A generative model that gradually removes noise from an initial noisy input and refines it step by step to produce a clear and structured output. It is commonly used for image generation and enhancement.

Training objective: The goal or optimization function that guides how a model learns from data. It determines how the model adjusts its internal parameters during training to improve performance on specific tasks. Common objectives include predicting the next data point (autoregressive), distinguishing similar from dissimilar inputs (contrastive learning), and classifying images into categories:

- Autoregressive: A training approach where a model predicts the next data point in a sequence based on previously observed inputs. This is often used in language modeling and generative vision models.

- Contrastive Learning: A self-supervised learning objective where the model learns by distinguishing between similar and dissimilar data pairs. It helps improve the ability to capture meaningful representations without explicit labels.

- Supervised Learning: A learning paradigm where the model is trained using labeled data, meaning each input is associated with a corresponding correct output. This approach is widely used in tasks such as classification and segmentation.

- Image Classification: A specific training objective where a model learns to categorize images into predefined classes based on visual features. The training process involves optimizing a loss function to maximize classification accuracy.

- Denoising Diffusion: A generative learning approach where a model is trained to recover clean images from noisy inputs. This process involves reversing a progressive noise addition process to improve image reconstruction and generation.
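As one concrete instance of these objectives, the contrastive objective can be written as a cross-entropy over a similarity matrix in which entry (i, j) compares image i with caption j and the matching pairs lie on the diagonal. This is a minimal sketch under that assumption; the temperature of 0.07 and the function names are illustrative.

```python
import math


def diagonal_cross_entropy(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        highest = max(row)
        log_sum_exp = highest + math.log(sum(math.exp(v - highest) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(similarity, temperature=0.07):
    """Symmetric loss: match images to captions and captions to images."""
    scaled = [[value / temperature for value in row] for row in similarity]
    transposed = [list(column) for column in zip(*scaled)]
    return 0.5 * (diagonal_cross_entropy(scaled) + diagonal_cross_entropy(transposed))


# Matching pairs score highest: the loss is close to zero.
aligned = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Every image is most similar to the wrong caption: the loss is large.
misaligned = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]

print(contrastive_loss(aligned) < contrastive_loss(misaligned))  # True
```

Minimizing this loss pulls matching pairs together and pushes non-matching pairs apart, with no class labels involved.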

Fine-tuning Support: The ability of a model to be adapted to specific tasks by training on smaller, domain-specific datasets while retaining knowledge from its pre-training phase.

Fine-tuning helps improve performance on specialized applications.

Zero-shot/Few-shot Learning: The capability of a model to perform tasks with little to no task-specific training data.

Zero-shot learning allows inference on unseen categories, while few-shot learning enables adaptation with minimal labeled examples.

Multimodal Support: The ability of a model to process and integrate information from multiple modalities (e.g., text, images, audio).

Open-source vs. Proprietary: Open-source models have publicly available code and weights, allowing modification and deployment by the community.

Proprietary models are owned and controlled by private entities, which can limit access and customization.

Edge Deployment: The ability of a model to run on edge devices (e.g., mobile phones, IoT devices) rather than relying on cloud-based servers.

Edge deployment helps reduce latency, enhances privacy, and enables real-time processing in resource-constrained environments.

What are the use cases of large vision models?

Healthcare and medical imaging

- Disease diagnosis: Detecting diseases from medical imagery such as X-rays, MRIs, or CT scans to identify tumors, fractures, or abnormalities.

- Pathology: Analyzing tissue samples in pathology for signs of cancer.

- Ophthalmology: Diagnosing diseases from retinal images.

Autonomous vehicles and robotics

- Navigation and obstacle detection: Helping autonomous vehicles and drones to navigate and avoid obstacles by interpreting real-time visual data.

- Robotics in manufacturing: Helping robots in sorting, assembling, and quality inspection tasks.

Security and surveillance

- Activity monitoring: Analyzing video feeds to detect unusual or suspicious behavior.

- Facial recognition: Used in security systems for identity verification and tracking. For example, Amazon Rekognition is a cloud-based service offered by Amazon Web Services (AWS) that provides image and video analysis features such as face detection and recognition, object and scene identification, and text extraction. It can analyze emotions, age ranges, and activities, which would be useful for personalization and security.


Retail and eCommerce

- Visual search: Allowing customers to search for products using images instead of text.

- Inventory management: Automating monitoring and managing inventory through visual recognition.

Agriculture

- Crop monitoring and analysis: Monitoring crop health and growth using drone or satellite imagery.

- Pest detection: Identifying pests and diseases affecting crops.

Environmental monitoring

- Wildlife tracking: Identifying and tracking wildlife to support conservation efforts.

- Land use and land cover analysis: Monitoring changes in land use and vegetation cover over time.

Content creation and entertainment

- Film and video editing: Automating video editing and post-production processes.

- Game development: Enhancing realistic environment and character creation.

- Photo and video enhancement: Improving the quality of images and videos.

- Content moderation: Automatically detecting and flagging inappropriate or harmful visual content.

What are the challenges of large vision models?

Computational resources

Training and deploying these models require significant computational power and memory, which can make them resource-intensive.

Data requirements

They need vast and diverse datasets for training. Collecting, labeling, and processing such large datasets can be challenging and expensive. However, crowdsourcing companies can help with this.

Bias and fairness

Models can inherit biases present in their training data, leading to unfair or unethical outcomes, particularly in sensitive applications like facial recognition.

Interpretability and explainability

Understanding how these models make decisions can be difficult, which is a concern for applications where transparency is critical. Check out explainable AI to learn how this process works and how it serves AI ethics.

Generalization

While they perform well on data similar to their training set, they may struggle with completely new or different data types.

Privacy concerns

Using large vision models, especially in surveillance and facial recognition, raises significant privacy concerns.

Regulatory and ethical challenges

Ensuring that these models comply with legal and ethical standards is increasingly important, particularly as they become more integrated into society.

Object detection benchmark methodology

In this benchmark, we compared the object detection performance of YOLOv8n, DETR (DEtection TRansformer), and GPT-4o Vision on the COCO 2017 validation dataset, using 1,000 images per model. All images were resized to 800×800 pixels to ensure consistent input dimensions across models.

The YOLOv8n model was loaded with pretrained weights (yolov8n.pt) from the Ultralytics repository and inference was performed using the predict() method via the Ultralytics YOLO API. The DETR model was loaded with the detr_resnet50 architecture from the Facebook Research library, and its outputs, originally normalized in [center_x, center_y, width, height] format, were rescaled and converted to the [x1, y1, x2, y2] coordinate format. A confidence threshold of 0.5 was applied to the results of both models.
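The DETR post-processing step described above can be sketched as follows. The helper names and the sample prediction are hypothetical; only the box formats, the 800×800 image size, and the 0.5 confidence threshold come from the methodology.

```python
def cxcywh_to_xyxy(box, image_width, image_height):
    """Convert a normalized [center_x, center_y, width, height] box to pixel [x1, y1, x2, y2]."""
    center_x, center_y, width, height = box
    return [
        (center_x - width / 2) * image_width,
        (center_y - height / 2) * image_height,
        (center_x + width / 2) * image_width,
        (center_y + height / 2) * image_height,
    ]


def keep_confident(predictions, threshold=0.5, image_size=800):
    """Drop low-confidence predictions and convert the rest to pixel corner format."""
    kept = []
    for label, score, box in predictions:
        if score >= threshold:
            kept.append((label, score, cxcywh_to_xyxy(box, image_size, image_size)))
    return kept


# Hypothetical raw predictions: (label, confidence, normalized box).
raw = [
    ("dog", 0.92, [0.5, 0.5, 0.25, 0.5]),
    ("cat", 0.31, [0.2, 0.2, 0.1, 0.1]),
]
print(keep_confident(raw))  # [('dog', 0.92, [300.0, 200.0, 500.0, 600.0])]
```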

The GPT-4o Vision model was evaluated using OpenAI’s API for object detection capabilities. For this model, images from the COCO validation dataset were downloaded, annotations were loaded, and each image was converted to the appropriate format (resized to 800×800 pixels) before being sent to the API. Only detections belonging to COCO classes were requested in JSON format, and predictions returned by the API were evaluated using the same confidence threshold (0.5) and coordinate format.
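Because a language model returns text rather than tensors, its answer has to be parsed and validated before scoring. The sketch below shows one way to do that. The JSON layout (a list of objects with `label`, `confidence`, and `box` fields) is a hypothetical schema chosen for illustration, not the exact format used in this benchmark, and the class set is only a small subset of the COCO classes.

```python
import json

# Small illustrative subset of the COCO class names.
ALLOWED_LABELS = {"person", "bicycle", "car", "dog", "cat"}


def parse_detections(raw_text, allowed_labels, min_confidence=0.5, image_size=800):
    """Parse a JSON reply and keep only well-formed, confident, in-vocabulary boxes."""
    try:
        items = json.loads(raw_text)
    except json.JSONDecodeError:
        return []  # a malformed reply counts as no detections
    if not isinstance(items, list):
        return []

    kept = []
    for item in items:
        if not isinstance(item, dict):
            continue
        label, score, box = item.get("label"), item.get("confidence"), item.get("box")
        if not isinstance(label, str) or label not in allowed_labels:
            continue
        if not isinstance(score, (int, float)) or score < min_confidence:
            continue
        if not (isinstance(box, list) and len(box) == 4
                and all(isinstance(v, (int, float)) for v in box)):
            continue
        # Clamp coordinates to the image and discard empty boxes.
        x1, y1, x2, y2 = (min(max(float(v), 0.0), float(image_size)) for v in box)
        if x2 > x1 and y2 > y1:
            kept.append({"label": label, "confidence": float(score), "box": [x1, y1, x2, y2]})
    return kept


reply = """[
  {"label": "dog", "confidence": 0.8, "box": [100, 150, 400, 820]},
  {"label": "unicorn", "confidence": 0.9, "box": [0, 0, 50, 50]},
  {"label": "car", "confidence": 0.3, "box": [10, 10, 60, 60]}
]"""
print(parse_detections(reply, ALLOWED_LABELS))
```

Only the first entry survives here: the second uses a label outside the allowed set and the third falls below the confidence threshold.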

In the evaluation, the models’ predicted bounding boxes were compared with ground truth boxes by calculating the Intersection over Union (IoU), with IoU ≥ 0.5 considered a true positive match. Average Precision (AP) was calculated for each class, and the mean of all classes was reported as the mAP@0.5 metric. Besides accuracy, inference times were measured and compared. Additionally, model complexity was analyzed based on FLOPs and total parameter counts.
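The evaluation steps above can be sketched as follows. This is a simplified illustration, not the benchmark script: it assumes greedy matching of score-sorted detections to unmatched ground-truth boxes and all-point interpolation of the precision-recall curve, and all names and sample boxes are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two [x1, y1, x2, y2] boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_threshold=0.5):
    """AP for one class. detections: [(image_id, score, box)]; ground_truths: {image_id: [box]}."""
    total_gt = sum(len(boxes) for boxes in ground_truths.values())
    if total_gt == 0:
        return 0.0
    matched = {image: [False] * len(boxes) for image, boxes in ground_truths.items()}

    hits = []  # True for each detection that claims a ground-truth box
    for image, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_index = 0.0, -1
        for index, gt_box in enumerate(ground_truths.get(image, [])):
            overlap = iou(box, gt_box)
            if not matched[image][index] and overlap > best_iou:
                best_iou, best_index = overlap, index
        is_hit = best_index >= 0 and best_iou >= iou_threshold
        if is_hit:
            matched[image][best_index] = True
        hits.append(is_hit)

    precisions, recalls, true_positives = [], [], 0
    for rank, is_hit in enumerate(hits, start=1):
        true_positives += is_hit
        precisions.append(true_positives / rank)
        recalls.append(true_positives / total_gt)
    # Make precision non-increasing from right to left, then integrate over recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    area, previous_recall = 0.0, 0.0
    for precision, recall in zip(precisions, recalls):
        area += (recall - previous_recall) * precision
        previous_recall = recall
    return area


def mean_average_precision(per_class):
    """per_class: {class_name: (detections, ground_truths)}; returns the mean of per-class APs."""
    scores = [average_precision(dets, gts) for dets, gts in per_class.values()]
    return sum(scores) / len(scores) if scores else 0.0


dog_truth = {"img1": [[0, 0, 100, 100]], "img2": [[0, 0, 50, 50]]}
dog_detections = [
    ("img1", 0.9, [0, 0, 100, 100]),      # correct
    ("img1", 0.8, [200, 200, 300, 300]),  # false positive
    ("img2", 0.7, [0, 0, 50, 50]),        # correct
]
print(average_precision(dog_detections, dog_truth))  # about 0.833
```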

To ensure a fair comparison, all model inferences were performed on the same hardware (identical GPU/CPU). The same preprocessing steps, resizing all images to 800×800 pixels and applying necessary normalization, were applied across all models. For post-processing, predictions were converted to the same coordinate format, a 0.5 confidence threshold was consistently applied, and uniform IoU calculation criteria were adopted during evaluation.

Within this framework, the YOLOv8n, DETR, and GPT-4o Vision models were compared in terms of object detection performance and speed. Additional adjustments and methods were employed so that GPT-4o Vision could be benchmarked against current object detection models.

Conclusion

Large vision models (LVMs) are changing how machines interpret and act upon visual data across various domains, including healthcare, autonomous systems, security, and the creative industries.

By leveraging advanced architectures, such as transformers and diffusion models, LVMs support a wide range of complex tasks, including medical imaging, real-time object detection, text-to-image generation, and video generation.

Their ability to learn from vast, multimodal datasets enables flexible deployment scenarios, ranging from cloud-based inference to edge computing, allowing for tailored applications that span from industrial inspection to personalized content creation.

However, these capabilities come with significant challenges. The computational cost of training and deploying LVMs remains high, often requiring powerful hardware and specialized infrastructure.

Issues such as data bias, limited interpretability, and ethical concerns surrounding surveillance and privacy underscore the need for careful model governance. As LVMs continue to evolve, striking a balance between innovation and responsibility will be crucial to ensure they are utilized effectively and equitably across various sectors.

