As the AI market grows (Figure 1), integrating AI solutions remains challenging due to time-consuming tasks like data collection and annotation.

Many teams use automated annotation tools to streamline the tedious process of data annotation, but robust machine learning models still require human-in-the-loop approaches and human-annotated data. Here, we explore the benefits of human-annotated data and provide recommendations for its use in AI/ML projects.

What is human-annotated data?

Manual annotation by human annotators is one of the most common and effective ways of annotating data. It is a human-driven process in which annotators label, tag, and classify data using data annotation tools to make it machine-readable. The annotated data then serves as training data for AI/ML models, which learn from it to generate insights and perform automated tasks.

Human annotation can be applied to every type of data, such as videos, images, audio, and text. Human annotators can perform a variety of annotation tasks, such as object detection, semantic segmentation, or recognizing the text in an image.

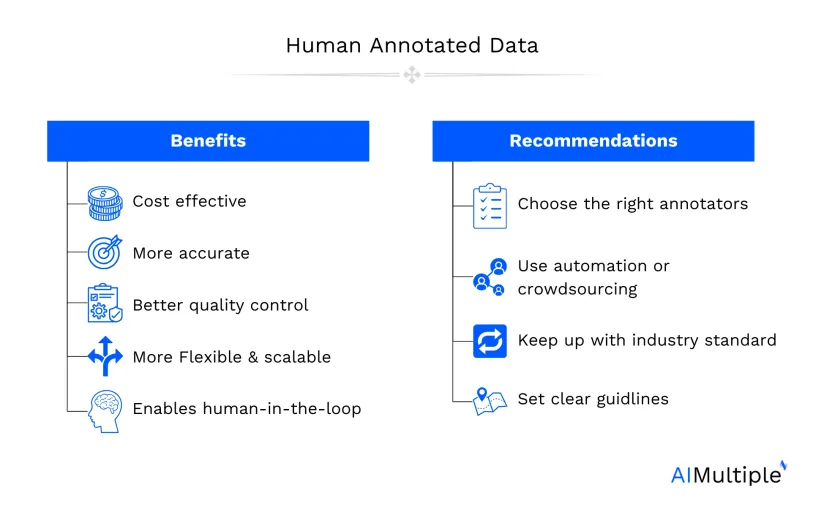

What are the benefits of human-annotated data?

1. Cost-effective

Human annotation is considered one of the most cost-effective methods of data annotation for small and medium-sized datasets. This is mainly because, at that scale, human annotators can be more efficient and accurate than automated tools, resulting in fewer mistakes and lower rework costs. For large datasets, however, manual annotation becomes repetitive and error-prone, and this advantage shrinks.

2. More accurate

Human annotators are trained professionals who can spot tiny details in large images or videos with high accuracy rates. This ensures that the annotated data is reliable for AI/ML project development.

3. Better quality control

Annotators provide feedback on the annotated data, which helps to ensure quality control over the dataset used in AI models and helps avoid false positives or negatives.

Human annotators also perform quality checks on the output of automated labeling tools. For instance, if an automated data labeling tool assigns an incorrect label, it will keep making the same mistake until a human annotator catches and corrects it.

4. More flexible and scalable

Human annotators can easily adapt to new tasks and use their expertise to complete complex tasks quickly and efficiently. This makes human-annotated data even more valuable for AI/ML projects, as it can be used for a variety of applications.

5. Enables human-in-the-loop

Even the most advanced automated labeling tools cannot work fully autonomously and require a human-in-the-loop. Human-annotated data is also needed to develop the auto-labeling models themselves.

Recommendations on using human-annotated data for your AI/ML projects

1. Choose the right annotator

Select experienced human annotators with the right skills and qualifications for your AI project. Some industry-specific annotation jobs, such as medical data annotation, require specific labeling skills, so make sure to choose the right people for the job.

2. Use automation, outsourcing, or crowdsourcing when necessary

Human annotation can become error-prone if the dataset is large and the number of annotators is limited. In such cases, you can incorporate AI into the process: automated labeling tools can help speed up annotation. However, human annotators should always remain involved to ensure accuracy and quality control.
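One common way to combine automated labeling with human review is to route low-confidence model predictions to annotators. The sketch below is a minimal illustration of that idea, not a specific tool's API: the `Prediction` class, the `route_predictions` function, the sample item IDs, and the 0.9 threshold are all hypothetical and would need to be adapted to your own labeling pipeline.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """A pre-label produced by an automated tool (hypothetical structure)."""
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_predictions(predictions, threshold=0.9):
    """Split pre-labels into an auto-accepted list and a human-review queue.

    The threshold is an assumption; in practice it would be tuned against
    a human-verified sample.
    """
    auto_accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review


# Illustrative, made-up predictions.
predictions = [
    Prediction("img_001", "cat", 0.97),
    Prediction("img_002", "dog", 0.62),
    Prediction("img_003", "cat", 0.91),
]

accepted, review = route_predictions(predictions, threshold=0.9)
print("auto-accepted:", [p.item_id for p in accepted])
print("human review:", [p.item_id for p in review])
```

Because human annotators should stay involved even for confident predictions, a random sample of the auto-accepted items can also be sent for spot checks.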

You can also use outsourcing or crowdsourcing for large-scale datasets, since these methods add annotation capacity without necessarily compromising data quality.

3. Keep up with industry standards

Make sure to stay up-to-date on the latest industry standards and best practices when manually annotating data.

4. Set clear guidelines

Create a set of clear guidelines for human annotators to follow during the data labeling process. This helps ensure accuracy and consistency in the final dataset. Define the annotation criteria before manual annotation starts, including:

- What kind of labels/tags should be used.

- How they should be applied.

- Other relevant details to ensure human annotators accurately complete the task.
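Guidelines like these can also be written down in a machine-checkable form, so that submitted annotations are validated automatically. The following is a minimal sketch for an illustrative sentiment-labeling task; the `GUIDELINE` dictionary, its keys, and `validate_annotation` are hypothetical names chosen for this example, not part of any particular annotation tool.

```python
# Hypothetical guideline for an illustrative sentiment-labeling task.
GUIDELINE = {
    "allowed_labels": {"positive", "negative", "neutral"},  # which labels exist
    "max_labels_per_item": 1,                               # how they are applied
    "require_comment_for": {"neutral"},                     # other relevant details
}


def validate_annotation(annotation, guideline=GUIDELINE):
    """Return a list of guideline violations (an empty list means valid)."""
    problems = []
    labels = annotation.get("labels", [])
    if not labels:
        problems.append("no label given")
    if len(labels) > guideline["max_labels_per_item"]:
        problems.append("too many labels")
    for label in labels:
        if label not in guideline["allowed_labels"]:
            problems.append(f"unknown label: {label}")
        elif label in guideline["require_comment_for"] and not annotation.get("comment"):
            problems.append(f"label '{label}' needs a comment")
    return problems


ok = validate_annotation({"labels": ["positive"]})
bad = validate_annotation({"labels": ["happy", "neutral"]})
print(ok)
print(bad)
```

Running such a check at submission time gives annotators immediate feedback and keeps obviously invalid labels out of the training set.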

Challenges of human-annotated data

1. Time-consuming process

Manual data labeling is a slow, resource-intensive process, especially for large datasets. Scaling human annotation requires a large workforce and proper training, which can be costly.

2. Annotation consistency & subjectivity

Different annotators may interpret data differently, leading to inconsistent labels. Annotation guidelines must be clear and well-defined to reduce subjectivity.
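When several annotators label the same item, one simple way to handle differing interpretations is a majority vote that flags items without sufficient agreement for expert review. The sketch below assumes each item has labels from multiple annotators; the `consolidate` function, the 0.6 agreement threshold, and the sample data are illustrative assumptions.

```python
from collections import Counter


def consolidate(labels_by_item, min_agreement=0.6):
    """Majority-vote each item; flag items whose top label lacks enough support.

    Returns (consensus, disputed): a dict of item_id -> agreed label, and a
    list of item IDs that should go to an expert reviewer.
    """
    consensus, disputed = {}, []
    for item_id, labels in labels_by_item.items():
        if not labels:
            disputed.append(item_id)
            continue
        top_label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            consensus[item_id] = top_label
        else:
            disputed.append(item_id)
    return consensus, disputed


# Illustrative, made-up labels from three annotators per item.
labels_by_item = {
    "review_1": ["positive", "positive", "positive"],
    "review_2": ["positive", "neutral", "positive"],
    "review_3": ["negative", "neutral", "positive"],
}

consensus, disputed = consolidate(labels_by_item)
print(consensus)
print(disputed)
```

Items that end up in the disputed list are often a sign that the guidelines are ambiguous for that kind of example and may need to be revised.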

3. Security & privacy risks

Handling sensitive or proprietary data increases the risk of data leaks and breaches. Data security measures, such as non-disclosure agreements (NDAs), secure work environments, and compliance protocols, are essential.

4. Higher costs for large-scale projects

While human annotation is cost-effective for small datasets, large-scale projects may require significant financial resources. Companies often balance cost and efficiency by integrating AI-assisted labeling with human verification.

5. Annotator fatigue & quality decline

Repetitive annotation tasks can lead to annotator fatigue, resulting in decreased accuracy over time. Implementing quality control mechanisms, such as inter-annotator agreement checks, helps maintain consistency.
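A widely used inter-annotator agreement measure for two annotators is Cohen's kappa, which corrects the raw agreement rate for agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below is a minimal standard-library implementation; the function name and the sample labels are illustrative, and which kappa value counts as acceptable is a project-specific decision.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: derived from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Illustrative, made-up labels for eight images.
annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
annotator_b = ["cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]

kappa = cohens_kappa(annotator_a, annotator_b)
print(f"kappa = {kappa:.2f}")
```

Tracking this score per batch over time can help reveal when agreement starts to drop, for example late in a long annotation session.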

Further reading

- Top 20 Data Labeling Tools: In-depth Guide

- Data Labeling For Natural Language Processing (NLP)

- Top 10 Open Source Data Labeling/Annotation Platforms

- Data Labeling: How to Choose a Data Labeling Partner in 2023
