Big data is changing the real estate industry as more businesses adopt data-driven decision-making strategies to increase profits, improve customer satisfaction, and mitigate market risks. Web scraping enables real estate businesses to collect real-time data from online sources, giving them a large pool of insights about the real estate market, competitors, customer expectations, and the economic status of specific areas and populations.

In this article, we explore sources of real estate data and the benefits and use cases of web scraping in real estate.

What is web scraping in real estate?

Web scraping in real estate is the practice of collecting property and consumer data from websites in order to identify available properties, analyze consumer needs, and optimize prices. Data collected for real estate purposes can include:

- Property: Space, number of rooms, number of floors, type of property (apartment, condo, house), etc.

- Pricing: Price ranges by:

  - Location

  - Property size

  - Pricing type (e.g. rental, sale, mortgage)

- Home buyers / customers: Trending neighborhoods, customers’ reviews of properties and prices, etc.

- Competitors: Property availability on competitor website, price ranges, marketing material.

- Public records: Insurance, loans, and mortgage records, average family income statistics, surveys, etc.
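The categories above can be gathered into one record per scraped listing. The following is a minimal sketch; every field name and the sample values are hypothetical and do not come from any specific website.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class Listing:
    """One scraped property listing. All field names are illustrative."""
    source: str                 # site the listing was scraped from
    property_type: str          # e.g. "apartment", "condo", "house"
    area_sqm: float             # living space in square metres
    rooms: int
    floors: int
    location: str               # neighborhood or postal area
    pricing_type: str           # "rental", "sale", or "mortgage"
    price: float
    review_text: Optional[str] = None  # buyer review, if the site exposes one

    def price_per_sqm(self) -> float:
        """Normalize price by size so listings of different sizes compare."""
        return self.price / self.area_sqm


# Made-up example record.
example = Listing(
    source="example-listings.test",
    property_type="apartment",
    area_sqm=80.0,
    rooms=3,
    floors=1,
    location="Riverside",
    pricing_type="sale",
    price=240_000.0,
)
record = asdict(example)  # plain dict, ready to write to CSV or JSON
```

Keeping a normalized value such as price per square metre on the record makes it easier to compare listings across locations and sizes later on.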

What are the best ways to scrape real estate data?

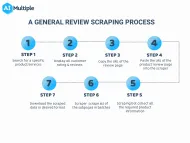

1. In-house web scrapers

Scraping is possible in almost any language, including JavaScript, Python, and Ruby. You can build your web crawlers using web scraping libraries you are already familiar with. If you decide to build your own scraper, familiarity with a programming language should be your primary consideration. For example, less experienced developers may find JavaScript harder to understand than other languages.

You can also use open-source web scrapers free of charge. Open-source web scrapers let developers modify and customize pre-built code to fit their specific scraping needs. Whether you build your own scraper or use an open-source one, you need to support it with a proxy server solution to avoid being blocked by the websites you are scraping.
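To give a sense of what the parsing step of an in-house scraper involves, here is a minimal sketch that uses only Python's standard library. The HTML snippet, tag names, and class names are invented for illustration; real listing pages use different markup, and the page would normally be downloaded (through a proxy) rather than hard-coded.

```python
from html.parser import HTMLParser

# Hypothetical listing markup; real sites use different tags and class names.
SAMPLE_HTML = """
<div class="listing"><span class="address">12 Oak St</span><span class="price">$350,000</span></div>
<div class="listing"><span class="address">7 Elm Ave</span><span class="price">$425,500</span></div>
"""


class ListingParser(HTMLParser):
    """Collects the address and price text from each listing block."""

    def __init__(self):
        super().__init__()
        self.listings = []      # one dict per listing found so far
        self._field = None      # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "div" and css_class == "listing":
            self.listings.append({})
        elif tag == "span" and css_class in ("address", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside an address or price span.
        if self._field and self.listings:
            self.listings[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_price(text):
    """Turn a display price such as '$350,000' into an integer."""
    return int(text.replace("$", "").replace(",", ""))


parser = ListingParser()
parser.feed(SAMPLE_HTML)
prices = [parse_price(item["price"]) for item in parser.listings]
```

Dedicated scraping libraries offer more convenient selectors than this hand-written parser, but the overall flow (fetch, locate elements, clean values) stays the same.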

2. Off-the-shelf web scrapers

Pre-built web scrapers enable both technical and non-technical users to scrape data from a wide range of web sources. For large-scale data extraction projects, these solutions often scale better than in-house ones. Most web scraping services handle scraping challenges and proxy issues without additional development. On the other hand, pre-built web scrapers may be less flexible than code-based solutions.

Pre-built web scraping tools are classified into three types:

- Low code & no code web scrapers

- Cloud web scrapers

- Browser extension web scrapers

Sponsored:

Bright Data’s Data Collector pulls public data from targeted websites in near real-time and delivers it to users automatically in the designated format (XLSX, JSON, CSV, etc.). It can be used, for example, to extract pricing information from competitor websites.

3. Web scraping APIs

Web scraping APIs are another way to obtain data from internal and external sources. If the website you intend to scrape offers an API, you can use it to collect data.
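Collecting data through an API usually comes down to building a request URL with search filters and reading a structured (often JSON) response. The sketch below assumes a hypothetical endpoint, hypothetical parameter names, and a made-up response body; it does not describe any real provider's API and sends no request.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names, for illustration only.
BASE_URL = "https://api.example.com/v1/listings"


def build_request_url(city, pricing_type, max_price):
    """Encode search filters as a query string."""
    query = urlencode({"city": city, "type": pricing_type, "max_price": max_price})
    return f"{BASE_URL}?{query}"


def extract_listings(response_body):
    """Pull the fields of interest out of a JSON response body."""
    payload = json.loads(response_body)
    return [
        {"id": item["id"], "price": item["price"], "type": item["property_type"]}
        for item in payload.get("results", [])
    ]


# Made-up response used in place of a live call.
sample_body = '{"results": [{"id": 1, "price": 1800, "property_type": "condo"}]}'
url = build_request_url("Austin", "rental", 2500)
listings = extract_listings(sample_body)
```

Because the response is already structured, there is no HTML parsing step, which is the main practical advantage of an API over scraping rendered pages.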

Sponsored:

Oxylabs’ real estate scraper API enables users to access and gather real-time data from real estate websites such as Zillow, Redfin, and Zoopla. You can access various types of real estate data, including pricing, location, and property type, without triggering anti-bot mechanisms.

What are the use cases of web scraping in real estate?

Some of the top web data and web scraping use cases in real estate include:

1. Property market research

Web data collected from property listings, real estate agencies’ websites, public insurance and government records can be used for identifying:

- Properties and areas in high demand.

- Ongoing project developments.

- Expectations about the local market.

- Insurance, mortgage, and loan ranges.

2. Price optimization

Competitors’ data about similar properties helps real estate business owners understand current market values and customer expectations, which in turn helps them optimize their prices. For instance, property owners can adopt a competitive pricing model in which they:

- Decrease the price of similar properties to attract more customers.

- Increase the price to reflect a higher value and quality of property.
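The two options above can be sketched as a single rule: take the median price of comparable competitor listings and shift it down or up. This is only an illustrative model; the function name, the adjustment percentages, and the sample prices are assumptions, not a recommended pricing policy.

```python
from statistics import median


def suggest_price(competitor_prices, adjustment=0.0):
    """Return the median competitor price shifted by a relative adjustment.

    adjustment=-0.03 undercuts the median by 3%; adjustment=0.05 adds a
    5% premium for a higher-quality property.
    """
    if not competitor_prices:
        raise ValueError("need at least one comparable listing")
    return round(median(competitor_prices) * (1 + adjustment), 2)


comparables = [300_000, 320_000, 310_000, 450_000]  # made-up sale prices
undercut = suggest_price(comparables, adjustment=-0.03)
premium = suggest_price(comparables, adjustment=0.05)
```

The median is used rather than the mean so that a single unusually expensive listing (450,000 in the sample) does not pull the reference price upward.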

3. Home buyer sentiment analysis

Collecting home buyer reviews and rankings from property listing websites enables businesses to evaluate customers’:

- Neighborhood requirements (e.g. schools, hospitals, facilities).

- Most valuable features of homes (e.g. size, floors, parking space, directions).

- Relationship with realtors and home owners.

- Reasons for moving to or out of certain areas (e.g. safety and crime rates).

This data can help homeowners and real estate agents to better understand current customer requirements and expectations, and optimize marketing and advertisement strategies accordingly.
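A first pass over scraped reviews can simply count how often each topic comes up. The sketch below is keyword counting rather than full sentiment analysis (it does not judge whether a mention is positive or negative), and the keyword groups and sample reviews are made up for illustration.

```python
from collections import Counter
import re

# Hypothetical keyword groups; a real project would tune these lists.
FEATURE_KEYWORDS = {
    "schools": {"school", "schools"},
    "parking": {"parking", "garage"},
    "safety": {"safe", "safety", "crime"},
}


def count_feature_mentions(reviews):
    """Count how many reviews mention each feature group at least once."""
    counts = Counter()
    for review in reviews:
        words = set(re.findall(r"[a-z]+", review.lower()))
        for feature, keywords in FEATURE_KEYWORDS.items():
            if words & keywords:
                counts[feature] += 1
    return counts


reviews = [
    "Great schools nearby and the street feels safe.",
    "No parking, but the school is a short walk.",
    "Moved out because of crime in the area.",
]
mentions = count_feature_mentions(reviews)
```

Topics that appear in many reviews are candidates for closer reading or for a proper sentiment model that scores each mention.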

4. Targeted advertisement

Web scraping through proxies enables users to target websites in specific geographic regions and pull region-specific real estate data (prices, safety, average family income). Understanding price ranges and homebuyer expectations in different areas and neighborhoods helps real estate agents and marketing teams create customized advertisements and offers that target potential homebuyers in specific regions.

There are many types of proxy servers available; learn about the types of proxies and their features.
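Routing requests through a proxy located in the target region can be sketched with Python's standard `urllib.request` module. The proxy addresses and region codes below are placeholders, not real endpoints, and the sketch only builds the opener without sending a request.

```python
import urllib.request

# Hypothetical proxy endpoints; substitute the ones your provider issues.
REGION_PROXIES = {
    "us": "http://us.proxy.example.com:8080",
    "uk": "http://uk.proxy.example.com:8080",
}


def proxy_settings(region):
    """Map a region code to the proxy dict that ProxyHandler expects."""
    endpoint = REGION_PROXIES[region]
    return {"http": endpoint, "https": endpoint}


def opener_for_region(region):
    """Build an opener whose requests are routed through the region's proxy."""
    handler = urllib.request.ProxyHandler(proxy_settings(region))
    return urllib.request.build_opener(handler)


uk_opener = opener_for_region("uk")
# uk_opener.open("https://listings.example.com/search?city=Leeds") would then
# fetch the page via the UK proxy; no request is sent in this sketch.
```

Keeping one opener per region makes it straightforward to scrape the same site from several locations and compare the region-specific results.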

5. Market forecasting

Pulling real-time and historical real estate data enables businesses to analyze market cycles, lowest values, peak prices, and purchase trends. Such data helps decision makers predict future trends and forecast sales and ROI.
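As a simple illustration of forecasting from historical data, the sketch below fits a straight line to a price series by least squares and extends it one period ahead. A linear trend is a deliberately basic stand-in that ignores seasonality and market cycles, and the price history is made up.

```python
def fit_linear_trend(values):
    """Least-squares line through (0, values[0]), (1, values[1]), ...

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    variance = sum((x - mean_x) ** 2 for x in range(n))
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def forecast(values, periods_ahead):
    """Extend the fitted line periods_ahead steps past the last observation."""
    slope, intercept = fit_linear_trend(values)
    return intercept + slope * (len(values) - 1 + periods_ahead)


# Made-up median monthly prices, oldest first.
history = [300_000, 302_000, 304_000, 306_000]
next_month = forecast(history, 1)
```

The fitted slope is the average price change per period, which can also serve as a quick indicator of whether an area is trending up or down.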

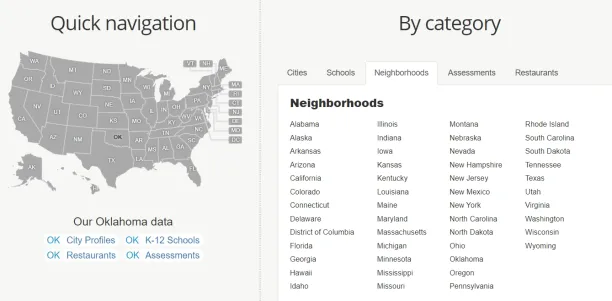

Which websites provide real estate data?

Businesses can scrape the following websites for:

Property value and pricing

- Zillow

- Realtor.com

- Apartments.com

- FSBO (For Sale By Owner)

- HomeSnap

Neighborhood and amenities

- National Multi Housing Council (NMHC)

- City-data.com

Population demographics

- US Census Bureau

- Data.gov

- National Center for Assisted Living

Market data

- National Association of Home Builders

- Fitch Ratings

- National Association of Real Estate Investment Trusts

For more on web scraping

To explore web scraping use cases, feel free to check our in-depth guide about web scraping in finance, recruiting and e-commerce.

To analyze different web scraping tools, feel free to read our in-depth articles about:

- Web Scraping Tools: Data-driven Benchmarking

- Top 5 Web Scraping Case Studies & Success Stories

- Top 18 Web Scraping Applications & Use Cases

And if you believe your business will benefit from a web scraping tool, make sure to scroll through our data-driven list of web crawlers.
