Based on my more than a decade of software development experience including the CTO role at AIMultiple where I lead data collection from ~80,000 web domains, I have selected the top Python web scraping libraries. You can see my rationale behind each selection by following the links:

- Beautiful Soup: For beginners, and those looking for a simple parsing and navigating HTML or XML documents.

- Requests: For those who prefer a high-level API with easy-to-understand syntax.

- Scrapy: For large-scale web scraping projects.

- Selenium: For web scraping dynamic web pages.

- Playwright: For cross-browser automation and testing.

- Lxml: For those who need an efficient parser for large HTML or XML documents.

- Urllib3: For handling basic HTTP requests in a straightforward manner.

- MechanicalSoup: For users who prefer simplicity with basic browser automation.

Beautiful Soup

Requests

Scrapy

Selenium

Playwright

| Library | GitHub Stars | Key Features | License |

|---|---|---|---|

| Beautiful Soup | 84 | HTML/XML parsing Easy navigation Modifying parse tree | MIT |

| Requests | 50.9K | Custom headers Session objects SSL/TLS verification | Apache 2.0 |

| Scrapy | 49.9K | Web crawling Selectors Built-in support for exporting data | 3-Clause BSD |

| Selenium | 28.6K | Web browser automation Supports major browsers, Record and replay actions | Apache 2.0 |

| Playwright | 58.3K | Automation for modern web apps Headless mode Network manipulation | Apache 2.0 |

| Lxml | 2.5K | High-performance XML processing XPath and XSLT support Full Unicode support | BSD |

| Urllib3 | 3.6K | HTTP client library Reusable components SSL/TLS verification | MIT |

| MechanicalSoup | 4.5K | Beautiful Soup integration Browser-like web scraping Form submission | MIT |

Here is more explanation for the best Python web scraping tools & libraries:

1. Beautiful Soup

Beautiful Soup is a Python web scraping library that extracts data from HTML and XML files. 1 It parses HTML and XML documents and generates a parse tree for web pages, making data extraction easy.

Beautiful Soup Installation: You can install Beautiful Soup 4 with “the pip install beautifulsoup4″ script.

Prerequisites:

- Python

- Pip: It is a Python-based package management system.

Supported features of Beautiful Soup:

- Beautiful Soup works with the built-in HTML parser in Python and other third-party Python parsers, such as HTML5lib and lxml.

- Beautiful Soup uses a sub-library like Unicode and Dammit to detect the encoding of a document automatically.

- BeautifulSoup provides a Pythonic interface and idioms for searching, navigating and modifying a parse tree.

- Beautiful Soup converts incoming HTML and XML entities to Unicode characters automatically.

Benefits of Beautiful Soup:

- Provides Python parsers like”lxml” package for processing xml data and specific data parsers for HTML.

- Parses documents as HTML. You need to install lxml in order to parse a document as XML.

- Reduces time spent on data extraction and parsing the web scraping output.

- Lxml parser is built on the C libraries libxml2 and libxslt, allowing fast and efficient XML and HTML parsing and processing.

- The Lxml parser is capable of handling large and complex HTML documents. It is a good option if you intend to scrape large amounts of web data.

- Can deal with broken HTML code.

Challenges of Beautiful Soup:

- BeautifulSoup html.parser and html5lib are not suitable for time-critical tasks. If response time is crucial, lxml can accelerate the parsing process.

Sponsored

Most websites employ detection techniques like browser fingerprinting and web scraping challenges, such as Amazon’s, to prevent users from grabbing a web page’s HTML. For instance, when you send a get request to the target server, the target website may detect that you are using a Python script and block your IP address in order to control malicious bot traffic.

Bright Data provides a residential proxy network with 72+ million IPs from 195 countries, allowing developers to circumvent restrictions and IP blocks.

Check out our research for an in-depth analysis of providers of residential proxies. You can utilize web unblocker technology, an advanced proxy option ideal for sites with stringent anti-bot measures. Explore the top web unblocker solutions.

2. Requests

Requests is an HTTP library that allows users to make HTTP calls to collect data from web sources. 2

Requests Installation: Requests’s source code is available on GitHub for integration into your Python package. Requests officially supports Python 3.7+.

Prerequisites:

- Python

- Pip: You can import Requests library with the “pip install requests” command in your Python package.

Features of Requests:

- Requests automatically decode web content from the target server. There’s also a built-in JSON decoder if you’re working with JSON data.

- It uses a request-response protocol to communicate between clients and servers in a network.

- Requests provides in-built Python request modules, including GET, DELETE, PUT, PATCH and HEAD, for making HTTP requests to the target web server.

- GET: Is used to extract data from the target web server.

- POST: Sends data to a server to create a resource.

- PUT: Deletes the specified resource.

- PATCH: Enables partial modifications to a specified resource.

- HEAD: Used to request data from a particular resource, similar to GET, but does not return a list of users.

Benefits of Requests:

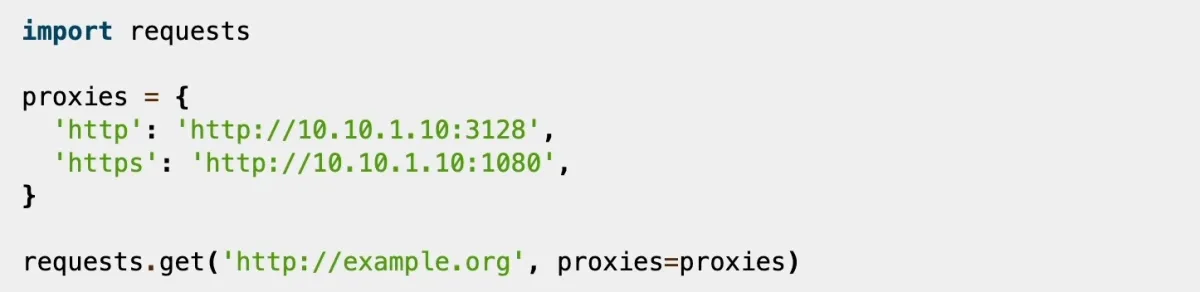

- Requests supports SOCKS and HTTP(S) proxy protocols.

Figure 2: Showing how to import proxies into the user’s coding environment

- It supports Transport Layer Security (TLS) and Secure Sockets Layer (SSL) verification. TLS and SSL are cryptographic protocols that establish an encrypted connection between two computers on a network.

Challenges of Requests:

- It is not intended for data parsing.

- It does not render JavaScript web pages.

3. Scrapy

Scrapy is an open-source web scraping and web crawling framework written in Python.4

Scrapy installation: You can install Scrapy from PyPI by using the “pip install Scrapy” command. They have a step-by-step guideline for installation for more information.

Features of Scrapy:

- Extract data from HTML and XML sources using XPath and CSS selectors.

- Offer a built-in telnet console for monitoring and debugging your crawler. It is important to note that using the telnet console over public networks is not secure because it does not provide transport-layer security.

- Include built-in extensions and middlewares for handling:

- Robots.txt

- User-agent spoofing

- Cookies and sessions

- Support for HTTP proxies.

- Save extracted data in CSV, JSON, or XML file formats.

Benefits of Scrapy:

- Scrapy shell is an in-built debugging tool. It allows users to debug scraping code without running the spider to figure out what needs to be fixed.

- Support robust encoding and auto-detection to handle foreign, non-standard, and broken encoding declarations.

Challenges of Scrapy:

- Python 3.7+ is necessary for Scrapy.

4. Selenium

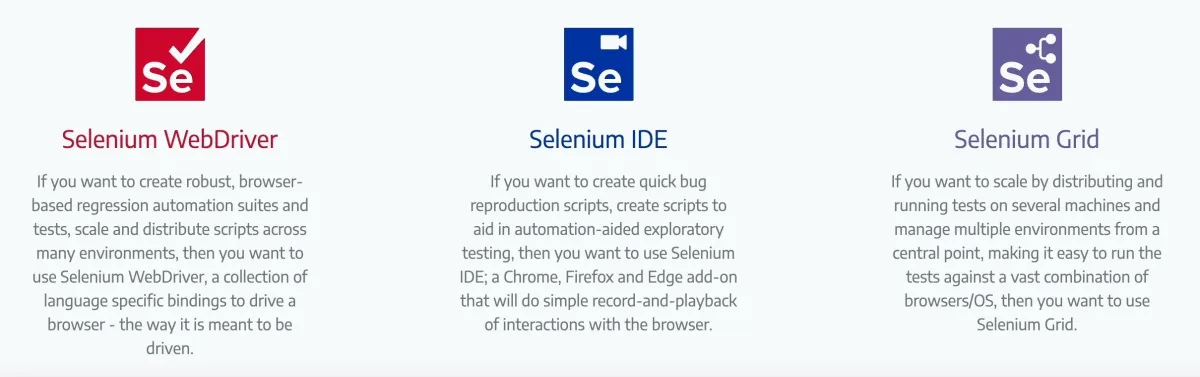

Selenium offers different open-source extensions and libraries to support web browser automation. 5 Its toolkit contains the following:

- WebDriver APIs: Utilizes browser automation APIs made available by browser vendors for browser automation and web testing.

- IDE (Integrated Development Environment): Is a Chrome and Firefox extension for creating test cases.

- Grid: Make it simple to run tests on multiple machines in parallel.

Figure 3: Selenium’s toolkit for browser automation

Prerequisites:

- Eclipse

- Selenium Web Driver for Python

To learn how to setup Selenium, check Selenium for beginners.

Features of Selenium:

- Provides testing automation features

- Capture Screenshots

- Provide JavaScript execution

- Supports various programming languages such as Python, Ruby, node.js. and Java.

Benefits of Selenium:

- Offers headless browser testing. A headless web browser lacks user interface elements such as icons, buttons, and drop-down menus. Headless browsers extract data from web pages without rendering the entire page. This speeds up data collection because you don’t have to wait for entire web pages to load visual elements like videos, gifs, and images.

- Can scrape JavaScript-rich web pages.

- Operates in multiple browsers (Chrome, Firefox, Safari, Opera and Microsoft Edge).

Challenges of Selenium:

- Taking screenshots of PDFs is not possible.

5. Playwright

Playwright is an open-source framework designed for web testing and automation. It is maintained by Microsoft team.7

Features of Playwright:

- Supports search engines such as Chromium, WebKit, and Firefox.

- Can be used popular programming languages including Python, JavaScript, C#, Java and TypeScript.

- Downloads web browsers automatically.

- Provides APIs for monitoring and modifying HTTP and HTTPS network traffic.

- Emulates real devices like mobile phones and tablets.

- Supports for headless and headed execution.

Playwright installation:

Three things are required to install Playwright:

- Python

- Pytest plugin

- Required browsers

Benefits of Playwright:

- Are capable of scraping JavaScript-rendered websites.

- Takes a screenshot of either a single element or the entire scrollable page.

Challenges of Playwright:

- It does not support data parsing.

6. Lxml

Lxml is another Python-based library for processing and parsing XML and HTML content. Lxml is a wrapper over the C libraries libxml2 and libxslt. Lxml combines the speed of the C libraries with the simplicity of the Python API.

Lxml installation: You can download and install the lxml library from Python Package Index (PyPI).

Requirements

- Python 2.7 or 3.4+

- Pip package management tool (or virtualenv)

Features of LXML:

- Lxml provides two different API for handling XML documents:

- lxml.etree: It is a generic API for handling XML and HTML. lxml.etree is a highly efficient library for XML processing.

- lxml.objectify: It is a specialized API for handling XML data in Python object syntax.

- Lxml currently supports DTD (Document Type Definition), Relax NG, and XML Schema schema languages.

Benefits of LXML:

- The key benefit of lxml is that it parses larger and more complex documents faster than other Python libraries. It performs at C-level libraries, including libxml2 and libxslt, making lxml fast.

Challenges of LXML:

- lxml does not parse Python unicode strings. You must provide data that can be parsed in a valid encoding.

- The libxml2 HTML parser may fail to parse meta tags in broken HTML.

- Lxml Python binding for libxml2 and libxslt is independent of existing Python bindings. This results in some issues, including manual memory management and inadequate documentation.

7. Urllib3

Python Urllib is a popular Python web scraping library used to fetch URLs and extract information from HTML documents or URLs. 8 Urllib is a package containing several modules for working with URLs, including:

- urllib.request: for opening and reading URLs (mostly HTTP).

- urllib.parse: for parsing URLs.

- urllib.error: for the exceptions raised by urllib.request.

- urllib.robotparser: for parsing robot.txt files. The robots.txt file instructs a web crawler on which URLs it may access on a website.

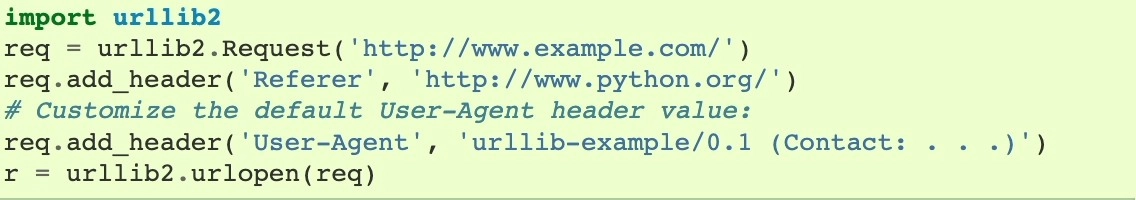

Urllib has two built-in Python modules including urllib2 and urllib3.

- urllib2: Sends HTTP requests and returns the page’s meta information, such as headers. It is included in Python version 2’s standard library.

Figure 4: urllib2 sends request to retrive the target page’s meta information

- urllib3: urllib3 is one of the most downloaded PyPI (Python Package Index) packages.

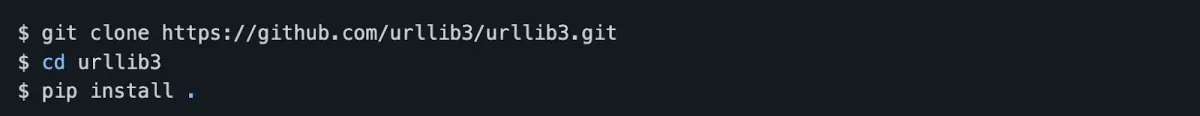

Urllib3 installation: Urllib3 can be installed using pip (package installer for Python). You can execute the “pip install urllib3” command to install urllib in your Python environment. You can also get the most recent source code from GitHub.

Figure 5: Installing Urllib3 using pip command

Features of Urllib3:

- Proxy support for HTTP and SOCKS.

- Provide client-side TLS/SSL verification.

Benefits of Urllib3:

- Urllib3’s pool manager verifies certificates when making requests and keeps track of required connection pools.

- Urllib allows users to access and parse data from HTTP and FTP protocols.

Challenges of Urllib3:

- It might be challenging than other libraries such as Requests.

8. MechanicalSoup

MechanicalSoup is a popular Python library that automates website interaction.11

MechanicalSoup installation: Install Python Package Index (Pypi), then write “pip install MechanicalSoup” script to locate MechanicalSoup on PyPI.

Features of MechanicalSoup:

- Mechanicalsoup uses BeautifulSoup (BS4) library. You can navigate through the tags of a page using BeautifulSoup.

- Automatically stores and sends cookies.

- Utilizes Beautiful Soup’s find() and find all() methods to extract data from an HTML document.

- Allows users to fill out forms using a script.

Benefits of MechanicalSoup:

- Supports CSS and XPath selectors. XPaths and CSS Selectors enable users to locate elements on a web page.

Challenges of MechanicalSoup:

- MechanicalSoup is only compatible with HTML pages. It does not support JavaScript. You cannot access and retrieve elements on JavaScript-based web pages.

- Does not support JavaScript rendering and proxy rotation.

Further reading

If you have more questions, do not hesitate contacting us:

External links

- 1. Beautiful Soup: We called him Tortoise because he taught us..

- 2. Requests: HTTP for Humans™ — Requests 2.32.4 documentation.

- 3. Advanced Usage — Requests 2.32.4 documentation.

- 4. Scrapy 2.13 documentation — Scrapy 2.13.2 documentation.

- 5. In-Depth Guide to Puppeteer vs Selenium in 2025. AIMultiple

- 6. Selenium

- 7. Fast and reliable end-to-end testing for modern web apps | Playwright.

- 8. urllib — URL handling modules — Python 3.13.5 documentation.

- 9. 20.6. urllib2 — extensible library for opening URLs — Python 2.7.18 documentation.

- 10. GitHub - urllib3/urllib3: urllib3 is a user-friendly HTTP client library for Python.

- 11. Welcome to MechanicalSoup’s documentation! — MechanicalSoup 1.4.0 documentation.

Comments

Your email address will not be published. All fields are required.