Imagine a healthcare startup developing an AI system to detect rare diseases, but there’s a problem: there’s not enough labeled data to train a traditional machine learning model. This is where few-shot learning (FSL) comes in.

From diagnosing medical conditions to improving natural language processing, few-shot learning is changing how AI adapts to new challenges with minimal data.

Explore how few-shot learning works, its key techniques and applications, and what the future holds for few-shot learning.

What is few-shot learning (FSL)?

Few-shot learning is a machine learning approach that enables training models to perform well with only a few examples rather than relying on large amounts of task-specific data. Unlike supervised learning, which requires a significant number of labeled samples, few-shot learning approaches allow machine learning models to generalize to new tasks with minimal training data.

Few-shot learning belongs to the broader category of shot learning:

- Few-shot learning: Learns from only a handful of labeled examples.

- One-shot learning: Learns from a single prompt.

- Zero-shot learning: Makes predictions without labeled data, relying on pre-existing knowledge.

These approaches leverage meta learning and transfer learning to adapt to new classes and related tasks using pre-existing knowledge from pre-training. Methods such as prototypical networks and embedding space representations help enhance a model’s ability to handle unstructured data in various tasks, including natural language processing (NLP) tasks, content creation, and question answering.

The role of prompt engineering in few-shot learning

A crucial aspect of few-shot learning work is prompt engineering, where models are given a few-shot prompt or a zero-shot prompt containing concrete examples to guide their responses. This prompt engineering technique is particularly effective for large language models, which can be fine-tuned with additional context rather than requiring more data or additional training.

The primary goal of few-shot learning is to reduce reliance on specific training data while maintaining accuracy in test set performance, making it a powerful tool for efficiently adapting AI to new examples and specific tasks.

Learning through Q learning in few-shot scenarios

Although Q learning is primarily used in reinforcement learning settings, its principles can be extended to few-shot learning tasks where decision-making is required.

For instance, when a model must learn optimal actions with limited feedback, Q learning mechanisms help update action-value estimates.

In environments such as robotics or sequential decision problems, this integration allows models to learn through exploration, even with scarce labeled data.

Leveraging distributed representations for generalization

Distributed representations are key in enabling few-shot learning models to generalize across tasks. Models can capture semantic relationships between classes by encoding input data into high-dimensional vector spaces.

Distributed representations, learned during pre-training, are a foundation for comparing new instances using metric-based approaches such as prototypical networks and matching networks.

The role of distributed training in few-shot learning

Distributed training becomes essential for accelerating experimentation and optimization as few-shot learning models scale in complexity.

Training across multiple computational nodes allows for parallel processing of diverse tasks and classes, improving convergence rates.

Distributed training is beneficial when employing meta-learning strategies that require frequent updates across many small training episodes.

How does few-shot learning work?

1. Support set creation

- A small set of labeled data (e.g., few-shot prompt) is provided for training.

- This dataset includes examples provided for each class.

2. Query set consists of new data

The model receives a query set (unseen data samples) and must classify them correctly.

3. Meta-Learning and Transfer Learning

- Meta learning teaches models how to learn from few examples by adapting to related tasks.

- Transfer learning helps leverage pre-existing knowledge to recognize new classes without requiring additional training.

4. Embedding space representation

- The model maps input data into an embedding space where similar classes are clustered together.

- Techniques like prototypical networks improve classification by comparing distances in this space.

5. Fine-tuning on target task

The model is adjusted with additional context to better predict outcomes on the test set.

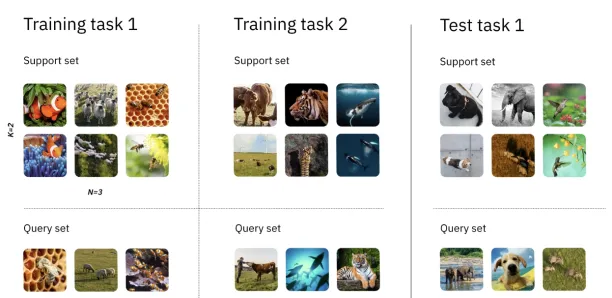

N-Way K-Shot classification in few-shot learning

Few-shot learning often follows an N-way K-shot classification framework, where:

- N represents the number of classes the model must distinguish.

- K represents the number of examples (shots) per class used for training.

Here is how N-Way K-Shot classification works:

- Training with support and query sets: The support set provides K labeled examples for each of the N classes to help the model learn class representations. The query set contains unlabeled examples, and the model must classify them based on the knowledge learned from the support set.

- Different learning scenarios:

- 3-way 2-shot learning: The model is trained with 3 classes, with 2 examples per class.

- One-shot learning (K=1): The model sees only one example per class.

- Zero-shot learning (K=0): The model classifies without any labeled examples, relying on pre-existing knowledge.

- Training and optimization: The model undergoes multiple training episodes, with each episode containing new training tasks using different data classes.

- A loss function measures how well the model classifies the query set.

- After each training step, the model’s parameters are adjusted to minimize loss and improve future performance.

- Generalizing to new classes: Since meta-learning focuses on generalization rather than memorization, the model is trained on different classes in each episode. During testing, the support set and query set contain entirely new classes that the model hasn’t seen before. The model’s success is determined by its ability to correctly classify unseen data based on prior learning.

Figure 1: A 3-way 2-shot classification task example in few-shot learning.1

Why is it important?

Test base for learning like a human

Humans can spot the difference between handwritten characters after seeing a few examples. However, computers need large amounts of data to classify what they “see” and spot the difference between handwritten characters. Few-shot learning is a test base where computers are expected to learn from few examples like humans.

Learning for rare cases

By using few-shot learning, machines can learn rare cases. For example, when classifying images of animals, a machine learning model trained with few-shot learning techniques can classify an image of a rare species correctly after being exposed to small amount of prior information.

Reducing data collection effort and computational costs

As few-shot learning requires less data to train a model, high costs related to data collection and labeling are eliminated. Low amount of training data means low dimensionality in the training dataset, which can significantly reduce the computational costs.

What are the applications of few-shot learning?

Few-shot learning is particularly valuable in scenarios where data collection is challenging or costly. Below are key applications of few-shot learning across various domains:

Computer vision

Few-shot learning addresses an important challenge of computer vision: limited labeled images. It helps facilitating rapid model adaptation in areas such as:

- Image classification & object recognition: Enables models to identify and categorize objects with minimal labeled examples, which is crucial for recognizing rare or new classes.

- Facial recognition: Assists in identifying individuals from a few facial images, enhancing security systems and personalized user experiences.

- Medical imaging: Supports the detection and diagnosis of diseases from limited medical images, aiding in early intervention and treatment planning.

- Autonomous vehicles: Allows self-driving cars to recognize and respond to uncommon obstacles or scenarios by learning from a few instances.

Natural Language Processing (NLP)

In NLP, few-shot learning empowers models to perform language-related tasks with limited textual data:

- Text classification: Enables categorization of text into predefined labels, such as spam detection or topic identification, with minimal labeled examples.

- Sentiment analysis: Assesses the sentiment or emotional tone of text, aiding businesses in understanding customer opinions from a few reviews.

- Machine translation: Facilitates translation between languages with scarce parallel corpora, expanding the reach of information across linguistic barriers.

- User intent classification: Enhances chatbots and virtual assistants in accurately interpreting user intentions from limited interactions, improving response relevance.

Audio processing

Data that contains information regarding voices/sounds can be analyzed by acoustic signal processing and few-shot learning can enable deployment of tasks such as:

- Voice cloning from a few audio samples of the user (e.g. voices in GPS/navigation apps, Alexa, Siri, and more).2

- Voice conversion from one user to another.3

- Voice conversion across different languages.

Robotics

Few-shot learning enables robots to acquire new skills and adapt to tasks with minimal demonstrations:

- Imitation learning: Robots can replicate complex movements or tasks by observing a single demonstration, reducing the need for extensive programming.

- Manipulation actions: Allows robots to learn handling of various objects with few examples, enhancing their utility in dynamic environments.

- Visual navigation: Supports robots in navigating new spaces by learning from limited visual cues, essential for exploration and mapping.

Healthcare

In the healthcare industry, few-shot learning contributes to advancements such as:

- Drug discovery: Accelerates the identification of potential drug candidates by learning from a small number of known compounds.

Internet of Things (IoT) analytics:

Facilitates the analysis of data from diverse IoT devices with limited labeled data, improving predictive maintenance and anomaly detection.

Mathematical applications

Assists in tasks like curve-fitting, where models learn to approximate functions from a few data points, enhancing scientific computations.

Logical reasoning

Enables models to perform deductive reasoning tasks with minimal examples, contributing to advancements in artificial intelligence and problem-solving.

What are the different approaches to few-shot learning?

| Approach | Focus | Useful in |

|---|---|---|

| Metric-based | Learning a distance or similarity function to compare new examples with known ones. | Classification and verification tasks |

| Optimization-based | Training a model to quickly adapt to new tasks by optimizing its initialization and learning strategies. | Robotics, control, and few-shot learning tasks. |

| Generative | Generating synthetic data to augment the training set for better performance in few-shot scenarios. | Image and text generation or augmentation. |

There are several approaches to train models with very few labeled examples per class, categorized into three main types: metric-based, optimization-based, and generative approaches. Below are the primary methods within these categories:

1. Metric-based approaches

These methods learn a feature space where similar instances are close together, enabling the model to classify new examples based on distance metrics.

- Siamese networks: Use twin networks with shared weights to compare pairs of images using a similarity metric like cosine similarity or Euclidean distance.

- Matching networks: Utilize an attention mechanism to compare new samples against a support set in an embedding space.

- Prototypical networks: Compute a class prototype (centroid) for each class in the embedding space, classifying new samples based on proximity to these prototypes.

- Relation networks: Learn a non-linear similarity function between query and support set examples instead of relying on predefined distance metrics.

2. Optimization-based approaches

These methods aim to train models that can quickly adapt to new tasks with minimal updates.

- Model-Agnostic Meta-Learning (MAML): A meta-learning framework that optimizes for initial weights that allow fast adaptation to new tasks with few gradient updates.

- Reptile: A simpler alternative to MAML, which updates the model weights based on multiple tasks to improve generalization.

- Meta-Learner LSTM: Uses an LSTM-based optimizer to learn an efficient parameter-updating strategy for few-shot classification.

3. Generative approaches

These methods generate additional samples or useful features to enhance learning.

- Data augmentation: Uses techniques like GANs (e.g., Conditional GANs, Adversarial Autoencoders) or Variational Autoencoders (VAEs) to generate synthetic data for training.

- Memory-augmented networks: Utilize external memory modules (e.g., Neural Turing Machines) to store and retrieve past experiences to aid classification.

4. Hybrid approaches

- Meta-learning with prototypical networks: Combines meta-learning principles with prototypical networks to enhance adaptability.

- Graph Neural Networks (GNNs) for few-shot learning: Models relationships between support and query samples using graph structures.

How is it implemented in Python?

There are several open-source few-shot learning projects available. To implement few-shot learning projects, users can refer to the following libraries/repositories in Python:

- Pytorch – Torchmeta: A library for both few-shot classification and regression problems, which enables easy benchmarking on multiple problems and reproducibility.4

- FewRel: A large-scale few-shot relation extraction dataset, which contains more than one hundred relations and lots of annotated instances across different domains.5

- Meta Transfer Learning: This repository contains the TensorFlow and PyTorch implementations for Meta-Transfer Learning for Few-Shot Learning.6

- Few Shot: Repository that contains clean, readable and tested code to reproduce few-shot learning research.7

- Few-Shot Object Detection (FsDet): Contains the official few-shot object detection implementation of Simple Few-Shot Object Detection.8

- Prototypical Networks on the Omniglot Dataset: An implementation of “Prototypical Networks for Few-shot Learning” on a notebook in Pytorch.9

Future of few-shot learning

The future of few-shot learning, particularly in open-world settings, is expected to evolve in several key areas10 :

Handling varying concepts

Traditional models assume each instance represents a single concept, but in open-world scenarios, an instance may embody multiple concepts simultaneously. Approaches like multi-label learning are being explored to address this complexity.

Defending against adversarial attacks

As few-shot models become more widely used, they need to be resistant to adversarial attacks. Future research should focus on developing models that can withstand these disruptions, enhancing their reliability in real-world applications.

Cross-domain and incremental learning

Few-shot learning models are being extended to handle data from different domains and new, unseen classes over time. Techniques like incremental learning will allow models to continue learning as new classes emerge, without forgetting previously learned classes.

Data augmentation and multi-phase learning

Future approaches will also likely include better data augmentation strategies, improving generalization across domains, and multi-phase learning to deal with continuously evolving data.

Embedding few-shot learning into broader learning systems

Few-shot learning contributes to the development of more adaptive and efficient learning systems.

These learning systems combine different learning paradigms, including supervised, unsupervised, and reinforcement learning, to solve complex real-world problems.

By embedding few-shot learning modules, learning systems can maintain performance in data-scarce settings and extend functionality across domains.

External Links

- 1. What Is Few-Shot Learning? | IBM. IBM

- 2. [2102.05630] Voice Cloning: a Multi-Speaker Text-to-Speech Synthesis Approach based on Transfer Learning.

- 3. [2107.03748] Expressive Voice Conversion: A Joint Framework for Speaker Identity and Emotional Style Transfer.

- 4. Home - Torchmeta.

- 5. GitHub - thunlp/FewRel: A Large-Scale Few-Shot Relation Extraction Dataset.

- 6. GitHub - yaoyao-liu/meta-transfer-learning: TensorFlow and PyTorch implementation of "Meta-Transfer Learning for Few-Shot Learning" (CVPR2019).

- 7. GitHub - oscarknagg/few-shot: Repository for few-shot learning machine learning projects.

- 8. GitHub - ucbdrive/few-shot-object-detection: Implementations of few-shot object detection benchmarks.

- 9. GitHub - cnielly/prototypical-networks-omniglot: An implementation of "Prototypical Networks for Few-shot Learning" on a notebook in Pytorch.

- 10. [2408.09722] Towards Few-Shot Learning in the Open World: A Review and Beyond.

Comments

Your email address will not be published. All fields are required.