As the demand for voice recognition and virtual assistants grows,1 so does the need for audio data collection services.

You can work with an audio or speech data collection service to acquire relevant training data for your speech processing projects.

What does audio data collection mean for AI/ML?

Audio data collection/harvesting/sourcing means gathering audio data to train and improve an AI/ML model. Such data includes:

- Speech data (Spoken words by humans in different languages, accents, and dialects)

- Different sounds (Animal sounds, sounds of objects, etc.)

- Music data (music or song recordings)

- Other digitally recorded human sounds, such as coughs, sneezes, or snores

- Far-field speech (recorded at a distance from the microphone) and background noise

All this audio data can be used to train the following technologies:

- Virtual assistants in smartphones

- Smart home devices and appliances (Google Home, Siri, Alexa, etc.)

- Smart car systems

- Voice recognition systems for security

- Voice bots

- Other voice-enabled solutions

Example of ChatGPT

ChatGPT introduced voice input and output features that can accurately process user voice inputs and provide realistic voice outputs. This is possible through gathering human-generated audio data.2

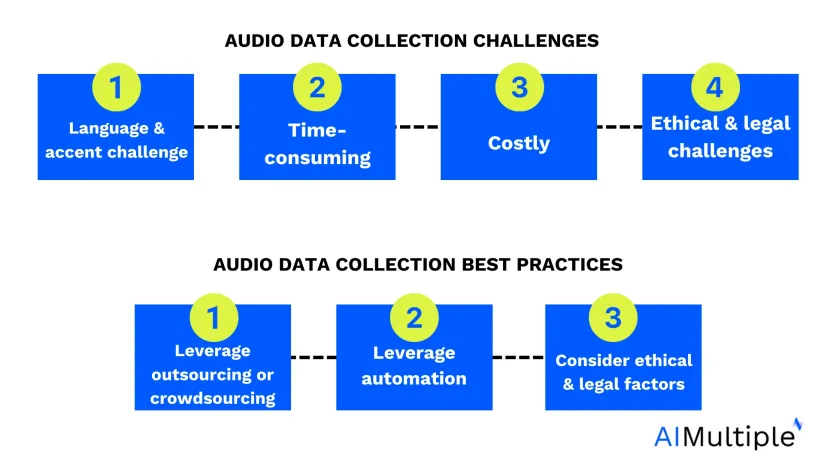

What are the challenges in collecting audio data?

1. Language and accent challenge

There is a rising global demand for smart home devices.3 To extensively deploy such devices, you need audio data in different languages and accents. Acquiring it takes time.

For instance, Amazon Echo is available in more languages and countries than Google Home because it has been on the market longer. Even though Google Home was launched 6 years ago, it is available in only around 11 countries. One reason is the difficulty of expanding datasets to cover different languages and accents.

2. Time-consuming

Recording audio data takes more time than capturing image data. This is because audio is recorded in real time and cannot be captured at a single point in time like an image.

Audio data collection can be even more time-consuming if the data must:

- cover different languages and accents

- cover different types of voices (female, male, high/low pitched, etc.)

- come in different resolutions and formats

- include jargon or variations in the voice (such as emotions)

For example, in a recent study on developing voice-based human identity recognition, collecting audio data from only 150 participants from a single region took over 2 months.
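Part of the extra effort with mixed resolutions and formats is simply finding out what each recording contains before it can be normalized. The following is a minimal sketch, assuming uncompressed WAV files and using only Python's standard `wave` module. The helper names `write_tone` and `describe_wav` are made up for this illustration, and the synthetic tone only stands in for a real recording.

```python
import math
import struct
import tempfile
import wave
from pathlib import Path


def write_tone(path, sample_rate=16000, seconds=1.0, freq_hz=440.0):
    """Write a mono 16-bit sine tone so the example has a file to inspect."""
    n_frames = int(sample_rate * seconds)
    samples = (
        int(0.5 * 32767 * math.sin(2 * math.pi * freq_hz * i / sample_rate))
        for i in range(n_frames)
    )
    with wave.open(str(path), "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(b"".join(struct.pack("<h", s) for s in samples))


def describe_wav(path):
    """Return the basic format properties of an uncompressed WAV file."""
    with wave.open(str(path), "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "sample_rate_hz": rate,
            "bit_depth": wav.getsampwidth() * 8,
            "channels": wav.getnchannels(),
            "duration_s": frames / rate,
        }


# Create a stand-in recording in a temporary folder and inspect it.
with tempfile.TemporaryDirectory() as tmp:
    clip = Path(tmp) / "clip.wav"
    write_tone(clip)
    info = describe_wav(clip)

print(info)
```

Running a check like this over every incoming file makes it easier to spot clips that need resampling or conversion before they join the dataset. Compressed formats such as MP3 would need a third-party decoder, which this sketch does not cover.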

3. Costly

Depending on the project’s scope, in-house audio data collection can be expensive and labor-intensive. If off-the-shelf datasets are insufficient for your project, audio data collection can significantly increase the project budget.

Studies show a positive correlation between the size of the training data and the accuracy of the model being trained. However, the larger the dataset, the higher the cost of collection.

Some factors that can impact the cost of audio data collection include:

- Recruitment of contributors and collectors

- Voice recording and storage equipment
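Storage needs can be estimated before any recording starts, because the size of uncompressed PCM audio follows directly from its format. The sketch below is only an illustration: the function name `pcm_storage_bytes` and the 100-hour figure are assumptions, and compressed formats would take less space.

```python
def pcm_storage_bytes(hours, sample_rate_hz=16000, bit_depth=16, channels=1):
    """Size in bytes of uncompressed PCM audio of the given length and format."""
    bytes_per_second = sample_rate_hz * (bit_depth // 8) * channels
    return int(hours * 3600 * bytes_per_second)


# Illustrative comparison for 100 hours of audio (hypothetical project size).
speech_quality = pcm_storage_bytes(100)  # 16 kHz, 16-bit, mono
studio_quality = pcm_storage_bytes(100, sample_rate_hz=48000, bit_depth=24, channels=2)

print(f"{speech_quality / 1e9:.2f} GB")  # 11.52 GB
print(f"{studio_quality / 1e9:.2f} GB")  # 103.68 GB
```

The same number of hours takes nine times the space at the higher resolution, so settling on a target format early helps keep storage costs predictable.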

4. Ethical and legal challenges

Another challenge in gathering audio data, specifically speech data, is people’s unwillingness to share it. Because voice is a type of biometric data, many people hesitate to share recordings of it over privacy and security concerns.

What are some best practices for audio data collection?

To overcome the challenges described above, consider the following best practices:

1. Leverage outsourcing or crowdsourcing

Audio data collection processes can be outsourced or crowdsourced depending on the size and scope of the project. Outsourcing can be the way to go if the dataset needs to be simple and small/medium. On the other hand, large and diverse datasets can be collected through crowdsourcing.

For instance, data collection service providers working with a crowdsourcing model will use microtasks to reduce the cost of collecting data and make it diverse.
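One way to picture a microtask is as a small batch of prompts that a single contributor reads aloud in a few minutes. The sketch below shows that batching step only. The function `make_microtasks`, the task dictionary layout, and the batch size of five are assumptions for illustration, not how any particular provider works.

```python
from itertools import islice


def make_microtasks(prompts, prompts_per_task=5):
    """Split a list of prompts to be read aloud into small, fixed-size tasks."""
    if prompts_per_task < 1:
        raise ValueError("prompts_per_task must be at least 1")
    remaining = iter(prompts)
    tasks = []
    while True:
        batch = list(islice(remaining, prompts_per_task))
        if not batch:
            break
        tasks.append({"task_id": len(tasks) + 1, "prompts": batch})
    return tasks


# Twelve hypothetical prompts become three tasks of 5, 5, and 2 prompts.
prompts = [f"prompt {i}" for i in range(12)]
tasks = make_microtasks(prompts)
print([len(task["prompts"]) for task in tasks])  # [5, 5, 2]
```

Because each task is small and independent, it can be handed to a different contributor, which is what lets a crowdsourced dataset cover many voices and accents.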

Through these methods, the company can also transfer the burden of ethical and legal considerations to the third-party service provider.

2. Leverage automation

Another way to collect data is automation. You can program a bot to collect audio data from online sources. This can be done in-house and reduces the need to recruit large numbers of contributors. However, maintaining data quality can be challenging in automated collection, since the data is gathered en masse without scrutiny.
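A basic automated screen can restore some of that scrutiny by rejecting clips that are obviously unusable. The sketch below assumes each clip has already been decoded to mono 16-bit samples. The function name `screen_samples` and all three thresholds are illustrative assumptions that would need tuning on real data.

```python
import math


def screen_samples(samples, sample_rate, min_seconds=1.0,
                   silence_rms=200.0, max_clipped_ratio=0.01):
    """Return reasons to reject a mono clip of 16-bit samples (empty list = keep)."""
    if not samples:
        return ["empty clip"]
    reasons = []
    # Too short to contain a useful utterance.
    if len(samples) / sample_rate < min_seconds:
        reasons.append("too short")
    # Very low root-mean-square level suggests the clip is mostly silence.
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms < silence_rms:
        reasons.append("mostly silent")
    # Many samples at full scale suggest the recording was clipped.
    clipped = sum(1 for s in samples if abs(s) >= 32767)
    if clipped / len(samples) > max_clipped_ratio:
        reasons.append("clipped")
    return reasons


# A one-second synthetic tone passes; a silent clip of the same length does not.
tone = [int(10000 * math.sin(2 * math.pi * 440 * i / 16000)) for i in range(16000)]
print(screen_samples(tone, 16000))           # []
print(screen_samples([0] * 16000, 16000))    # ['mostly silent']
```

Checks like these only catch technical defects. Whether a clip contains the right language, speaker, or content still needs human review or a separate model.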

3. Consider ethical and legal factors

It is important to consider ethical and legal factors before collecting any type of data to avoid expensive lawsuits. As mentioned earlier, voice data is biometric data; therefore, data collectors must be transparent about what they collect and how it is used.

For instance, if a smart home device collects voice data from the user to train itself, it must inform the user and provide an option for the user to opt out.
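In a data pipeline, that requirement usually becomes a filter applied before any clip reaches a training set. The sketch below is a simplified illustration: the `Recording` fields and the `usable_for_training` function are hypothetical, and a real system would also need to handle deletion requests and the rules of each jurisdiction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recording:
    clip_id: str
    speaker_id: str
    consented: bool  # the speaker was informed and agreed to training use


def usable_for_training(recordings, opted_out_speakers):
    """Keep only clips from speakers who consented and have not opted out."""
    blocked = set(opted_out_speakers)
    return [
        r for r in recordings
        if r.consented and r.speaker_id not in blocked
    ]


# Hypothetical records: speaker "s2" never consented, speaker "s3" opted out later.
recordings = [
    Recording("c1", "s1", consented=True),
    Recording("c2", "s2", consented=False),
    Recording("c3", "s3", consented=True),
    Recording("c4", "s1", consented=True),
]
kept = usable_for_training(recordings, opted_out_speakers={"s3"})
print([r.clip_id for r in kept])  # ['c1', 'c4']
```

Tracking opt-outs by speaker rather than by clip means a single withdrawal removes all of that person's recordings at once.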

You can also check our data-driven list of data collection/harvesting companies to find the option that best suits your project needs.

Use cases for audio data collection with real-life examples

1. Healthcare: Early Disease Detection

- Collecting cough, sneeze, and breathing sounds to train an AI that detects respiratory diseases (e.g., asthma).

- With tools like Hyfe AI’s cough-tracking app and Sonde Health’s vocal biomarkers.

2. Automotive: Noise-Robust Voice Assistants

- Recording voices in noisy environments (e.g., highways, rain) to improve in-car systems like Mercedes’ MBUX.

- With tools like Brüel & Kjær’s acoustic testing kits and Audio Analytic’s edge noise filters.

3. Customer Service: Emotion-Aware Voice Bots

- Capturing vocal tones (anger, frustration) to train bots like Salesforce Einstein to escalate calls.

- With tools like Beyond Verbal’s emotion analytics.

4. Entertainment: AI-Generated Music

- Licensing music catalogs to train music-generation models like Jukedeck that produce royalty-free tracks without copyright infringement.

5. Agriculture: Pest Monitoring

- Using bioacoustic sensors to detect insect infestations via crop sounds.

- With tools like FarmSense’s acoustic traps and Google’s Bioacoustic Monitoring API.

6. Retail

- Training models to understand shopping intent via voice queries.

- With tools like SoundHound’s dynamic voice search and Amazon Lex’s intent recognition.

FAQs

What is the best source for accessing fresh human-generated audio data?

Crowdsourcing platforms are a cost-effective way to gather fresh human-generated audio data: they collect high-quality recordings from a diverse pool of native speakers in multiple languages. This is particularly useful for developing speech recognition systems, voice-enabled applications, and virtual assistants, because these models need speech data spanning many languages, dialects, and accents to recognize human speech accurately.

These platforms can also collect voice data in different scenarios, including varying background noise and sound effects, which makes automatic speech recognition (ASR) systems more robust. The resulting datasets support natural language processing, audio analysis, and applications ranging from smart home devices to speech-to-text and text-to-speech conversion.

Which audio data service is best for AI training?

Choose an audio data collection service that matches your specific data requirements. Verify that the service can provide the exact type of audio data you need, in the languages and dialects you need, especially if your project involves speech recognition or natural language processing for virtual assistants or voice-enabled applications.

Also check that the service supports your required audio data formats and fits your budget. A service that offers high-quality audio data along with audio transcription and speaker identification can significantly improve your machine learning models. Services that also provide audio data annotation offer a further advantage, improving data quality and leading to more accurate models.

By evaluating these aspects carefully, you can narrow down your choices to the audio data collection services best suited to your project.

External resources

- 1. Voice recognition market size worldwide from 2020 to 2029 (in billion U.S. dollars). Statista. Accessed: 13/May/2025.

- 2. ChatGPT can now see, hear, and speak | OpenAI.

- 3. OpenAthens / Sign in.
