Based on features and user experiences shared in review platforms, here are the top 6 open-source sensitive data discovery tools:

Administrative features

| Tool | Graphical dashboard | Search-based | Data lineage | Federated database system |

|---|---|---|---|---|

| DataHub | ✅ | ✅ | ✅ | ✅ |

| Apache – Atlas | ✅ | ✅ | ✅ | ❌ |

| Marquez | ✅ | ✅ | ✅ | Not shared. |

| OpenDLP | ❌ | ❌ | ❌ | ❌ |

| Piiano Vault – ReDiscovery | ❌ | Not shared. | ❌ | ❌ |

| Nightfall AI – Sensitive data scanner | ✅ | ✅ | ❌ | ❌ |

Feature descriptions:

- Graphical dashboard – allows to visualize your data findings.

- Search-based functionality – allows searching for data assets.

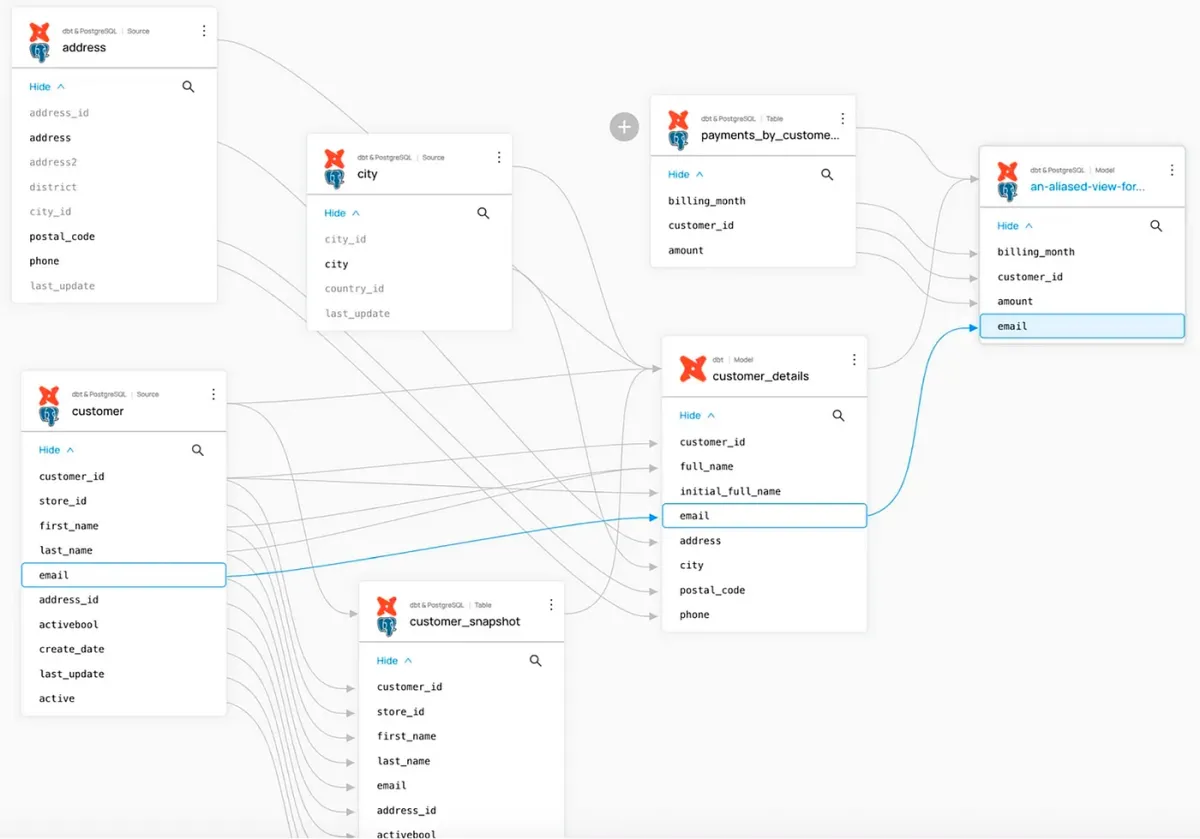

- Data lineage – allows users to visualize how data is generated, transformed, transmitted, and used across a system over time.

- Federated database system – maps multiple autonomous database systems into a single federated database.

These functionality (especially data lineage and search capabilities) allow businesses to:

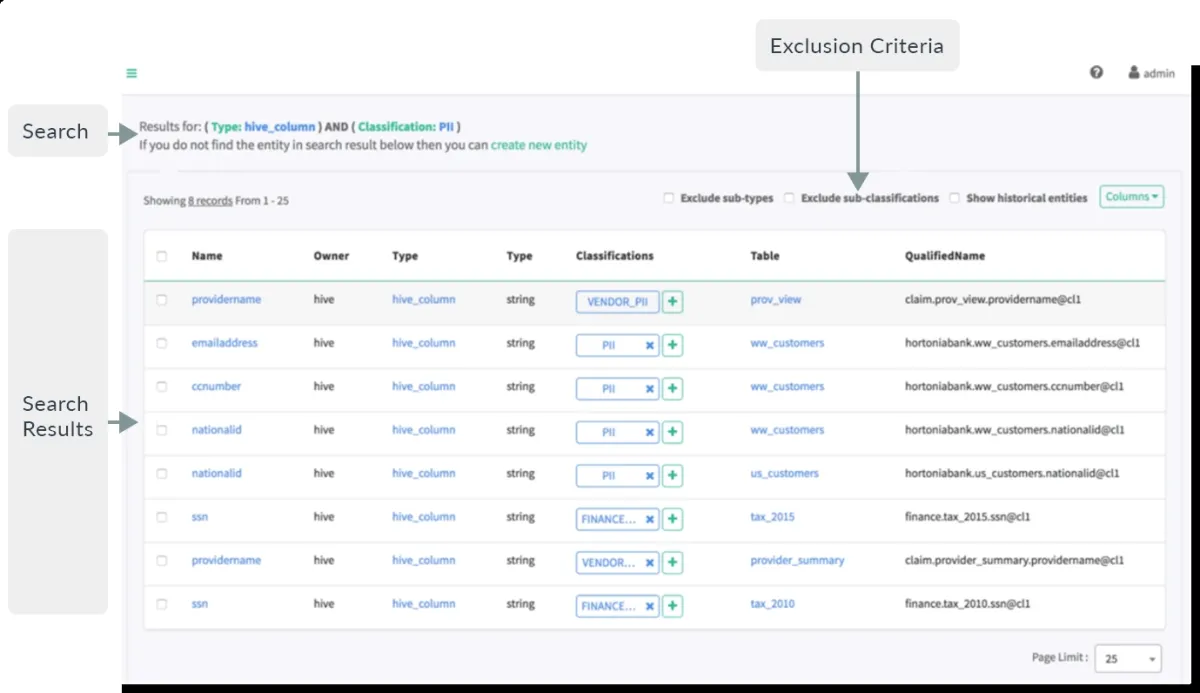

- Uncover the location of their personal information (PII), payment card industry (PCI) data, etc., stored across multiple databases, apps, and user endpoints.

- Comply with industry regulatory data protection and privacy standards such as General Data Protection Regulation (GDPR), and California Consumer Privacy Act (CCPA).

Data security features

| Tool | Data masking | Data loss prevention |

|---|---|---|

| DataHub | ✅ | ❌ |

| Apache – Atlas | ✅ | ❌ |

| Marquez | ❌ | ❌ |

| OpenDLP | ❌ | ✅ |

| Piiano Vault – ReDiscovery | ✅ | ❌ |

| Nightfall AI – Sensitive data scanner | ✅ | With integrations. |

Feature descriptions:

- Data masking – allows hiding data by modifying its original letters and numbers, so that it has no value to unauthorized intruders while remaining usable for authorized employees.

- Data loss prevention (DLP) – detects potential data breaches and prevents them by blocking sensitive data.

Categories and GitHub stars

| Tool | Category | GitHub stars | Source code |

|---|---|---|---|

| DataHub | Data governance & metadata | 9,900+ | datahub |

| Apache – Atlas | Data governance & metadata for Hadoop | 1,800+ | atlas |

| Marquez | Data governance & metadata | 1,800+ | marquez |

| OpenDLP | DLP | 90+ | opendlp |

| Piiano Vault – ReDiscovery | Sensitive data discovery | 30+ | ReDiscovery |

| Nightfall AI – Sensitive data scanner | Sensitive data discovery | 10 | sensitive-data-scanner |

Tool selection & sorting:

- Number of reviews: 10+ GitHub stars.

- Update release: At least one update was released last week as of November 2024.

- Sorting: Tools are sorted based on GitHubStar numbers in descending order.

DataHub

DataHub is an open-source unified sensitive data discovery, observability, and governance platform built by Acryl Data and LinkedIn. It is also commercially offered by Acryl Data as a cloud-hosted SaaS offering.

Key features:

- Detailed data lineage: Provides cross-platform and column-level lineage.

- Automated data quality checks: AI-driven anomaly detection for identifying data quality issues.

- Extensibility: Features rich APIs, and SDKs for customization.

- Enterprise-scale: The platform is used at an enterprise scale, with notable users like Netflix relying on the platform.

Integrations: 70+ Native integrations:

- Data warehousing and databases: Snowflake, BigQuery, Redshift, Hive, Athena, Postgres, MySQL, SQL Server, Trino

- Business intelligence (BI): Looker, Power BI, Tableau, and more.

- Identity and access management: Okta, LDAP.

- Data lakes and storage: S3, Delta Lake.

Apache – Atlas

Apache Atlas is a tool for managing metadata and governance in Hadoop ecosystems. It is a solid choice for building data discovery and lineage on top of cloud data assets such as SQL databases on AWS, Databricks, and Azure ADLS Gen2.

Key features

- Dynamic classification: Apache Atlas allows creating custom classifications such as PII (Personally Identifiable Information), EXPIRES_ON, DATA_QUALITY, and SENSITIVE.

- Metadata types: The platform provides pre-defined metadata types for Hadoop and non-Hadoop environments. This allows users to manage metadata for several data sources, such as HBase, Hive, Sqoop, Kafka, and Storm.

- SQL-like query language (DSL): The platform supports a domain-specific language (DSL) that provides SQL-like query functionality to search entities. This makes it accessible for users familiar with SQL.

- Integration with external tools: Apache Hive, Apache Spark, Kafka, and Presto, making it adaptable for big data environments.

Considerations

Setup complexity: Configuring Apache Atlas in a multi-cloud environment can be difficult, particularly for companies that require unique interfaces. Ensuring smooth connectivity across AWS, Azure, and Databricks could require additional effort, particularly in bridging the gaps between the platforms’ APIs.

Ecosystem fit

- Atlas is well-suited for large data systems such as Hadoop, Spark, and Hive; however, for more specific cloud-native solutions such as AWS Redshift or Azure Synapse, additional configuration may be required to record lineage efficiently.

- Native integrations with cloud platforms such as AWS and Azure (for example, AWS Glue for data cataloging) may offer smoother solutions with less overhead for complicated lineage tracking.

Marquez

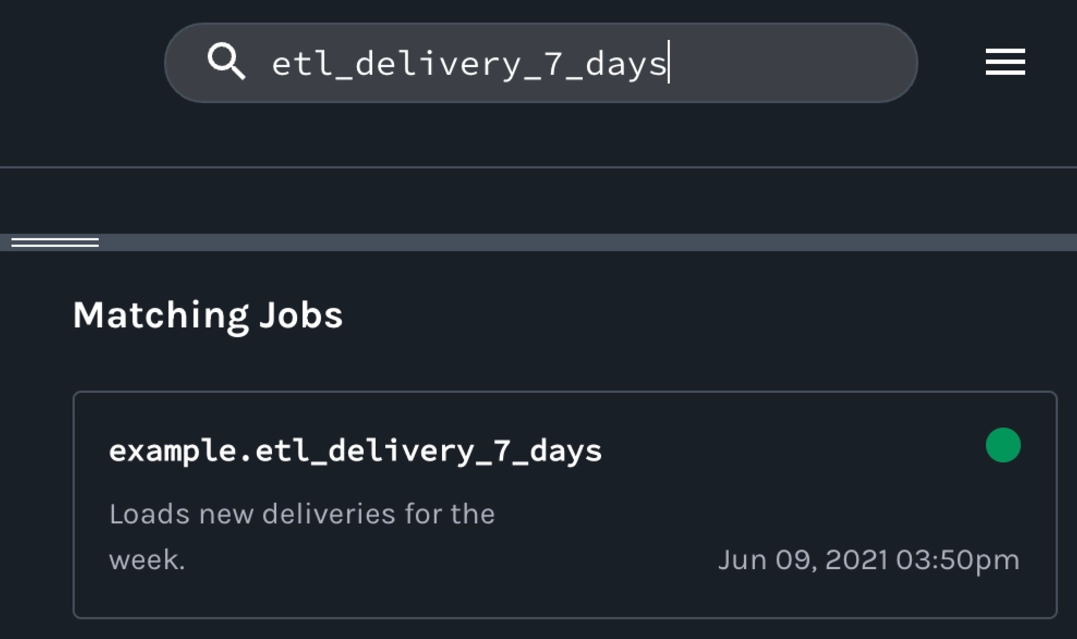

Marquez is an open-source data catalog that collects, aggregates, and visualizes metadata from a data ecosystem. Marquez simplifies the discovery of datasets and their associated metadata through a Web UI and API. It allows users to:

- Search datasets: Users can easily search for datasets, view their attributes, and understand their dependencies across the data ecosystem.

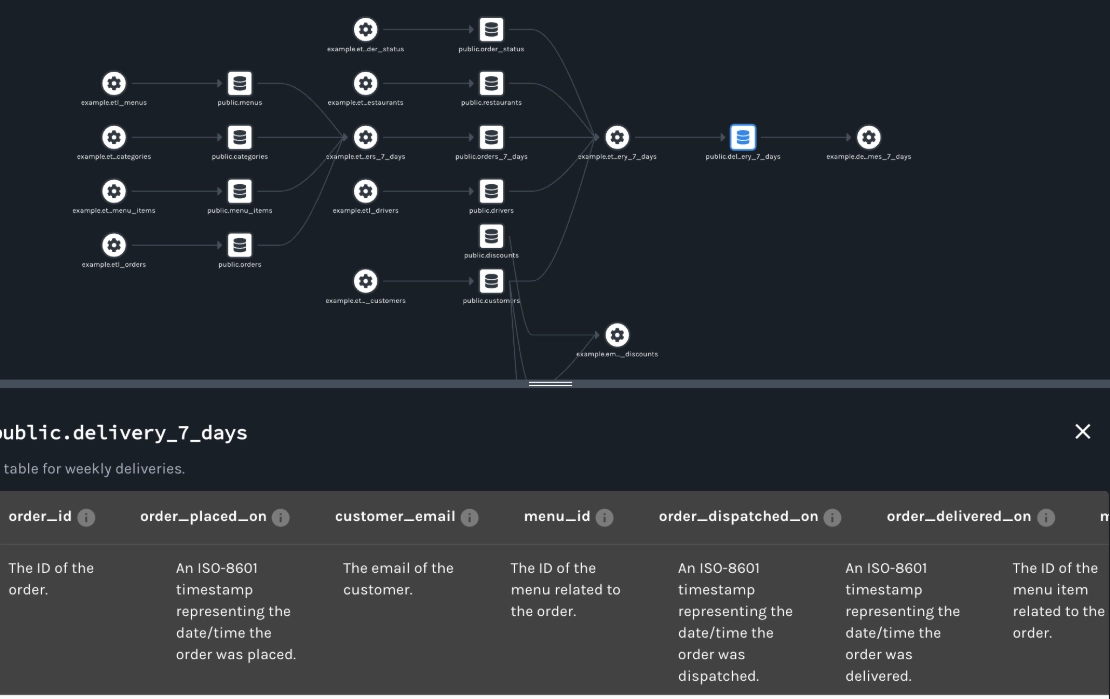

- Visualize lineage: The lineage graph in Marquez provides a clear, interactive view of how datasets are connected and transformed through workflows. This is crucial for understanding data pipelines, tracing errors, and ensuring data reliability.

- Centralized metadata repository: Marquez aggregates metadata from diverse sources, consolidating it into a single system for easy access and management.

Examples:

- Searching data: To access Marquez’s lineage metadata, navigate to the UI. Then, utilize the search box in the upper right corner of the website to look for the task etl_delivery_7_days.

- View input dataset metadata: Navigate to the output dataset public.delivery_7_days for etl_delivery_7_days. You should see the

- dataset name,

- schema,

- and description.

OpenDLP

OpenDLP is a free and open-source data loss prevention tool that is agent-based, centrally controlled, and widely distributed under a general public license.

In addition to performing data discovery on Windows operating systems, OpenDLP also supports performing agentless data discovery, without requiring the installation of additional software agents or components on your system across the following databases:

- Microsoft SQL Server

- MySQL.

Agentless file system and file share scans: OpenDLP 0.4 allows you to execute the following scans:

- Agentless Windows file system scan

- Agentless Windows share scan

- Agentless UNIX file system scan

Piiano Vault – ReDiscovery

Piiano Vault offers data protection for sensitive personal information. With automated compliance controls, it enables you to store sensitive personal data in your own cloud environment.

Piiano Vault can be installed within your system, alongside other databases used by the apps. It should be used to store the most sensitive personal data, such as credit cards and bank account numbers, names, emails, national IDs (e.g., SSNs), etc.

The primary benefits are:

- Data encryption.

- Searchability for data assets over encrypted data.

- Audit records for all data accesses.

- Granular access controls.

- Data masking and tokenization.

Nightfall

With Nightfall users can discover what lives at rest in your data silos. Nightfall scans directories, exports, and backups for sensitive data (such as PII and API keys) using Nightfall’s data loss prevention (DLP) APIs. directories. Nightfall uses machine learning to detect PII, credentials, and secrets.

The free tier:

- Scans the full commit history of any public or private repos

- Detects credentials

- Runs up to 100 scans per month

Distinct feature: Nightfall provides data security capabilities and can send alerts in Slack when new violations are detected and push results to a SIEM, reporting tool, or webhook.

Example: You can scan a backup of your Salesforce server to detect sensitive data. This service will:

(1) submit Salesforce backup data to Nightfall for file scanning.

(2) operate a local webhook server to obtain sensitive results from Nightfall.

(3) export sensitive discoveries to a CSV file.

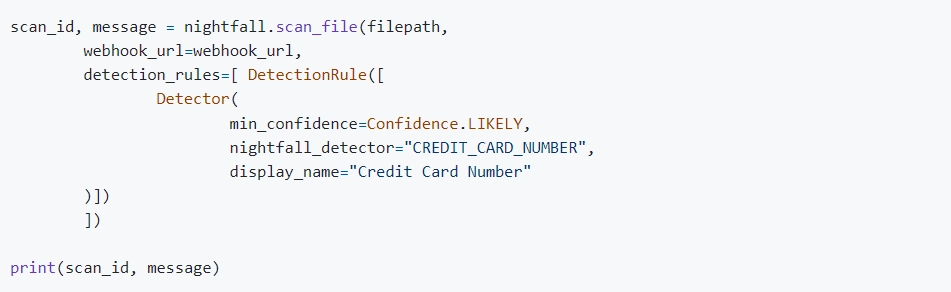

Here is an example of detecting credit card numbers by file scanning (1). In this example, the “scan_file” function and “Detection Rule” is used.

Once Nightfall executes “scan_file” function, the request will be received application (e.g. Salesforce) server at the /ingest webhook endpoint. Thus, in the above code, the webhook data is parsed, and then the URLs that will provide access to sensitive findings are requested.

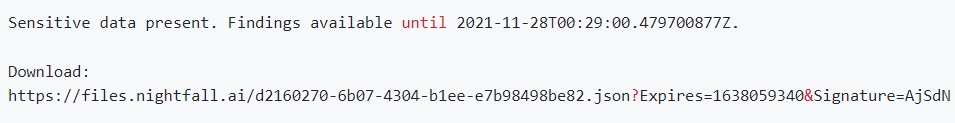

The above URL is provided by Nightfall. It is the temporary signed S3 URL to retrieve the sensitive findings that Nightfall identified.

What is sensitive data discovery software?

Gartner defines sensitive data discovery solutions as “discovering, analyzing, and classifying structured and unstructured data to generate actionable results for security enforcement and data life cycle management.”1

This software provides guidelines and methods for data management and security projects by combining metadata, content, contextual information, and machine-learning-based data models.

Sensitive data discovery software is similar to a variety of products, including

- data loss prevention (DLP) software

- data-centric security software

- data security posture management (DSPM) software

- database security software

In general, these tools include a built-in feature for discovering sensitive data.

Note that, sensitive data discovery differs from data discovery software, a subset of business intelligence software that allows businesses to dive into their data to identify outliers and analyze data trends visually.

Comments

Your email address will not be published. All fields are required.