Automotive firms face rising safety, cost, and efficiency pressures. Computer vision helps address these challenges by enabling automation, quality control, and accident prevention.

Explore the top five automotive use cases for computer vision that business leaders can use to stay competitive.

Advanced driver assistance systems

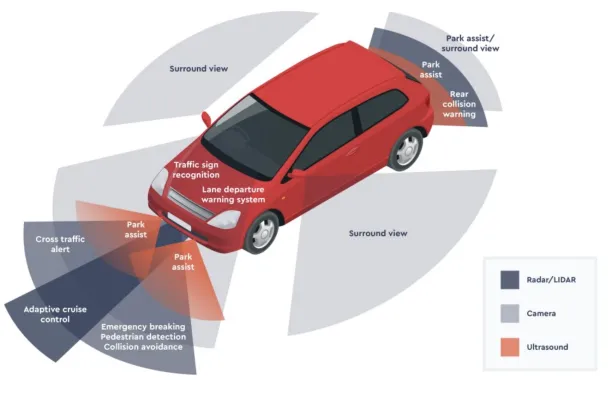

Computer vision plays a central role in the development of advanced driver assistance systems (ADAS). These systems process visual data from cameras and other sensors to enhance driver safety and support decision-making in real-time driving scenarios.

- Lane departure warning: The system detects unintended lane changes by analyzing camera images of road markings. By alerting the driver, it helps prevent accidents caused by inattention or drowsiness.

- Traffic sign recognition: Computer vision algorithms and machine learning techniques identify and interpret road signs, such as speed limits, which helps drivers comply with traffic laws. These systems often rely on convolutional neural networks trained on large volumes of visual data to achieve high accuracy.

- Adaptive cruise control: Leveraging vision systems combined with sensor fusion, this feature automatically adjusts the vehicle’s speed to maintain a safe distance from the car ahead, improving road safety.

- Blind spot detection: Computer vision systems, combined with other sensors, continuously monitor blind spots and warn the driver about nearby objects, extending the capabilities of ADAS.

These automotive applications help reduce human errors and improve safety in various driving scenarios.

Figure 1: An example image of how ADAS and sensors work.1
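The lane departure warning described above can be sketched as a simple geometric check. This is a minimal illustration, not a production ADAS algorithm: it assumes an upstream lane-detection model already reports the lateral distance from the vehicle centerline to each lane marking. The `LaneObservation` type, the vehicle width, and the safety margin are hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LaneObservation:
    """Lateral distances (meters) from the vehicle centerline to each lane marking.

    Hypothetical output of an upstream lane-detection model.
    """
    left_m: float
    right_m: float


def lane_departure_warning(obs: LaneObservation,
                           vehicle_width_m: float = 1.8,
                           margin_m: float = 0.2,
                           turn_signal_on: bool = False) -> Optional[str]:
    """Return 'left', 'right', or None when no warning is needed."""
    if turn_signal_on:
        # An indicated lane change is treated as intentional.
        return None
    half_width = vehicle_width_m / 2
    # Gap between each side of the vehicle and the nearest marking.
    left_gap = obs.left_m - half_width
    right_gap = obs.right_m - half_width
    if left_gap < margin_m and left_gap <= right_gap:
        return "left"
    if right_gap < margin_m:
        return "right"
    return None


print(lane_departure_warning(LaneObservation(left_m=1.0, right_m=2.5)))
```

Suppressing the warning when the turn signal is on reflects the "unintended lane change" wording above: only drift that the driver has not indicated triggers an alert.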

Real-life example: Peugeot Driving Aids

Peugeot vehicles integrate advanced driver assistance technologies designed to improve safety, comfort, and vehicle control. These systems support handling in various driving conditions and contribute to risk reduction.2

Key features are:

- Driver assistance: Adaptive cruise control, lane-keeping assist, and traffic sign recognition support speed management and lane positioning.

- Parking aids: Automated parking and 360° vision facilitate precise parking in confined spaces.

- Safety systems: Automatic emergency braking, blind spot monitoring, and night vision enhance hazard detection and occupant protection.

- Connectivity: Real-time data and alerts provide situational awareness.

Autonomous driving

In the domain of autonomous vehicles, computer vision, combined with machine learning, deep learning, and sensor fusion, enables vehicles to perceive and interpret their surroundings.

- Object detection and tracking: The system identifies and monitors vehicles, pedestrians, and obstacles, even at high speed, using camera images processed through computer vision models and convolutional neural networks.

- 3D mapping and localization: Vehicles generate detailed 3D maps of their environment by fusing visual data and data from other sensors, ensuring precise positioning and navigation.

- Scene understanding and path planning: Computer vision systems interpret complex traffic environments, enabling autonomous vehicles to make decisions about lane changes, stops, and turns without human input.

These capabilities are essential to fully autonomous driving, and they support road safety and more efficient traffic flow.
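To make the object tracking step more concrete, the sketch below matches bounding boxes from a new camera frame to existing tracks using intersection over union (IoU). It is a deliberately simplified greedy matcher, not the method of any particular vendor. The box format, the `associate` helper, and the 0.3 threshold are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks: Dict[int, Box], detections: List[Box],
              min_iou: float = 0.3) -> Dict[int, int]:
    """Greedily map track IDs to detection indices, highest IoU first."""
    pairs = sorted(
        ((iou(box, det), tid, di)
         for tid, box in tracks.items()
         for di, det in enumerate(detections)),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used = set()
    for score, tid, di in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if tid in matches or di in used:
            continue
        matches[tid] = di
        used.add(di)
    return matches


tracks = {1: (0, 0, 10, 10), 2: (50, 50, 60, 60)}
detections = [(51, 51, 61, 61), (1, 0, 11, 10)]
print(associate(tracks, detections))  # {1: 1, 2: 0}
```

Detections left unmatched would typically start new tracks, and tracks left unmatched for several frames would be dropped. Production trackers usually add motion prediction on top of this kind of overlap test.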

Real-life example: Tesla’s first autonomous vehicle delivery

On June 28, 2025, Tesla completed its first fully autonomous vehicle delivery. A Model Y SUV traveled from Tesla’s Austin Gigafactory to a customer’s home without human input or remote assistance, using Tesla’s standard Full Self-Driving software. The 30-minute trip reached speeds up to 72 mph.

This milestone follows the launch of Tesla’s Robotaxi service in Austin, which remains in a limited trial with safety monitors.3

Driver monitoring systems

Driver monitoring systems (DMS) apply computer vision to observe and assess the driver’s condition, helping to prevent accidents caused by inattention or fatigue.

- Drowsiness detection: By analyzing eye movement and facial features through vision systems, the system identifies signs of fatigue and issues timely alerts.

- Attention monitoring: The system ensures the driver remains focused on the road and warns of distractions, utilizing visual AI technologies.

- Facial recognition: Beyond safety, this capability enhances personalization (e.g., seat adjustments, infotainment preferences) and security, restricting vehicle access to authorized users.

These features address one of the key challenges in the automotive industry: mitigating risks associated with human errors.
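One common way to turn per-frame eye observations into a drowsiness signal is a PERCLOS-style measure: the share of recent frames in which the eyes are closed. The sketch below assumes a hypothetical upstream model that outputs an eye-openness score between 0 and 1 for each frame. The window length and both thresholds are illustrative assumptions, not calibrated values.

```python
from collections import deque


class DrowsinessMonitor:
    """Tracks the fraction of recent frames in which the eyes are closed."""

    def __init__(self, window_frames: int = 900,
                 closed_below: float = 0.2,
                 alert_ratio: float = 0.3):
        self.closed_below = closed_below  # openness below this counts as closed
        self.alert_ratio = alert_ratio    # closed-frame share that triggers an alert
        self.history = deque(maxlen=window_frames)

    def update(self, eye_openness: float) -> bool:
        """Record one frame and return True if an alert should be issued."""
        self.history.append(eye_openness < self.closed_below)
        if len(self.history) < self.history.maxlen:
            return False  # wait until the window is full
        closed_ratio = sum(self.history) / len(self.history)
        return closed_ratio >= self.alert_ratio


monitor = DrowsinessMonitor(window_frames=10)
scores = [0.8] * 10 + [0.05] * 4  # alert driver, then eyes mostly closed
alerts = [monitor.update(s) for s in scores]
print(alerts[-1])  # True
```

Averaging over a window rather than reacting to single frames keeps ordinary blinks from triggering alerts.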

In-cabin experience enhancement

Computer vision systems contribute to a safer and more comfortable in-cabin environment, adapting automated systems to the needs of passengers.

- Gesture recognition: Allows occupants to interact with infotainment and climate controls without physical contact, improving usability.

- Occupant detection: Detects passengers and their positions, enabling automated systems to adjust airbags, seat settings, and issue alerts for unfastened seatbelts.

- Child presence detection: Alerts caregivers if a child is left unattended, addressing critical safety concerns.

Real-life example: Alif Semiconductor

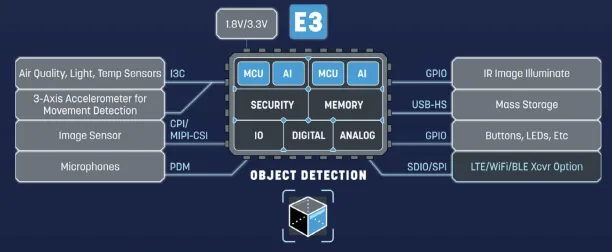

Utilizing advanced microcontrollers (MCUs) with embedded machine learning (ML) capabilities, Alif Semiconductor’s systems perform real-time monitoring and response functions.

Key systems are:

- Driver monitoring system (DMS): Employs AI to track facial expressions and eye movements, detecting signs of distraction or drowsiness. Alerts are issued when necessary, and the system can personalize settings by recognizing individual drivers.

- Cabin monitoring system (CMS): Monitors passenger behavior and adjusts in-vehicle settings such as air temperature and driving modes. It also ensures proper seating positions, which are crucial for activating autonomous driving features, and can modulate airbag deployment to minimize injury risks.

- Gesture recognition and life presence detection: Combines machine learning algorithms with Time-of-Flight (3D) cameras to enable intuitive vehicle interactions through gestures. Additionally, it detects the presence of occupants, including children and pets, to prevent incidents such as heatstroke.

Figure 2: Architecture diagram of the Alif Semiconductor Ensemble E3 Series for AI-based security cameras.4

Automotive manufacturing and quality control

In the automotive manufacturing sector, computer vision systems ensure high product quality and process efficiency through automated visual inspection.

- Defect detection: Computer vision algorithms identify flaws in car parts, ranging from minor surface scratches to structural issues, thereby improving accuracy and reducing human errors in quality control.

- Assembly verification: Vision-guided automated systems check that all components are correctly positioned and secured during the manufacturing process, supporting efficiency and reducing rework.

- Surface inspection: Camera images are analyzed for paint defects, dents, or inconsistencies, helping ensure that vehicles meet industry standards before delivery.

- Predictive maintenance: Systems detect early signs of wear, such as brake pad thinning or tire degradation, allowing timely repairs.
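As a simplified illustration of automated visual inspection, the sketch below compares a grayscale image of a part against a known-good reference image and flags the part when too many pixels differ. This golden-reference comparison is a classical technique used here for brevity; it stands in for, and is much simpler than, the learned defect-detection models described above. The tolerance and pixel-count limits are assumptions.

```python
from typing import List

Image = List[List[int]]  # grayscale image, pixel values 0-255


def defect_mask(sample: Image, reference: Image, tolerance: int = 30) -> List[List[bool]]:
    """Mark pixels whose brightness differs from the reference by more than tolerance."""
    return [[abs(s - r) > tolerance for s, r in zip(sample_row, ref_row)]
            for sample_row, ref_row in zip(sample, reference)]


def has_defect(sample: Image, reference: Image,
               tolerance: int = 30, max_defect_pixels: int = 2) -> bool:
    """Flag the part when more pixels deviate than the allowed count."""
    mask = defect_mask(sample, reference, tolerance)
    return sum(cell for row in mask for cell in row) > max_defect_pixels


reference = [[200] * 4 for _ in range(4)]
scratched = [row[:] for row in reference]
scratched[1][0] = scratched[1][1] = scratched[1][2] = 90  # dark streak across one row
print(has_defect(scratched, reference))  # True
```

Allowing a small number of deviating pixels gives some tolerance for sensor noise, at the cost of missing very small defects.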

Challenges of computer vision automotive systems

Data accuracy and reliability

A key concern in computer vision automotive systems is ensuring the accuracy and reliability of visual data processing in diverse driving scenarios. Environmental factors such as low light, glare, fog, rain, or snow can degrade the performance of computer vision models. Misinterpretation of road signs, lane markings, or objects may lead to errors in advanced driver assistance systems (ADAS), increasing the risk of unsafe decisions.

Additionally, camera images may be obstructed by dirt, ice, or physical damage, which can reduce the accuracy of computer vision algorithms. Ensuring optimal performance under all conditions remains a continuous technical challenge in the automotive sector.

Computational demands and efficiency

Processing video data and high-resolution images from multiple cameras and other sensors in real time requires significant computational resources.

Computer vision systems often rely on deep learning models, such as convolutional neural networks, which may need specialized hardware accelerators or cloud computing support.

Balancing high accuracy with real-time responsiveness, while keeping costs and power consumption manageable, is a significant concern, particularly when trying to reduce dependence on expensive sensors.
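A rough back-of-the-envelope calculation shows why these demands add up quickly. The sketch below uses hypothetical numbers (eight 1920x1080 cameras at 30 frames per second, handled in turn by a single processor) to estimate the pixel throughput and the time available per frame. Real vehicle configurations vary.

```python
def pixel_throughput(num_cameras: int, width: int, height: int, fps: int) -> int:
    """Pixels per second that the perception stack must ingest."""
    return num_cameras * width * height * fps


def frame_budget_ms(fps: float, num_cameras: int = 1) -> float:
    """Milliseconds available per camera frame if one processor serves all cameras in turn."""
    return 1000.0 / (fps * num_cameras)


# Hypothetical setup: eight Full HD cameras at 30 fps.
print(pixel_throughput(8, 1920, 1080, 30))   # 497664000 pixels per second
print(round(frame_budget_ms(30, 8), 2))      # 4.17 ms per frame
```

Under these assumptions, every stage of the pipeline, from decoding to neural network inference, has to fit in roughly four milliseconds per frame unless the work is spread across parallel hardware. This is one reason dedicated accelerators are common.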

Integration and sensor fusion challenges

Computer vision automotive solutions often work in conjunction with radar, LiDAR, and ultrasonic sensors in sensor fusion architectures.

Achieving reliable integration between these systems is a complex task. Mismatches or latency in combining visual data and other sensor inputs can lead to inconsistent outputs, reducing the efficiency of automated systems and undermining driver safety.
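One small piece of the integration problem, aligning camera frames with measurements from another sensor by timestamp, can be sketched as follows. The example assumes both sensors stamp their data against a shared clock and that radar timestamps arrive sorted. The `nearest_measurement` helper and the 50 ms skew limit are hypothetical choices for illustration.

```python
from bisect import bisect_left
from typing import List, Optional


def nearest_measurement(camera_ts: float, radar_ts: List[float],
                        max_skew_s: float = 0.05) -> Optional[int]:
    """Index of the radar sample closest in time to a camera frame.

    Returns None when no sample lies within max_skew_s seconds, so stale
    data is never fused with a fresh image.
    """
    if not radar_ts:
        return None
    pos = bisect_left(radar_ts, camera_ts)
    # Only the neighbors on either side of the insertion point can be closest.
    candidates = [i for i in (pos - 1, pos) if 0 <= i < len(radar_ts)]
    best = min(candidates, key=lambda i: abs(radar_ts[i] - camera_ts))
    if abs(radar_ts[best] - camera_ts) > max_skew_s:
        return None
    return best


radar_timestamps = [0.00, 0.05, 0.10, 0.15]
print(nearest_measurement(0.06, radar_timestamps))  # 1
print(nearest_measurement(0.40, radar_timestamps))  # None
```

Rejecting pairs that are too far apart in time is one simple guard against the latency mismatches mentioned above. A fuller system might also interpolate between samples or compensate for vehicle motion during the gap.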

Ethical and legal issues

The deployment of computer vision automotive technologies, particularly in autonomous vehicles, presents significant ethical and legal challenges. For example:

- How should a trained model prioritize outcomes in unavoidable accident scenarios?

- Who is liable when a computer vision system makes an incorrect decision that results in harm?

These questions are crucial as automotive industry players strive to establish trust in automated systems.
