Bot management distinguishes real users from good and bad bots, safeguarding websites, APIs, and digital assets from automated threats. We benchmarked the bot management capabilities offered by leading web security platforms.

Bot mitigation benchmark results

We applied our bot mitigation benchmark methodology to measure how effectively each platform mitigated our simulated bot traffic, expressed as the percentage of malicious requests blocked.

The benchmark used the following versions or tiers of each platform:

- Cloudflare: Cloudflare’s free tier.

- Imperva: Trial version of Imperva App Protection.

- Barracuda: Trial version of Barracuda Application Protection.

Bot management providers

Imperva App Protection

Imperva is a cybersecurity company that provides bot management as part of its broader application security offerings. Their solution aims to identify and mitigate a wide range of automated threats.1

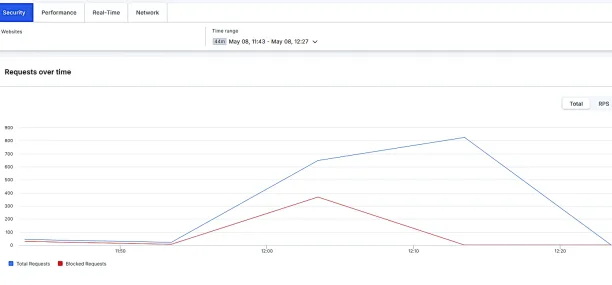

The “Requests over time” graph displays total incoming traffic (blue line) and the volume of “Blocked Requests” (red line). During the peak of our test, a sharp increase in total requests is evident, and the red line representing blocked requests rises almost identically. This indicates that Imperva, leveraging its WAF and Advanced Bot Protection capabilities, successfully identified and neutralized most malicious bot traffic directed at the site.

Barracuda Application Protection

Barracuda Networks offers security solutions, including bot protection, often integrated within its Web Application Firewall (WAF) products. Its focus is on protecting against various automated attacks.2

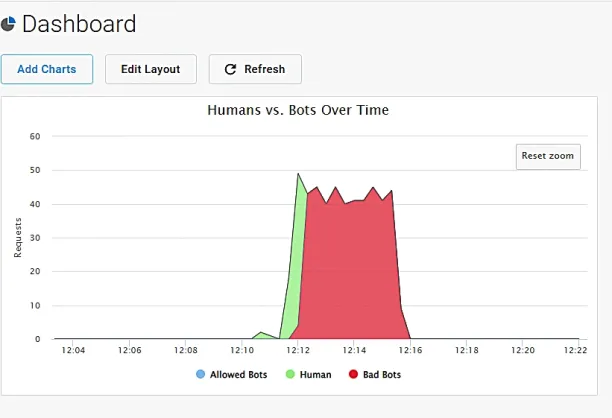

The “Humans vs. Bots Over Time” graph shows a significant spike in activity classified as “Bad Bots” (red area) while our test was running. The “Total Humans vs. Bots” pie chart for the same period shows that the overwhelming majority of traffic was identified as malicious bot activity.

Cloudflare

Cloudflare is a widely known provider of CDN and security services. Its bot management capabilities are integrated into its global network, offering detection and mitigation features.

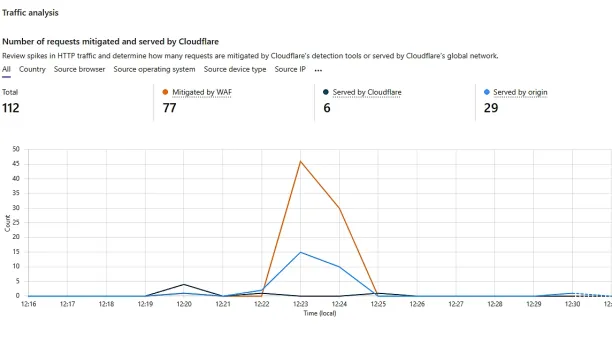

The traffic analysis graph summarizes how Cloudflare handled the simulated requests over the test period. It shows a spike in traffic, during which the majority of requests were mitigated by the WAF (which includes bot management rules on the free tier).3

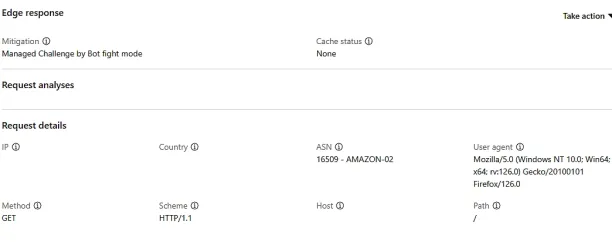

The Mitigation status shows that Cloudflare’s Bot Fight Mode, a feature available on the free plan, detected the request and triggered a Managed Challenge for verification.

Common types of malicious bot attacks

Bots can be used for various malicious activities. Common types of bot attacks include:

Credential stuffing: Using stolen usernames and passwords to gain unauthorized access to user accounts.

Unethical web scraping: Extracting large amounts of data from websites without permission, such as pricing information or content.

Denial of service (DoS/DDoS): Overwhelming a website or application with traffic to make it unavailable to legitimate users.

Account takeover (ATO): Gaining unauthorized access to and control over a user’s online account.

Carding/payment fraud: Using bots to test stolen credit card details or make fraudulent purchases.

Inventory hoarding: Bots buying up limited-stock items (e.g., tickets, sneakers) faster than humans can for resale at higher prices.

Ad fraud: Generating fake clicks or impressions on online advertisements to defraud advertisers.

Spamming: Posting unsolicited content and comments or creating fake accounts.

Detection and mitigation techniques explained

Bot management solutions employ a multi-layered approach to detect and mitigate bot traffic:

Detection:

- Signature-based detection: Identifying known bad bots based on IP addresses, User-Agent strings, or malicious signatures.

- IP reputation: Checking the source IP against databases of known malicious IPs, proxies, or data centers commonly used by bots.

- Browser Fingerprinting: Collecting detailed information about the client’s device and browser environment to create a unique fingerprint. Bots often have inconsistent or tell-tale fingerprints.

- Behavioral analysis: Analyzing patterns of interaction to distinguish human users from automated scripts.

- Challenges: Presenting challenges that are easy for humans but difficult for bots to solve, such as CAPTCHAs, JavaScript challenges, or biometric tests.
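To make the layering concrete, the sketch below combines three of the detection signals listed above (a User-Agent signature match, an IP reputation lookup, and the outcome of a JavaScript challenge) into a single score. It is a minimal illustration, not how any of the benchmarked vendors implement detection. The `Request` type, the `bot_score` function, the signature list, the weights, and the "bad" networks (reserved documentation ranges) are all assumptions made for the example.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical signature data, for illustration only. Real products rely on
# much larger, continuously updated feeds.
BAD_UA_SUBSTRINGS = ("sqlmap", "nikto", "python-requests", "curl")

# Reserved documentation ranges standing in for an IP reputation feed.
BAD_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


@dataclass
class Request:
    ip: str
    user_agent: str
    ran_javascript: bool  # True if the client completed a JavaScript check


def bot_score(req: Request) -> int:
    """Return a 0-100 score; higher means more likely automated."""
    score = 0

    # Signature-based detection: empty or known-tool User-Agent strings.
    ua = req.user_agent.lower()
    if not ua or any(sig in ua for sig in BAD_UA_SUBSTRINGS):
        score += 40

    # IP reputation: source address falls inside a listed network.
    addr = ipaddress.ip_address(req.ip)
    if any(addr in net for net in BAD_NETWORKS):
        score += 40

    # Challenge outcome: the client never executed the JavaScript check.
    if not req.ran_javascript:
        score += 30

    return min(score, 100)
```

A caller would compare the score against thresholds of its own choosing, for example allowing low scores, challenging mid-range scores, and blocking high ones. The weights here are arbitrary and would need tuning against real traffic.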

Mitigation:

- Blocking: Denying access to traffic identified as malicious.

- Rate limiting: Restricting the number of requests a client can make within a certain time period.

- Serving alternate content: Providing bots with different, less resource-intensive content or cached data.

- Tarpitting: Intentionally slowing down connections from suspected bots.

- Challenging: Requiring the suspected bot to pass a CAPTCHA or other verification step.

- Reporting and analytics: Providing insights into bot traffic, attack types, and the effectiveness of mitigation strategies. This data helps refine detection rules and understand the threat landscape.
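As an illustration of the rate limiting technique listed above, here is a minimal sliding-window limiter. It is a sketch under stated assumptions rather than any vendor's implementation: the class name `SlidingWindowLimiter`, its parameters, and the choice to pass the current time in explicitly (which keeps the example deterministic) are all ours.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Per-client queue of timestamps for requests that were allowed.
        self._hits = defaultdict(deque)

    def allow(self, client_id: str, now: float) -> bool:
        hits = self._hits[client_id]

        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()

        # Reject once the client has used its budget for this window.
        if len(hits) >= self.max_requests:
            return False

        hits.append(now)
        return True
```

In practice `client_id` might be an IP address, a session token, or a device fingerprint, and `now` would come from a monotonic clock. A rejected request could then be blocked outright, challenged, or tarpitted, depending on policy.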

The role of behavioral analysis

Behavioral analysis is a core technique in modern bot detection. Instead of relying solely on static signatures (like IP addresses or User-Agent strings), it examines how a user or bot interacts with a website or application over time. Key aspects include:

Mouse movements and keystrokes: Analyzing mouse movement patterns, clicks, and typing speed.

Navigation patterns: How a user navigates through a site, the order of pages visited, and the time spent on each page.

Request frequency and timing: The rate of requests and intervals between them.

Interaction with dynamic elements: Whether the client interacts with JavaScript-rendered content or AJAX calls.
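One of these signals, request timing, can be sketched in a few lines. The example below computes the coefficient of variation (standard deviation divided by mean) of the gaps between a client's requests; very regular gaps suggest a script. The function names and the `cv_threshold` default of 0.1 are assumptions chosen for illustration, not values taken from any product.

```python
from statistics import mean, pstdev


def interval_cv(timestamps):
    """Coefficient of variation of the gaps between requests, or None if
    there are too few requests to judge."""
    if len(timestamps) < 3:
        return None
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    avg = mean(gaps)
    if avg == 0:
        return 0.0  # all requests at the same instant: perfectly regular
    return pstdev(gaps) / avg


def looks_scripted(timestamps, cv_threshold=0.1):
    """Flag clients whose request spacing is suspiciously uniform."""
    cv = interval_cv(timestamps)
    return cv is not None and cv < cv_threshold
```

A bot that adds uniformly random delays between 150 and 750 milliseconds has a coefficient of variation of roughly 0.38, so it would pass this particular check. That is one reason timing is typically combined with other behavioral and fingerprint signals rather than used alone.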

Bot mitigation benchmark methodology

Tooling: We utilized OWASP ZAP, specifically its Fuzzer component, to generate and send HTTP requests. An enhanced HttpSender Script was developed within ZAP to customize the behavior of each outgoing request.

Behavior: For each simulated request, the script applied several evasion techniques so that the bot traffic would appear more human-like:

- User-Agent spoofing: For each request, a realistic User-Agent string was randomly selected from a predefined list. This prevents detection based on a single, static User-Agent.

- Language header variation: Each request received a random `Accept-Language` header, further diversifying the request profile.

- Standard headers: To maintain a degree of normalcy, standard `Accept` and `Accept-Encoding` headers were consistently included in all requests.

- Randomized delays: Short, random delays, ranging from 150 to 750 milliseconds, were introduced between consecutive requests to avoid the rapid, predictable timing often associated with simple bots.

- No JavaScript execution: The simulated bot did not execute JavaScript. This is a key differentiator from full browser emulation and tests the platform’s ability to detect bots without relying on client-side script challenges.
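The per-request behavior described above can be approximated in standalone Python. This is not the HttpSender script used in the benchmark, which ran inside ZAP and is not reproduced here. The User-Agent and `Accept-Language` lists, the header values, and the function names are placeholders we assume for illustration; only the 150 to 750 millisecond delay range comes from the methodology.

```python
import random

# Placeholder lists (assumed for this sketch); the benchmark's actual lists
# are not published in this article.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]
ACCEPT_LANGUAGES = ["en-US,en;q=0.9", "en-GB,en;q=0.8", "de-DE,de;q=0.9,en;q=0.5"]


def build_headers(rng: random.Random) -> dict:
    """Build one request's headers: random User-Agent and Accept-Language,
    plus fixed Accept and Accept-Encoding headers."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": rng.choice(ACCEPT_LANGUAGES),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Encoding": "gzip, deflate",
    }


def next_delay_seconds(rng: random.Random) -> float:
    """Random pause between consecutive requests, 150 to 750 milliseconds."""
    return rng.uniform(0.150, 0.750)
```

A driver loop would call `build_headers`, send the request with an HTTP client of choice, then sleep for `next_delay_seconds` before the next one. Because nothing here executes JavaScript, a client built this way would still fail any script-based challenge, matching the last point in the list above.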

FAQ

How does a bot manager differentiate between search engine bots and malicious bots when identifying bots in network traffic?

Bot managers use a combination of techniques. They often maintain allowlists of known search engine crawlers (like Googlebot or Bingbot), verified by IP address and reverse DNS lookup. For other traffic, they analyze behavior, User-Agent strings, and IP reputation. Malicious bots often exhibit aggressive request patterns, use known bad IPs, or have inconsistent fingerprints, all of which help identify bots that are not legitimate.
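The reverse DNS check mentioned above is commonly done as a forward-confirmed lookup: resolve the IP to a hostname, check that the hostname belongs to the crawler's domain, then resolve the hostname back and confirm it returns the same IP. The sketch below shows the idea. The function name, the injectable lookup parameters (added so the logic can be exercised without network access), and the short domain list are assumptions for this example; the crawler operators' own documentation is the authoritative source for current domains.

```python
import socket

# Assumed crawler hostname suffixes for illustration; check each search
# engine's documentation for the authoritative list.
CRAWLER_DOMAINS = (".googlebot.com", ".google.com", ".search.msn.com")


def is_verified_crawler(ip, reverse_lookup=None, forward_lookup=None):
    """Forward-confirmed reverse DNS check for a claimed crawler IP."""
    # Default to real DNS lookups; callers may inject stubs for testing.
    if reverse_lookup is None:
        reverse_lookup = lambda addr: socket.gethostbyaddr(addr)[0]
    if forward_lookup is None:
        forward_lookup = lambda host: socket.gethostbyname_ex(host)[2]

    try:
        # Step 1: IP -> hostname.
        host = reverse_lookup(ip).lower().rstrip(".")
        # Step 2: hostname must sit under a known crawler domain.
        if not host.endswith(CRAWLER_DOMAINS):
            return False
        # Step 3: hostname -> IPs must include the original address.
        return ip in forward_lookup(host)
    except OSError:
        # Lookup failures are treated as "not verified".
        return False
```

The forward confirmation matters because anyone who controls the reverse DNS zone for their own IP range can make it claim to be a crawler hostname; only the domain owner can make the forward record point back to that IP.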

What active challenges can be used to mitigate bot threats from malicious or unwanted bots, while still allowing legitimate bots like search engine crawlers?

Active challenges like CAPTCHAs or JavaScript-based tests are primarily aimed at suspicious traffic that isn’t clearly a known good bot. Bot managers are typically configured to let legitimate bots, such as search engine crawlers, bypass these challenges so the site remains indexable. The goal is to block or challenge unwanted bots without disrupting legitimate access or harming search engine visibility.

How effective is blocking malicious bot traffic in preventing issues like spam content or attempts to access multiple accounts, and can it also help against DDoS attacks?

Blocking malicious bot traffic addresses these issues directly. By identifying and stopping bots that post spam content or attempt credential stuffing attacks on multiple accounts, a bot manager mitigates these threats at the source. Dedicated DDoS protection services remain the specialized defense against volumetric attacks, but effective bot management reduces the volume of malicious traffic that can be a component of, or a precursor to, some DDoS attacks, and so offers a degree of mitigation.

What are the primary business challenges if a system fails to distinguish between malicious bots and legitimate bots, such as search engine bots?

Failing to identify bots properly can lead to significant business challenges. Blocking legitimate bots, especially search engine bots, can severely harm SEO and site visibility. Conversely, allowing malicious or unwanted bots can lead to resource drain, skewed analytics, content scraping, and security vulnerabilities. A robust bot manager aims to accurately identify and mitigate bot threats while ensuring legitimate bots can access the site as needed.
