Affective computing systems automatically identify emotions. These tools are used in several business cases, such as marketing, customer service, and human resources, to increase customer satisfaction and ensure safety.

Explore leading companies in affective computing as well as the definition, method and use cases of affective computing systems:

Leading companies in emotion detection

We identified the leading companies and their number of employees to give a sense of their market presence and specialization areas. While larger companies specialize in specific solutions such as marketing optimization, almost all companies in the space offer APIs that let other companies integrate affective computing into their own solutions.

| Company | # of employees | Example business applications |
|---|---|---|
| Cogito | 147 | Customer service optimization, sales optimization, care optimization in healthcare |
| Brand24 | 97 | Market research, customer feedback, online reputation management |
| Affectiva | 52 | Applications in automotive, marketing optimization |
| Sightcorp | 20 | Visitor insight, customer analytics |
| Kairos | 10 | Customer service optimization, access control |
| Realeyes | 6 | Marketing optimization |
| Park.IO | 1 | Financial fraud detection, customer feedback, sales prediction and more |

What is affective computing?

Affective computing combines AI, computer science, and cognitive science to create systems that recognize and respond to human emotions through cues like facial expressions, voice tone, and physiological signals. By integrating techniques like speech recognition and neural networks, these systems enhance human-computer interaction and emotional intelligence.1

While it may seem unusual that computers can do something inherently human, research shows that they achieve acceptable accuracy levels of emotion recognition from visual, textual, and auditory sources. Emotion AI helps businesses understand how customers feel in the moment, so they can respond in a more personal and helpful way.
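Combining cues from several channels is often done by late fusion: each modality's model outputs a probability distribution over emotions, and the system takes a weighted average of those distributions. The sketch below assumes that setup; the label set, the modality names, and the weights are hypothetical, and real systems may instead fuse features earlier in the pipeline.

```python
from typing import Dict

# Hypothetical label set shared by all modality models.
EMOTIONS = ("anger", "happiness", "neutral", "sadness")


def late_fusion(
    modality_probs: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Weighted average of per-modality emotion distributions.

    modality_probs maps a modality name (e.g. "face", "voice") to a
    probability distribution over EMOTIONS; weights maps the same names
    to non-negative reliability weights (illustrative values only).
    """
    total_weight = sum(weights[m] for m in modality_probs)
    if total_weight <= 0:
        raise ValueError("weights of the available modalities must sum to > 0")
    fused = {}
    for emotion in EMOTIONS:
        score = sum(weights[m] * probs.get(emotion, 0.0)
                    for m, probs in modality_probs.items())
        fused[emotion] = score / total_weight  # renormalize by total weight
    return fused


if __name__ == "__main__":
    probs = {
        "face": {"anger": 0.1, "happiness": 0.6, "neutral": 0.3, "sadness": 0.0},
        "voice": {"anger": 0.2, "happiness": 0.3, "neutral": 0.4, "sadness": 0.1},
    }
    fused = late_fusion(probs, weights={"face": 0.7, "voice": 0.3})
    print(max(fused, key=fused.get))
```

Dividing by the summed weights keeps the output a valid distribution even when a modality (say, the camera) is unavailable and is simply left out of `modality_probs`.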

Can software understand emotions?

Whether computers can truly understand emotions is an open question, but recent research shows that AI can identify emotions from facial expressions and speech. Because people express their feelings in surprisingly similar ways across cultures, computers can learn to detect these cues and classify emotions. In speech, software has outperformed humans: one system achieved an accuracy of 70%,2 while human accuracy is around 60%.3

You can read more about this in the related section.

Why is affective computing relevant now?

The increasing ubiquity of high-resolution cameras, high-speed internet, and machine learning capabilities, especially deep learning, enables the rise of affective computing. Affective computing relies on:

- high-resolution (ideally dual) cameras to capture videos

- fast broadband connections to communicate those videos

- machine learning models to identify emotions in those videos

All of these have improved greatly since the 2010s: almost all smartphone users have high-resolution cameras, and an increasing number of users have upload speeds capable of real-time video thanks to symmetric fiber-to-the-home (FTTH) installations. Finally, deep learning solutions, which require significant amounts of data and computing power, have become easier to deploy.

How does affective computing work?

Affective computing leverages advances in artificial intelligence and machine learning to enable human-computer interaction systems to recognize, interpret, and respond intelligently to human emotions.

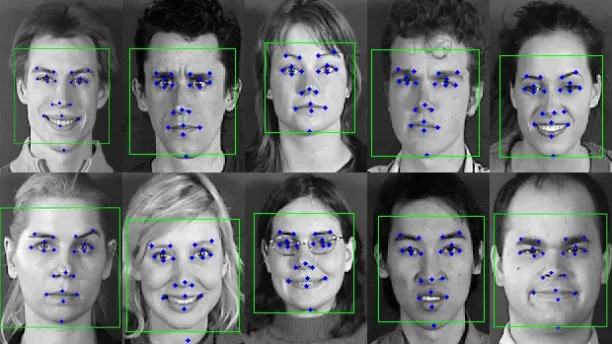

To normalize facial expressions, affective computing solutions working on images use these techniques:

- The face is extracted from the background.

- Facial geometry (e.g., locations of eyes, nose, mouth) is estimated.

- Based on facial geometry, facial expressions are normalized, removing the impact of head rotations and other head movements.

Figure 1. Detection of Geometric Facial Features of different emotions

Source: Interaction Design Foundation4
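The normalization steps above can be sketched for the simplest case, in-plane head rotation (roll). This minimal example assumes the face has already been extracted and a landmark detector has produced 2-D points; the eye indices are hypothetical, since every detector uses its own point layout. It moves the eye midpoint to the origin, rotates so the eyes lie on a horizontal line, and divides by the inter-ocular distance. Out-of-plane rotations (yaw, pitch) need a 3-D face model and are not handled here.

```python
import numpy as np


def normalize_landmarks(landmarks: np.ndarray, left_eye: int, right_eye: int) -> np.ndarray:
    """Remove in-plane rotation, translation and scale from 2-D facial landmarks.

    landmarks: (N, 2) array of (x, y) points for one detected face.
    left_eye, right_eye: row indices of the two eye centres (hypothetical
    indexing; real landmark detectors define their own point layouts).
    """
    pts = np.asarray(landmarks, dtype=float)
    left, right = pts[left_eye], pts[right_eye]
    centre = (left + right) / 2.0
    dx, dy = right - left
    angle = np.arctan2(dy, dx)   # head roll relative to the horizontal axis
    scale = np.hypot(dx, dy)     # inter-ocular distance
    if scale == 0:
        raise ValueError("eye landmarks coincide")
    c, s = np.cos(-angle), np.sin(-angle)
    rot = np.array([[c, -s], [s, c]])  # rotation by -angle levels the eyes
    # Translate, rotate, then scale so the eyes end up at (-0.5, 0) and (0.5, 0).
    return (pts - centre) @ rot.T / scale


if __name__ == "__main__":
    face = np.array([[0.0, 0.0], [0.0, 2.0], [1.0, 1.0]])  # left eye, right eye, nose
    print(normalize_landmarks(face, left_eye=0, right_eye=1).round(3))
```

After this step, the same expression produces similar landmark coordinates regardless of where the face sits in the frame, how large it is, or how the head is tilted, which is what makes the geometric features comparable across images.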

Explore how text, audio, and visual inputs are analyzed for affect detection using emotional cues and signal-based representations:5

Textual-based emotion recognition

Text-based emotion recognition relies on analyzing textual data to extract emotional information, often leveraging machine learning techniques and artificial neural networks. Traditional approaches are either knowledge-based systems that require extensive emotional vocabularies (lexicons) or statistical models that require large labeled datasets.

The rise of online platforms has generated significant textual data expressing human affect. Tools such as WordNet Affect, SenticNet, and SentiWordNet help recognize emotions based on semantic analysis.6 Deep learning (DL) models have further advanced this field, enabling emotionally intelligent computers to perform end-to-end sentiment analysis and uncover subtle emotional nuances within text.
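A knowledge-based (lexicon) approach can be sketched in a few lines: tokenize the text and count which emotion categories its words belong to. The tiny `LEXICON` here is a made-up stand-in for resources such as WordNet Affect, whose real format and coverage differ, and the sketch ignores negation and context, which is the gap that deep learning models aim to close.

```python
import re
from collections import Counter
from typing import Dict, Set

# Toy emotion lexicon for illustration only (hypothetical entries).
LEXICON: Dict[str, Set[str]] = {
    "happy": {"joy"},
    "delighted": {"joy"},
    "angry": {"anger"},
    "furious": {"anger"},
    "afraid": {"fear"},
    "sad": {"sadness"},
}


def lexicon_emotions(text: str) -> Counter:
    """Count emotion categories whose lexicon words appear in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())  # crude lowercase tokenizer
    counts: Counter = Counter()
    for token in tokens:
        for emotion in LEXICON.get(token, ()):  # unknown words contribute nothing
            counts[emotion] += 1
    return counts


if __name__ == "__main__":
    print(lexicon_emotions("I was furious at first, but now I'm happy and delighted."))
```

Here the example sentence yields two "joy" hits and one "anger" hit; note that "not happy" would still count as joy, which illustrates why pure lexicon matching is brittle.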

For more information on sentiment analysis data, you may read these articles:

- Top 12 Sentiment Analysis Datasets

- Examples of ChatGPT Sentiment Analysis

- Sentiment Analysis Benchmark Testing: ChatGPT, Claude & DeepSeek

You may also find our sentiment analysis research category helpful.

Recent research has introduced approaches such as multi-label emotion classification architectures and emotion-enriched word representations, improving systems' ability to detect emotional states and account for cultural differences. Using benchmark datasets drawn from sources such as tweets and WikiArt, affective computing systems classify emotions in text across diverse domains, including emotional experience in art and commerce.7
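Multi-label classification differs from the usual single-label setup mainly in its output step: each emotion gets an independent sigmoid score instead of competing in a softmax, so one tweet can be tagged as both joyful and surprised, or with no emotion at all. A minimal sketch of that decision step, assuming the logits come from some trained model (not shown) and a hypothetical 0.5 threshold:

```python
import math
from typing import Dict, List


def _sigmoid(z: float) -> float:
    """Numerically stable logistic function (avoids overflow for large |z|)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def multilabel_predict(logits: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Return every emotion whose independent sigmoid score reaches the threshold.

    Unlike softmax, which forces a single winning class, each label is scored
    on its own, so a text can receive several emotions or none.
    """
    return sorted(e for e, z in logits.items() if _sigmoid(z) >= threshold)


if __name__ == "__main__":
    # Hypothetical logits for a tweet that is both joyful and surprised.
    print(multilabel_predict({"joy": 2.1, "surprise": 0.4, "anger": -1.5, "sadness": -3.0}))
```

In training, such models are usually paired with a per-label binary cross-entropy loss, and the threshold can be tuned per emotion on validation data.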

Audio-based emotion recognition

In audio emotion recognition, systems analyze human affect from speech signals by recognizing patterns in acoustic features such as pitch, tone, and cadence. Affective computing systems, including wearables equipped with audio sensors, use tools like openSMILE for feature extraction, while classifiers such as Hidden Markov Models (HMMs) and Support Vector Machines (SVMs) process the extracted features to identify emotional cues.8
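To make "acoustic features" concrete, here is a hand-rolled sketch of a few low-level descriptors of the kind that toolkits such as openSMILE extract: frame energy (loudness), zero-crossing rate, and an autocorrelation pitch estimate, summarized into an utterance-level vector that a classifier such as an SVM could consume. This is not the openSMILE API; the frame sizes and the 60 to 400 Hz pitch range are assumptions, and there is no voicing detection, so silent or unvoiced frames yield meaningless pitch values.

```python
import numpy as np


def frame_signal(x: np.ndarray, frame_len: int, hop: int) -> np.ndarray:
    """Split a 1-D signal into overlapping frames (drops the incomplete tail)."""
    if len(x) < frame_len:
        raise ValueError("signal shorter than one frame")
    n_frames = 1 + (len(x) - frame_len) // hop
    return np.stack([x[i * hop: i * hop + frame_len] for i in range(n_frames)])


def estimate_pitch(frame: np.ndarray, sr: int, fmin: float = 60.0, fmax: float = 400.0) -> float:
    """Crude autocorrelation pitch estimate in Hz; assumes a voiced frame."""
    frame = frame - frame.mean()
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]  # lags 0..N-1
    lag_min, lag_max = int(sr / fmax), int(sr / fmin)
    lag = lag_min + int(np.argmax(ac[lag_min:lag_max]))  # strongest periodicity
    return sr / lag


def prosodic_features(x: np.ndarray, sr: int, frame_len: int = 1024, hop: int = 512) -> np.ndarray:
    """Utterance-level statistics of frame energy, zero-crossing rate and pitch."""
    frames = frame_signal(np.asarray(x, dtype=float), frame_len, hop)
    energy = np.sqrt(np.mean(frames ** 2, axis=1))                        # RMS loudness
    zcr = np.mean(np.abs(np.diff(np.sign(frames), axis=1)) > 0, axis=1)   # sign changes per sample
    pitch = np.array([estimate_pitch(f, sr) for f in frames])
    # Mean/std summaries: a fixed-length vector an SVM (or similar) could classify.
    return np.array([energy.mean(), energy.std(), zcr.mean(), pitch.mean(), pitch.std()])


if __name__ == "__main__":
    sr = 16000
    t = np.arange(sr) / sr
    tone = 0.5 * np.sin(2 * np.pi * 200 * t + 0.3)  # synthetic 200 Hz "voice"
    print(prosodic_features(tone, sr).round(3))
```

Real feature sets are much larger (spectral, voice-quality, and delta features), but the pattern is the same: frame-level descriptors first, then statistics over the utterance.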

Advances in deep learning reduce the need for manual feature engineering: convolutional neural networks (CNNs) can be trained directly on raw audio data. By capturing both temporal and spectral characteristics of speech, these networks can outperform pipelines built on hand-crafted features, helping emotion-aware computers respond to users more naturally.
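The first layer of a CNN that works on raw audio is a bank of 1-D filters slid over the waveform. The sketch below shows that forward pass in NumPy: a strided "valid" convolution (implemented as cross-correlation, as deep learning libraries do), a ReLU, and global max-pooling over time. The kernels are random placeholders; in a real model they are learned, and many such layers are stacked before a classifier.

```python
import numpy as np


def conv1d(x: np.ndarray, kernels: np.ndarray, stride: int = 1) -> np.ndarray:
    """Valid 1-D convolution of a waveform with a filter bank.

    x: (T,) raw waveform; kernels: (C, K) bank of C filters of length K.
    Returns (C, T_out) feature maps.
    """
    n_filters, k = kernels.shape
    t_out = 1 + (len(x) - k) // stride
    # Indices of every sliding window: shape (t_out, k).
    idx = np.arange(k)[None, :] + stride * np.arange(t_out)[:, None]
    windows = x[idx]
    return kernels @ windows.T  # (C, K) @ (K, t_out) -> (C, t_out)


def raw_audio_embedding(x: np.ndarray, kernels: np.ndarray, stride: int = 4) -> np.ndarray:
    """One conv layer + ReLU + global max-pool: a fixed-size vector per clip."""
    feature_maps = np.maximum(conv1d(x, kernels, stride), 0.0)  # ReLU
    return feature_maps.max(axis=1)                              # (C,)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    waveform = rng.standard_normal(16000)         # stand-in for 1 s of 16 kHz audio
    kernels = 0.1 * rng.standard_normal((8, 64))  # untrained placeholder filters
    print(raw_audio_embedding(waveform, kernels).shape)
```

Max-pooling over time yields a fixed-size vector regardless of clip length, which is one reason this pattern is common; it is a design choice rather than the only option (average pooling or recurrent layers are alternatives).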

Visual-based emotion recognition

Visual emotion recognition focuses on identifying human feelings through facial expressions and other visual stimuli, utilizing facial recognition technologies and computer vision techniques. Systems rely on datasets like CK+ and JAFFE to train algorithms capable of detecting emotional states from facial movements, expressions, and other modalities.9

Methods like elastic bunch graph matching dynamically analyze facial deformations across frames, while attention-based modules enhance focus on significant facial regions.10 Techniques such as auto-encoders and local binary patterns (LBP) extract spatial and textural features, enabling systems to understand human emotions more effectively.
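Local binary patterns are simple enough to show directly. In the basic 3x3 variant, each pixel is compared with its eight neighbours, each comparison contributes one bit, and the histogram of the resulting 8-bit codes serves as a texture descriptor. This is a minimal sketch of that basic variant; face systems typically split the face into a grid of cells and concatenate per-cell histograms, and often use rotation-invariant or "uniform" pattern variants that are not shown here.

```python
import numpy as np

# Offsets of the 8 neighbours, clockwise from top-left; neighbour i sets bit i.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_image(gray: np.ndarray) -> np.ndarray:
    """Basic 3x3 local binary pattern codes for the interior pixels of a 2-D image."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    centre = g[1:-1, 1:-1]
    codes = np.zeros(centre.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(NEIGHBOURS):
        # Shifted view aligned so each element is that neighbour of the centre pixel.
        neighbour = g[1 + dy: h - 1 + dy, 1 + dx: w - 1 + dx]
        codes |= (neighbour >= centre).astype(np.uint8) << bit
    return codes


def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Normalised 256-bin histogram of LBP codes for one image region."""
    codes = lbp_image(gray)
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()


if __name__ == "__main__":
    patch = np.array([[9, 0, 0], [0, 5, 0], [0, 0, 0]])
    print(lbp_image(patch))  # only the top-left neighbour is >= the centre
```

Because each code depends only on whether neighbours are brighter or darker than the centre, LBP is largely insensitive to uniform lighting changes, which is part of its appeal for faces.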

Research into micro-expressions (brief, involuntary facial movements) has further refined human affect recognition by uncovering hidden emotional cues. By integrating these findings, affective technologies can better recognize, and even synthesize, emotions, enabling more appropriate responses in human-machine interactions.
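One way to operationalize "brief" is to look for short bursts of facial motion. This sketch assumes a per-frame motion magnitude has already been computed (for example, from optical flow over the face region) and keeps only above-threshold runs lasting at most `max_duration_s`. The 0.5 s default reflects the commonly cited upper bound for micro-expressions but should be treated as an assumption, as should the motion threshold; this is a candidate-spotting heuristic, not a full micro-expression recognizer.

```python
from typing import List, Sequence, Tuple


def brief_motion_events(
    motion: Sequence[float],
    fps: float,
    motion_threshold: float,
    max_duration_s: float = 0.5,
) -> List[Tuple[int, int]]:
    """Return (start_frame, end_frame_exclusive) for short bursts of facial motion.

    motion[i] is a per-frame facial-motion magnitude computed elsewhere.
    Runs above motion_threshold lasting at most max_duration_s are kept as
    micro-expression candidates; longer runs are treated as ordinary expressions.
    """
    events = []
    start = None
    # A trailing -inf sentinel closes a run that lasts until the final frame.
    for i, m in enumerate(list(motion) + [float("-inf")]):
        if m > motion_threshold and start is None:
            start = i
        elif m <= motion_threshold and start is not None:
            if (i - start) / fps <= max_duration_s:
                events.append((start, i))
            start = None
    return events


if __name__ == "__main__":
    # 10 fps: a 0.2 s burst (kept) followed by a 0.7 s expression (discarded).
    signal = [0, 0, 1, 1, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0]
    print(brief_motion_events(signal, fps=10, motion_threshold=0.5))
```

Detecting such short events reliably in practice requires high-frame-rate video, since a sub-second movement spans only a handful of frames at typical webcam rates.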

If you wish to work with an AI data service to obtain labeled training data, the following articles can help:

- Top 12 AI Data Collection Services & Selection Criteria

- Data Crowdsourcing Platform: 10+ Companies & Criteria

Affective computing use cases

Affective computing can be useful in a wide variety of use cases, including commercial functions and potentially even HR. For example, a department-wide employee engagement metric based on employees' facial expressions could show the company how recent developments are affecting morale. Current applications include:

Marketing

There are numerous startups helping companies optimize marketing spend by allowing them to analyze emotions of viewers.

Customer service

Both in contact centers and retail locations, startups are providing companies estimates of customer emotions. These estimates are used to guide customer service responses and measure the effectiveness of customer service.
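A sketch of how per-utterance emotion estimates could drive real-time guidance: keep a rolling window of valence scores and flag the moments where the average turns clearly negative, so a supervisor or an on-screen prompt can react while the conversation is still going. The valence scale, window size, and threshold are all hypothetical, and the upstream emotion model is not shown.

```python
from collections import deque
from typing import Deque, Iterable, List


def flag_negative_stretches(
    valence_stream: Iterable[float], window: int = 5, threshold: float = -0.3
) -> List[int]:
    """Return indices where the rolling mean valence falls below threshold.

    valence_stream: per-utterance emotion estimates in [-1, 1]
    (negative = unhappy), as produced by some upstream emotion model.
    No flag is raised until the window is full.
    """
    recent: Deque[float] = deque(maxlen=window)  # oldest score drops out automatically
    alerts = []
    for i, v in enumerate(valence_stream):
        recent.append(v)
        if len(recent) == window and sum(recent) / window < threshold:
            alerts.append(i)
    return alerts


if __name__ == "__main__":
    call = [0.2, 0.0, -0.5, -0.6, -0.4, 0.5]
    print(flag_negative_stretches(call, window=3))
```

In practice, one would likely notify only when the flag first switches on rather than at every flagged index, to avoid repeated alerts during a single difficult stretch.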

Human resources

Affective computing in HR can help businesses enhance recruitment by assessing candidates' emotional communication, improve employee training through intelligent simulations, and track employee satisfaction by monitoring stress and anxiety levels. However, ethical concerns about consent and about relying on emotion estimates whose accuracy may be limited must be addressed.

Healthcare industry

Wearables with the ability to detect emotions, such as Embrace by Empatica, have already been used by researchers to study stress, autism, epilepsy, and other disorders.

Other

Emotion recognition can also complement security and fraud identification efforts. In addition, affective computing can support the in-store shopping experience, autonomous driving, safety, driving performance improvement, education effectiveness measurement, adaptive games, and workplace design.

For more details, feel free to visit our affective computing applications guide with more than 20 use cases.

What are alternatives/substitutes to emotion recognition?

Depending on the specific use case, there are alternatives to affective computing. For example:

- Marketing: Instead of relying on the emotions of potential customers, companies more commonly run pilots to assess how successful a planned marketing campaign is likely to be.

- Customer service: Voice is a good predictor of emotions, which tend to correlate with customer satisfaction.11 Companies can also track customer satisfaction with surveys. However, surveys are completed at the end of the customer experience, so they do not allow companies to make real-time adjustments or offer real-time guidance to their personnel.

External Links

- 1. Shoumy, N. J., Ang, L. M., Seng, K. P., Rahaman, D. M., & Zia, T. (2020). Multimodal big data affective analytics: A comprehensive survey using text, audio, visual and physiological signals. Journal of Network and Computer Applications, 149, 102447.

- 2. Kim, J., & André, E. (2008). Emotion recognition based on physiological changes in music listening. IEEE Transactions on Pattern Analysis and Machine Intelligence, 30, 2067–2083.

- 3. Dellaert, F., Polzin, T., & Waibel, A. (1996). Recognizing emotion in speech. In Proc. of ICSLP 1996, Philadelphia, PA, pp. 1970–1973.

- 4. What is Affective Computing? — updated 2025 | IxDF. Interaction Design Foundation

- 5. Afzal, S., Khan, H. A., Piran, M. J., & Lee, J. W. (2024). A comprehensive survey on affective computing: Challenges, trends, applications, and future directions. IEEE Access.

- 6. J. Suttles and N. Ide, “Distant supervision for emotion classification with discrete binary values”, Proc. 14th Int. Conf. Comput. Linguistics Intell. Text Process. (CICLing), pp. 121-136, 2013.

- 7. S. Mohammad and S. Kiritchenko, “WikiArt emotions: An annotated dataset of emotions evoked by art”, Proc. 11th Int. Conf. Lang. Resour. Eval. (LREC), pp. 1-14, 2018.

- 8. F. Eyben, M. Wöllmer and B. Schuller, “OpenEAR—Introducing the Munich open-source emotion and affect recognition toolkit”, Proc. 3rd Int. Conf. Affect. Comput. Intell. Interact. Workshops, pp. 1-6, Sep. 2009.

- 9. S. Ghosh, E. Laksana, L.-P. Morency and S. Scherer, “Representation learning for speech emotion recognition”, Proc. Interspeech, pp. 3603-3607, Sep. 2016.

- 10. L. Chen, W. Su, Y. Feng, M. Wu, J. She and K. Hirota, “Two-layer fuzzy multiple random forest for speech emotion recognition in human-robot interaction”, Inf. Sci., vol. 509, pp. 150-163, Jan. 2020.

- 11. Meirovich, G., Bahnan, N., & Haran, E. (2013). The impact of quality and emotions in customer satisfaction. Journal of Applied Management and Entrepreneurship, 18(1), 27-50.
