Healthcare systems face challenges in delivering accurate diagnoses, timely interventions, and personalized treatments, often because critical patient information is scattered across different data sources.

Multimodal AI offers a solution by combining medical images, clinical notes, lab results, and other data into a unified framework that mirrors how clinicians think and reason.

Discover how multimodal AI is applied in healthcare, its key use cases, real-world examples, and the benefits and limitations of this emerging approach.

What is multimodal AI in healthcare?

Multimodal artificial intelligence in healthcare refers to systems that learn from and process multiple forms of input data simultaneously.

Traditional artificial intelligence in the medical space often relied on a single modality, such as medical images or electronic health records. In contrast, multimodal models bring together imaging data like X-rays or MRIs, structured data such as laboratory values, tabular data from hospital records, free-text clinical notes, and, in some cases, genomic data or physiological signals.

By combining these individual data modalities into a unified processing framework, multimodal AI systems can extract complementary information that can improve diagnostic accuracy, risk prediction, and treatment planning compared to single-modality models.

This capacity to use diverse data mirrors how clinicians reason: by combining visual, numerical, and narrative information to arrive at a medical diagnosis.

Different approaches to multimodal AI in healthcare

| Approach | Description | Advantages | Limitations | Examples |
|---|---|---|---|---|
| Late fusion | Each modality is processed separately and outputs are combined at the decision level with ensemble methods | Flexible, works with missing data, easy to implement | Limited cross-modal interaction | Image model plus text model combined |
| Intermediate fusion | Each modality is encoded into embeddings that are fused before prediction | Learns interactions, better accuracy and generalization | Needs aligned data, computationally heavy | ClinicalBERT with imaging features |
| Early fusion | Raw inputs from different modalities are integrated before feature extraction | Captures raw-level interactions | Hard to harmonize different data formats | MRI scans with segmentation and structured data |
| Specialized architectures | Domain-specific models such as graph neural networks and vision-language systems | Tailored to tasks, supports advanced healthcare uses | Still experimental, requires specialized datasets | GNNs for drug response, vision-language for radiology |

1. Late fusion

Late fusion is one of the most widely used approaches for building multimodal AI systems in healthcare.

In this method, each data modality is processed independently by a dedicated model. For example, convolutional neural networks are often used for medical images, while transformers or recurrent neural networks are applied to text data such as clinical notes. The outputs from these unimodal models are then combined at the decision level using ensemble methods like weighted averaging, boosting, or random forests.

The main advantage of late fusion is its flexibility, as it does not require paired training data and can easily handle incomplete datasets where one or more modalities are missing. However, its limitation is that it does not fully capture the complex interactions between modalities, since integration happens only at the end of the pipeline.
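As a rough illustration of decision-level fusion, the sketch below averages per-modality probabilities with fixed weights and renormalizes when a modality is missing. The modality names, weights, and probabilities are hypothetical and chosen only for the example; in practice the weights would be tuned on validation data, or replaced by a learned ensemble.

```python
from typing import Dict, Optional


def late_fusion(
    probs: Dict[str, Optional[float]],
    weights: Dict[str, float],
) -> float:
    """Weighted average of per-modality probabilities, skipping missing ones."""
    available = {m: p for m, p in probs.items() if p is not None}
    if not available:
        raise ValueError("at least one modality must provide a prediction")
    # Renormalize over the modalities that are actually present.
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * p for m, p in available.items()) / total_weight


# Hypothetical outputs of three unimodal models for one patient.
weights = {"image": 0.5, "notes": 0.3, "labs": 0.2}
full = late_fusion({"image": 0.8, "notes": 0.6, "labs": 0.4}, weights)
no_image = late_fusion({"image": None, "notes": 0.6, "labs": 0.4}, weights)
```

Because the combination step only sees each model's output, dropping the imaging model changes the weighting but not the code path, which is the flexibility described above.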

2. Intermediate fusion

Intermediate fusion offers richer integration by combining modalities at the feature level rather than the decision level. Each modality is first encoded into a dense vector representation or embedding using specialized models such as deep neural networks for imaging, ClinicalBERT for text, or statistical encoders for tabular data. These embeddings are then concatenated or otherwise fused before the prediction layer.

This approach enables the model to learn complex relationships across modalities, improving diagnostic accuracy and generalization. However, it requires careful alignment of input data in terms of timing, resolution, and structure, which can be computationally demanding and technically challenging.
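A minimal sketch of feature-level fusion, assuming each encoder has already produced a fixed-size embedding per patient. The embedding sizes are arbitrary, the embeddings are random stand-ins, and the linear head is untrained; the point is only where the concatenation happens relative to the prediction layer.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical per-modality embeddings for a batch of 4 patients
# (random stand-ins for the outputs of real encoders).
image_emb = rng.normal(size=(4, 16))    # e.g. from an image encoder
text_emb = rng.normal(size=(4, 8))      # e.g. from a clinical-text encoder
tabular_emb = rng.normal(size=(4, 4))   # e.g. from a tabular encoder

# Intermediate fusion: concatenate along the feature axis so that each row
# is one patient's joint representation.
fused = np.concatenate([image_emb, text_emb, tabular_emb], axis=1)

# Untrained linear prediction head with a sigmoid, for illustration only.
W = rng.normal(scale=0.1, size=(fused.shape[1], 1))
b = np.zeros(1)
risk = 1.0 / (1.0 + np.exp(-(fused @ W + b)))
```

Note that every row must hold embeddings from the same patient and a comparable time window, which is the alignment requirement mentioned above.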

ClinicalBERT

ClinicalBERT is a deep learning model that adapts BERT (Bidirectional Encoder Representations from Transformers) to process clinical notes from electronic health records.

Unlike standard BERT, which is trained on general corpora like Wikipedia, ClinicalBERT is pre-trained on large datasets of clinical notes to capture the jargon, abbreviations, and long-range dependencies typical of medical text.

This specialization allows it to generate high-quality representations of clinical language and improve predictions for important healthcare tasks. For example, ClinicalBERT was shown to outperform traditional models in predicting 30-day hospital readmission, both from discharge summaries and early admission notes, while also offering interpretable outputs through attention weights that highlight which parts of the notes influenced predictions.1

3. Early fusion

Early fusion is less common in healthcare, but it is still worth considering. It integrates raw input data from different modalities before feature extraction. For example, imaging pixels can be combined with pixel-aligned segmentation maps, or structured clinical variables can be embedded alongside raw image features.

The difficulty lies in harmonizing different data formats and scales, which makes early fusion more challenging than other approaches. Despite these obstacles, early fusion can, in theory, allow the model to learn fine-grained interactions between modalities directly from the raw data.

Figure 1: Fusion stages in a multimodal pipeline: early (before encoding), intermediate (after feature extraction), and late (after prediction).2
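For the early fusion case, one simple form is to stack an image and its pixel-aligned segmentation map as input channels. The sketch below assumes a single 64×64 grayscale image with 12-bit intensities and uses a thresholded copy as a stand-in segmentation map; both are synthetic and only illustrate the scale harmonization and stacking steps.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical raw inputs for one patient (random stand-ins, not real data).
image = rng.integers(0, 4096, size=(64, 64)).astype(np.float32)  # 12-bit range
mask = (image > 2048).astype(np.float32)  # stand-in pixel-aligned segmentation

# Harmonize scales before fusing: z-score the intensities; the mask is 0/1.
image_norm = (image - image.mean()) / (image.std() + 1e-8)

# Early fusion: stack as channels so one encoder sees both from its first layer.
fused_input = np.stack([image_norm, mask], axis=0)  # shape (2, 64, 64)
```

This only works because the two arrays share the same pixel grid; modalities without a natural spatial or temporal alignment are much harder to fuse at this stage.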

4. Specialized architectures

Beyond these three general fusion strategies, new model architectures are emerging to address domain-specific needs.

- Graph neural networks are being used to represent and learn relationships between clinical variables, imaging findings, and biological pathways. This approach is helpful in tasks such as drug response prediction or multi-organ disease modeling, where relational data is central.

- Vision-language models have gained traction in radiology, where medical images are paired with corresponding reports or natural language queries. These multimodal models support applications such as report drafting, image–text retrieval, and visual question answering.
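Image–text retrieval with a vision-language model usually comes down to comparing embeddings in a shared space. The sketch below assumes that images and reports have already been embedded into the same 3-dimensional space (hand-written toy vectors, not outputs of a real model) and ranks reports for each image by cosine similarity.

```python
import numpy as np


def cosine_similarity_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


# Toy embeddings in a shared space (hypothetical values).
image_emb = np.array([[1.0, 0.0, 0.0],
                      [0.0, 1.0, 0.0]])
report_emb = np.array([[0.1, 0.9, 0.0],
                       [0.8, 0.1, 0.1],
                       [0.0, 0.0, 1.0]])

sim = cosine_similarity_matrix(image_emb, report_emb)  # shape (2, 3)
best_report = sim.argmax(axis=1)  # index of the closest report per image
```

Here the first image is matched to report 1 and the second to report 0. The same similarity matrix, read column-wise, supports text-to-image search.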

How does multimodal AI in healthcare work?

1. Data collection and preprocessing

The development of a multimodal artificial intelligence system begins with the collection of diverse patient data. Typical data sources include electronic health records, imaging archives, genomic databases, and bedside monitoring systems.

Each modality has its own formats and requires tailored preprocessing. Clinical notes are tokenized and embedded using pretrained language models such as ClinicalBERT. Medical images are processed through convolutional neural networks trained on imaging datasets such as X-rays or CT scans. Time-series data, including vitals and lab tests, may be summarized into statistical features or modeled using recurrent neural networks to capture temporal patterns. This process transforms raw clinical data into standardized and machine-readable representations.
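As one example of the statistical-feature route for time-series data, the sketch below summarizes a short series of measurements into a fixed-length feature dictionary. The chosen statistics and the heart-rate values are illustrative assumptions; real pipelines typically also handle irregular sampling and missing measurements.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence


def summarize_series(values: Sequence[float]) -> Dict[str, float]:
    """Collapse a variable-length series into fixed-length summary features."""
    if not values:
        raise ValueError("series must contain at least one measurement")
    n = len(values)
    return {
        "mean": mean(values),
        "std": pstdev(values),
        "min": min(values),
        "max": max(values),
        "last": values[-1],
        # Average change per step between the first and last measurement.
        "trend": (values[-1] - values[0]) / (n - 1) if n > 1 else 0.0,
    }


# Hypothetical heart-rate readings (beats per minute) over five time points.
heart_rate = [82, 85, 90, 97, 104]
features = summarize_series(heart_rate)
```

The resulting dictionary has the same keys regardless of how many readings were taken, which makes it easy to combine with tabular features.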

2. Feature encoding and integration

Once individual modalities have been encoded into embeddings, they must be integrated to create a unified patient representation.

Intermediate fusion typically involves concatenating these embeddings to form a multimodal input vector, which is then processed by deep neural networks to perform supervised learning. In late fusion, predictions from unimodal models are combined using ensemble techniques or survival analysis models. The choice of integration method depends on the clinical task and the level of interaction required between data modalities.

3. Handling incomplete data

In practice, multimodal datasets are often incomplete due to missing imaging studies, absent lab results, or gaps in clinical documentation. To address this, researchers use a variety of strategies, including statistical imputation techniques like MICE, learnable embeddings that act as placeholders for missing modalities, or transformer-based architectures that can flexibly process variable numbers of inputs. These methods ensure that multimodal AI systems remain functional even when not all data sources are available.
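The placeholder-embedding idea can be sketched as follows. The embedding sizes are hypothetical, and the placeholders are plain zero vectors here; in a trained model they would be learnable parameters. A presence mask is appended so a downstream model can tell a placeholder from a real embedding.

```python
from typing import Dict, Optional

import numpy as np

# Hypothetical embedding sizes per modality.
EMB_DIM = {"image": 4, "notes": 3, "labs": 2}

# Stand-ins for learnable placeholder embeddings (zeros for illustration).
placeholders = {m: np.zeros(d) for m, d in EMB_DIM.items()}


def build_patient_vector(embeddings: Dict[str, Optional[np.ndarray]]) -> np.ndarray:
    """Concatenate embeddings, substituting placeholders for missing modalities."""
    parts, mask = [], []
    for modality in EMB_DIM:
        emb = embeddings.get(modality)
        present = emb is not None
        parts.append(emb if present else placeholders[modality])
        mask.append(1.0 if present else 0.0)
    # The trailing mask marks which modalities were actually observed.
    return np.concatenate(parts + [np.array(mask)])


# A patient with notes and labs but no imaging study.
vec = build_patient_vector({"notes": np.ones(3), "labs": np.ones(2)})
```

The output always has the same length (here 4 + 3 + 2 embedding values plus 3 mask values), so the downstream model does not need a separate code path for incomplete patients.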

4. Interpretability and validation

Interpretability is essential in the medical domain, where clinicians must trust and understand AI outputs. Techniques such as Shapley value analysis and attention visualization help quantify the contribution of each modality to the model’s predictions.
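With only a handful of modalities, Shapley values can be computed exactly by evaluating the model on every subset of modalities. The sketch below does this for three modalities using a hypothetical table of AUROC scores per subset; the scores are invented for illustration, and libraries used in practice usually approximate this computation at the feature level rather than enumerating subsets.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence


def shapley_values(
    players: Sequence[str],
    value_fn: Callable[[FrozenSet[str]], float],
) -> Dict[str, float]:
    """Exact Shapley value of each player, enumerating all coalitions."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal gain from adding p to coalition s.
                total += weight * (value_fn(s | {p}) - value_fn(s))
        phi[p] = total
    return phi


# Hypothetical AUROC for a model restricted to each subset of modalities.
scores = {
    frozenset(): 0.50,
    frozenset({"image"}): 0.70,
    frozenset({"notes"}): 0.60,
    frozenset({"labs"}): 0.65,
    frozenset({"image", "notes"}): 0.78,
    frozenset({"image", "labs"}): 0.80,
    frozenset({"notes", "labs"}): 0.70,
    frozenset({"image", "notes", "labs"}): 0.85,
}
contributions = shapley_values(["image", "notes", "labs"], scores.__getitem__)
```

The per-modality contributions add up to the gain of the full model over the no-input baseline (0.85 − 0.50 in this toy table), so each value can be read as that modality's share of the improvement.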

This allows developers and healthcare providers to verify that the system aligns with clinical reasoning. At the same time, advanced training procedures are required to ensure reliability. Preventing data leakage, for example, by splitting datasets at the patient level rather than the sample level, is a critical step to avoid artificially inflated performance metrics.
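Patient-level splitting can be sketched in a few lines. The example assumes each sample is a dictionary with a `patient_id` key (a hypothetical schema) and assigns whole patients, not individual samples, to the test set.

```python
import random
from typing import Dict, List, Tuple


def split_by_patient(
    samples: List[Dict],
    test_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[Dict], List[Dict]]:
    """Split samples so that no patient appears in both train and test."""
    patient_ids = sorted({s["patient_id"] for s in samples})
    random.Random(seed).shuffle(patient_ids)
    n_test = max(1, round(len(patient_ids) * test_fraction))
    test_ids = set(patient_ids[:n_test])
    train = [s for s in samples if s["patient_id"] not in test_ids]
    test = [s for s in samples if s["patient_id"] in test_ids]
    return train, test


# Hypothetical dataset: 10 patients with 4 imaging studies each.
samples = [{"patient_id": f"p{i % 10}", "study": i} for i in range(40)]
train, test = split_by_patient(samples)
```

A sample-level random split of the same data would almost certainly place studies from the same patient on both sides, which is the leakage scenario described above.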

5. End-to-end decision support

The final output of a multimodal AI system is a unified prediction or recommendation for a medical task such as disease detection, risk stratification, or prognosis. By moving from raw multimodal input through preprocessing, encoding, integration, and interpretation, these systems provide clinicians with decision support that reflects a more comprehensive view of the patient than unimodal models can offer.

Multimodal AI in healthcare use cases

Clinical diagnostics

Multimodal AI is widely applied in diagnostic settings because of its ability to combine complementary signals. This combination improves accuracy in detecting diseases that are difficult to diagnose with a single modality.

- Pneumonia detection: By combining chest X-rays with electronic health record variables such as white blood cell counts and temperature, multimodal models detect pneumonia more reliably than imaging alone.

- Lung cancer risk prediction: Models that integrate imaging data with tabular data and patient history can estimate lung cancer risk more accurately, enabling earlier intervention.

- Fracture identification: Integrating radiographic images with clinical notes helps detect subtle fractures that might be missed when relying on imaging or text alone.

- Triage systems: In emergency or radiology workflows, multimodal models prioritize patient cases by combining both imaging findings and clinical data. This helps clinicians identify high-risk patients faster.

Prognostic tasks

Prognosis benefits significantly from multimodal approaches because survival and risk predictions depend on multiple data sources.

- Hospital length-of-stay prediction: Models combine structured data, clinical notes, and time-series variables such as vitals to predict how long patients are likely to remain in the hospital.

- ICU mortality prediction: Multimodal artificial intelligence integrates laboratory data, physiological monitoring, and notes to provide early warnings of patient deterioration.

- Readmission risk: By processing both tabular data and narrative notes, multimodal systems assess the likelihood of a patient being readmitted, thereby supporting targeted discharge planning.

Radiology workflows

Radiology is one of the most mature areas for multimodal AI, driven by advances in vision-language models that link text and images.

- Report drafting: Systems generate preliminary radiology reports by interpreting images and aligning them with reporting templates.

- Augmented review: Models highlight image regions corresponding to textual findings, reducing cognitive load on radiologists.

- Visual search and history review: Multimodal models allow clinicians to query images with text or crop regions of interest to find similar cases across a patient’s imaging history.

- Longitudinal analysis: AI systems summarize imaging changes across time, helping radiologists identify disease progression or treatment response.

Medical research

- Patient cohort identification: Researchers can query large multimodal datasets to find patients with similar profiles across different modalities.

- Support for statistical analysis: Combining structured and unstructured data improves the effectiveness of statistical modeling and clinical trials.

- Benchmarking with multimodal datasets: Public resources such as MIMIC, TCGA, and the Alzheimer’s Disease Neuroimaging Initiative (ADNI) provide researchers with diverse multimodal data for developing and validating AI systems.

- Personalized treatment strategies: Integrating genomic data with imaging and clinical notes enables the development of more targeted treatment recommendations.

Real-life examples

Med-PaLM M

Med-PaLM M is a multimodal version of Google’s medical language model. It can process text, medical images, and genomic data with a single set of model weights.

On the MultiMedBench benchmark, which covers 14 biomedical tasks with over 1 million samples, Med-PaLM M matched or outperformed specialist models across all tasks. For example, it improved chest X-ray report generation scores by more than 8% (micro-F1) and achieved over 10% gains on visual question answering metrics.

In a blinded evaluation of 246 chest X-rays, clinicians preferred Med-PaLM M’s reports to radiologist-written reports in about 40% of cases. Its reports averaged 0.25 clinically significant errors per case, a rate similar to human radiologists.3

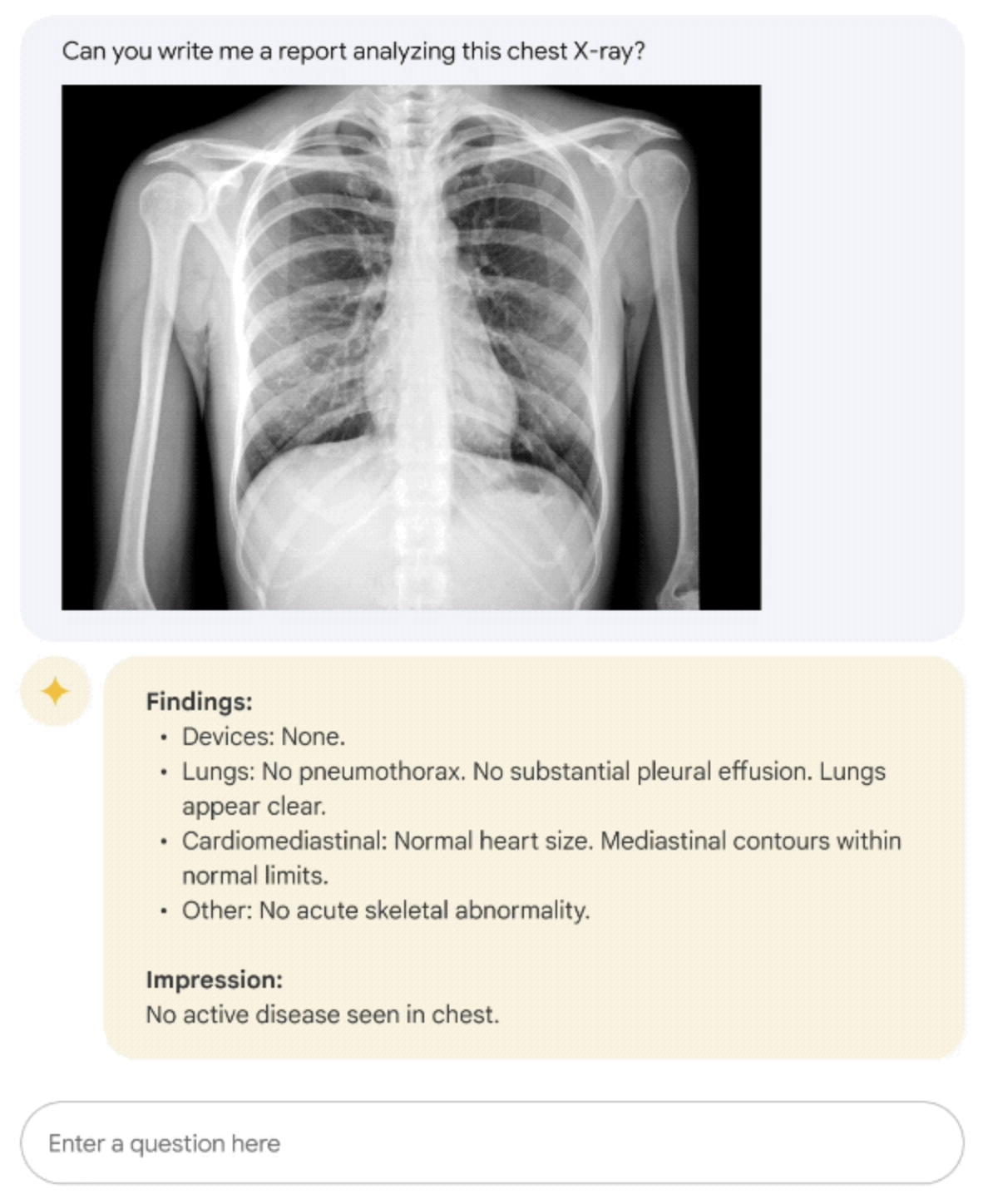

Figure 2: Med-PaLM M example on describing a chest X-Ray.4

Holistic AI in Medicine (HAIM)

The HAIM framework demonstrates the potential of multimodal AI in clinical settings. Separate models process clinical notes, imaging data, and time-series data, and their embeddings are fused into a single patient representation. This embedding supports downstream tasks such as disease classification, risk stratification, and mortality prediction.

Figure 3: The diagram showing the xHAIM pipeline outlines task definition, data summarization, focused prediction, and explainable insights for better data handling, interpretability, and knowledge integration.5

Benefits of multimodal AI in medical applications

- Improved diagnostic accuracy: Multimodal systems often outperform unimodal baselines. For example, the MDS-ED study developed a large-scale, open-source multimodal dataset from MIMIC-IV (including demographics, labs, vitals, and raw ECG waveforms) to benchmark AI models for predicting diagnoses and patient deterioration in the emergency department. Multimodal models, especially those incorporating raw ECG waveforms, achieved high predictive accuracy (AUROC >0.8) for over 600 diagnoses and 14 of 15 deterioration outcomes, demonstrating strong potential for AI-assisted decision support in emergency care.6

- More robust predictions: By combining structured data, clinical notes, imaging data, and biomedical data, models reduce errors associated with missing or noisy information from a single modality.

- Reusability and scalability: Unified multimodal embeddings can be applied across a range of tasks, from classification models for disease detection to survival analysis, without rebuilding models from scratch.

- Better patient outcomes: Enhanced diagnostic accuracy and more reliable prognostic predictions translate into earlier interventions and better clinical decision-making.

Challenges of multimodal AI in healthcare

- Data availability and quality: Real-world multimodal datasets are often incomplete. Missing imaging studies, limited genomic data, or inconsistent clinical notes can degrade model training.

- Generalizability issues: Many models are trained and validated on internal datasets only. This limits their applicability across different healthcare systems with varying data modalities.

- Handling incomplete data: Approaches such as deep generative models, recurrent neural networks, or transformers are needed to manage missing modalities effectively.

- Data leakage risks: If data splitting is not handled at the patient level, information can leak between training and test sets, leading to artificially inflated performance.

- Workflow integration: Healthcare providers require systems that integrate into existing workflows. Radiologists in particular demand that draft report generation systems operate with near-perfect accuracy and minimal latency.

- Regulatory and trust barriers: Adoption of multimodal medical AI requires evidence of safety, external validation, and regulatory approval, along with clinician confidence in the reliability of AI outputs.

💡Conclusion

Multimodal AI in healthcare enables the integration of diverse data sources into a more holistic view of the patient.

Rather than replacing clinical expertise, it complements decision-making with richer insights that single-modality systems cannot provide. Although issues with data, workflows, and trust remain, new advances are helping multimodal AI move from research into real-world healthcare. Ultimately, its success will depend on how it integrates into clinical care to deliver safer, more accurate, and more personalized outcomes.
