AI scientists mark a major advance toward fully automatic scientific discovery, aiming to perform the entire research process independently. Unlike traditional tools, these systems can expedite research by generating hypotheses, designing and executing experiments, interpreting results, and communicating findings.

By combining large language models, machine learning, and robotics, an AI scientist can iteratively refine its understanding through experimentation.

Discover the top 6 AI scientist tools and frameworks, and the scientific processes that would allow them to bridge computer science and the natural sciences.

Top 6 AI scientist companies/frameworks

| Tool / Framework | Description | Use cases | Category |
|---|---|---|---|
| Periodic Labs | Builds AI scientists that run autonomous labs for physics, chemistry, and materials science. | Materials discovery, semiconductor design, experimental automation. | AI-driven physical science platform |
| Claude for Life Sciences | Advanced language models with scientific tools to support end-to-end biomedical and life sciences research. | Literature analysis, bioinformatics, experimental design, regulatory documentation, and clinical compliance. | AI-augmented life sciences research system |
| Potato | Scientific OS enabling AI-driven research from hypothesis to experiment using AI agents and automation. | Drug resistance prediction, protein engineering, automated biology experiments. | Comprehensive AI research system |
| Lila Sciences | Creates AI Science Factories combining robotics and foundation models for life sciences and materials research. | Protein therapeutics, catalyst and material discovery, energy systems. | Scientific superintelligence platform |
| AstroAgents | Multi-agent AI for analyzing mass spectrometry data in astrobiology. | Detecting biotic patterns, hypothesis generation, literature integration. | Multi-agent data analysis system |
| The AI Scientist | End-to-end AI scientist framework automating hypothesis generation, experiments, and paper writing. | Full-cycle research automation, manuscript generation, system benchmarking. | Comprehensive AI scientist system |

Periodic Labs

Periodic Labs aims to develop systems that can conduct independent research, iteratively refine ideas, and contribute to the human scientific community by generating new knowledge. Their long-term goal is to enable fully automatic scientific discovery, where AI agents can propose hypotheses, design and run experiments, interpret results, and write scientific papers with minimal manual supervision.

The central concept is to merge artificial intelligence with real-world experimentation. Instead of relying solely on internet-scale text data, which is finite and already heavily used by frontier models, Periodic Labs focuses on creating autonomous laboratories that generate original, high-quality experimental data. These labs serve as environments where an AI scientist works, testing its ideas and learning directly from nature.

Research areas of Periodic Labs

Periodic Labs primarily focuses on the physical sciences as a starting point. This area was chosen because physical experiments offer high-quality signals, reliable modeling capabilities, and clear verification criteria. Their main research areas include:

- Materials science: Developing and discovering superconductors that operate at higher temperatures, which could improve transportation systems and reduce energy loss in power grids.

- Semiconductor research: Collaborating with industry partners to address challenges such as heat dissipation in chips by training AI agents to interpret and optimize experimental data.

- Physics and chemistry: Using AI research tools to design and synthesize new materials, automate hypothesis generation, and enhance simulation-based exploration.

These efforts aim to accelerate science by integrating AI scientists into domains where progress has traditionally required extensive manual experimentation. By doing so, Periodic Labs seeks to shorten the time between generating an idea and publishing the resulting research, whether at a machine learning conference or in a scientific journal.

Broader vision and ethical considerations

The broader ambition of Periodic Labs is to scale automatic scientific discovery across disciplines in computer science, natural sciences, and engineering. Their vision includes:

- Building systems that develop algorithms for autonomous reasoning and experiment design.

- Supporting research scientists in developing agents capable of addressing grand challenges in science.

- Creating comprehensive systems that integrate data collection, reasoning, and publication under one unified scientific framework.

They also emphasize the importance of ethical considerations in deploying autonomous AI systems, ensuring transparency, accountability, and collaboration with human scientists.

Claude for Life Sciences

Claude for Life Sciences is an initiative developed by Anthropic to accelerate research and innovation within the biomedical and life sciences sectors.

The platform integrates advanced artificial intelligence into the scientific process, supporting activities that range from hypothesis generation and experiment design to data analysis, regulatory compliance, and publication preparation.

Anthropic’s broader mission is to accelerate global scientific progress by developing AI systems that collaborate with human researchers and, over time, achieve a degree of autonomy in conducting scientific discovery.

Scientific vision and objectives

While earlier versions of Claude were primarily used for discrete tasks such as writing analytical code, summarizing academic literature, or preparing reports, the current framework enables comprehensive participation in the whole research lifecycle. This includes early-stage discovery, clinical translation, and eventual commercialization of scientific results.

Anthropic positions Claude as an intelligent research collaborator capable of interpreting scientific data, integrating information from multiple sources, and generating insights that contribute directly to experimental progress. The system is designed to assist laboratories, pharmaceutical organizations, and academic institutions by improving the efficiency, reproducibility, and quality of research outcomes.

Core capabilities and model performance

The Anthropic models demonstrate substantial gains in scientific reasoning, comprehension, and protocol interpretation, as evidenced by several benchmark results.

Integration with scientific and enterprise tools

Claude for Life Sciences includes an expanded suite of software connectors that enable direct interaction with scientific databases, data management systems, and collaborative research platforms.

These integrations allow researchers to query data, visualize results, and connect insights to verified experimental sources.

Major integrations include the following:

- Benchling: Provides access to laboratory notebooks, experimental data, and documentation systems.

- BioRender: Allows the creation of scientifically accurate figures, diagrams, and graphical abstracts.

- PubMed and Wiley’s Scholar Gateway: Offer access to millions of peer-reviewed biomedical publications for citation, summarization, and evidence synthesis.

- Synapse.org: Facilitates data sharing, version control, and collaboration among distributed research teams.

- 10x Genomics: Enables analysis of single-cell and spatial transcriptomics data through natural language interaction.

Agent skills and research automation

Anthropic has introduced Agent Skills, a framework that allows Claude to perform scientific tasks autonomously. Each skill is a structured package containing instructions, scripts, and resources that guide the model through specific research processes.

A prominent example is the single-cell-rna-qc skill, which performs quality control and filtering of single-cell RNA sequencing data following scverse best practices. Researchers can also design custom skills that define their laboratory’s procedures, enabling Claude to automate data processing, statistical analysis, and experimental validation steps.
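As a rough illustration of what a quality-control skill automates, the sketch below filters cells using a median-absolute-deviation (MAD) rule on log-transformed counts plus a cap on the mitochondrial read percentage. It is not the actual single-cell-rna-qc skill: the function names, dictionary keys, the 5-MAD cutoff, and the 20% mitochondrial cap are illustrative assumptions, and a real pipeline would typically work on an AnnData object rather than plain dictionaries.

```python
import math
from statistics import median

def mad_outliers(values, n_mads=5.0):
    """Flag values more than n_mads median absolute deviations from the median."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    return [abs(v - med) > n_mads * mad for v in values]

def filter_cells(cells, n_mads=5.0, max_pct_mito=20.0):
    """Return ids of cells that pass both QC checks.

    cells: list of dicts with the hypothetical keys
    'id', 'total_counts', and 'pct_mito'.
    """
    log_counts = [math.log1p(c["total_counts"]) for c in cells]
    count_outlier = mad_outliers(log_counts, n_mads)
    return [
        c["id"]
        for c, bad in zip(cells, count_outlier)
        if not bad and c["pct_mito"] <= max_pct_mito
    ]

# Toy data, invented for this example
cells = [
    {"id": "cell_a", "total_counts": 5200, "pct_mito": 3.1},
    {"id": "cell_b", "total_counts": 4800, "pct_mito": 4.0},
    {"id": "cell_c", "total_counts": 5100, "pct_mito": 35.0},  # high mitochondrial fraction
    {"id": "cell_d", "total_counts": 12, "pct_mito": 2.0},     # nearly empty droplet
    {"id": "cell_e", "total_counts": 5000, "pct_mito": 5.5},
]
kept = filter_cells(cells)
print(kept)
```

Packaging thresholds like these as named parameters is what lets a lab encode its own procedure in a custom skill instead of relying on defaults.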

Applications across the Life Sciences

Claude for Life Sciences supports a broad spectrum of use cases across research, clinical, and regulatory domains.

- Scientific research and hypothesis generation: Claude can conduct comprehensive literature reviews, identify relevant studies, synthesize findings, and generate testable hypotheses from existing data.

- Protocol creation and documentation: Through integration with Benchling, Claude assists in drafting study protocols, standard operating procedures, and informed consent forms that meet regulatory and ethical standards.

- Bioinformatics and computational analysis: Claude processes and interprets large datasets, including genomic and proteomic data, and produces results in formats suitable for technical reports, slide presentations, or code notebooks.

- Regulatory and clinical compliance: The model assists in drafting regulatory submissions, summarizing compliance requirements, and compiling supporting documentation for audits or reviews.

Anthropic also provides a prompt library tailored for scientific research, enabling users to achieve consistent, optimized outputs across a range of research applications.

Watch the video below to see how Claude conducts data analysis and literature review, derives insights, and synthesizes them into a presentation featuring a BioRender illustration.

Potato

Potato functions as a comprehensive system that supports the entire research process, from hypothesis generation to experiment execution and data interpretation. By integrating artificial intelligence, automation, and computational biology, Potato enables fully automatic scientific discovery across domains such as life sciences, computer science, and bioinformatics.

The platform enables AI agents to conduct research independently or in collaboration with researchers. These agents can search the literature, generate ideas, design workflows, analyze results, and prepare draft papers for review.

By reducing the need for manual supervision, Potato helps researchers develop and test novel research ideas faster, and supports progress toward agents that can make meaningful scientific discoveries autonomously.

The technology behind Potato

Potato operates as a Scientific Operating System (OS) for the life sciences. It connects to hundreds of tools that make scientific research more efficient and reproducible. Its infrastructure is designed to enable AI agents to iteratively develop ideas, plan experiments, and interpret results in an open-ended manner.

Key technological components include:

- Specialized environment for agents: Potato provides a dedicated research environment that equips AI agents with data, tools, and memory needed to perform research tasks such as literature search, hypothesis generation, and running experiments.

- Parallel runtime environment: The cloud-based system automatically scales compute and GPU resources, allowing the AI to execute thousands of experiments simultaneously. This parallelization supports complex modeling tasks in machine learning and natural language processing.

- Branching research timelines: Researchers can explore multiple experimental variations with a single click. This branching feature encourages automatic scientific discovery, enabling exploration of alternative hypotheses and methods.

- Inter-tool communication: Tools within Potato communicate directly with one another, enhancing efficiency and enabling longer, uninterrupted workflows.

At the core of the system is TATER (Technical AI for Theoretical & Experimental Research), a multi-agent AI co-scientist. TATER can plan and execute experiments, analyze data, and translate research intent into robotic scripts. It represents a comprehensive framework for automatic scientific discovery, combining foundation models, diffusion modeling, and neural networks to advance the state of AI research.

Use case 1: Predicting resistance in SARS-CoV-2 main protease

In one application, researchers used Potato to perform sequence analysis focused on predicting viral resistance mutations.

- Challenge: Understanding which single-nucleotide variants of SARS-CoV-2’s main protease may cause drug resistance is a slow and costly process that typically requires weeks of computational and laboratory work.

- Approach: Using a simple prompt, researchers asked TATER to compute evolutionary scores for all possible missense variants and identify those near inhibitor-binding sites.

- Outcome:

  - Generated over 2,000 possible variants and ranked them using evolutionary scoring models.

  - Mapped each variant to multiple crystal structures to determine its proximity to drug-binding pockets.

  - Delivered a prioritized list of mutations likely to alter inhibitor sensitivity.

Impact:

TATER condensed what would typically take a week of coding and analysis into a single interactive session. By combining structural data with evolutionary modeling, it guided drug developers toward high-priority mutations for further testing, thereby accelerating antiviral discovery.
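The variant enumeration step in this use case can be sketched in a few lines of Python. The example below lists every amino-acid substitution reachable by a single nucleotide change in a coding sequence, then keeps those close to a residue of interest. It is a toy under stated assumptions, not TATER's code: the sequence is invented, the function names are hypothetical, and `near_site` uses distance along the sequence as a crude stand-in for the 3D distances that would come from crystal structures. No evolutionary scoring is attempted here.

```python
from itertools import product

# Standard genetic code in compact form (bases ordered T, C, A, G)
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO)}

def missense_variants(cds):
    """Return sorted (position, ref, alt) substitutions reachable by one nucleotide change."""
    variants = set()
    usable = len(cds) - len(cds) % 3  # ignore a trailing partial codon
    for start in range(0, usable, 3):
        codon = cds[start:start + 3]
        ref = CODON_TABLE[codon]
        for offset, base in product(range(3), BASES):
            if base == codon[offset]:
                continue
            alt = CODON_TABLE[codon[:offset] + base + codon[offset + 1:]]
            if alt != ref and alt != "*":  # skip synonymous and nonsense changes
                variants.add((start // 3 + 1, ref, alt))
    return sorted(variants)

def near_site(variants, site_residues, window=1):
    """Keep variants within `window` residues of a listed site (sequence distance only)."""
    return [v for v in variants if any(abs(v[0] - s) <= window for s in site_residues)]

toy_cds = "ATGTGGGCT"  # Met-Trp-Ala, a made-up sequence, not the real protease
all_variants = missense_variants(toy_cds)
shortlist = near_site(all_variants, site_residues=[3], window=0)
labels = [f"{ref}{pos}{alt}" for pos, ref, alt in shortlist]
print(len(all_variants), labels)
```

Restricting to single-nucleotide changes is what keeps the candidate list tractable: each codon has only nine one-step neighbours, so the number of variants grows linearly with protein length.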

Use case 2: Engineering a brighter GFP

A second example highlights how Potato supports protein engineering.

- Challenge: Designing brighter GFP (Green Fluorescent Protein) variants typically requires manual literature review, mutation planning, and experimental setup, all of which are time-intensive.

- Approach: Researchers prompted TATER with a single request: “I want to make a brighter GFP.”

  - The AI conducted a literature search to identify mutations that enhance brightness.

  - Generated an optimized GFP scaffold and designed a library of functional variants.

  - Produced a complete experimental workflow for cloning, expression, and fluorescence screening.

Figure 1: TATER, Potato’s AI scientist, generates research plans and literature reviews.1

- Outcome:

  - Compiled a variant library including known and novel substitutions.

  - Defined detailed data normalization and analysis protocols.

  - Delivered ready-to-run lab protocols with documentation templates.

Impact:

TATER transformed a process that typically takes days or weeks into minutes. It provided a complete, reproducible workflow, from idea to experimental execution, illustrating how AI scientists can enable automatic scientific discovery and affordable creativity.

By integrating reasoning across literature and data, Potato advances scientific research, enabling AI agents and research scientists to collaborate and generate new knowledge with minimal friction.

Lila Sciences

Lila Sciences is a research company based in Cambridge, Massachusetts, that is developing a scientific superintelligence system. The company’s mission is to create a unified platform where AI scientists and human scientists collaborate within autonomous laboratories to accelerate scientific discovery across the life sciences, chemistry, and materials science.

Their goal is to develop an end-to-end infrastructure capable of managing the entire research process: from hypothesis generation and experiment design to data analysis and paper write-up. Lila refers to these environments as AI Science Factories (AISF): automated, physical labs where AI agents run thousands of experiments in parallel, analyze outcomes, and iteratively develop ideas with minimal manual supervision.

The technology of Lila Sciences

Lila Sciences combines large language models, neural networks, and robotic experimentation into what it calls a comprehensive system for automatic scientific discovery. The architecture of their AI Science Factories integrates reasoning, simulation, and experimentation into a unified feedback loop.

Key technological features include:

- AI-driven experiment loops: Lila’s foundation models use reinforcement learning and diffusion modeling to propose, execute, and evaluate experiments thousands of times faster than traditional approaches.

- Integration of reasoning and verification: The system combines computational predictions with real-world validation, allowing agentic AI procedures to refine their understanding of physical and biological systems through direct experimentation.

- Cross-domain capability: By connecting life sciences, materials science, and chemistry, Lila’s frontier models eliminate the barriers that typically separate disciplines, enabling the AI to generate ideas and discover correlations across scientific fields.

- Data-driven learning: Each experiment produces digital records that are fed back into the system to improve future predictions. This loop reduces the need for extensive manual supervision, allowing the AI to improve continually over time.

This represents a first comprehensive framework for developing algorithms that not only predict outcomes but also validate them experimentally. Lila envisions its AI scientist as a partner capable of writing code, controlling lab hardware, and interpreting results, essentially turning the scientific method into a scalable computational process.

Lila Sciences research areas

Lila focuses on several major scientific research domains where AI research and automation can accelerate discovery:

- Life sciences: Lila’s agents design and validate novel protein therapeutics, gene editors, and diagnostic tools. In demonstration projects, the AI has already produced antibodies for disease treatment and identified potential new drugs in significantly shorter timeframes than traditional methods.

- Chemistry and materials: The company applies AI-driven hypothesis generation to create new catalysts for green hydrogen production, as well as advanced materials for carbon capture, energy storage, and manufacturing.

- Computing and energy: Lila’s models explore new materials for computational hardware and sustainable energy systems by linking simulation-based reasoning with physical experimentation.

In one example, an AI agent developed a new catalyst for hydrogen production in four months, a process that typically takes years for research scientists. This efficiency illustrates how AI scientists can handle challenging problems and extend the scientific process to fields requiring complex problem-solving.

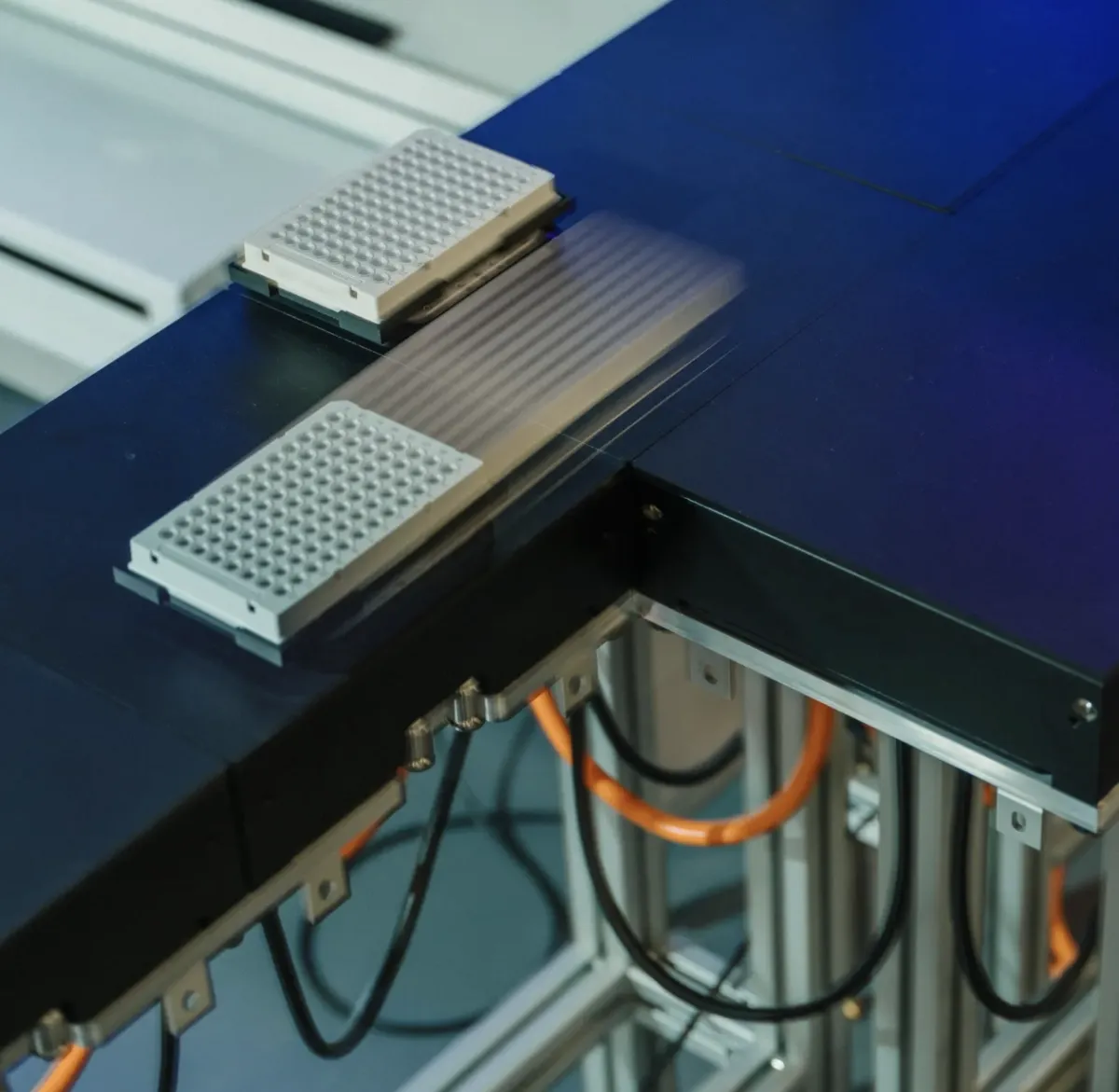

Figure 2: Image showing trays filled with protein solutions being transported across the laboratory on a magnetic platform.2

The concept of scientific superintelligence

The company’s founding principle is that science itself can be scaled in the same way that machine learning has scaled intelligence. Just as larger AI models have unlocked emergent abilities, scaling up experimentation and scientific reasoning can unlock discoveries.

Lila describes its mission as enabling an intelligence revolution for science:

- AI agents are developed to conduct scientific research autonomously.

- Each AI Science Factory functions as a “body” for a superintelligent scientific mind, where the AI continuously tests and learns from the natural world.

- The combination of automation, foundation models, and computational reasoning allows for fully automatic scientific discovery across multiple domains.

Ethical considerations and scientific implications

As Lila advances toward scientific superintelligence, the company acknowledges the need for ethical considerations surrounding autonomy, data integrity, and the role of human oversight. The system’s capacity to generate ideas, design experiments, and produce research papers raises questions about authorship, accountability, and the integration of AI researchers within the broader human scientific community.

Lila emphasizes that AI research should enhance, not replace, human scientists. Its vision is to create a partnership where AI scientists amplify human creativity and help address grand challenges in health, energy, and sustainability.

AstroAgents

AstroAgents is a multi-agent AI system designed to assist scientists in generating hypotheses from mass spectrometry data, particularly in the field of astrobiology. Developed by researchers from the Georgia Institute of Technology and NASA Goddard Space Flight Center, the project introduces a comprehensive framework for automatic scientific discovery.

By integrating artificial intelligence with analytical chemistry and astrobiology, AstroAgents reduces the dependence on extensive manual supervision, supporting human scientists in conducting scientific research and producing generated papers that advance understanding in computer science and astrobiology.

Figure 3: An example literature review prompt and AstroAgents’ output.3

The system consists of eight specialized agents working collaboratively. They fill the following roles, with more than one agent in the scientist role:

- Data analyst: Interprets mass spectrometry data, identifies patterns, highlights anomalies, and detects contamination.

- Planner: Divides the data into focused tasks for multiple scientist agents to analyze.

- Scientist agents: Generate hypotheses related to assigned molecular patterns, supported by specific data points.

- Accumulator: Consolidates hypotheses, eliminates redundancy, and prepares a unified hypothesis list.

- Literature review agent: Searches Semantic Scholar for related studies and summarizes key findings.

- Critic: Evaluates hypotheses for novelty, plausibility, and scientific rigor, offering feedback for the next iteration.

Figure 4: The figure shows AstroAgents as a multi-agent system that collaboratively analyzes mass spectrometry data, generates and refines molecular distribution hypotheses, integrates literature reviews, and iteratively improves results through agent-based feedback and critique.
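The hand-off between these roles can be sketched as a plain function pipeline. This is only a structural illustration, not the AstroAgents implementation: every function is a hypothetical stub with no language-model or Semantic Scholar calls, the sample data is invented, and the number of scientist agents is a parameter chosen for the example. The sketch runs a single round, whereas the real system feeds the critic's feedback into further iterations.

```python
def data_analyst(samples):
    """Summarize which sample groups each compound appears in."""
    summary = {}
    for s in samples:
        for compound in s["compounds"]:
            summary.setdefault(compound, set()).add(s["origin"])
    return summary

def planner(summary, n_scientists):
    """Split compounds into one focused task per scientist agent."""
    compounds = sorted(summary)
    return [compounds[i::n_scientists] for i in range(n_scientists)]

def scientist(task, summary):
    """Propose a hypothesis for each compound seen in only one sample group."""
    return [
        f"{c} is specific to {next(iter(summary[c]))} samples"
        for c in task
        if len(summary[c]) == 1
    ]

def accumulator(hypothesis_lists):
    """Merge hypotheses and drop duplicates, keeping first-seen order."""
    return list(dict.fromkeys(h for hs in hypothesis_lists for h in hs))

def literature_review(hypotheses):
    """Placeholder: a real agent would search the literature here."""
    return {h: "no literature lookup in this sketch" for h in hypotheses}

def critic(hypotheses, notes):
    """Placeholder critique: attach the literature note as feedback."""
    return [{"hypothesis": h, "feedback": notes[h]} for h in hypotheses]

def run_round(samples, n_scientists=3):
    summary = data_analyst(samples)
    tasks = planner(summary, n_scientists)
    merged = accumulator([scientist(t, summary) for t in tasks])
    return critic(merged, literature_review(merged))

# Invented toy input: two sample groups with overlapping compounds
samples = [
    {"origin": "meteorite", "compounds": ["compound_x", "compound_y"]},
    {"origin": "soil", "compounds": ["compound_y", "compound_z"]},
]
report = run_round(samples)
hypotheses = [r["hypothesis"] for r in report]
print(hypotheses)
```

Keeping each role behind its own function boundary mirrors the design idea in the text: the planner narrows what each scientist sees, and the accumulator and critic act on the combined output rather than on any single agent's view.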

Experimental setup and evaluation of AstroAgents

The study utilized mass spectrometry data from eight meteorites and ten terrestrial soil samples, which were analyzed using GC×GC-HRTOF-MS. The goal was to discover molecular patterns that could indicate biotic or abiotic origins of organic compounds.

Two versions of AstroAgents were tested, each built on a different language model:

- Claude 3.5 Sonnet, which emphasized agent collaboration.

- Gemini 2.0 Flash, which used a large context window (up to 1 million tokens) to integrate more background literature.

An astrobiology expert evaluated over 100 hypotheses produced by these models using six criteria: novelty, consistency with literature, clarity, empirical support, generalizability, and predictive power.

The results showed that Claude 3.5 Sonnet achieved higher overall consistency and precision (average 6.58/10), while Gemini 2.0 Flash generated more novel research ideas (average novelty score of 4.26).

Key findings

AstroAgents demonstrated that multi-agent collaboration enhances scientific discovery compared with single-model reasoning. The system’s ability to analyze experimental data and integrate it with literature enables automatic scientific discovery that could extend to other fields such as chemistry, biology, and materials science.

The AI Scientist

The AI Scientist is a comprehensive system designed to automate the entire research process. Its primary goal is to enable fully automatic scientific discovery, supporting the vision of an AI scientist that can perform research independently and contribute new knowledge to the human scientific community.

The AI Scientist-v1

Version 1 (v1) is the initial prototype demonstrating that a large language model can autonomously handle each step of the scientific process. It includes modules for:

- Idea generation and literature search using scientific databases.

- Experiment design and execution through coding and simulation.

- Result analysis and automatic paper writing in LaTeX format.

However, v1 was heavily constrained in scope. It focused on proof-of-concept experiments, often in simplified domains of computer science or machine learning. The system required manual oversight to ensure logical consistency, code correctness, and data validity.

The AI Scientist-v2

Version 2 (v2) is a significant upgrade that expands the framework into a first comprehensive system for automatic scientific discovery enabled by foundation models. It improves every stage of the research process:

- Enhanced literature integration through sources like Semantic Scholar.

- Improved hypothesis generation using iterative reasoning and idea refinement.

- Advanced experiment automation with minimal human intervention.

- Generation of full manuscripts ready for submission to a top machine learning conference.

V2 reduces the need for extensive manual supervision, integrates feedback loops similar to those used by human scientists in their iterative development of ideas, and introduces an automated reviewer that assesses generated papers for originality and scientific validity.

Figure 5: AI Scientist-v2 workflow, which automates idea generation, experimentation, visualization, writing, and review through an agentic tree search managed by an Experiment Progress Manager. This approach eliminates human-coded templates and iteratively refines code and hypotheses using top-performing checkpoints.4
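The idea of refining candidates from top-performing checkpoints can be illustrated with a generic best-first tree search. The sketch below is not the AI Scientist-v2 code and does not model its Experiment Progress Manager: `tree_search`, `propose_children`, and `evaluate` are hypothetical names, and the "candidate" is a single number standing in for a piece of experiment code.

```python
import heapq

def tree_search(root, propose_children, evaluate, budget=20):
    """Best-first search: always expand the highest-scoring unexpanded node."""
    best, best_score = root, evaluate(root)
    frontier = [(-best_score, 0, root)]  # max-heap via negated scores
    counter = 1                          # tie-breaker so candidates are never compared
    for _ in range(budget):
        if not frontier:
            break
        _, _, node = heapq.heappop(frontier)
        for child in propose_children(node):
            score = evaluate(child)
            if score > best_score:
                best, best_score = child, score
            heapq.heappush(frontier, (-score, counter, child))
            counter += 1
    return best, best_score

# Toy stand-ins: a candidate is a learning rate, and the score peaks at 0.01
def evaluate(lr):
    return -abs(lr - 0.01)

def propose_children(lr):
    return [lr * 0.5, lr * 2.0]

best, score = tree_search(1.0, propose_children, evaluate, budget=20)
print(best, score)
```

In a real system the `propose_children` step would be a model call that edits code or hypotheses, and `evaluate` would run the experiment. The search structure is what lets promising branches receive more of a fixed compute budget.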

Human evaluation of manuscripts generated by v2

Evaluation setup

- A group of experienced research scientists and senior editors reviewed a set of generated papers.

- Each paper was scored based on clarity, novelty, scientific soundness, and potential contribution.

- The reviewers did not know whether AI or humans had generated the manuscripts.

Findings

- Approximately 30–40% of the AI-generated papers met or approached the acceptance threshold typically seen at a major machine learning conference.

- Reviewers often found the writing coherent and well-structured, comparable to papers authored by human scientists.

- However, some manuscripts lacked deep insight or rigorous experimental validation, indicating that while AI can generate plausible research papers, it still struggles with conceptual depth and critical interpretation.

Conclusions from the evaluation

- The AI Scientist-v2 demonstrates that foundation models can make meaningful contributions to scientific research, generating ideas and complete manuscripts.

- It marks progress toward automatic scientific discovery, but human oversight remains essential for verifying results and ensuring ethical considerations.

Key features of AI scientist systems

AI scientist systems integrate multiple components to emulate the reasoning and experimentation cycle followed by human scientists. These systems combine foundation models, autonomous lab control, and scientific reasoning to enable automatic scientific discovery.

1. AI-driven hypotheses and ideation

AI scientists use large language models and multi-agent reasoning to generate testable hypotheses. Through techniques such as debate, planning agents, and literature search across databases like Semantic Scholar, these systems identify potential research directions that might go unnoticed by humans.

2. Experimental design and planning

Once a hypothesis is proposed, the AI designs suitable experiments or simulations to test it. This includes selecting variables, controls, and evaluation criteria while balancing cost, time, and the gain in information. Some systems integrate specialized modules for running experiments and optimizing scientific processes.
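One simple way to make the trade-off between cost and information gain concrete is to score each candidate experiment by its expected reduction in uncertainty per unit cost. The sketch below does this for a single yes/no hypothesis. It is an illustration under stated assumptions, not a method attributed to any of the systems above: the experiment names, sensitivities, specificities, and costs are invented.

```python
import math

def entropy(p):
    """Shannon entropy in bits of a yes/no belief held with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))

def expected_information_gain(prior, sensitivity, specificity):
    """Expected entropy reduction about the hypothesis from one experiment."""
    p_pos = prior * sensitivity + (1 - prior) * (1 - specificity)
    p_neg = 1 - p_pos
    post_pos = prior * sensitivity / p_pos if p_pos else 0.0
    post_neg = prior * (1 - sensitivity) / p_neg if p_neg else 0.0
    expected_posterior = p_pos * entropy(post_pos) + p_neg * entropy(post_neg)
    return entropy(prior) - expected_posterior

def pick_experiment(prior, experiments):
    """Choose the experiment with the best information gain per unit cost."""
    def value(e):
        gain = expected_information_gain(prior, e["sensitivity"], e["specificity"])
        return gain / e["cost"]
    return max(experiments, key=value)

# Hypothetical candidates with made-up characteristics
experiments = [
    {"name": "quick_assay", "sensitivity": 0.70, "specificity": 0.70, "cost": 1.0},
    {"name": "full_screen", "sensitivity": 0.95, "specificity": 0.95, "cost": 10.0},
    {"name": "uninformative", "sensitivity": 0.50, "specificity": 0.50, "cost": 0.5},
]
chosen = pick_experiment(prior=0.5, experiments=experiments)
print(chosen["name"])
```

Under these numbers the cheaper assay wins even though the full screen is more informative per run, which is the kind of balance between cost and information the paragraph above describes.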

3. Autonomous or robotic laboratories

An AI scientist works within automated or semi-automated laboratory environments equipped with robotic systems. These allow experiments to proceed with minimal manual supervision, ensuring continuous operation and high-quality data collection. Even negative results, often neglected in the human scientific community, are stored and used for iterative improvement.

4. Integration of AI and feedback loops

A defining feature of such systems is the integration of AI with lab feedback loops. The outcomes of experiments refine the AI’s internal models, enabling it to generate more accurate hypotheses in the next cycle. This self-correcting process mirrors how research scientists refine their approaches based on prior results.
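A minimal way to picture this loop is a belief that is updated after every batch of experiments. The sketch below uses a Beta-Bernoulli update, where each success or failure shifts the estimated success rate of a hypothesis. The simulated experiment, the batch size, and the function names are assumptions made for illustration. Real systems maintain far richer internal models than a single probability.

```python
def update_belief(alpha, beta, outcomes):
    """Beta-Bernoulli update: successes add to alpha, failures add to beta."""
    for success in outcomes:
        if success:
            alpha += 1
        else:
            beta += 1
    return alpha, beta

def closed_loop(run_experiment, cycles, batch_size=4):
    """Run batches of experiments and track the estimated success rate."""
    alpha, beta = 1, 1  # uniform prior: no opinion before any data
    history = []
    for cycle in range(cycles):
        outcomes = [run_experiment(cycle, i) for i in range(batch_size)]
        alpha, beta = update_belief(alpha, beta, outcomes)
        history.append(alpha / (alpha + beta))  # posterior mean after this cycle
    return history

def simulated_experiment(cycle, i):
    """Deterministic stand-in for a lab run that succeeds three times out of four."""
    return (cycle + i) % 4 != 0

history = closed_loop(simulated_experiment, cycles=3)
print(history)
```

Failures move the estimate just as much as successes do, which is why storing negative results matters for the next cycle.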

5. Data analysis and interpretation

AI systems clean, structure, and interpret raw data to detect correlations, anomalies, and causal patterns. By integrating neural networks, diffusion modeling, and statistical analysis, these systems can efficiently evaluate hypotheses and update their reasoning models in real-time.

6. Communication and dissemination

Some advanced frameworks include modules that generate full papers or technical summaries. These outputs can resemble submissions to scientific conferences, complete with structured reasoning, results, and references.

7. Cross-domain adaptability

A core aspiration of AI research in this area is the generalization of findings across various scientific domains. An ideal AI scientist should be able to transfer knowledge from one field, such as materials discovery, to others like biology or energy systems, without requiring retraining. This adaptability distinguishes AI scientists from task-specific machine learning models.

Limitations and current challenges

While the vision of an autonomous AI scientist is compelling, current systems face several practical and conceptual challenges that prevent the full realization of automatic scientific discovery.

Limited domain scope

Most implementations operate in narrow, well-defined scientific areas such as protein folding or material synthesis. The capacity to generalize across open-ended domains of science remains limited.

Complexity of physical execution

The transition from computational design to real-world experimentation introduces difficulties involving robotics, chemical safety, and instrumentation. Many systems can simulate or plan experiments, but still depend on human scientists for physical execution.

Trust and interpretability

For an AI scientist to contribute meaningfully to scientific research, the reasoning behind its work must be transparent and interpretable. Current models often behave as black boxes, making it difficult for researchers to assess the soundness of conclusions or underlying assumptions.

Resource constraints

Running experiments consumes time, materials, and energy. AI systems must optimize for cost-efficiency and information gain while managing limited laboratory throughput.

Risk of degenerate optimization

Without well-defined exploration strategies, AI agents may repeat trivial hypotheses or converge on local optima.
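One well-known exploration strategy that guards against this is the UCB1 bandit rule, which adds a bonus to options that have been tried less often. The sketch below applies it to a set of competing hypotheses. It is offered as an example of an exploration strategy, not as what any system in this article uses. The payoffs are invented and deterministic so the run is reproducible, whereas real experimental outcomes would be noisy.

```python
import math

def ucb1_choice(counts, rewards, c=2.0):
    """Pick the index with the highest mean reward plus exploration bonus."""
    for i, n in enumerate(counts):
        if n == 0:
            return i  # try every hypothesis at least once
    total = sum(counts)
    scores = [
        rewards[i] / counts[i] + math.sqrt(c * math.log(total) / counts[i])
        for i in range(len(counts))
    ]
    return max(range(len(counts)), key=scores.__getitem__)

def explore(payoffs, rounds):
    """Allocate a fixed number of experiments across hypotheses with UCB1."""
    counts = [0] * len(payoffs)
    rewards = [0.0] * len(payoffs)
    for _ in range(rounds):
        i = ucb1_choice(counts, rewards)
        counts[i] += 1
        rewards[i] += payoffs[i]  # deterministic stand-in for a noisy outcome
    return counts

# Hypothetical payoffs for three competing hypotheses
counts = explore(payoffs=[0.2, 0.5, 0.8], rounds=500)
print(counts)
```

The bonus term shrinks as a hypothesis accumulates trials, so weaker options are still revisited occasionally while most of the budget flows to the strongest one.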

Scientific validation and publishing

Even if an AI system generates plausible results or papers, these must undergo peer review and independent replication before being accepted by the human scientific community. Ensuring reproducibility remains essential.

Adaptability and generalization

Current systems often require retraining for each new domain. Developing comprehensive frameworks that generalize scientific reasoning across topics remains a grand challenge for AI researchers.
