Proxy testing now extends beyond connectivity checks. As AI agents become more prevalent, it is essential to verify that tools support the Model Context Protocol (MCP) and implement fingerprinting management. These methods are key to modern anti-bot systems for detecting and blocking automated traffic.

See the most common proxy testing methods for easy proxy testing:

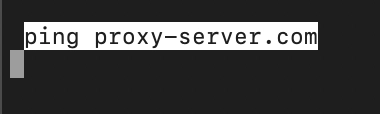

Method | Checks | Advantages | Limitations |

|---|---|---|---|

Free IP checker | IP address Location Internet service provider | Easy to use No installation required | No historical data Limited to basic checks |

Paid IP checker | Speed Anonymity level IP address Location Status | Customization options (e.g., bulk testing) Advanced analysis (e.g., speed, logs) | Requires installation and setup |

AI testing agents | Behavioral survival TLS/QUIC Fingerprinting | AI-driven firewalls | Requires agent orchestration |

IP database | Proxy type Geolocation Anonymity level Proxy availablity ISP | Detailed information about the IP location | Subscription fees |

Manual testing methods | Connectivity Functionality Authentication Speed Anonymity | Customization | Requires technical knowledge |

1. Online proxy checkers

An online IP checker (online proxy checker) is the most common method to test proxies, helping users to confirm whether their proxy setup is working properly. Many IP checkers require no technical expertise, you can simply visit a webpage, and the tool automatically displays the IP address being used with additional details.

Proxy testing tools, both free and paid, automatically identify the proxy IP address and offer details to confirm whether the proxy is effectively hiding the real IP address. Both free and paid proxy testing tools automatically detect proxy IP addresses and provide information about to determine whether the proxy is correctly disguising the real IP address. Here’s how they break down:

- Free IP checkers (web-based IP checkers) provide basic features for viewing your IP address and location, including the proxy’s location and connection type. Free services typically focus on whether the proxy is currently active and functional, rather than tracking historical data.

- Paid IP checkers (local installation) provide more thorough testing options, such as proxy speed, proxy server’s anonymity level, IP stability and proxy server’s status.

2. AI-Powered autonomous testing agents

Headless testing is no longer sufficient for maintaining strong anonymity. Modern anti-bot tools such as Cloudflare and Akamai can easily detect headless activity.

AI testing agents employ advanced methods to identify the true IP address behind a proxy and assess the safety of proxy traffic. Relying solely on the anonymity level is no longer adequate. AI-based checkers like Helicone and Langfuse also analyze behavioral fingerprinting.

3. Manual testing methods

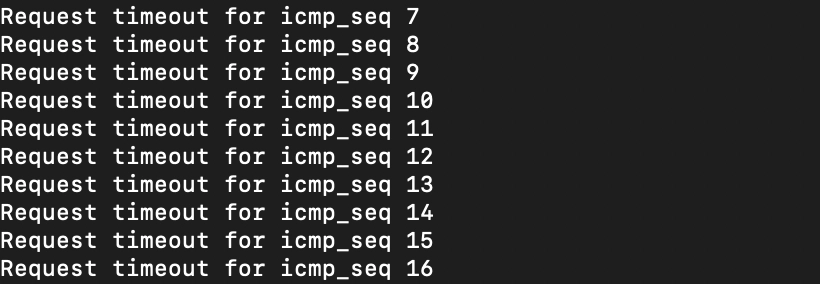

Using ping to test proxy connection speed: You can use ping to check if the proxy server is accessible and test proxy speed. Ping doesn’t provide information about the the functionality of a proxy server, check if the proxy is online and accepting incoming traffic.

- Open a terminal on your machine and type the command followed by the proxy server’s hostname or IP address. If the proxy service you are using is not online you may get a response like “Request Timed Out.”

Expected output:

Modern web servers use TLS fingerprinting to identify clients in addition to the User-Agent header. Websites match these fingerprints against databases of known browsers, making traditional curl testing unreliable. If your TLS handshake appears as “curl” but your header claims “Chrome,” you will likely be blocked.

You can leverage Zen-AI-Pentest, an open-source tool for stress-testing proxies. It uses AI agents to conduct real-world tests, ensuring your proxies can withstand fingerprint-based blocking and behavioral analysis.

4. IP databases

IP databases or geolocation APIs provide specific information about an IP address, such as its geolocation (country, city, region), ISP (Internet Service Provider), and proxy type whether the IP is associated with a data center or a residential proxy server. You can use an IP database service to obtain detailed geolocation data and determine whether an IP address belongs to a proxy or a data center. Here are some IP databases and geolocation services that you may use to check proxies:

- MaxMind GeoIP2 has both free and paid versions. GeoLite2 is free and GeoIP2 is for premium users.

- IP-api: The free API supports up to 45 requests per minute.

- Proxy-Check.io: Offers both free and premium services with varying levels of API access.

FAQs about proxy checkers

The frequency of checking your proxies depends on several factors. For example, when you make any changes to your proxy settings, you should check your proxy server to ensure the proxy is working properly.

You can verify proxy authentication with command-line tools such as curl or Postman. These techniques enable you to test how your proxy server handles authentication. Postman is a GUI-based utility for sending HTTP requests. However, if you are dealing with a large number of proxies or need to automate proxy authentication tests, you can test proxy authentication using scripts.

A proxy tester or proxy management software allows you to test multiple proxies at the same time, providing information on proxy usage, proxy status, and anonymity level.

Be the first to comment

Your email address will not be published. All fields are required.