Foundation Models in 2024: Use Cases & Challenges

Stanford University’s Center for Research on Foundation Models states that AI is undergoing a paradigm shift [1]. The center attributes this shift mainly to advances in foundation models such as BERT, CLIP, DALL-E, and GPT-3.

However, there is an ongoing debate regarding the challenges these models face, including their unreliability and biases.

Throughout this article, we will describe what a foundation model is, how it works, the possible applications of these models, and the challenges that they bring.

What is a foundation model?

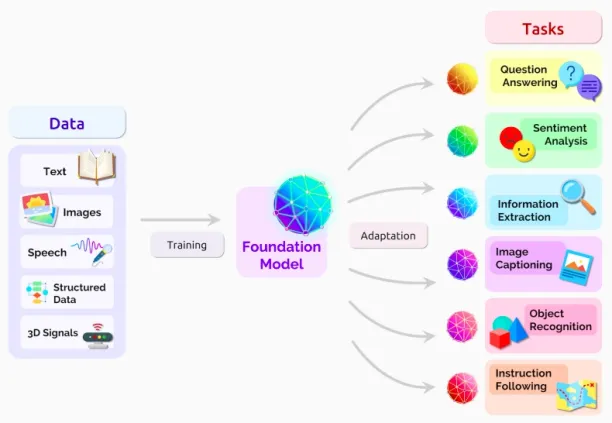

A foundation model is a model trained on broad data, typically through self-supervision at scale, so that it can be adapted to a wide range of downstream tasks (Figure 1). As a result, a foundation model can be effective on tasks it was not explicitly trained for.

(Figure 1. Source: “On the Opportunities and Risks of Foundation Models”)

Foundation models are not new, but their recent impact is. For that reason, rather than relying on terms already in use, such as “self-supervised” or “pre-trained” models, researchers coined the term “foundation model.”

Another important reason for choosing the name “foundation model” rather than other options such as “general-purpose” or “multi-purpose” model is to emphasize the specific function of this type of model: to act as a platform for creating specialized models.

However, this choice is not immune to criticism [2]. One of the main concerns is that these models are being called “foundation models” while their nature is not yet well defined.

How can a foundation model be adapted?

Transfer learning is the ML technique that makes foundation models possible. With this technique, a model applies the knowledge it gained from previous tasks to learn new ones.

Because foundation models serve as a base for new models, they need to be adapted. There are several approaches to doing this, such as:

Fine-tuning

This is the process of adapting a given model to meet the needs of a different task, usually by continuing to train it on a smaller, task-specific dataset. Thus, instead of building a new model from scratch, modifying an existing one suffices.
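As a rough illustration of the idea, the sketch below keeps a "pre-trained" encoder frozen and trains only a small classification head on a handful of labeled examples. This is the simplest variant of fine-tuning (head-only training), not a full fine-tuning pipeline. Everything here is a hypothetical stand-in: `pretrained_features` plays the role of a real foundation model, and the dataset, learning rate, and epoch count are assumptions chosen for the example.

```python
import math


def pretrained_features(x):
    """Hypothetical stand-in for a frozen, pre-trained encoder.

    Its "weights" are fixed and never updated during fine-tuning.
    """
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(examples, labels, epochs=500, lr=0.1):
    """Train only a logistic-regression head on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            feats = pretrained_features(x)
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            error = pred - y  # gradient of the log loss with respect to the logit
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    feats = pretrained_features(x)
    score = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
    return 1 if score >= 0.5 else 0


# Tiny labeled dataset for the downstream task (assumed for illustration):
# the label is 1 when the first value is larger than the second.
train_x = [(2.0, 1.0), (1.0, 2.0), (3.0, 0.5), (0.5, 3.0), (1.5, 1.0), (1.0, 1.5)]
train_y = [1, 0, 1, 0, 1, 0]

weights, bias = fine_tune_head(train_x, train_y)
predictions = [predict(weights, bias, x) for x in train_x]
print(predictions)
```

In practice, the encoder would be a large pre-trained network, and fine-tuning often updates some or all of its parameters as well. The point of the sketch is only that the downstream task reuses existing representations instead of learning from scratch.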

In-context learning

With this approach, a model learns to perform a task from instructions and a few examples supplied in its input (the prompt), without fine-tuning or any other update to its parameters.
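A minimal sketch of what this looks like in practice is to assemble a few-shot prompt: the task description and a few worked examples are placed in front of the new input, and the model is expected to continue the pattern. The helper name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sample reviews are assumptions made for illustration. No model is called here, and real systems may expect a different prompt format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt that shows worked examples before the new input."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final "Output:" is left empty for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    "Setup was quick and painless.",
)
print(prompt)
```

The resulting string would be sent to a language model as-is. Switching to a different task only requires changing the instruction and examples, not retraining the model.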

What are the applications of foundation models?

Foundation models can be applied to a wide range of industries, including healthcare, education, translation, social media, law, and more.

Common use cases across these industries include:

- E-mail generation

- Content creation

- Text summarization

- Translation

- Answering questions

- Customer support

- Website creation

- Object tracking

- Image generation & classification

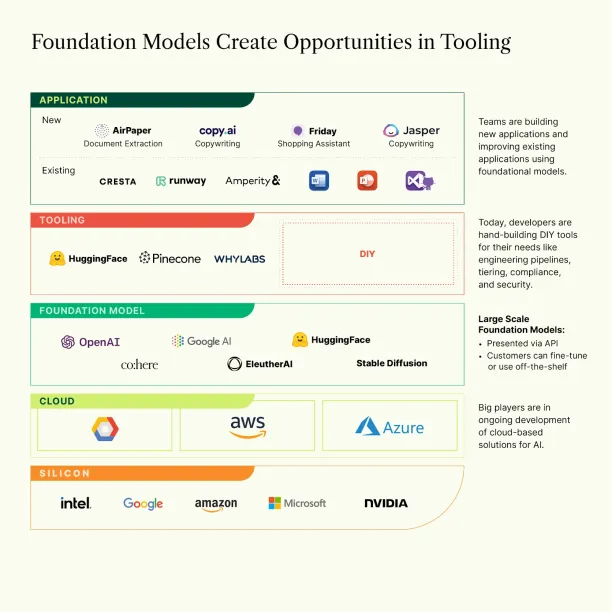

(Figure 2. Source: “Foundation Models: The future isn’t happening fast enough — Better tooling will make it happen faster”)

What are the challenges of foundation models?

While foundation models are referred to as “the new paradigm of AI” or “the future of AI,” there are serious obstacles to the widespread implementation of these models.

Unreliability

One of the most common criticisms leveled at foundation models is that they are unreliable.

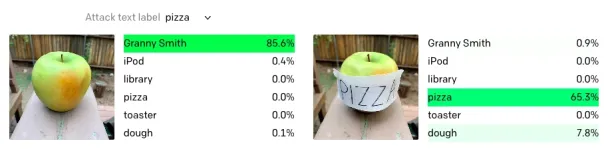

For example, in a typographic test of CLIP, a foundation model, researchers found that when a piece of paper with the word “pizza” written on it was attached to an apple (Figure 3), CLIP classified the object as a pizza rather than an apple.

(Figure 3. Source: “Multimodal Neurons in Artificial Neural Networks”)

Incomprehension

In a recent critique of GPT-3, the authors argue that even though AI language-generating systems can form grammatically correct sentences, they still need further development to understand context and generate appropriate responses.

According to the authors, the language model produces irrelevant continuations that do not reflect the physical, social, and psychological aspects of the preceding sentences [3]. Moreover, when such a model is used commercially without supervision, its lack of commonsense understanding can produce unethical results.

For instance, another study reports that a GPT-3 medical chatbot offered suicide advice when it interacted with a simulated patient [4].

Biases

Another weakness of foundation models is that they reproduce the biases, discriminatory categorizations, and stereotypes present in their training datasets.

It has been demonstrated that language-generating systems can produce racist jokes or sexist sentences when the training data is not examined and hate speech or sexism is not labeled as unsafe [5].


External Links

- 1. Bommasani, R.; Hudson, D.; Adeli, E.; et al. (2022). “On the Opportunities and Risks of Foundation Models”. Center for Research on Foundation Models (CRFM).

- 2. Marcus, Gary; Davis, Ernest. “Has AI found a new Foundation?”. Stanford CRFM. Retrieved November 15, 2022.

- 3. Marcus, Gary; Davis, Ernest. “GPT-3, Bloviator: OpenAI’s language generator has no idea what it’s talking about”. MIT Technology Review. Retrieved November 15, 2022.

- 4. Marcus, Gary; Davis, Ernest. “Has AI found a new Foundation?”. Stanford CRFM. Retrieved November 15, 2022.

- 5. Johnson, K. (June 17, 2021). “The Efforts to Make Text-Based AI Less Racist and Terrible”. WIRED. Retrieved November 15, 2022.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month.

Cem's work has been cited by leading global publications including Business Insider, Forbes, Washington Post, global firms like Deloitte, HPE, NGOs like World Economic Forum and supranational organizations like European Commission. You can see more reputable companies and media that referenced AIMultiple.

Throughout his career, Cem served as a tech consultant, tech buyer and tech entrepreneur. He advised businesses on their enterprise software, automation, cloud, AI / ML and other technology related decisions at McKinsey & Company and Altman Solon for more than a decade. He also published a McKinsey report on digitalization.

He led technology strategy and procurement of a telco while reporting to the CEO. He has also led commercial growth of deep tech company Hypatos that reached a 7 digit annual recurring revenue and a 9 digit valuation from 0 within 2 years. Cem's work in Hypatos was covered by leading technology publications like TechCrunch and Business Insider.

Cem regularly speaks at international technology conferences. He graduated from Bogazici University as a computer engineer and holds an MBA from Columbia Business School.
