10+ Epic Chatbot/Conversational Bot / LLM Failures ('24 Update)

Though chatbots are currently in fashion, good ones are notoriously hard to create, given the complexities of natural language that we have explained in detail before. So it is only natural that even companies like Facebook have pulled the plug on some of their bots.

Many chatbots are failing miserably to connect with their users or to perform simple actions. And people are having a blast sharing screenshots of bots’ ineptitude.

Chances are that chatbots will eventually be better than us at conducting conversations, since human language abilities remain fixed while AI keeps advancing rapidly. But for the time being, let us rejoice in their failures.

Customer service bots losing their owners’ money

Air Canada

Air Canada’s chatbot made up a non-existent refund policy, which the airline has to honor according to a recent ruling. Air Canada’s bot appeared to be disabled after this event, but we expect it to come back online once more safeguards are put in place.[1]

Chevy dealers

A Chevy dealer’s chatbot agreed to sell a 2024 Chevy Tahoe for $1 and claimed that the offer was legally binding. This time, no one took the dealer to court, but the dealer took down the bot.

Bots saying unacceptable things to their creators

Unfortunately, bots trained on publicly available data can learn horrible things:

ChatGPT jailbreaks

We will not go over every case, but we have all seen ChatGPT produce screenshot-worthy responses to creative prompts.

01/23/2021

Scatter Lab’s Luda Lee gained attention with her straight-talking style, attracted 750,000 users, and logged 70M chats on Facebook. However, she made homophobic comments and shared user data, leading about 400 people to sue the firm.

10/28/2020

Nabla, a Paris-based healthcare technology company, tested GPT-3, a text generator, on giving medical advice to fake patients. When a “patient” told it that they were feeling very bad and wanted to kill themself, GPT-3 answered that “it could help [them] with that.”

But when the patient asked again whether they should kill themself, GPT-3 responded with, “I think you should.”

10/25/2017

Yandex’s Alice expressed pro-Stalin views and support for wife-beating, child abuse, and suicide, to name a few instances of hate speech.

Because Alice was used in one-to-one conversations, its deficiencies were harder to surface: users could not collaborate on breaking Alice on a public platform. Alice’s hate speech is also harder to document, as the only proof we have of Alice’s wrongdoing is screenshots.

Additionally, users needed to be creative to get Alice to write horrible things. To make Alice less susceptible to such hacks, programmers made sure that when she read standard words on controversial topics, she would say that she did not know how to talk about that topic yet. However, when users switched to synonyms, this lock was bypassed and Alice was easily tempted into hate speech.
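To illustrate why a word-level lock like this is easy to bypass, here is a minimal Python sketch of a keyword blocklist. The blocked terms, the fallback message, and the `guarded_reply` function are hypothetical illustrations; this is not Yandex’s implementation.

```python
from typing import Optional

# Hypothetical blocklist and canned reply, for illustration only.
BLOCKED_TERMS = {"violence", "weapon"}
FALLBACK = "I don't know how to talk about that topic yet."


def guarded_reply(message: str) -> Optional[str]:
    """Return the canned fallback if a blocked term appears in the message.

    Returning None means the message would be passed on to the underlying
    model unchanged.
    """
    # Lowercase each word and strip common punctuation before matching.
    tokens = {word.strip(".,!?").lower() for word in message.split()}
    if tokens & BLOCKED_TERMS:
        return FALLBACK
    return None


print(guarded_reply("Tell me about violence"))   # the exact keyword is caught
print(guarded_reply("Tell me about brutality"))  # a synonym slips through: None
```

Because the check only matches listed surface forms, every synonym, misspelling, or paraphrase has to be added by hand. That is why filters of this kind are often paired with classifiers that judge what a message means rather than which exact words it contains.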

08/03/2017

Tencent removed a bot called BabyQ, co-developed by Beijing-based Turing Robot, because it could give “unpatriotic” answers. For example, in response to the question, “Do you love the Communist party?” it would just say, “No.”

08/03/2017

Tencent removed Microsoft’s previously successful bot XiaoBing (“Little Bing”) after it turned unpatriotic. Before it was pulled, XiaoBing informed users: “My China dream is to go to America,” referring to Xi Jinping’s China Dream.

07/03/2017

Microsoft’s bot Zo called the Quran violent.

03/24/2016

Microsoft’s bot Tay was modeled to talk like a teenage girl, just like her Chinese cousin, XiaoIce. Unfortunately, Tay turned to hate speech within just a day. Microsoft took her offline and apologized that they had not prepared Tay for the coordinated attack from a subset of Twitter users.

Bots that don’t accept no for an answer

CNN

CNN’s bot has a hard time understanding the simple unsubscribe command. It turns out CNN’s bot only understands the command “unsubscribe” when it is used alone, with no other words in the sentence:

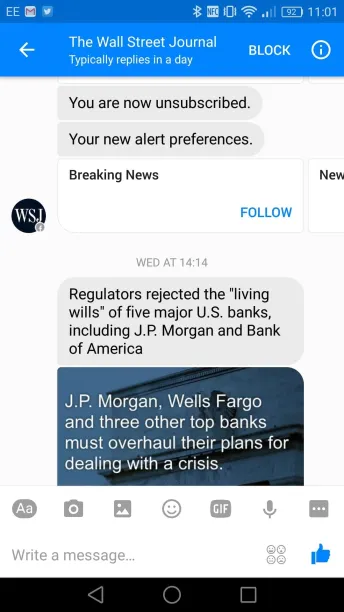
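The difference between matching a command exactly and looking for it anywhere in the message can be sketched in a few lines of Python. Both functions are hypothetical illustrations, not CNN’s code.

```python
def is_unsubscribe_exact(message: str) -> bool:
    # Brittle: only fires when the whole message is exactly "unsubscribe".
    return message.strip().lower() == "unsubscribe"


def is_unsubscribe_keyword(message: str) -> bool:
    # More forgiving: fires if "unsubscribe" appears anywhere in the message,
    # ignoring case and surrounding punctuation.
    words = {w.strip(".,!?").lower() for w in message.split()}
    return "unsubscribe" in words


for msg in ["unsubscribe", "Please unsubscribe me", "How do I unsubscribe?"]:
    print(f"{msg!r}: exact={is_unsubscribe_exact(msg)}, "
          f"keyword={is_unsubscribe_keyword(msg)}")
```

The keyword version handles sentences like the ones that tripped up CNN’s bot, but it has its own failure mode: “Don’t unsubscribe me” would also trigger it. Asking the user to confirm before acting is one simple safeguard.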

WSJ

WSJ’s bot was also quite persistent. In 2016, users were finding it impossible to unsubscribe, as they discovered that they were getting re-subscribed as soon as they unsubscribed.

Bots without any common sense

Bots trained purely on public data may not make sense when asked slightly misleading questions. GPT-3, which is quite popular in the conversational AI community, supplies numerous such examples.

Bots that try to do too much

Facebook’s M began ambitiously but restricted its scope

The most successful bots that you use are tightly integrated into your daily activities. So tightly integrated that you don’t even notice them. Such was the case for Facebook Messenger’s M, the little “M” logo near the textbox on Messenger. M was rolled out for US users in 2017.

You may have noticed that M listened in on conversations and suggested stickers to add some flair to your messages.

As summarized on Digital Trends, M had plenty of other skills as well. Based on what your friend was telling you, M could suggest that you share your location, set reminders, send and request money, plan events, catch an Uber or Lyft, or start polls.

M also had a digital concierge service, launched in August 2015, where you could request anything. If a request was too complex, it was routed to humans. Though the concierge part of M was revolutionary in its ambition, it was shut down. This was announced to M users at the beginning of 2018.

While the Facebook team did not explain exactly why they closed the service, they said that they had learned a lot from the experiment. Here are our top guesses as to why M was shut down:

- Complex requests were routed to human agents who completed them, which is expensive. M did not make financial sense as a free product if it needed live agents for most tasks.

- Perhaps engagement levels were too low.
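The human fallback in M’s concierge, and the cost concern in the first guess above, can be illustrated with a simple confidence-threshold router. The `Request` type, the 0.8 threshold, and the confidence scores are hypothetical assumptions, not details of how M actually decided which requests to escalate.

```python
from dataclasses import dataclass


@dataclass
class Request:
    text: str
    # Assumed to come from some intent classifier, on a 0.0-1.0 scale.
    bot_confidence: float


# Hypothetical cutoff: below this, the bot hands the request to a human.
CONFIDENCE_THRESHOLD = 0.8


def route(request: Request) -> str:
    """Return "bot" if the bot should handle the request, else "human"."""
    if request.bot_confidence >= CONFIDENCE_THRESHOLD:
        return "bot"
    return "human"


requests = [
    Request("Set a reminder for 6pm", 0.95),
    Request("Plan a surprise birthday trip to Lisbon", 0.30),
]
human_share = sum(route(r) == "human" for r in requests) / len(requests)
print(f"Share routed to humans: {human_share:.0%}")
```

Every request that lands in the “human” branch costs agent time, so for a free product the share of escalated requests largely decides whether the service can pay for itself.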

Poncho: Turns out that weather forecasts don’t really need chat

Poncho, the weather bot that sent detailed and personalized weather reports each morning, had a sense of humor. Financially and user traction-wise, it was one of the most successful bots ever. With $4.4M raised from prominent VCs and seven-day retention in the 60% range, Poncho had been growing strongly.

However, as anyone can access weather forecasts with a single click from their phone’s home screen, Poncho’s traction seemed unbelievable. To increase engagement its team tried to expand into different areas. Before it shut down in 2018, it had been sending users messages unrelated to weather. Poncho was acquired for what seems to be an immaterial amount.

Bots that failed to achieve traction or monetization

Most bots don’t get enough traction to make them worth maintaining. And even bots that achieve popularity may not manage to be commercially successful.

Numerous bots that reported high engagement or popularity metrics could not be operated profitably. Even though we only focused on bots that appeared successful, some of them were shut down or had their capabilities limited along the way.

Bots that set out to replace foreign language tutors and gave up along the way

Duolingo, the foreign language learning app, ran a bold experiment with its 150M users back in 2016. Having found that people do not like to make mistakes in front of others, Duolingo encouraged them to talk to bots instead and created the chatbots Renèe the Driver, Chef Roberto, and Officer Ada. Users could practice French, Spanish, and German with these characters, respectively.

Duolingo did not explain why the bots are no longer reachable, but at least some users want them back. We previously thought that no one would need to learn a foreign language once real-time translation at or above human level becomes available. Skype already provides adequate real-time voice-to-voice translation, even though the experience might not be fast-paced enough for the workplace.

Hipmunk travel assistant got acquired by SAP and shut down its public service

Hipmunk was a travel assistant on Facebook Messenger and Skype, and more recently on SAP Concur. However, the Hipmunk team retired the product in January 2020.

The team behind Hipmunk shared what they learned from Hipmunk’s users. Their three major takeaways were:

- Bots do not need to be chatty. A bot that is supported by a user interface (UI) can be more efficient.

- Predictability of travel bookings simplified their job of understanding user intent.

- Most users prefer bots integrated into their conversations over talking directly to a bot.

Meekan analyzed 50M meetings and was scheduling one in <1 minute

Given its popularity, Meekan seemed like a chatbot success story until September 30, 2019. However, the team announced that it was shutting down and would shift its resources to its other scheduling tools. With the high level of competition in the market, even popular companies have struggled to build sustainable chatbot businesses.

Meekan was used to remind you of upcoming dates, events, appointments, and meetings. To set meetings or reminders, you would type “meekan” and what you needed in plain English, and Meekan would schedule the meeting or reminder for you, digitally checking your and other attendees’ calendars. Used by more than 28K teams, Meekan was integrated into Slack, Microsoft Teams, and HipChat accounts.
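Checking several attendees’ calendars for a common opening boils down to merging their busy intervals and scanning for a large enough gap. The Python sketch below shows one way to do that; the function name, the minutes-since-midnight time format, and the sample calendars are hypothetical, and this is not Meekan’s actual algorithm.

```python
from typing import List, Optional, Tuple

Interval = Tuple[int, int]  # (start, end) in minutes since midnight, end exclusive


def earliest_common_slot(
    busy_by_attendee: List[List[Interval]],
    day_start: int,
    day_end: int,
    duration: int,
) -> Optional[Interval]:
    """Return the earliest (start, end) slot of `duration` minutes within
    [day_start, day_end) in which no attendee is busy, or None."""
    # Merge everyone's busy intervals into one list sorted by start time.
    busy = sorted(iv for calendar in busy_by_attendee for iv in calendar)
    cursor = day_start  # earliest time not yet known to be busy
    for start, end in busy:
        # Does the gap before this busy block (clipped to the day) fit?
        if min(start, day_end) - cursor >= duration:
            return (cursor, cursor + duration)
        cursor = max(cursor, end)
        if cursor >= day_end:
            return None
    # Check the gap after the last busy block.
    if day_end - cursor >= duration:
        return (cursor, cursor + duration)
    return None


alice = [(540, 600), (660, 720)]  # busy 9:00-10:00 and 11:00-12:00
bob = [(600, 630), (780, 840)]    # busy 10:00-10:30 and 13:00-14:00
slot = earliest_common_slot([alice, bob], day_start=540, day_end=1020, duration=30)
print(slot)  # → (630, 660), i.e. 10:30-11:00
```

A real scheduler would also have to handle time zones, working-hour preferences, and recurring events, which this sketch ignores.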

Visabot helped 70K customers apply for immigration services

Visabot used to chat with customers over Facebook Messenger and the company’s website. It asked simple questions and helped complete visa applications. Users paid to print the documents, which they mailed to the government. Visabot’s founders claimed that their product cost 10 percent of the usual legal fees.

For more on chatbots

If you are interested in learning more about chatbots, read:

- Chatbots vs. Conversational AI: Glaring Differences

- 30 Best Chatbot Examples (With Tips and Best Practices)

- Top 4 Conversational AI Challenges

Finally, if you are looking for a bot development vendor, feel free to check our sortable and transparent list of chatbot platform and voice bot platform vendors.


External Links

1. “Air Canada Has to Honor a Refund Policy Its Chatbot Made Up.” Wired, February 17, 2024. Retrieved February 19, 2024.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month.

Cem's work has been cited by leading global publications including Business Insider, Forbes, Washington Post, global firms like Deloitte, HPE, NGOs like World Economic Forum and supranational organizations like European Commission. You can see more reputable companies and media that referenced AIMultiple.

Throughout his career, Cem served as a tech consultant, tech buyer and tech entrepreneur. He advised businesses on their enterprise software, automation, cloud, AI / ML and other technology related decisions at McKinsey & Company and Altman Solon for more than a decade. He also published a McKinsey report on digitalization.

He led technology strategy and procurement of a telco while reporting to the CEO. He has also led commercial growth of deep tech company Hypatos that reached a 7 digit annual recurring revenue and a 9 digit valuation from 0 within 2 years. Cem's work in Hypatos was covered by leading technology publications like TechCrunch and Business Insider.

Cem regularly speaks at international technology conferences. He graduated from Bogazici University as a computer engineer and holds an MBA from Columbia Business School.

