Top 9 Dilemmas of AI Ethics in 2024 & How to Navigate Them

Though artificial intelligence is changing how businesses work, there are concerns about how it may influence our lives. This is not just an academic or societal concern but also a reputational risk: no company wants to be marred by the kind of data or AI ethics scandals that have affected companies like Amazon. For example, Amazon faced significant backlash over the sale of its Rekognition technology to law enforcement. It then decided to stop providing this technology to law enforcement for a year, expecting a proper legal framework to be in place by then.

This article provides insights into the ethical issues that arise with the use of AI, examples of AI misuse, and best practices for building responsible AI.

What are the ethical dilemmas of artificial intelligence?

Automated decisions / AI bias

AI algorithms and training data may contain biases, just as humans do, since they are also generated by humans. These biases prevent AI systems from making fair decisions. We encounter biases in AI systems for two reasons:

- Developers may program biased AI systems without even noticing

- Historical data that will train AI algorithms may not be enough to represent the whole population fairly.

Biased AI algorithms may lead to discrimination against minority groups. For instance, Amazon shut down its AI recruiting tool after using it for one year.1 Developers at Amazon stated that the tool was penalizing women. About 60% of the candidates the AI tool chose were male, a result of patterns in Amazon's historical recruitment data.

To build ethical and responsible AI, eliminating biases in AI systems is necessary. Yet only 47% of organizations test for bias in data, models, and human use of algorithms.2
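One common first check when testing a model for bias is to compare selection rates across groups. The sketch below is a minimal illustration, not a full bias audit: the screening outcomes are made-up numbers (not Amazon's data), the function names are hypothetical, and the 0.8 cutoff is the "four-fifths rule" from US employment-selection guidance, used here only as an example threshold.

```python
from collections import Counter

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs; returns the selection rate per group."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

# Hypothetical screening outcomes: 100 applicants per group.
decisions = (
    [("male", True)] * 60 + [("male", False)] * 40
    + [("female", True)] * 30 + [("female", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'male': 0.6, 'female': 0.3}
print(round(ratio, 2))  # 0.5

# Example threshold only: the four-fifths rule flags ratios below 0.8.
print("potential adverse impact" if ratio < 0.8 else "within four-fifths threshold")
```

A ratio well below 1.0 does not prove discrimination on its own, but it signals that a model's outcomes deserve a closer look before deployment.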

Eliminating all biases from AI systems is nearly impossible, given the many existing human biases and the ongoing discovery of new ones, but minimizing them is a realistic goal for businesses.

If you want to learn more, feel free to check our comprehensive guide on AI biases and how to minimize them using best practices and tools. Also, a data-centric approach to AI development can help address bias in AI systems.

Autonomous things

Autonomous Things (AuT) are devices and machines that perform specific tasks autonomously, without human interaction. These machines include self-driving cars, drones, and robots. Since robot ethics is a broad topic, we focus on the ethical issues that arise from the use of self-driving vehicles and drones.

Self-driving cars

The autonomous vehicles market was valued at $54 billion in 2019 and is projected to reach $557 billion by 2026.3 However, autonomous vehicles raise various ethical concerns. People and governments still question who is liable and accountable when an autonomous vehicle causes harm.

For example, in 2018, an Uber self-driving car hit a pedestrian who later died in the hospital.4 The accident was recorded as the first pedestrian death involving a self-driving car. After investigations by the Tempe, Arizona police and the US National Transportation Safety Board (NTSB), prosecutors decided that the company was not criminally liable for the pedestrian's death. This was because the safety driver had been distracted by her cell phone, and police reports labeled the accident "completely avoidable."

Lethal Autonomous Weapons (LAWs)

LAWs are one of the weapons in the artificial intelligence arms race. LAWs independently identify and engage targets based on programmed constraints and descriptions. The ethics of using weaponized AI in the military has been widely debated. For example, in 2018, the United Nations convened to discuss the issue. Countries that favor LAWs, including South Korea, Russia, and the United States, have been especially vocal.

Opposition to LAWs is widely shared by non-governmental groups. For instance, a coalition called the Campaign to Stop Killer Robots wrote a letter warning about the threat of an artificial intelligence arms race. Prominent figures such as Stephen Hawking, Elon Musk, Steve Wozniak, Noam Chomsky, Jaan Tallinn, and Demis Hassabis also signed the letter.

Unemployment and income inequality due to automation

This is currently the greatest fear about AI. According to a CNBC survey, 27% of US citizens believe that AI will eliminate their jobs within five years.5 The share rises to 37% among citizens aged 18 to 24.

Though these numbers may not look large for "the greatest AI fear", keep in mind that they only cover the next five years.

According to McKinsey estimates, intelligent agents and robots could replace as much as 30% of the world's current human labor by 2030. Depending on the adoption scenario, automation could displace between 400 and 800 million jobs, requiring as many as 375 million people to switch job categories entirely.

Comparing the public's five-year expectations with McKinsey's roughly ten-year forecast shows that people expect job losses to arrive faster than industry experts estimate. However, both point to a significant share of the workforce being displaced by advances in AI.

Another concern about the impact of AI-driven automation is rising income inequality. A study found that 50% to 70% of the changes in the US wage structure since 1980 can be attributed to relative wage declines among workers specialized in routine tasks in industries undergoing rapid automation.

Misuses of AI

Surveillance practices limiting privacy

"Big Brother is watching you." This is a quote from George Orwell's dystopian novel 1984. Though it was written as fiction, its vision may be becoming reality as governments deploy AI for mass surveillance. Integrating facial recognition technology into surveillance systems raises concerns about privacy rights.

According to the AI Global Surveillance (AIGS) Index, which covers 176 countries, at least 75 of them are using AI surveillance systems, and liberal democracies are major users of AI surveillance.6 The same study shows that 51% of advanced democracies deploy AI surveillance systems, compared to 37% of closed autocratic states. However, this gap is likely due to the difference in wealth between these two groups of countries.

From an ethical perspective, the important question is whether governments are abusing the technology or using it lawfully. “Orwellian” surveillance methods are against human rights.

Some tech giants have also raised ethical concerns about AI-powered surveillance. For example, Microsoft President Brad Smith published a blog post calling for government regulation of facial recognition.7 IBM also stopped offering facial recognition technology, citing its potential for misuse in mass surveillance and racial profiling, which violate fundamental human rights.8

Manipulation of human judgment

AI-powered analytics can provide actionable insights into human behavior, but using analytics to manipulate human decisions is ethically wrong. The best-known example of analytics misuse is the Facebook and Cambridge Analytica data scandal.9

Cambridge Analytica sold American voters' data harvested from Facebook to political campaigns and provided assistance and analytics to the 2016 presidential campaigns of Ted Cruz and Donald Trump. The data breach was disclosed in 2018, and in 2019 the Federal Trade Commission fined Facebook $5 billion for its privacy violations.10

Proliferation of deepfakes

Deepfakes are synthetically generated images or videos in which a person in an existing image or video is replaced with someone else's likeness.

About 96% of deepfakes are pornographic videos, which have drawn over 134 million views on the top four deepfake pornographic websites. However, society's greater concern about deepfakes is how they can be used to misrepresent political leaders' speeches.11

Creating false narratives with deepfakes can erode people's trust in the media, which is already at an all-time low.12 This mistrust is dangerous because mass media remains the primary channel governments use to inform people about emergencies (e.g., a pandemic).

Artificial general intelligence (AGI) / Singularity

A machine capable of human-level understanding could be a threat to humanity and such research may need to be regulated. Although most AI experts don’t expect a singularity (AGI) any time soon (before 2060), as AI capabilities increase, this is an important topic from an ethical perspective.

When people talk about AI, they mostly mean narrow AI systems, also referred to as weak AI, which are designed to handle a single task or a limited set of tasks. AGI, on the other hand, is the form of artificial intelligence seen in science fiction books and movies: machines that can understand or learn any intellectual task that a human being can.

Robot ethics

Robot ethics, also referred to as roboethics, covers how humans design, build, use, and treat robots. Debates on roboethics date back to the early 1940s, and the arguments mostly center on whether robots have rights like humans and animals do. These questions have become more important as AI capabilities have grown, and institutes such as AI Now explore them with academic rigor.

Author Isaac Asimov was the first to write about laws for robots, in his 1942 short story "Runaround", where he introduced the Three Laws of Robotics13:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Generative AI-specific ethical concerns

The ethics of generative AI is a relatively new area that has gained attention with the release of various generative models, particularly OpenAI's ChatGPT. ChatGPT quickly gained popularity due to its ability to create authentic-sounding content on a wide range of subjects, but it also raised genuine ethical concerns.

Truthfulness & Accuracy

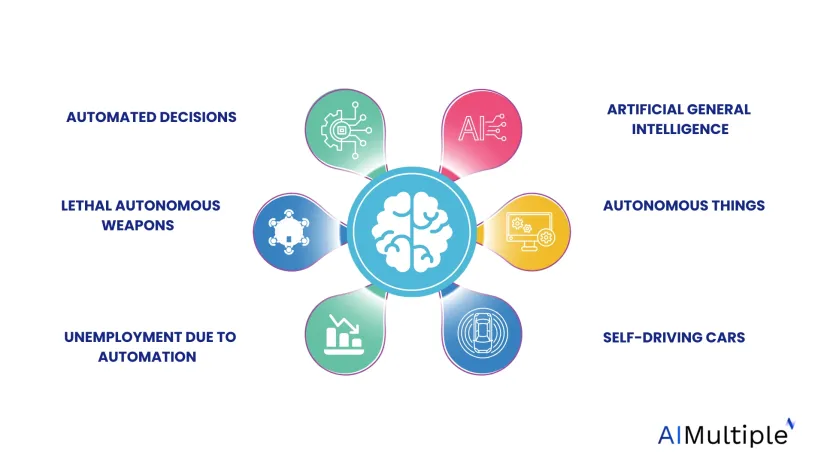

Generative AI uses machine learning techniques to generate new content, which may contain inaccuracies (see Figure 1). Additionally, pre-trained language models like ChatGPT cannot, on their own, update their knowledge with information that emerges after training.

Language models have recently become more persuasive and eloquent. However, this proficiency also makes it easier for them to spread misinformation or produce false statements.

Figure 1. On average, most generative models are truthful only 25% of the time, according to the TruthfulQA benchmark test.

Source14: Stanford University Artificial Intelligence Index Report 2022

Copyright ambiguities

Another ethical consideration with generative AI is the uncertainty surrounding the authorship and copyright of content created by the AI. This raises questions about who holds the rights to such works and how they can be utilized. The issue of copyright centers around three main questions:

- Should works created by AI be eligible for copyright protection?

- Who would have the ownership rights over the created content?

- Can copyrighted data be used for training purposes?

For a detailed discussion on this concern, you can check our article on the copyright concerns around generative AI.

Misuse of generative AI

- Education: Generative AI has the potential to be misused by creating false or inaccurate information that is presented as true. This could result in students receiving incorrect information or being misled. Also, students can use generative AI tools like ChatGPT to prepare their homework on a wide range of subjects.

How to navigate these dilemmas?

These are hard questions, and innovative, sometimes controversial solutions such as universal basic income may be necessary to address them. Numerous initiatives and organizations aim to minimize the potential negative impact of AI. For instance, the Institute for Ethics in Artificial Intelligence (IEAI) at the Technical University of Munich conducts AI research across various domains such as mobility, employment, healthcare, and sustainability.

Some best practices to navigate these ethical dilemmas are:

Transparency

AI developers have an ethical obligation to be transparent in a structured, accessible way, since AI technology can break laws and negatively affect people's lives. Knowledge sharing can help make AI accessible and transparent. Some initiatives are:

- AI research, even when it takes place in private, for-profit companies, tends to be shared publicly.

- OpenAI was founded as a non-profit AI research organization by Elon Musk, Sam Altman, and others to develop open-source AI beneficial to humanity. However, by exclusively licensing one of its models (GPT-3) to Microsoft rather than releasing the source code, OpenAI has reduced its level of transparency.

- Google developed TensorFlow, a widely used open-source machine learning library, to facilitate the adoption of AI.

- AI researchers Ben Goertzel and David Hart created OpenCog as an open-source framework for AI development.

- Google and other tech giants run AI-specific blogs that let them share their AI knowledge with the world.

Explainability

AI developers and businesses need to explain how their algorithms arrive at their predictions to address the ethical issues that arise from inaccurate predictions. Various technical approaches can show how these algorithms reach their conclusions and which factors affect each decision. We've covered explainable AI before; feel free to check it out.
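Permutation feature importance is one widely used model-agnostic explanation technique: shuffle one input feature and measure how much the model's accuracy drops. The sketch below is a minimal illustration under stated assumptions: the "model" is a hypothetical toy rule, the data is synthetic, and the function name is our own. Libraries such as scikit-learn offer a production implementation (`sklearn.inspection.permutation_importance`).

```python
import random

def permutation_importance(predict, rows, labels, n_features, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is shuffled (model-agnostic)."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only column j permuted; other features stay intact.
            permuted = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(permuted))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical toy model: approves when income (feature 0) exceeds 50 and ignores feature 1.
def predict(row):
    income, _ = row
    return income > 50

data_rng = random.Random(42)
rows = [(data_rng.uniform(0, 100), data_rng.randint(0, 9)) for _ in range(200)]
# Simplification: labels are the model's own outputs, so baseline accuracy is 1.0.
labels = [predict(r) for r in rows]

print(permutation_importance(predict, rows, labels, n_features=2))
```

In this setup the ignored feature scores exactly 0, while income scores roughly 0.5, which tells a reviewer which input actually drives the model's decisions.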

Inclusiveness

AI research tends to be done by male researchers in wealthy countries, which contributes to biases in AI models. Increasing the diversity of the AI community is key to improving model quality and reducing bias. There are numerous initiatives to increase diversity within the community, such as one supported by Harvard, but their impact has so far been limited.

A more diverse community can also help address problems such as unemployment and discrimination caused by automated decision-making systems.

Alignment

Numerous countries, companies, and universities are building AI systems, yet in most areas there is no legal framework adapted to recent developments in AI. Modernizing legal frameworks at both the national and international levels (e.g., the UN) will clarify the path to ethical AI development. Pioneering companies should spearhead these efforts to create clarity for their industries.

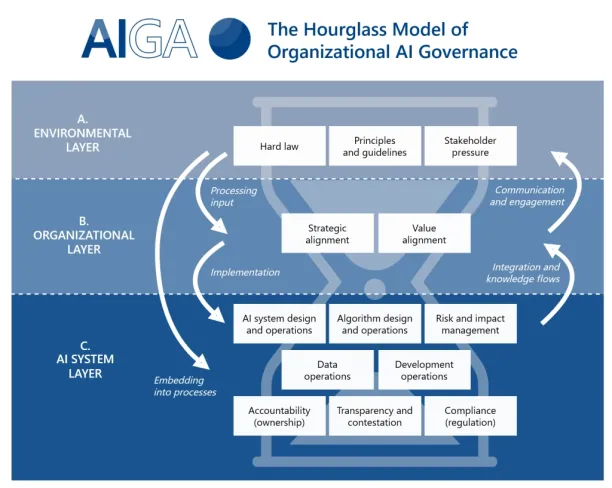

AI ethics frameworks

Academics are also working on frameworks for achieving ethical AI in enterprises. One example is the hourglass model, which outlines how organizations, AI systems, and their environment interact.15 It also comes with an extensive task list for those looking for a structured approach to AI ethics.16

Figure: AI ethics frameworks


External Links

- 1. “Amazon scrapped ‘sexist AI’ tool.” BBC, 10 October 2018. Accessed 2 January 2024.

- 2. “Responsible AI Toolkit.” PwC. Accessed 2 January 2024.

- 3. “Autonomous Vehicle Market Size Worth $448.6 Billion by 2035.” Allied Market Research. Accessed 2 January 2024.

- 4. “Uber’s self-driving operator charged over fatal crash.” BBC, 16 September 2020. Accessed 2 January 2024.

- 5. “This is the industry that has some of the happiest workers in America.” CNBC, 4 November 2019. Accessed 2 January 2024.

- 6. “The Global Expansion of AI Surveillance.” Carnegie Endowment for International Peace, 17 September 2019. Accessed 2 January 2024.

- 7. “Facial recognition technology: The need for public regulation and corporate responsibility – Microsoft On the Issues.” Microsoft Blog, 13 July 2018. Accessed 2 January 2024.

- 8. “IBM will no longer offer, develop, or research facial recognition technology.” The Verge, 8 June 2020. Accessed 2 January 2024.

- 9. “Facebook–Cambridge Analytica data scandal.” Wikipedia. Accessed 2 January 2024.

- 10. “FTC Imposes $5 Billion Penalty and Sweeping New Privacy Restrictions on Facebook.” Federal Trade Commission, 24 July 2019. Accessed 2 January 2024.

- 11. “Debating the ethics of deepfakes.” ORF, 27 August 2020. Accessed 2 January 2024.

- 12. “News Media Credibility Rating Falls to a New Low.” Morning Consult Pro, 22 April 2020. Accessed 2 January 2024.

- 13. “Three Laws of Robotics.” Wikipedia. Accessed 2 January 2024.

- 14. “Artificial Intelligence Index Report 2022.” AI Index. Accessed 22 February 2023.

- 15. “The hourglass model.” Accessed 24 June 2023.

- 16. “List of AI Governance Tasks.” Accessed 24 June 2023.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month.

Cem's work has been cited by leading global publications including Business Insider, Forbes, Washington Post, global firms like Deloitte, HPE, NGOs like World Economic Forum and supranational organizations like European Commission.

Throughout his career, Cem served as a tech consultant, tech buyer and tech entrepreneur. He advised businesses on their enterprise software, automation, cloud, AI / ML and other technology related decisions at McKinsey & Company and Altman Solon for more than a decade. He also published a McKinsey report on digitalization.

He led technology strategy and procurement of a telco while reporting to the CEO. He has also led commercial growth of deep tech company Hypatos that reached a seven-digit annual recurring revenue and a nine-digit valuation from 0 within 2 years. Cem's work in Hypatos was covered by leading technology publications like TechCrunch and Business Insider.

Cem regularly speaks at international technology conferences. He graduated from Bogazici University as a computer engineer and holds an MBA from Columbia Business School.
